Immediate response becomes the implicit expectation, with barely any barriers or restrictions in place

Why Slack is a great distraction:

In the absence of barriers, convenience always wins.

In our experience, the best way to prevent a useless meeting is to write up our goals and thoughts first. Despite working in the same office, our team at Nuclino has converted nearly all of our meetings into asynchronously written reports.

Weekly status report (example):

Data scientists and data engineers have built-in job security relative to other positions as businesses transition their operations to rely more heavily on data, data science, and AI. That’s a long-term trend that is not likely to change due to COVID-19, although momentum had started to slow in 2019 as venture capital investments ebbed.

According to a Dice Tech Jobs report released in February, demand for data engineers was up 50% and demand for data scientists was up 32% in 2019 compared to the prior year.

Demand for data scientists/engineers in 2019 vs 2018

competency doesn’t factor as much as likability in most corporate promotions, especially when the ship is smooth sailing.

Another truth of the corporate world

Find a results-oriented job if you’re fiercely independent and opinionated. Climb the ladder in a big corporation if you’re highly diplomatic or a crowd-pleaser.

Advice for two different working profiles

The two things I really like about working for smaller places or starting a company are that you get very direct access to users and customers and their problems, which means you can actually have empathy for what's going on with them, and then you can directly solve it. That cycle is so powerful; the sooner you learn how to make that cycle happen in your career, the better off you'll be. If you can make software and make software for other people, the outcome truly is hundreds of millions of dollars worth of value if you get it right. That's what I'm here to encourage you to do. I'm not really saying that you shouldn't go work at a big tech company. I am saying you should probably leave before it makes you soft.

What are the benefits of working at smaller companies/startups over the tech giants?

Afternoons are spent reading, researching, and taking online classes. This has really helped me avoid burnout. I go into the weekend less exhausted and more motivated to return on Monday and implement new stuff. It has also helped generate some inspiration for weekend/personal projects.

Learning at work as a solution to burnout and a source of inspiration for personal projects

Second, in my experience working with ex-FAANG engineers: while they all tend to be very smart, they tend to be borderline junior engineers in the real world. They simply don't know how to build or operate something without the luxury of the mature tooling that exists at FAANG. You may be in for a reality shock when you leave the FAANG bubble.

Working with engineers out of FAANG can be surprising

We want to learn, but we worry that we might not like what we learn. Or that learning will cost us too much. Or that we will have to give up cherished ideas.

I believe it is normal to worry about applying new domain knowledge

When People Work Together

How to lay off your lovely co-workers

Choose health insurance (Krankenkasse) - in Switzerland you have to pay your health insurance separately (it’s not deducted from your salary). You can use the Comparis website to compare the options. You have 3 months to choose both the company and your franchise.

Choosing health insurance in Switzerland

Other important things: if you plan to use public transport, we recommend buying the Half Fare card. It gives you a 50% discount on most public transport in Switzerland (it costs 185 CHF per year).

Recommendation to buy a Half Fare Card for a public transport discount

There are also some general expat groups like Zurich Together

Zurich Together <--- expat group for Zurich

We think the async culture is one of the core reasons why most of the people we've hired at Doist in the past 5 years have stayed with us. Our employee retention is 90%+, much higher than the overall tech industry. For example, even a company like Google (with its legendary campuses full of perks, from free meals to free haircuts) has a median tenure of just 1.1 years. Freedom to work from anywhere at any time beats fun vanity perks any day, and it costs our company $0 to provide.

Employee retention rate at Doist vs Google

Working at Facebook: excellent knowledge of JS and React, managing an OSS project on GitHub, running a community, writing documentation and blog posts.

Training: good knowledge of JS and React, creating training courses (structure, exercises, etc.), teaching and sharing knowledge with ease, marketing, sales.

Startups: good knowledge of JS and React, teamwork, talking with customers, business analysis, shipping an MVP quickly, working under stress and across odd time zones.

Examples of restructuring tasks into more precise actions:

Some of the people in the company are your friends in the current context. It’s like your dorm in college.

"Company is like a college dorm"... interesting comparison

It’s also okay to take risks. Staying at a company that’s slowly dying has its costs too. Stick around too long and you’ll lose your belief that you can build, that change is possible. Try not to learn the wrong habits.

Cons of staying too long in the same company

Defining what "time well spent" means to you and making space for these moments is one of the greatest gifts you can give your future self.

Think carefully about what "time well spent" means to you

Research shows that humans tend to do whatever it takes to keep busy, even if the activity feels meaningless to them. Dr Brené Brown from the University of Houston describes being “crazy busy” as a numbing strategy we use to avoid facing the truth of our lives.

People simply prefer to be busy

A few takeaways

Summarising the article:

Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true.

There is increasing concern that most current published research findings are false.

The probability that the research is true may depend on:

Research finding is less likely to be true when:

Golden rule: if someone calls saying they're from your bank, just hang up and call them back, ideally using a phone number that came from the bank's Web site or from the back of your payment card.

Golden rule of talking to your bank

“When the representative finally answered my call, I asked them to confirm that I was on the phone with them on the other line in the call they initiated toward me, and so the rep somehow checked and saw that there was another active call with Mitch,” he said. “But as it turned out, that other call was the attackers also talking to my bank pretending to be me.”

Phishing situation scenario:

It is difficult to choose a typical reading speed. Research has been conducted on various groups of people to get typical rates, and what you regularly see quoted is: 100 to 200 words per minute (wpm) for learning, 200 to 400 wpm for comprehension.

On average, people read 100 to 200 wpm when learning and 200 to 400 wpm for comprehension.
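The quoted ranges turn directly into a reading-time estimate: divide the word count by the reading speed. A minimal sketch in Python; the function name and the `mode` labels are illustrative assumptions, and the ranges are the ones quoted above rather than a universal standard.

```python
from __future__ import annotations

# Reading-speed ranges quoted above, in words per minute (wpm).
READING_SPEEDS_WPM = {
    "learning": (100, 200),
    "comprehension": (200, 400),
}


def reading_time_minutes(word_count: int, mode: str = "comprehension") -> tuple[float, float]:
    """Return the (fastest, slowest) reading time in minutes for a text.

    The fastest estimate divides by the upper wpm bound, the slowest by the lower one.
    """
    low_wpm, high_wpm = READING_SPEEDS_WPM[mode]
    return word_count / high_wpm, word_count / low_wpm


# A 2,000-word article read for comprehension takes roughly 5 to 10 minutes.
print(reading_time_minutes(2000))  # (5.0, 10.0)
```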

DevOps tools enable DevOps in organizations

Common DevOps tools:

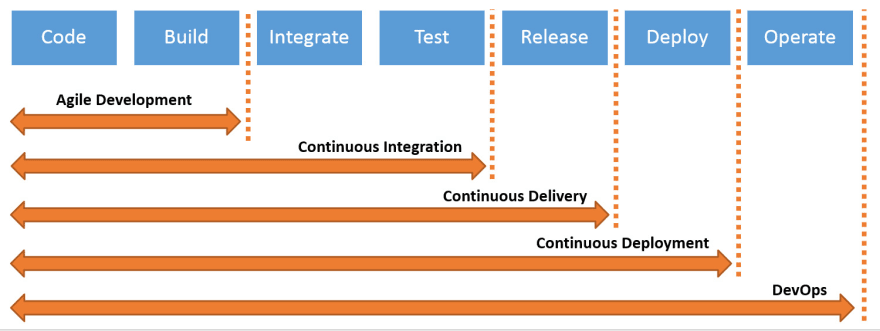

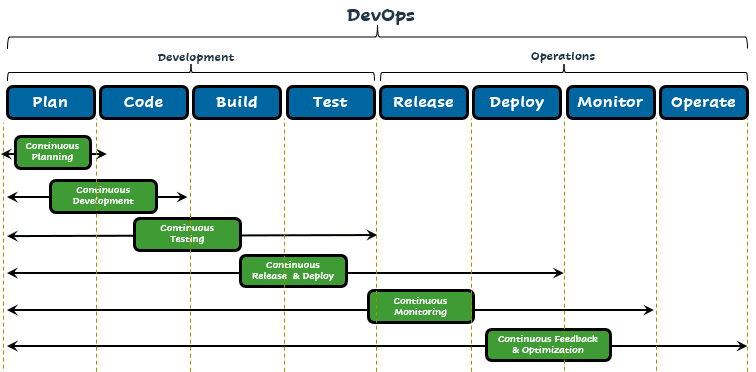

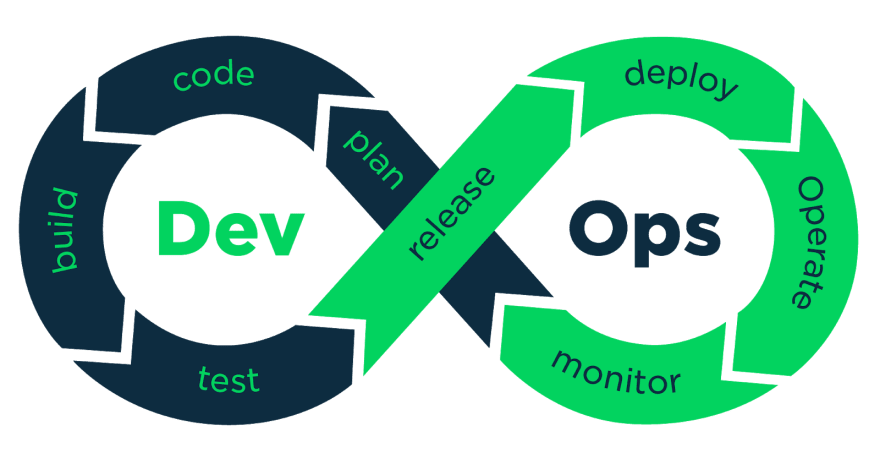

While talking about DevOps, three things are important: continuous integration, continuous deployment, and continuous delivery.

DevOps process


Basic prerequisites to learn DevOps

Basic prerequisites to learn DevOps:

DevOps benefits

DevOps benefits:

Operations in the software industry include administrative processes and support for both hardware and software for clients as well as internal to the company. Infrastructure management, quality assurance, and monitoring are the basic roles for operations.

Operations (1/2 of DevOps):

I set it up with a few clicks in Travis CI and by creating a .travis.yml file in the repo.

You can set up CI with a few clicks in Travis CI by creating a .travis.yml file in your repo:

```yaml
language: node_js
node_js: node

before_script:
  - npm install -g typescript
  - npm install codecov -g

script:
  - yarn lint
  - yarn build
  - yarn test
  - yarn build-docs

after_success:
  - codecov
```

Continuous integration makes it easy to catch cases where the code: does not work (someone didn't test it and pushed haphazardly); works only locally, because it relies on local installations; or works only locally, because not all files were committed.

CI (Continuous Integration) helps check the code when it:

In Python, when trying to do a dubious operation, you get an error pretty soon. In JavaScript… an undefined can fly through a few layers of abstraction, causing an error in a seemingly unrelated piece of code.

The loose, forgiving nature of JavaScript can hide an error for a long time. For example:

```javascript
function add(a, b) { return +(a + b) }

add(2, 2)    // 4
add('2', 2)  // 22 ('2' + 2 is the string '22'; unary + turns it into a number)
```

Both calls result in a number, but is it the same one?
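For contrast with the Python behavior mentioned in the quote, here is the same `add` written in Python. A minimal sketch: Python refuses to add a `str` and an `int`, so the mistake surfaces as a `TypeError` at the call site instead of as a silently different number.

```python
def add(a, b):
    # No implicit str/int coercion: mixing the two raises TypeError.
    return a + b


print(add(2, 2))  # 4

try:
    add("2", 2)
except TypeError as err:
    # The dubious operation fails right here, not a few layers later.
    print(f"TypeError: {err}")
```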

With Codecov it is easy to make jest & Travis CI generate one more thing:

Codecov lets you generate a coverage score for your tests:

I would use ESLint in full strength, tests for some (especially end-to-end, to make sure a commit does not make project crash), and add continuous integration.

Advantage of tests

It is fine to start adding tests gradually, by adding a few tests to things that are the most difficult (ones you need to keep fingers crossed so they work) or most critical (simple but with many other dependent components).

Start small by adding tests to the most crucial parts

I found that the overhead to use types in TypeScript is minimal (if any).

In TypeScript, unlike in JS, we need to specify the types:

I need to specify types of input and output. But then I get speedup due to autocompletion, hints, and linting if for any reason I make a mistake.

In TypeScript, you spend a bit more time in the variable definition, but then autocompletion, hints, and linting will reward you. It also boosts code readability

TSDoc is a way of writing TypeScript comments where they’re linked to a particular function, class or method (like Python docstrings).

TSDoc <--- TypeScript comment syntax. You can create documentation with TypeDoc

ESLint does automatic code linting

ESLint <--- pluggable JS linter:

For example, ESLint can flag `if (x = 5) { ... }`, where an assignment was most likely meant to be a comparison.

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.

According to Kernighan's Law, debugging is harder than writing code

Write a new test and see the result. If you want to make it REPL-like, instead of writing `console.log(x.toString())` use `expect(x.toString()).toBe('')` and you will directly get the result.

jest <--- interactive JavaScript (TypeScript and others too) testing framework. You can use it as a VS Code extension.

Basically, instead of `console.log(x.toString())`, you can use `expect(x.toString()).toBe('')`. Check this gif to understand it further.

interactive notebooks fall short when you want to write bigger, maintainable code

Survey regarding programming notebooks:

I recommend the Airbnb JavaScript Style Guide and Airbnb TypeScript.

Recommended style guides from Airbnb for:

Creating meticulous tests before exploring the data is a big mistake, and will result in a well-crafted garbage-in, garbage-out pipeline. We need an environment flexible enough to encourage experiments, especially in the initial phase.

The overzealous nature of TDD may discourage exploratory data science

Continuous Deployment is the next step. You deploy the most up to date and production ready version of your code to some environment. Ideally production if you trust your CD test suite enough.

Continuous Deployment

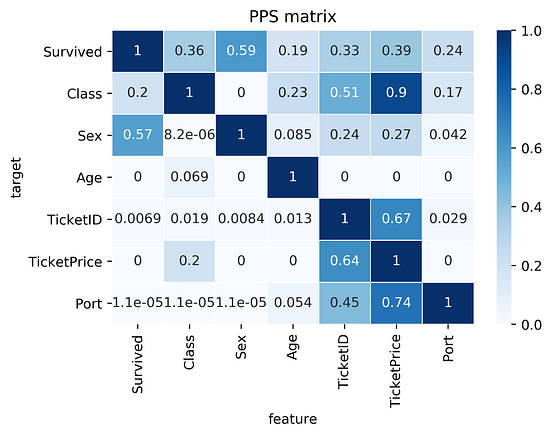

the limitations of the PPS

Limitations of the PPS:

Although the PPS has many advantages over the correlation, there is one drawback: it takes longer to calculate.

PPS is slower to calculate than correlation.

How to use the PPS in your own (Python) project

Using PPS with Python

```shell
pip install ppscore
```

```python
import ppscore as pps

# df: a pandas DataFrame with your data
pps.score(df, "feature_column", "target_column")
pps.matrix(df)
```

The PPS clearly has some advantages over correlation for finding predictive patterns in the data. However, once the patterns are found, the correlation is still a great way of communicating found linear relationships.

PPS:

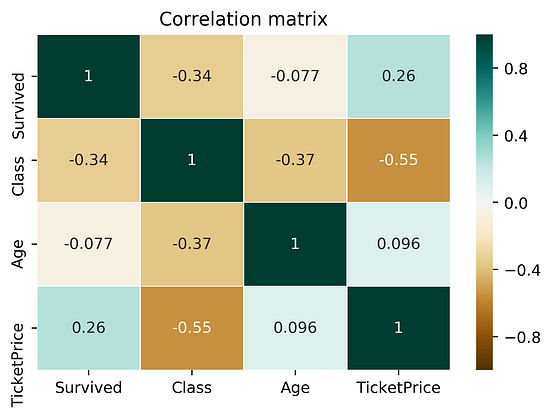

Let’s compare the correlation matrix to the PPS matrix on the Titanic dataset.

Comparing correlation matrix and the PPS matrix of the Titanic dataset:

Findings about the correlation matrix:

- TicketPrice and Class. For PPS, TicketPrice is a strong predictor of Class (0.9 PPS), but not the other way around: a ticket costing $5,000 to $10,000 most likely belongs to the highest class, but the highest class alone cannot determine the price.

Findings about the PPS matrix:

- The best predictor of Survived is the column Sex (Sex was dropped for the correlation matrix).
- TicketID uncovers a hidden pattern, as well as its connection with TicketPrice.

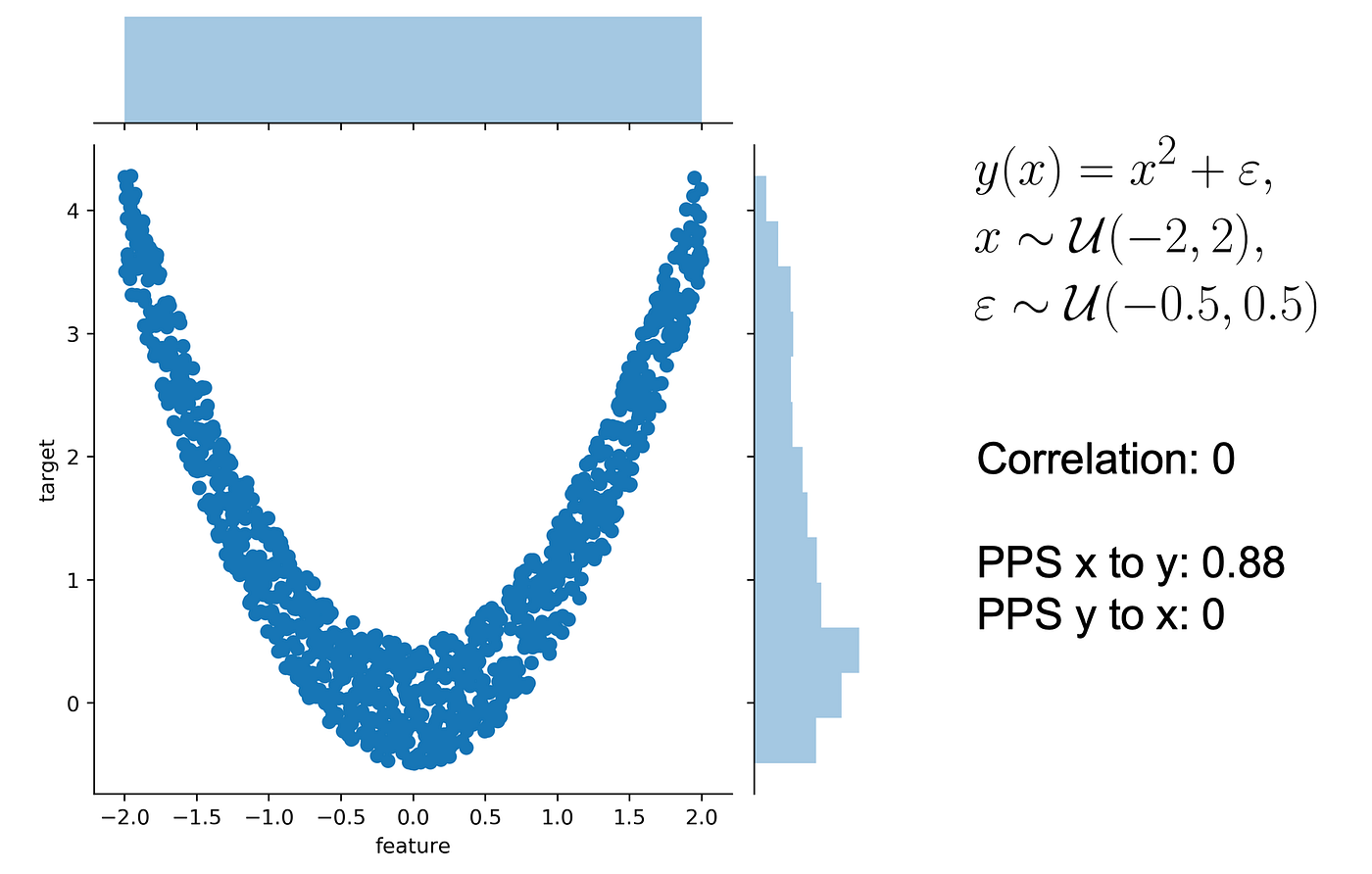

Let’s use a typical quadratic relationship: the feature x is a uniform variable ranging from -2 to 2 and the target y is the square of x plus some error.

In this scenario:
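The quadratic scenario can be reproduced in a few lines to show why correlation misses it. A minimal sketch in plain Python, assuming 10,000 samples and Gaussian noise with standard deviation 0.1 (both illustrative choices, not from the source): y is determined by x up to noise, yet the Pearson correlation comes out near 0 because the relationship is symmetric around x = 0.

```python
from __future__ import annotations

import random


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


random.seed(0)
# Feature x: uniform on [-2, 2]. Target y: x squared plus a little noise.
xs = [random.uniform(-2, 2) for _ in range(10_000)]
ys = [x * x + random.gauss(0, 0.1) for x in xs]

# Strong dependence, but the linear correlation is close to 0.
print(round(pearson(xs, ys), 3))
```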

how do you normalize a score? You define a lower and an upper limit and put the score into perspective.

Normalising a score:
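For a predictive score, a natural lower limit is the score of a naive model and the upper limit is a perfect score. A minimal sketch, assuming a linear rescaling clipped to [0, 1]; the function name is hypothetical. For a lower-is-better metric such as MAE (perfect = 0), the formula reduces to 1 - MAE_model / MAE_naive.

```python
def normalize_score(score: float, naive: float, perfect: float) -> float:
    """Map a raw score onto [0, 1] between a naive baseline and a perfect score.

    Works for higher-is-better metrics (F1: perfect = 1) and lower-is-better
    metrics (MAE: perfect = 0). Scores worse than the naive baseline clip to 0.
    """
    if perfect == naive:
        return 0.0  # the naive model is already perfect; nothing left to gain
    normalized = (score - naive) / (perfect - naive)
    return min(max(normalized, 0.0), 1.0)


# MAE of 2 against a naive MAE of 8: the model removes 75% of the naive error.
print(normalize_score(2.0, naive=8.0, perfect=0.0))  # 0.75
```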

For a classification problem, always predicting the most common class is pretty naive. For a regression problem, always predicting the median value is pretty naive.

What is a naive model:
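Both naive baselines fit in a few lines. A minimal sketch with hypothetical helper names; one reason the median suits regression is that, among constant predictions, it minimizes the mean absolute error (MAE).

```python
from __future__ import annotations

from collections import Counter
from statistics import median
from typing import Any, Callable


def naive_classifier(train_labels: list[str]) -> Callable[[Any], str]:
    """Ignore the features and always predict the most common class."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return lambda _features: most_common


def naive_regressor(train_targets: list[float]) -> Callable[[Any], float]:
    """Ignore the features and always predict the median target."""
    value = median(train_targets)
    return lambda _features: value


def mean_absolute_error(y_true: list[float], y_pred: list[float]) -> float:
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


predict_class = naive_classifier(["cat", "dog", "cat", "cat"])
print(predict_class({"weight": 4.2}))  # cat

targets = [1.0, 2.0, 3.0, 10.0]
predict_value = naive_regressor(targets)
print(mean_absolute_error(targets, [predict_value(None) for _ in targets]))  # 2.5
```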

Let’s say we have two columns and want to calculate the predictive power score of A predicting B. In this case, we treat B as our target variable and A as our (only) feature. We can now calculate a cross-validated Decision Tree and calculate a suitable evaluation metric.

If the target (B) variable is:

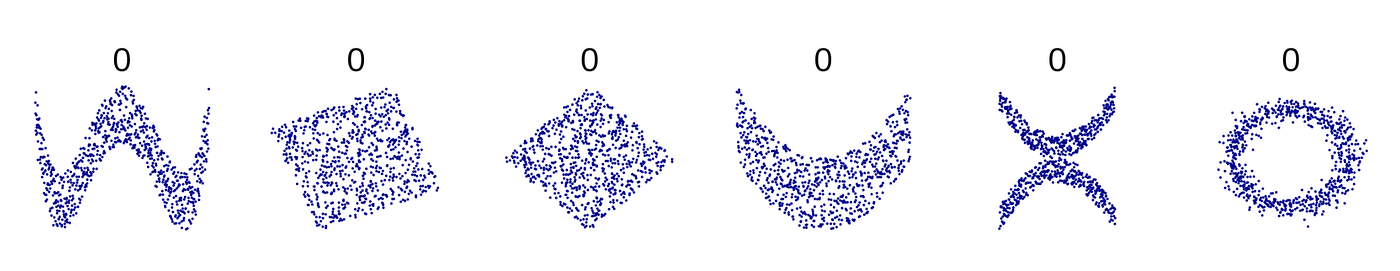

More often, relationships are asymmetric

a column with 3 unique values will never be able to perfectly predict another column with 100 unique values. But the opposite might be true
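The asymmetry is easy to check with a lookup-table predictor that, for each feature value, predicts the most frequent target value seen with it. A minimal sketch with hypothetical names; it measures in-sample accuracy only, so it illustrates the point rather than computing the cross-validated PPS.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def lookup_accuracy(features: list, targets: list) -> float:
    """In-sample accuracy of predicting each target from its feature value alone."""
    seen: dict = defaultdict(Counter)
    for f, t in zip(features, targets):
        seen[f][t] += 1
    # Best guess per feature value: the target observed most often with it.
    best = {f: counts.most_common(1)[0][0] for f, counts in seen.items()}
    hits = sum(best[f] == t for f, t in zip(features, targets))
    return hits / len(targets)


a = list(range(100))       # 100 unique values
b = [x // 34 for x in a]   # 3 unique values: 0, 1, 2

print(lookup_accuracy(a, b))  # 1.0  (A predicts B perfectly)
print(lookup_accuracy(b, a))  # 0.03 (B predicts A poorly)
```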

There are many non-linear relationships that the score simply won't detect. For example, a sine wave, a quadratic curve or a mysterious step function. The score will just be 0, saying: "Nothing interesting here". Also, correlation is only defined for numeric columns.

Correlation:

Examples:

There are many types of CRDTs

CRDTs have different types, such as Grow-only set and Last-writer-wins register. Check more of them here
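As an illustration of one of these types, a grow-only set (G-Set) merges replicas by set union. A minimal sketch, not tied to any particular CRDT library: because union is commutative, associative and idempotent, two replicas that exchange state end up identical regardless of merge order, which is the eventual-consistency property CRDTs are built around.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GSet:
    """Grow-only set: elements can be added but never removed."""

    items: set = field(default_factory=set)

    def add(self, item) -> None:
        self.items.add(item)

    def merge(self, other: GSet) -> GSet:
        # Union is commutative, associative and idempotent, so replicas
        # converge whatever the order or number of merges.
        return GSet(self.items | other.items)


replica_a, replica_b = GSet(), GSet()
replica_a.add("rectangle")   # added on one client
replica_b.add("ellipse")     # added concurrently on another

ab = replica_a.merge(replica_b)
ba = replica_b.merge(replica_a)
print(ab.items == ba.items == {"rectangle", "ellipse"})  # True
```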

Some of our main takeaways:CRDT literature can be relevant even if you're not creating a decentralized systemMultiplayer for a visual editor like ours wasn't as intimidating as we thoughtTaking time to research and prototype in the beginning really paid off

Key takeaways of developing a live editing tool

traditional approaches that informed ours — OTs and CRDTs

Traditional approaches of the multiplayer technology

CRDTs refer to a collection of different data structures commonly used in distributed systems. All CRDTs satisfy certain mathematical properties which guarantee eventual consistency. If no more updates are made, eventually everyone accessing the data structure will see the same thing. This constraint is required for correctness; we cannot allow two clients editing the same Figma document to diverge and never converge again

CRDTs (Conflict-free Replicated Data Types)

They’re a great way of editing long text documents with low memory and performance overhead, but they are very complicated and hard to implement correctly

Characteristics of OTs

Even if you have a client-server setup, CRDTs are still worth researching because they provide a well-studied, solid foundation to start with

CRDTs are worth studying for a good foundation

Figma’s multiplayer servers keep track of the latest value that any client has sent for a given property on a given object

✅ No conflict:

❎ Conflict:
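A minimal sketch of the server-side rule quoted above, assuming a single server process that applies messages in the order it receives them; `PropertyStore` and its methods are hypothetical names, not Figma's code. Changes to different properties never conflict, while concurrent changes to the same property resolve to the value the server received last, so two text edits yield "AB" or "BC", never "ABC".

```python
from __future__ import annotations


class PropertyStore:
    """Server-side state: the latest value per (object_id, property)."""

    def __init__(self) -> None:
        self.values: dict[tuple[str, str], object] = {}

    def apply(self, object_id: str, prop: str, value: object) -> None:
        # Last writer wins: the most recently received value replaces the old one.
        self.values[(object_id, prop)] = value

    def get(self, object_id: str, prop: str) -> object:
        return self.values.get((object_id, prop))


store = PropertyStore()

# No conflict: two clients change different properties of the same object.
store.apply("rect-1", "fill", "red")       # from client A
store.apply("rect-1", "width", 120)        # from client B

# Conflict: both change the same property; the later message wins.
store.apply("text-1", "characters", "AB")  # from client A
store.apply("text-1", "characters", "BC")  # from client B, received later

print(store.get("rect-1", "fill"), store.get("rect-1", "width"))  # red 120
print(store.get("text-1", "characters"))                          # BC
```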

Figma doesn’t store any properties of deleted objects on the server. That data is instead stored in the undo buffer of the client that performed the delete. If that client wants to undo the delete, then it’s also responsible for restoring all properties of the deleted objects. This helps keep long-lived documents from continuing to grow in size as they are edited

Undo option

it's important to be able to iterate quickly and experiment before committing to an approach. That's why we first created a prototype environment to test our ideas instead of working in the real codebase

First work with a prototype, then the real codebase

Designers worried that live collaborative editing would result in “hovering art directors” and “design by committee” catastrophes.

Worries of using a live collaborative editing

We had a lot of trouble until we settled on a principle to help guide us: if you undo a lot, copy something, and redo back to the present (a common operation), the document should not change. This may seem obvious but the single-player implementation of redo means “put back what I did” which may end up overwriting what other people did next if you’re not careful. This is why in Figma an undo operation modifies redo history at the time of the undo, and likewise a redo operation modifies undo history at the time of the redo

Undo/Redo working
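A minimal sketch of that principle on a shared key-value document, with hypothetical class and method names (not Figma's implementation): undo stores the value present *at undo time* in the redo history, and redo stores the value present *at redo time* in the undo history. Undoing and then redoing therefore returns the document to its present state, even if someone else edited it in between.

```python
from __future__ import annotations


class Client:
    """One editor's undo/redo history over a shared dict document."""

    def __init__(self, doc: dict) -> None:
        self.doc = doc
        self.undo_stack: list[tuple[str, object]] = []
        self.redo_stack: list[tuple[str, object]] = []

    def set(self, key: str, value: object) -> None:
        self.undo_stack.append((key, self.doc.get(key)))
        self.redo_stack.clear()
        self.doc[key] = value

    def undo(self) -> None:
        key, old = self.undo_stack.pop()
        # Record what is there *now*, not "what I did".
        self.redo_stack.append((key, self.doc.get(key)))
        self.doc[key] = old

    def redo(self) -> None:
        key, value = self.redo_stack.pop()
        self.undo_stack.append((key, self.doc.get(key)))
        self.doc[key] = value


doc = {"color": "red"}
alice, bob = Client(doc), Client(doc)

alice.set("color", "blue")
bob.set("color", "green")  # someone else edits after Alice

alice.undo()               # color -> "red"
alice.redo()               # back to the present
print(doc["color"])        # green: Bob's later edit is not overwritten
```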

operational transforms (a.k.a. OTs), the standard multiplayer algorithm popularized by apps like Google Docs. As a startup we value the ability to ship features quickly, and OTs were unnecessarily complex for our problem space

Operational Transforms (OT) are unnecessarily complex for problems unlike Google Docs

Every Figma document is a tree of objects, similar to the HTML DOM. There is a single root object that represents the entire document. Underneath the root object are page objects, and underneath each page object is a hierarchy of objects representing the contents of the page. This tree is presented in the layers panel on the left-hand side of the Figma editor.

Structure of Figma documents
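A minimal sketch of such a tree, with a hypothetical `Node` type and made-up object IDs: one root, pages beneath it, and page contents beneath each page. Walking it depth-first gives the same nesting the layers panel shows.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """One object in the document tree: the root, a page, or page content."""

    node_id: str
    kind: str
    children: list[Node] = field(default_factory=list)


def layers(node: Node, depth: int = 0) -> list[str]:
    """Depth-first walk that indents each object under its parent."""
    lines = ["  " * depth + f"{node.kind} {node.node_id}"]
    for child in node.children:
        lines.extend(layers(child, depth + 1))
    return lines


document = Node("doc", "DOCUMENT", [
    Node("page-1", "PAGE", [
        Node("frame-1", "FRAME", [Node("rect-1", "RECTANGLE"), Node("text-1", "TEXT")]),
    ]),
])

print("\n".join(layers(document)))
```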

When a document is opened, the client starts by downloading a copy of the file. From that point on, updates to that document in both directions are synced over the WebSocket connection. Figma lets you go offline for an arbitrary amount of time and continue editing. When you come back online, the client downloads a fresh copy of the document, reapplies any offline edits on top of this latest state, and then continues syncing updates over a new WebSocket connection

Offline editing isn't a problem, unlike the online one

An important consequence of this is that changes are atomic at the property value boundary. The eventually consistent value for a given property is always a value sent by one of the clients. This is why simultaneous editing of the same text value doesn’t work in Figma. If the text value is B and someone changes it to AB at the same time as someone else changes it to BC, the end result will be either AB or BC but never ABC

Consequence of approaches like last-writer-wins

We use a client/server architecture where Figma clients are web pages that talk with a cluster of servers over WebSockets. Our servers currently spin up a separate process for each multiplayer document which everyone editing that document connects to

How Figma approaches client/server architecture

CRDTs are designed for decentralized systems where there is no single central authority to decide what the final state should be. There is some unavoidable performance and memory overhead with doing this. Since Figma is centralized (our server is the central authority), we can simplify our system by removing this extra overhead and benefit from a faster and leaner implementation

CRDTs are designed for decentralized systems

Sometimes it's worth explaining some code (how much time do you spend trying to figure out a regex pattern when you see one?), but 99% of the time, comments could be avoided.

Generally try to avoid (avoid != forbid) comments.

Comments:

When we talk about abstraction levels, we can classify the code in 3 levels: high: `getAdress`; medium: `inactiveUsers = Users.findInactives`; low: `.split(" ")`.

3 abstraction levels:

- high: `getAdress`
- medium: `inactiveUsers = Users.findInactives`
- low: `.split(" ")`

Explanation:

- high: `searchForsomething()`
- medium: `account.unverifyAccount`
- low: `map`, `to_downncase`, and so on

The ideal is not to mix abstraction levels within a single function.

Try not mixing abstraction levels inside a single function
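A minimal sketch of the difference in Python, with hypothetical function names: the first version mixes a high-level intent (collect inactive users' emails) with low-level string handling; the refactored version keeps one level per function, so the top function reads like a summary.

```python
from __future__ import annotations


# Mixed levels: the intent and low-level string details share one body.
def inactive_user_emails_mixed(users: list[dict]) -> list[str]:
    result = []
    for user in users:
        if not user["active"]:
            result.append(user["email"].strip().lower())
    return result


# One level per function: the top-level function reads like a summary.
def inactive_user_emails(users: list[dict]) -> list[str]:
    return [normalize_email(user["email"]) for user in find_inactive(users)]


def find_inactive(users: list[dict]) -> list[dict]:
    return [user for user in users if not user["active"]]


def normalize_email(email: str) -> str:
    return email.strip().lower()


users = [
    {"email": " Ann@Example.com ", "active": False},
    {"email": "bob@example.com", "active": True},
]
print(inactive_user_emails(users))  # ['ann@example.com']
```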

Another maxim says that you should write the same code at most 3 times. The third time, you should consider refactoring and reducing duplication.

Avoid repeating the same code over and over

Should be nouns, and not verbs, because classes represent concrete objects

Class names = nouns

In Clean Code, Uncle Bob argues that the best order to write code is: write unit tests, create code that works, then refactor to clean the code.

Best order to write code (according to Uncle Bob):

`int d` could be `int days`

When naming things, focus on giving meaningful names that you can pronounce and search for. Also, avoid prefixes.

naming things, writing better functions, and a little about comments. Next, I intend to talk about formatting, objects and data structures, how to handle errors, boundaries (how to deal with someone else's code), unit testing, and how to organize your classes better. I know that an important topic, code smells, will be missing.

Ideas to consider while developing clean code:

Should be verbs, and not nouns, because methods represent actions that objects must do

Methods names = verbs

One way to decrease switch/if/else statements is to use polymorphism.

It's better to avoid excessive switch/if/else statements
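A minimal sketch of replacing a conditional with polymorphism, using hypothetical shape classes: with the `if`/`elif` version, every new shape means editing the function; with the class hierarchy, each shape carries its own `area`, and new shapes are added without touching existing code.

```python
from __future__ import annotations

import math
from abc import ABC, abstractmethod


# Conditional version: every new shape means editing this function.
def area_with_if(shape: dict) -> float:
    if shape["kind"] == "circle":
        return math.pi * shape["r"] ** 2
    elif shape["kind"] == "square":
        return shape["side"] ** 2
    raise ValueError(f"unknown shape: {shape['kind']}")


# Polymorphic version: each class knows how to compute its own area.
class Shape(ABC):
    @abstractmethod
    def area(self) -> float: ...


class Circle(Shape):
    def __init__(self, r: float) -> None:
        self.r = r

    def area(self) -> float:
        return math.pi * self.r ** 2


class Square(Shape):
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side ** 2


shapes: list[Shape] = [Circle(1.0), Square(2.0)]
print([round(s.area(), 2) for s in shapes])  # [3.14, 4.0]
```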

In the ideal world, they should have 1 or 2 levels of indentation

Functions in the ideal world shouldn't be long

"The Big Picture" is one of those things that people say a whole lot but can mean so many different things. Going through all of these articles, they tend to mean any (or all) of these things

Thinking about The Big Picture:

Considering that there are still a ton of COBOL jobs out there, there is no particular technology that you need to know

Right, there is no specific need to learn that one technology

read Knuth, or The Pragmatic Programmer, or Clean Code, or some other popular book

Classic programming related books

Senior developers are more cautious, thoughtful, pragmatic, practical and simple in their approaches to solving problems.

Interesting definition of senior devs

In recent years we’ve also begun to see increasing interest in exploratory testing as an important part of the agile toolbox

Waterfall software development ---> agile ---> exploratory testing

When I began coding, around 30 years ago, waterfall software development was used nearly exclusively.

Mathematica didn’t really help me build anything useful, because I couldn’t distribute my code or applications to colleagues (unless they spent thousands of dollars for a Mathematica license to use it), and I couldn’t easily create web applications for people to access from the browser. In addition, I found my Mathematica code would often end up much slower and more memory hungry than code I wrote in other languages.

Disadvantages of Mathematica:

In the 1990s, however, things started to change. Agile development became popular. People started to understand the reality that most software development is an iterative process

a methodology that combines a programming language with a documentation language, thereby making programs more robust, more portable, more easily maintained, and arguably more fun to write than programs that are written only in a high-level language. The main idea is to treat a program as a piece of literature, addressed to human beings rather than to a computer.

Literate programming as described by Donald Knuth

Development Pros Cons

Table comparing pros and cons of:

This kind of “exploring” is easiest when you develop on the prompt (or REPL), or using a notebook-oriented development system like Jupyter Notebooks

It's easier to explore the code:

but it's not efficient to develop in them

notebook contains an actual running Python interpreter instance that you’re fully in control of. So Jupyter can provide auto-completions, parameter lists, and context-sensitive documentation based on the actual state of your code

Notebook makes it easier to handle dynamic Python features

They switch to get features like good doc lookup, good syntax highlighting, integration with unit tests, and (critically!) the ability to produce final, distributable source code files, as opposed to notebooks or REPL histories

Things missed in Jupyter Notebooks:

Exploratory programming is based on the observation that most of us spend most of our time as coders exploring and experimenting

In exploratory programming, we:

Developing in the cloud

Well-paid cloud platforms:

Finding a database management system that works for you

Well-paid database technologies:

Here are a few very prominent technologies that you can look into and what impact each one might have on your salary

Other well-paid frameworks, libraries and tools:

What programming language should I learn next?

Best-paid programming languages:

Android and iOS

Pay for mobile OS development:

Frontend Devs: What should I learn after JavaScript? Explore these frameworks and libraries

Best-paid JS frameworks and libraries:

First, you’ve spread the logic across a variety of different systems, so it becomes more difficult to reason about the application as a whole. Second, more importantly, the logic has been implemented as configuration as opposed to code. The logic is constrained by the ability of the applications which have been wired together, but it’s still there.

Why the "no code" trend can be dangerous (using Zapier as an example):

the developer doesn’t need to worry about allocating memory, or the character set encoding of the string, or a host of other things.

Comparison of C (1972) and TypeScript (2012) code.

(check the code above)

“No Code” systems are extremely good for putting together proofs-of-concept which can demonstrate the value of moving forward with development.

A strong point of the "no code" trend

With someone else’s platform, you often end up needing to construct elaborate work-arounds for missing functionality, or indeed cannot implement a required feature at all.

You can quickly implement 80% of the solution in Salesforce using a mix of visual programming (basic rule setting and configuration), but later it's not so straightforward to add the missing 20%

Summary

In doing a code review, you should make sure that:

"Continuous Delivery is the ability to get changes of all types — including new features, configuration changes, bug fixes, and experiments — into production, or into the hands of users, safely and quickly in a sustainable way". -- Jez Humble and Dave Farley

Continuous Delivery

Another approach is to use a tool like H2O to export the model as a POJO in a JAR Java library, which you can then add as a dependency in your application. The benefit of this approach is that you can train the models in a language familiar to Data Scientists, such as Python or R, and export the model as a compiled binary that runs in a different target environment (JVM), which can be faster at inference time

H2O - export models trained in Python/R as a POJO in JAR

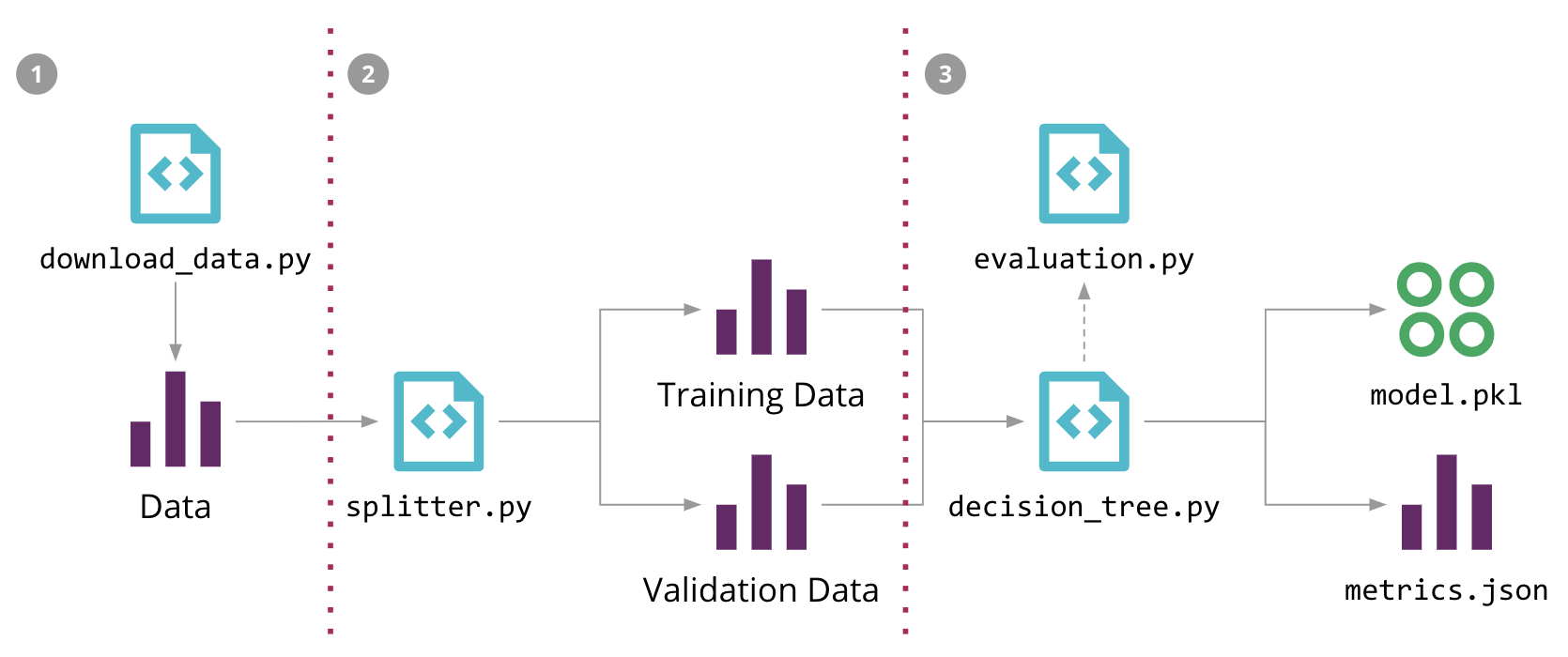

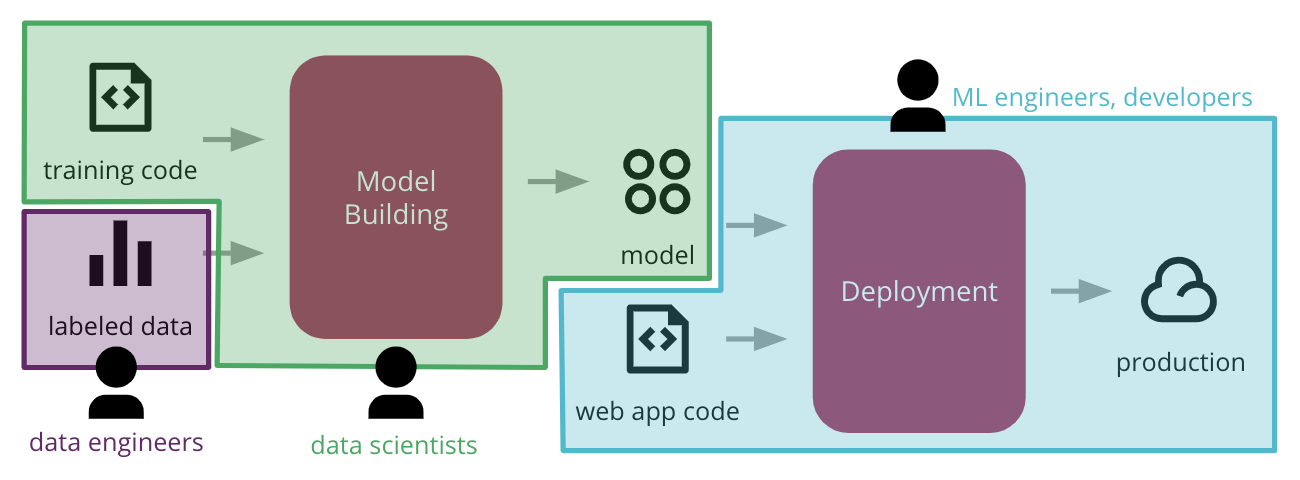

Continuous Delivery for Machine Learning (CD4ML) is a software engineering approach in which a cross-functional team produces machine learning applications based on code, data, and models in small and safe increments that can be reproduced and reliably released at any time, in short adaptation cycles.

Continuous Delivery for Machine Learning (CD4ML) (long definition)

Basic principles:

In order to formalise the model training process in code, we used an open source tool called DVC (Data Version Control). It provides similar semantics to Git, but also solves a few ML-specific problems:

DVC - transform model training process into code.

Advantages:

Machine Learning pipeline for our Sales Forecasting problem, and the 3 steps to automate it with DVC

Sales Forecasting process

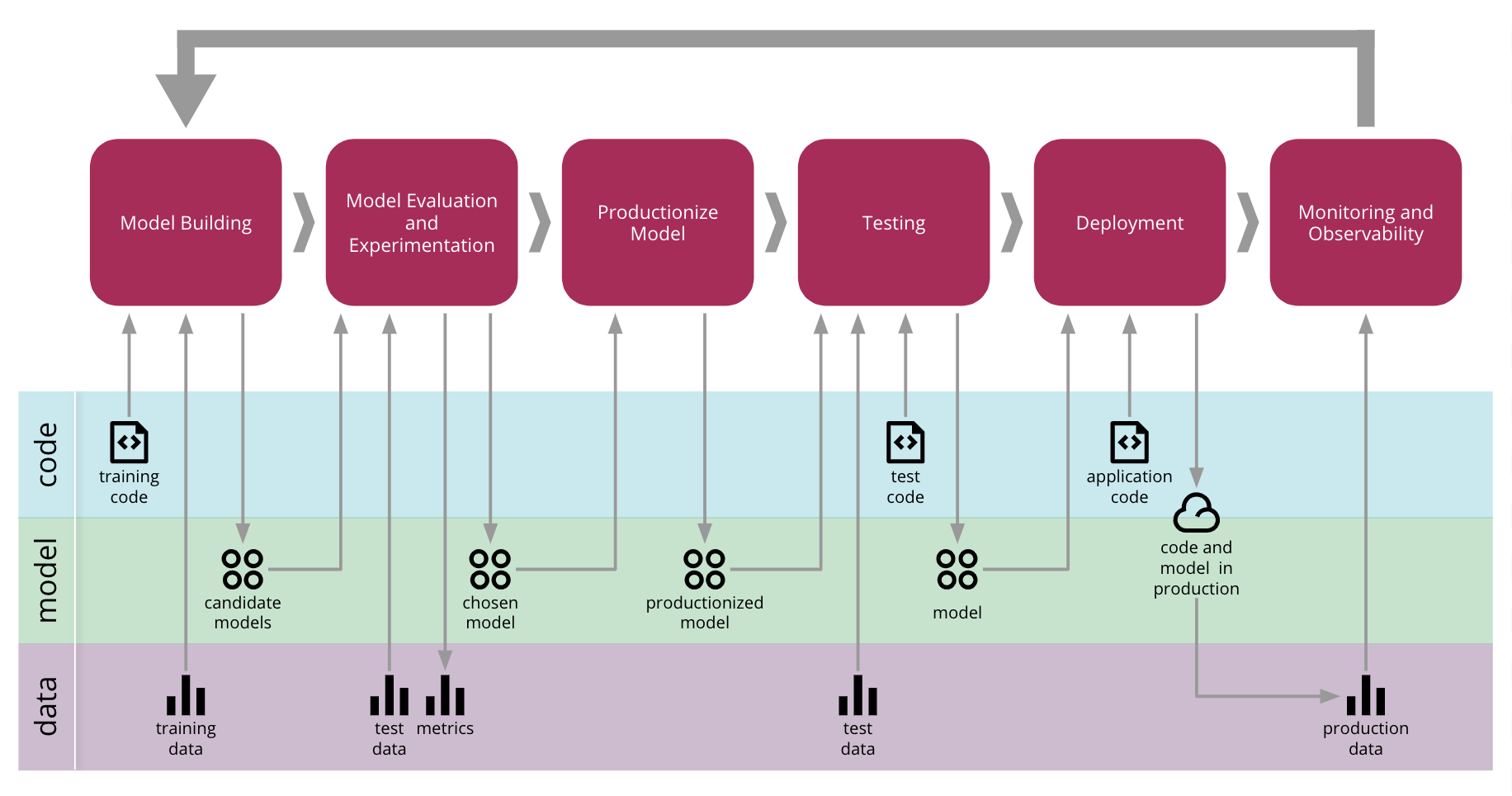

Continuous Delivery for Machine Learning end-to-end process

common functional silos in large organizations can create barriers, stifling the ability to automate the end-to-end process of deploying ML applications to production

Common ML process (leading to delays and frictions)

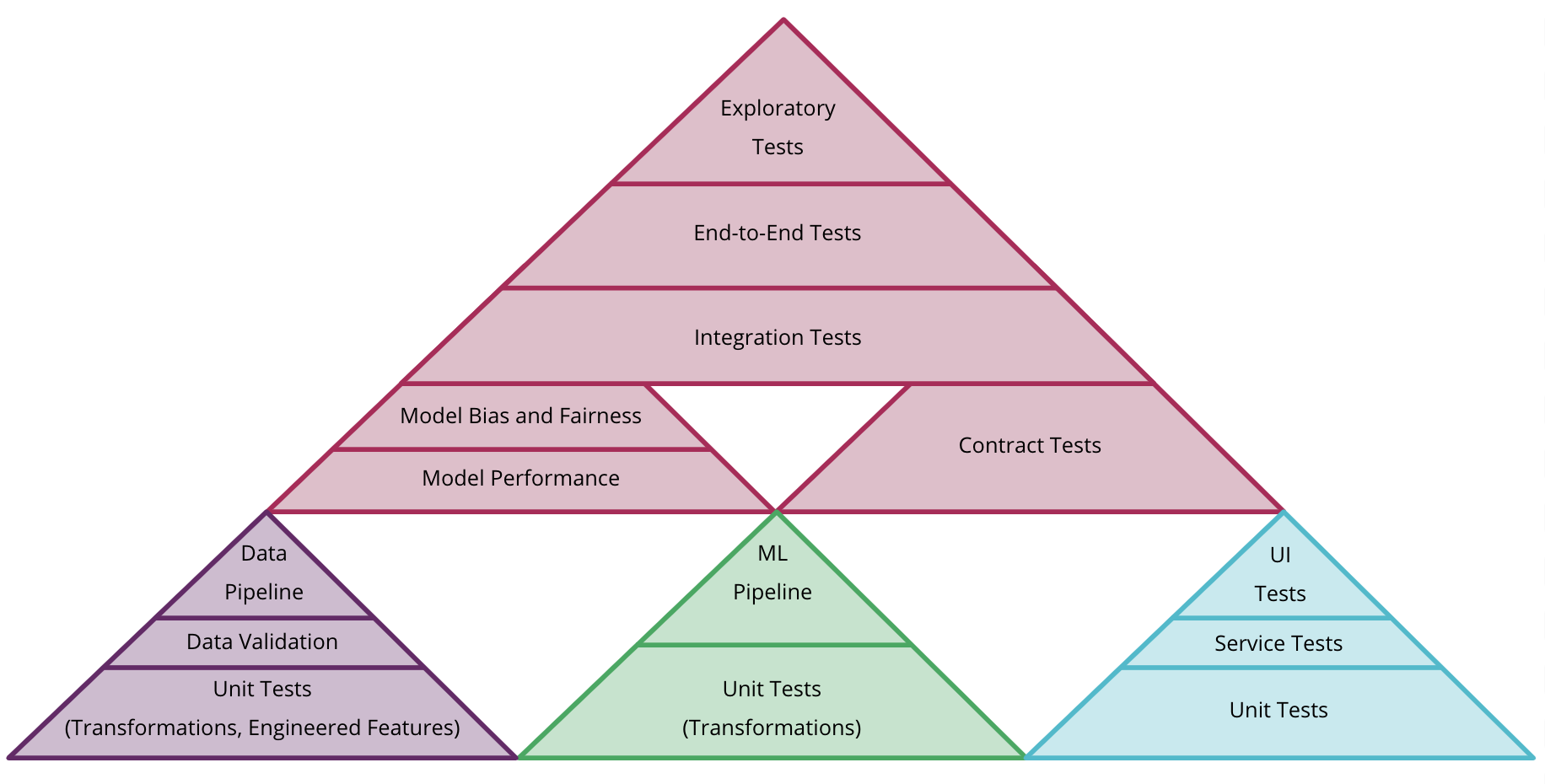

There are different types of testing that can be introduced in the ML workflow.

Automated tests for ML system:

example of how to combine different test pyramids for data, model, and code in CD4ML

Combining tests for data (purple), model (green) and code (blue)

A deployment pipeline automates the process for getting software from version control into production, including all the stages, approvals, testing, and deployment to different environments

Deployment pipeline

We chose to use GoCD as our Continuous Delivery tool, as it was built with the concept of pipelines as a first-class concern

GoCD - open source Continuous Delivery tool

Continuous Delivery for Machine Learning (CD4ML) is the discipline of bringing Continuous Delivery principles and practices to Machine Learning applications.

Continuous Delivery for Machine Learning (CD4ML)

Sometimes, the best way to learn is to mimic others. Here are some great examples of projects that use documentation well:

Examples of projects that use documentation well

(check the list below)

“Code is more often read than written.” — Guido van Rossum

Documenting code is describing its use and functionality to your users. While it may be helpful in the development process, the main intended audience is the users.

Documenting code:

Class method docstrings should contain the following:

- A brief description of what the method is and what it's used for
- Any arguments (both required and optional) that are passed, including keyword arguments
- Labels for any arguments that are considered optional or have a default value
- Any side effects that occur when executing the method
- Any exceptions that are raised
- Any restrictions on when the method can be called

A class method docstring should contain:

(check example below)
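A minimal sketch covering the items listed above, using a hypothetical `Animal` class and Google-style sections (`Args`, `Returns`, `Raises`), which is one common convention rather than one the source prescribes.

```python
from __future__ import annotations


class Animal:
    """A hypothetical class used only to illustrate a method docstring."""

    def __init__(self, name: str, sound: str = "...") -> None:
        self.name = name
        self.sound = sound
        self.times_spoken = 0

    def says(self, sound: str | None = None) -> str:
        """Return a sentence with the animal's sound.

        Args:
            sound (str, optional): The sound to use. Defaults to None,
                in which case the animal's own ``sound`` is used.

        Returns:
            str: A sentence such as "Rex says woof".

        Raises:
            ValueError: If the resulting sound is an empty string.

        Side effects:
            Increments ``times_spoken`` by one on every successful call.

        Restrictions:
            None; the method can be called any time after construction.
        """
        sound = self.sound if sound is None else sound
        if not sound:
            raise ValueError("sound must not be empty")
        self.times_spoken += 1
        return f"{self.name} says {sound}"


rex = Animal("Rex", "woof")
print(rex.says())  # Rex says woof
```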

Comments to your code should be kept brief and focused. Avoid using long comments when possible. Additionally, you should use the following four essential rules as suggested by Jeff Atwood:

Comments should be as concise as possible. Moreover, you should follow 4 rules of Jeff Atwood:

From examining the type hinting, you can immediately tell that the function expects the input name to be of a type str, or string. You can also tell that the expected output of the function will be of a type str, or string, as well.

Type hinting, introduced in Python 3.5, complements Jeff Atwood's 4 rules by letting the code comment itself, as in this example:

```python
def hello_name(name: str) -> str:
    return f"Hello {name}"
```

Docstrings can be further broken up into three major categories:

- Class docstrings: classes and class methods
- Package and module docstrings: packages, modules, and functions
- Script docstrings: scripts and functions

3 main categories of docstrings

According to PEP 8, comments (like docstrings) should be limited to a maximum line length of 72 characters.

If a comment is longer than 72 characters:

split it across multiple comment lines
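As a rough illustration of the 72-character rule, the sketch below uses the standard library's `textwrap.wrap` to split a long comment into several `#` comment lines. The helper name `wrap_comment` is hypothetical; in practice most editors and formatters can wrap comments for you.

```python
import textwrap


def wrap_comment(text: str, width: int = 72) -> list[str]:
    """Split text into '# '-prefixed comment lines no longer than width."""
    # Reserve two characters for the "# " prefix on every line.
    return ["# " + line for line in textwrap.wrap(text, width=width - 2)]


long_comment = (
    "This comment explains a fairly involved piece of logic and is far "
    "too long to fit on a single line under the PEP 8 guideline."
)
for line in wrap_comment(long_comment):
    print(line)
```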

Docstring conventions are described within PEP 257. Their purpose is to provide your users with a brief overview of the object.

Docstring conventions

All multi-line docstrings have the following parts:
- A one-line summary line
- A blank line following the summary
- Any further elaboration for the docstring
- Another blank line

Multi-line docstring example:

"""This is the summary line

This is the further elaboration of the docstring. Within this section,

you can elaborate further on details as appropriate for the situation.

Notice that the summary and the elaboration is separated by a blank new

line.

# Notice the blank line above. Code should continue on this line.

say_hello.__doc__ = "A simple function that says hello... Richie style"

Example of using __doc__:

Code (version 1):

def say_hello(name):
    print(f"Hello {name}, is it me you're looking for?")

say_hello.__doc__ = "A simple function that says hello... Richie style"

Code (alternative version):

def say_hello(name):
    """A simple function that says hello... Richie style"""
    print(f"Hello {name}, is it me you're looking for?")

Input:

>>> help(say_hello)

Output:

Help on function say_hello in module __main__:

say_hello(name)
    A simple function that says hello... Richie style

class constructor parameters should be documented within the __init__ class method docstring

__init__
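A small sketch of the convention above: the class docstring describes the class, while the constructor parameters are documented in the `__init__` docstring. The Animal class and its attributes are hypothetical.

```python
class Animal:
    """A hypothetical class representing an animal.

    The constructor parameters are documented in __init__ rather than
    in this class docstring.
    """

    def __init__(self, name: str, sound: str, num_legs: int = 4) -> None:
        """Create a new Animal.

        Args:
            name: The name of the animal.
            sound: The sound the animal makes.
            num_legs: The number of legs the animal has. Defaults to 4.
        """
        self.name = name
        self.sound = sound
        self.num_legs = num_legs

    def says(self) -> str:
        """Return a sentence describing the sound the animal makes."""
        return f"{self.name} says {self.sound}"
```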

Scripts are considered to be single file executables run from the console. Docstrings for scripts are placed at the top of the file and should be documented well enough for users to be able to have a sufficient understanding of how to use the script.

Docstrings in scripts

Documenting your code, especially in large projects, can be daunting. Thankfully, there are some tools and references out there to get you started.

You can always facilitate documentation with tools.

(check the table below)

Commenting your code serves multiple purposes

Multiple purposes of commenting:

BUG, FIXME, TODO

In general, commenting is describing your code to/for developers. The intended main audience is the maintainers and developers of the Python code. In conjunction with well-written code, comments help to guide the reader to better understand your code and its purpose and design.

Commenting code:

Along with these tools, there are some additional tutorials, videos, and articles that can be useful when you are documenting your project.

Recommended videos to start documenting

(check the list below)

If you use argparse, then you can omit parameter-specific documentation, assuming it has been correctly documented within the help parameter of the argparse.ArgumentParser.add_argument method. It is recommended to use __doc__ for the description parameter of the argparse.ArgumentParser constructor.

argparse
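A minimal sketch of a script that follows this advice: the module docstring sits at the top of the file, is reused as the `ArgumentParser` description via `__doc__`, and each argument is documented through its `help=` string. The greeting script itself and the `build_parser`/`main` names are hypothetical.

```python
"""Greet someone from the command line.

This hypothetical script prints a greeting. Run it with --help to see
the usage text that argparse generates from the documentation below.
"""
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Reuse the module docstring as the parser description.
    parser = argparse.ArgumentParser(description=__doc__)
    # Per-argument documentation lives in the help= strings.
    parser.add_argument("name", help="the name of the person to greet")
    parser.add_argument(
        "--shout", action="store_true", help="print the greeting in uppercase"
    )
    return parser


def main(argv=None) -> str:
    # argv=None makes parse_args() read sys.argv[1:].
    args = build_parser().parse_args(argv)
    greeting = f"Hello {args.name}"
    if args.shout:
        greeting = greeting.upper()
    print(greeting)
    return greeting
```

In a real script you would call `main()` under an `if __name__ == "__main__":` guard, so that `python greet.py --help` prints the docstring together with every argument's help text.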

There are specific docstring formats that can be used to give docstring parsers and users a familiar and known format.

Different docstring formats:

Daniele Procida gave a wonderful PyCon 2017 talk and subsequent blog post about documenting Python projects. He mentions that all projects should have the following four major sections to help you focus your work:

Public and open-source Python projects should have a docs folder containing:

(check the table below for a summary)

Since everything in Python is an object, you can examine the directory of the object using the dir() command.

The dir() function lists the attributes of a Python object, for example dir(str).

Among the attributes listed by dir(str), you can find the interesting __doc__ attribute.
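A quick sketch of the idea: `dir()` returns the attribute names of an object as a list, `__doc__` is one of them, and it holds the raw docstring text.

```python
# dir() returns the names of an object's attributes as a sorted list.
attributes = dir(str)
print("__doc__" in attributes)

# __doc__ holds the raw docstring of the object (here, a str method).
print(str.upper.__doc__)
```

`help(str.upper)` shows a formatted version of the same docstring, together with the method's signature.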

Documenting your Python code is all centered on docstrings. These are built-in strings that, when configured correctly, can help your users and yourself with your project’s documentation.

Docstrings - built-in strings that help with documentation

Along with docstrings, Python also has the built-in function help() that prints out the object's docstring to the console.

help() function.

Typing help(str) prints all the documentation for the str object.

The general layout of the project and its documentation should be as follows:

project_root/
│
├── project/  # Project source code
├── docs/
├── README
├── HOW_TO_CONTRIBUTE
├── CODE_OF_CONDUCT
├── examples.py

(private, shared or open sourced)

In all cases, the docstrings should use the triple-double quote (""") string format.

Always use """ (triple double quotes) for docstrings

Each format makes tradeoffs in encoding, flexibility, and expressiveness to best suit a specific use case.

Each data format brings different tradeoffs:

Computers can only natively store integers, so they need some way of representing decimal numbers. This representation comes with some degree of inaccuracy. That's why, more often than not, .1 + .2 != .3

Computers can only approximate most decimal numbers, so floating-point arithmetic is slightly inaccurate
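A short sketch of the effect in Python: 0.1 and 0.2 have no exact binary floating-point representation, so their sum is slightly off. Comparing with a tolerance (`math.isclose`) or using the standard library's `decimal.Decimal` built from strings are two common ways around it.

```python
import math
from decimal import Decimal

# 0.1 and 0.2 are stored as binary approximations, so the sum is slightly off.
print(0.1 + 0.2)          # prints 0.30000000000000004
print(0.1 + 0.2 == 0.3)   # prints False

# Compare floats with a tolerance instead of ==.
print(math.isclose(0.1 + 0.2, 0.3))  # prints True

# Decimal values built from strings represent these numbers exactly.
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))  # prints True
```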

Cross-platform development is now standard because of the wide variety of architectures, such as mobile devices, cloud servers, and embedded IoT systems. It was almost exclusively PCs 20 years ago.

A package management ecosystem is essential for programming languages now. People simply don’t want to go through the hassle of finding, downloading and installing libraries anymore. 20 years ago we used to visit web sites, download zip files, copy them to the correct locations, add them to the paths in the build configuration and pray that they worked.

How library management changed in 20 years

IDEs and programming languages are getting more and more distant from each other. 20 years ago an IDE was specifically developed for a single language, like Eclipse for Java, Visual Basic, Delphi for Pascal etc. Now, we have text editors like VS Code that can support any programming language with IDE-like features.

How IDEs "unified" in comparison to the last 20 years

Your project has no business value today unless it includes blockchain and AI, although a centralized and rule-based version would be much faster and more efficient.

Comparing current project needs to those 20 years ago

Being a software development team now involves all team members performing a mysterious ritual of standing up together for 15 minutes in the morning and drawing occult symbols with post-its.

In comparison to 20 years ago ;)

Language tooling is richer today. A programming language was usually a compiler and perhaps a debugger. Today, languages usually come with a linter, a source code formatter, template creators, self-update ability and a list of arguments that you can use in a debate against the competing language.

How coding became much more supported in comparison to the last 20 years

There is StackOverflow which simply didn’t exist back then. Asking a programming question involved talking to your colleagues.

20 years ago StackOverflow wouldn't give you a hand

Since we have much faster CPUs now, numerical calculations are done in Python which is much slower than Fortran. So numerical calculations basically take the same amount of time as they did 20 years ago.

Python vs Fortran ;)

I am not sure how but one kind soul somehow found the project, forked it, refactored it, "modernized" it, added linting, code sniffing, added CI and opened the pull request.

It's worth sharing your code, since someone can always find it and improve it, so that you can learn from it

It is solved when you understand why it occurred and why it no longer does.

What does it mean for a problem to be solved?

Let's reason through our memoizer before we write any code.

Operations performed by a memoizer:

Which is written as:

// Takes a reference to a function
const memoize = func => {
  // Creates a cache of results
  const results = {};
  // Returns a function
  return (...args) => {
    // Create a key for results cache
    const argsKey = JSON.stringify(args);
    // Only execute func if no cached value
    // (check for the key, not the value, so falsy results such as 0 are cached too)
    if (!(argsKey in results)) {
      // Store function call result in cache
      results[argsKey] = func(...args);
    }
    // Return cached value
    return results[argsKey];
  };
};

Let's replicate our inefficientSquare example, but this time we'll use our memoizer to cache results.

Replication of a function with the use of memoizer (check the code below this annotation)

The biggest problem with JSON.stringify is that it doesn't serialize certain inputs, like functions and Symbols (and anything you wouldn't find in JSON).

Problem with JSON.stringify.

This is why the previous code shouldn't be used in production

Memoization is an optimization technique used in many programming languages to reduce the number of redundant, expensive function calls. This is done by caching the return value of a function based on its inputs.

Memoization (simple definition)

The best way to explain the difference between launch and attach is to think of a launch configuration as a recipe for how to start your app in debug mode before VS Code attaches to it, while an attach configuration is a recipe for how to connect VS Code's debugger to an app or process that's already running.

Simple explanation of the difference between the two core debugging modes available in VS Code: launch and attach.

Depending on the request (attach or launch), different attributes are required, and VS Code's launch.json validation and suggestions should help with that.

Logpoint is a variant of a breakpoint that does not "break" into the debugger but instead logs a message to the console. Logpoints are especially useful for injecting logging while debugging production servers that cannot be paused or stopped. A Logpoint is represented by a "diamond" shaped icon. Log messages are plain text but can include expressions to be evaluated within curly braces ('{}').

Logpoints log a message to the console when they are hit, without breaking into the debugger.

Can include expressions to be evaluated with {}, e.g.:

fib({num}): {result}

Here are some optional attributes available to all launch configurations:

Optional arguments for launch.json:

- presentation ("order", "group" or "hidden")
- preLaunchTask
- postDebugTask
- internalConsoleOptions
- debugServer
- serverReadyAction

The following attributes are mandatory for every launch configuration:

In the launch.json file, you have to define at least these 3 attributes:

- type (e.g. "node", "php", "go")
- request ("launch" or "attach")
- name (name to appear in the Debug launch configuration drop-down)

Many debuggers support some of the following attributes:

Some of the possibly supported attributes in launch.json:

- program
- args
- env
- cwd
- port
- stopOnEntry
- console (e.g. "internalConsole", "integratedTerminal", "externalTerminal")

Version control is at the heart of any modern engineering org. The ability for multiple engineers to asynchronously contribute to a codebase is crucial, and with notebooks, it’s very hard.

Version control in notebooks?

The priorities in building a production machine learning pipeline—the series of steps that take you from raw data to product—are not fundamentally different from those of general software engineering.

Reproducibility is an issue with notebooks. Because of the hidden state and the potential for arbitrary execution order, generating a result in a notebook isn’t always as simple as clicking “Run All.”

Problem of reproducibility in notebooks

A notebook, at a very basic level, is just a bunch of JSON that references blocks of code and the order in which they should be executed. But notebooks prioritize presentation and interactivity at the expense of reproducibility. YAML is the other side of that coin, ignoring presentation in favor of simplicity and reproducibility, which makes it much better for production.

Summary of the article:

Notebook = presentation + interactivity

YAML = simplicity + reproducibility

Notebook files, however, are essentially giant JSON documents that contain the base-64 encoding of images and binary data. For a complex notebook, it would be extremely hard for anyone to read through a plaintext diff and draw meaningful conclusions—a lot of it would just be rearranged JSON and unintelligible blocks of base-64.

Git tracks plaintext differences, which is a problem with notebooks
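To make the point concrete, here is a sketch of a tiny notebook in the nbformat 4 JSON layout (the cell contents and the truncated base-64 PNG string are made up). The code you actually care about is buried among execution counts, output metadata and encoded images, all of which change whenever the notebook is re-run, so a plaintext diff is noisy. The `code_sources` helper is hypothetical.

```python
import json

# A tiny, hypothetical notebook in the nbformat 4 JSON layout.
NOTEBOOK = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
        {
            "cell_type": "code",
            "execution_count": 2,
            "metadata": {},
            "source": ["x = 1\n", "x + 1"],
            "outputs": [{
                "output_type": "execute_result",
                "execution_count": 2,
                "metadata": {},
                # Rich outputs such as images are stored inline as base-64.
                "data": {"text/plain": ["2"],
                         "image/png": "iVBORw0KGgoAAAANSUhEUg..."},
            }],
        },
    ],
}, indent=1)


def code_sources(notebook_json: str) -> list[str]:
    """Return the source of every code cell, ignoring outputs and metadata."""
    nb = json.loads(notebook_json)
    return ["".join(cell["source"])
            for cell in nb["cells"] if cell["cell_type"] == "code"]


print(code_sources(NOTEBOOK))
```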

There is no hidden state or arbitrary execution order in a YAML file, and any changes you make to it can easily be tracked by Git

Compared with notebooks, YAML works better with Git and in the end might be a better solution for ML

Python unit testing libraries, like unittest, can be used within a notebook, but standard CI/CD tooling has trouble dealing with notebooks for the same reasons that notebook diffs are hard to read.

The unittest library can run inside a notebook, but standard CI/CD tooling doesn't work well with notebooks
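A sketch of how unittest can be run from a notebook cell. Building and running the suite explicitly avoids `unittest.main()`, which by default parses `sys.argv` and calls `sys.exit()` when it finishes (passing `exit=False` is another common workaround). The `add` function and its tests are hypothetical; note that none of this helps a CI pipeline that expects plain `.py` test files.

```python
import unittest


def add(a: int, b: int) -> int:
    """Hypothetical function under test."""
    return a + b


class TestAdd(unittest.TestCase):
    def test_small_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_negative_numbers(self):
        self.assertEqual(add(-1, -1), -2)


# Load and run the tests explicitly so nothing tries to exit the kernel.
suite = unittest.TestLoader().loadTestsFromTestCase(TestAdd)
result = unittest.TextTestRunner(verbosity=2).run(suite)
print(result.wasSuccessful())
```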

Use camelCase when naming objects, functions, and instances.

camelCase for objects, functions and instances

const thisIsMyObject = {};
function thisIsMyFunction() {}

Use PascalCase only when naming constructors or classes.

PascalCase for constructors and classes

// good
class User {
  constructor(options) {
    this.name = options.name;
  }
}

const good = new User({
  name: 'yup',
});

Use uppercase only in constants.

Uppercase for constants

export const API_KEY = 'SOMEKEY';

If you'd just like to see refactorings without Quick Fixes, you can use the Refactor command (Ctrl+Shift+R).

To easily see all the refactoring options, use the "Refactor" command

Good old Uncle Bob says that, on top of a full-time job, you should spend 20 hours a week on programming. Divided over the 7 days of the week, that comes to almost 3 hours a day. For some that is little, for others a lot.

Uncle Bob's advice: ~ 3h/day for programming

Suppose you have only two rolls of the die. Then your best strategy would be to take the first roll if its outcome is more than its expected value (i.e. 3.5) and to roll again if it is less.

Expected payoff of a dice game:

Description: You have the option to throw a die up to three times. You will earn the face value of the die. You have the option to stop after each throw and walk away with the money earned. The earnings are not additive. What is the expected payoff of this game?

Rolling twice: $$\frac{1}{6}(6+5+4) + \frac{1}{2}\cdot 3.5 = 4.25.$$

Rolling three times: $$\frac{1}{6}(6+5) + \frac{2}{3}\cdot 4.25 = 4 + \frac{2}{3}$$
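The same backward-induction argument can be sketched in a few lines with exact fractions: with one throw left the value is the die's mean, 7/2, and with more throws left you keep a face only if it beats the value of continuing. The function name `expected_payoff` is hypothetical.

```python
from fractions import Fraction


def expected_payoff(rolls: int) -> Fraction:
    """Expected payoff of the stop-or-reroll die game with up to `rolls` throws."""
    value = Fraction(7, 2)  # one throw left: (1 + 2 + ... + 6) / 6
    for _ in range(rolls - 1):
        # Keep a face if it beats the value of continuing, else roll again.
        value = sum(max(Fraction(face), value) for face in range(1, 7)) / 6
    return value


for n in (1, 2, 3):
    print(n, expected_payoff(n), float(expected_payoff(n)))
```

For two and three throws this reproduces 17/4 = 4.25 and 14/3 = 4 + 2/3 from the formulas above.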

Therefore, $$e_n = 2^{n+1} - 2 = 2(2^n - 1)$$

Simplified formula for the expected number of tosses (e) to get n consecutive heads (n≥1):

$$e_n=2(2^n-1)$$

For example, to get 5 consecutive heads, we have to toss the coin 62 times on average:

$$e_5=2(2^5-1)=62$$

We can also start with the longer analysis of the 5 scenarios:

Thus:

$$e=\frac{1}{2}(e+1)+\frac{1}{4}(e+2)+\frac{1}{8}(e+3)+\frac{1}{16}(e+4)+\frac{1}{32}(e+5)+\frac{1}{32}(5) \;\Rightarrow\; e=62$$

We can also generalise the formula to:

$$e_n=\frac{1}{2}(e_n+1)+\frac{1}{4}(e_n+2)+\frac{1}{8}(e_n+3)+\frac{1}{16}(e_n+4)+\cdots +\frac{1}{2^n}(e_n+n)+\frac{1}{2^n}(n)$$
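The general equation is linear in $e_n$, so it can be solved exactly and checked against the closed form $2(2^n-1)$. A minimal sketch with exact fractions; the function name `expected_tosses` is hypothetical.

```python
from fractions import Fraction


def expected_tosses(n: int) -> Fraction:
    """Solve the first-step equation for e_n exactly.

    Scenario k (k = 1..n): k-1 heads then a tail, probability 1/2**k;
    k tosses are spent and we start over. Final scenario: n heads in a
    row, probability 1/2**n, finished after n tosses.
    """
    restart_prob = sum(Fraction(1, 2**k) for k in range(1, n + 1))
    constant = sum(Fraction(k, 2**k) for k in range(1, n + 1)) + Fraction(n, 2**n)
    # e = restart_prob * e + constant  =>  e = constant / (1 - restart_prob)
    return constant / (1 - restart_prob)


for n in range(1, 11):
    assert expected_tosses(n) == 2 * (2**n - 1)  # matches the closed form
print(expected_tosses(5))  # prints 62
```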

It's responsible for allocating and scheduling containers, providing them with abstracted functionality like internal networking and file storage, and then monitoring the health of all of these elements and stepping in to repair or adjust them as necessary. In short, it's all about abstracting how, when and where containers are run.

Kubernetes (simple explanation)

You’ll see pressure to push towards “Cloud neutral” solutions using Kubernetes in various places

Kubernetes may have the advantage of being cloud neutral, but you pay the cost of a cloud migration:

Heroku? App Services? App Engine?

You can get yourself set up in production in anywhere from minutes to a few hours

Kubernetes (often irritatingly abbreviated to k8s, along with its wonderful ecosystem of esoterically named additions like helm, and flux) requires a full time ops team to operate, and even in “managed vendor mode” on EKS/AKS/GKE the learning curve is far steeper than the alternatives.

Kubernetes:

Azure App Services, Google App Engine and AWS Lambda will be several orders of magnitude more productive for you as a programmer. They’ll be easier to operate in production, and more explicable and supported.

Use the closest thing to a pure-managed platform as you possibly can. It will be easier to operate in production, and more explicable and supported:

With the popularisation of docker and containers, there’s a lot of hype gone into things that provide “almost platform like” abstractions over Infrastructure-as-a-Service. These are all very expensive and hard work.

Kubernetes isn't necessary unless you work on huge problems

By using events that are buffered in queues, your system can support outage, scaling up and scaling down, and rolling upgrades without any special consideration. Its normal mode of operation is “read from a queue”, and this doesn’t change in exceptional circumstances.

Event driven architectures with replay / message logs
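A toy, single-process sketch of the idea, assuming an append-only Python list stands in for a durable queue or message log. The consumer remembers its offset, so after an "outage" it simply resumes reading, and replaying from offset 0 into a fresh consumer rebuilds the same state. `EventLog` and `BalanceProjection` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Append-only log standing in for a durable queue (hypothetical)."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> None:
        self.events.append(event)

    def read_from(self, offset: int) -> list:
        return self.events[offset:]


@dataclass
class BalanceProjection:
    """Consumer that builds its state purely by reading events."""
    offset: int = 0
    balance: int = 0

    def poll(self, log: EventLog) -> None:
        # Normal operation is always "read from the queue", starting
        # wherever we left off, whether or not we were down in between.
        for event in log.read_from(self.offset):
            self.balance += event["amount"]
            self.offset += 1


log = EventLog()
consumer = BalanceProjection()
log.append({"amount": 100})
consumer.poll(log)
# The consumer is "down" while these arrive; they wait in the log.
log.append({"amount": -30})
log.append({"amount": 5})
consumer.poll(log)
# Replaying from offset 0 into a fresh consumer rebuilds the same state.
rebuilt = BalanceProjection()
rebuilt.poll(log)
print(consumer.balance, rebuilt.balance)  # prints 75 75
```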

Reserved resources, capacity, or physical hardware can be protected for pieces of your software, so that an outage in one part of your system doesn’t ripple down to another.

Idea of Bulkheads
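A minimal in-process sketch of a bulkhead, assuming each downstream dependency gets its own fixed pool of concurrency slots (here a `threading.BoundedSemaphore`). When one compartment is full, calls to it fail fast while other compartments keep working. The `Bulkhead` class and the payments/search names are hypothetical; real systems often use separate thread pools, connection pools or hardware instead.

```python
import threading


class Bulkhead:
    """Caps concurrent calls to one dependency (hypothetical helper)."""

    def __init__(self, max_concurrent: int) -> None:
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def try_call(self, func, *args):
        # Fail fast instead of waiting when this compartment is full.
        if not self._slots.acquire(blocking=False):
            raise RuntimeError("bulkhead full")
        try:
            return func(*args)
        finally:
            self._slots.release()


payments = Bulkhead(max_concurrent=1)
search = Bulkhead(max_concurrent=1)


def nested():
    # While this call holds the only payments slot, another payments call
    # is rejected, but the separate search compartment still works.
    try:
        payments.try_call(lambda: "second payment")
        blocked = False
    except RuntimeError:
        blocked = True
    return blocked, search.try_call(lambda: "search ok")


print(payments.try_call(nested))  # prints (True, 'search ok')
```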

The complementary design pattern for all your circuit breakers – you need to make sure that you wrap all outbound connections in a retry policy, and a back-off.

Idempotency and Retries design pattern
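A minimal sketch of the two halves working together, under these assumptions: the client retries on `ConnectionError` with an exponential back-off (the delay doubles each time), and the receiver is idempotent because it remembers results by an idempotency key, so a retried request whose first response was lost is not applied twice. `retry`, `charge`, `call_charge` and the key "order-42" are hypothetical; `sleep` is injectable so the example runs instantly.

```python
import time


def retry(func, attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call func, retrying on ConnectionError with exponential back-off."""
    for attempt in range(attempts):
        try:
            return func()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # 0.1, 0.2, 0.4, ...


# Idempotent receiver: results are remembered by idempotency key,
# so a retried request is applied at most once.
processed = {}


def charge(idempotency_key: str, amount: int) -> str:
    if idempotency_key not in processed:
        processed[idempotency_key] = f"charged {amount}"
    return processed[idempotency_key]


attempts_made = 0


def call_charge() -> str:
    # Hypothetical client call: the charge reaches the receiver, but the
    # first two responses are "lost", so the client sees a failure.
    global attempts_made
    attempts_made += 1
    result = charge("order-42", 100)
    if attempts_made < 3:
        raise ConnectionError("response lost")
    return result


delays = []
print(retry(call_charge, sleep=delays.append))  # prints charged 100
print(delays, len(processed))                   # prints [0.1, 0.2] 1
```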

Circuit breaking is a useful distributed system pattern where you model out-going connections as if they’re an electrical circuit. By measuring the success of calls over any given circuit, if calls start failing, you “blow the fuse”, queuing outbound requests rather than sending requests you know will fail.

Circuit breaking - a useful distributed system pattern. It's a phenomenal way to make sure you don't fail when you know you might.
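A minimal circuit breaker sketch. One deliberate simplification: the description above queues outbound requests while the fuse is blown, whereas this version rejects them immediately with a `CircuitOpenError` (a caller could catch it and queue the request). After `reset_after` seconds, one trial call is allowed through; success closes the circuit, failure re-opens it. The class names and parameters are hypothetical, and the clock is injectable so the example runs instantly.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling a dependency known to be failing."""


class CircuitBreaker:
    """Opens after max_failures consecutive failures (hypothetical API)."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; not calling")
            # Otherwise we are "half-open": let one trial call through.
        try:
            result = func(*args)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # blow the fuse (again)
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result


now = [0.0]
breaker = CircuitBreaker(max_failures=2, reset_after=10.0, clock=lambda: now[0])


def failing():
    raise ConnectionError("downstream is down")


for _ in range(2):
    try:
        breaker.call(failing)
    except ConnectionError:
        pass

try:
    breaker.call(lambda: "ok")
except CircuitOpenError:
    print("rejected without calling the dependency")

now[0] = 11.0  # after reset_after, one trial call is allowed
print(breaker.call(lambda: "ok"))  # prints ok
```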

“scaling out is the only cost-effective thing”, but plenty of successful companies managed to scale up with a handful of large machines or VMs

Scaling is hard if you try to do it yourself, so absolutely don’t try to do it yourself. Use vendor-provided cloud abstractions like Google App Engine, Azure Web Apps or AWS Lambda with autoscaling support enabled if you possibly can.

Scaling should be done with cloud abstractions

Hexagonal architectures, also known as “the ports and adapters” pattern

Hexagonal architectures - one of the better pieces of "real application architecture" advice.
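A minimal sketch of ports and adapters: the application core talks only to a port (an interface, here a `typing.Protocol`), and concrete adapters such as an in-memory store for tests or a database client in production plug into it. `OrderRepository`, `InMemoryOrderRepository` and `OrderService` are hypothetical names.

```python
from typing import Protocol


class OrderRepository(Protocol):
    """Port: what the core needs from storage, with no technology in it."""

    def save(self, order_id: str, total: int) -> None: ...

    def get(self, order_id: str) -> int: ...


class InMemoryOrderRepository:
    """Adapter: one concrete implementation of the port (e.g. for tests)."""

    def __init__(self) -> None:
        self._orders: dict[str, int] = {}

    def save(self, order_id: str, total: int) -> None:
        self._orders[order_id] = total

    def get(self, order_id: str) -> int:
        return self._orders[order_id]


class OrderService:
    """Application core: depends only on the port, never on an adapter."""

    def __init__(self, repo: OrderRepository) -> None:
        self.repo = repo

    def place_order(self, order_id: str, prices: list[int]) -> int:
        total = sum(prices)
        self.repo.save(order_id, total)
        return total


service = OrderService(InMemoryOrderRepository())
service.place_order("A1", [300, 200])
print(service.repo.get("A1"))  # prints 500
```

Swapping the in-memory adapter for, say, a database-backed one would not require any change to `OrderService`.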

Good microservice design follows a few simple rules

Microservice design rules:

What Microservices are supposed to be: Small, independently useful, independently versionable, independently shippable services that execute a specific domain function or operation. What Microservices often are: Brittle, co-dependent, myopic services that act as data access objects over HTTP that often fail in a domino like fashion.

What Microservices are supposed to be: independent

VS

what they often are: dependent

In the mid-90s, “COM+” (Component Services) and SOAP were popular because they reduced the risk of deploying things, by splitting them into small components

History of Microservices: