12 Matching Annotations

- Mar 2023

-

www.ndss-symposium.org

- Jan 2023

-

www.sciencedirect.com

-

Highlights

- We exploit language differences to study the causal effect of fake news on voting.

- Language affects exposure to fake news.

- German-speaking voters from South Tyrol (Italy) are less likely to be exposed to misinformation.

- Exposure to fake news favours populist parties regardless of prior support for populist parties.

- However, fake news alone cannot explain most of the growth in populism.

-

-

euvsdisinfo.eu

-

The uptake of mis- and disinformation is intertwined with the way our minds work. The large body of research on the psychological aspects of information manipulation explains why.

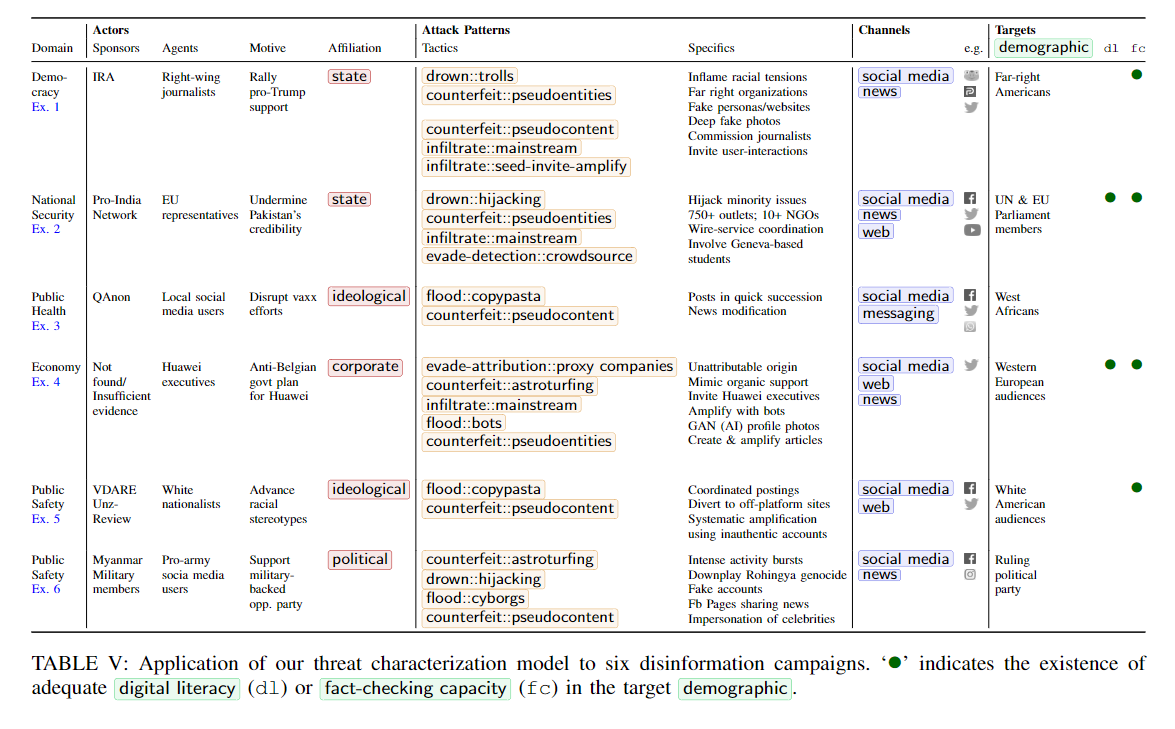

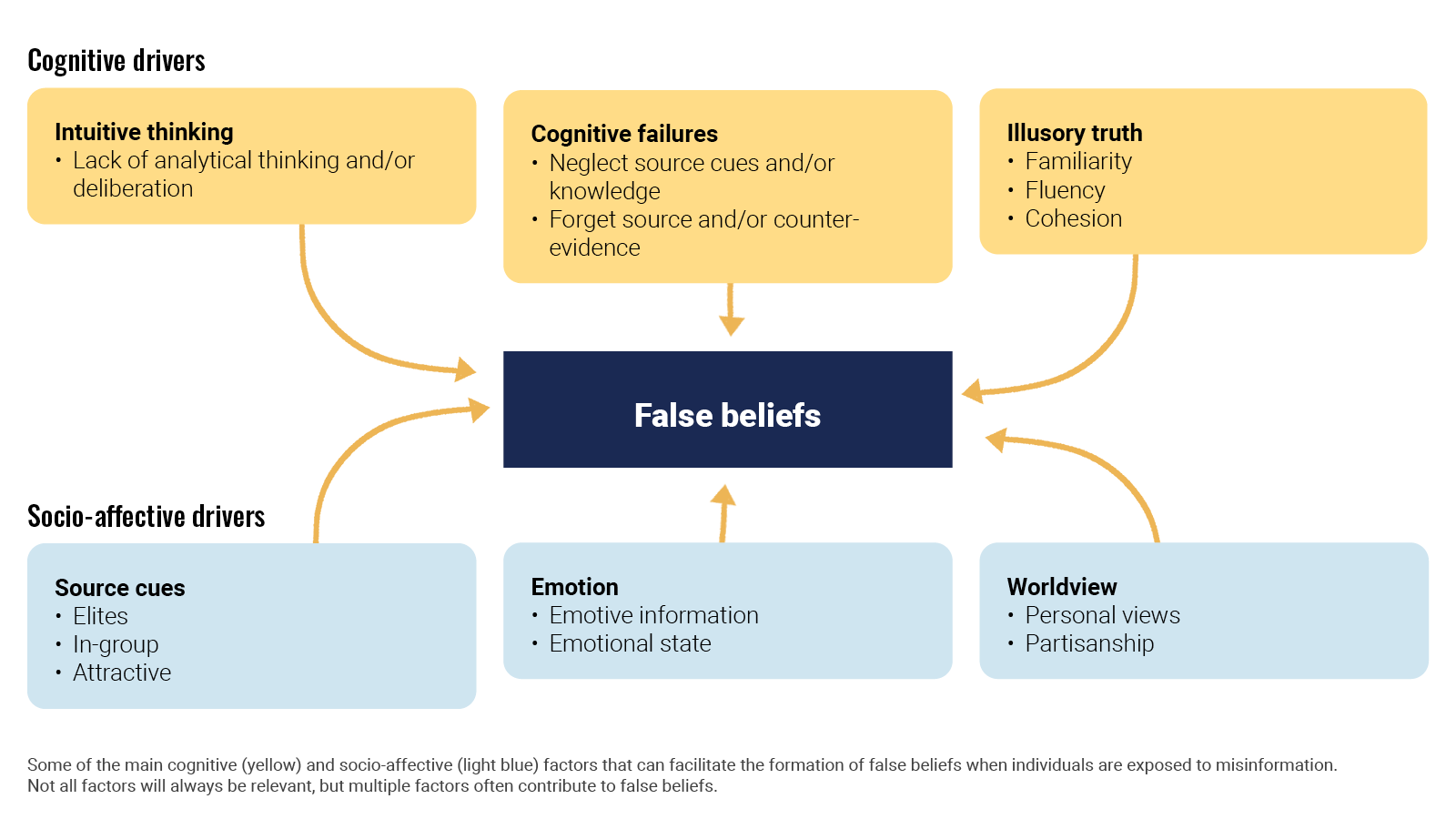

In an article for Nature Reviews Psychology, Ullrich K. H. Ecker et al. looked at the cognitive, social, and affective factors that lead people to form or even endorse misinformed views. Ironically enough, false beliefs generally arise through the same mechanisms that establish accurate beliefs. It is a mix of cognitive drivers like intuitive thinking and socio-affective drivers. When deciding what is true, people are often biased to believe in the validity of information and to trust their intuition instead of deliberating. Also, repetition increases belief in both misleading information and facts.

Ecker, U.K.H., Lewandowsky, S., Cook, J. et al. (2022). The psychological drivers of misinformation belief and its resistance to correction.

Going a step further, Álex Escolà-Gascón et al. investigated the psychopathological profiles that characterise people prone to consuming misleading information. After running a number of tests on more than 1,400 volunteers, they concluded that people with high scores in schizotypy (a condition not too dissimilar from schizophrenia), paranoia, and histrionism (more commonly known as dramatic personality disorder) are more vulnerable to the negative effects of misleading information. People who do not detect misleading information also tend to be more anxious, suggestible, and vulnerable to strong emotions.

-

-

www.danielpipes.org

-

Americans especially tend reflexively to dismiss the idea of conspiracy. Living in a political culture ignorant of secret police, a political underground, and coups d'état,

Not anymore.

-

- Dec 2022

-

www.nature.com

-

In the co-share network, a cluster of websites shared more by conservatives is also shared more by users with higher misinformation exposure scores.

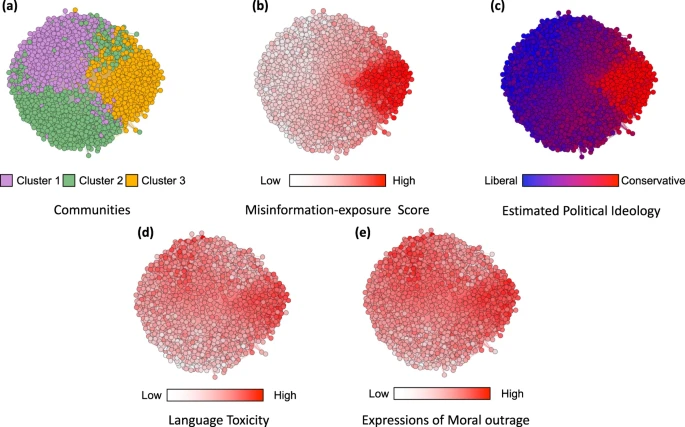

Nodes represent website domains shared by at least 20 users in our dataset and edges are weighted based on common users who shared them. (a) Separate colors represent different clusters of websites determined using community-detection algorithms [29]. (b) The intensity of the color of each node shows the average misinformation-exposure score of users who shared the website domain (darker = higher PolitiFact score). (c) Nodes’ color represents the average estimated ideology of the users who shared the website domain (red: conservative, blue: liberal). (d) The intensity of the color of each node shows the average use of language toxicity by users who shared the website domain (darker = higher use of toxic language). (e) The intensity of the color of each node shows the average expression of moral outrage by users who shared the website domain (darker = higher expression of moral outrage). Nodes are positioned using directed-force layout on the weighted network.
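Note: the network construction described in this caption can be sketched in a few lines. This is only an illustration, not the authors' code. The function name `build_coshare_network`, the `(user, domain)` input format, and the toy data are assumptions; the 20-user threshold and the "common users" edge weight come from the caption.

```python
from collections import defaultdict
from itertools import combinations


def build_coshare_network(shares, min_users=20):
    """Build a co-share network from (user_id, domain) pairs.

    Hypothetical helper: nodes are domains shared by at least `min_users`
    distinct users; an edge's weight is the number of users who shared
    both domains.
    """
    users_by_domain = defaultdict(set)
    for user, domain in shares:
        users_by_domain[domain].add(user)

    # Keep only domains that meet the sharing threshold.
    nodes = {d: u for d, u in users_by_domain.items() if len(u) >= min_users}

    # Weight each pair of remaining domains by their common sharers.
    edges = {}
    for a, b in combinations(sorted(nodes), 2):
        weight = len(nodes[a] & nodes[b])
        if weight > 0:
            edges[(a, b)] = weight
    return nodes, edges


if __name__ == "__main__":
    # Toy data with a lowered threshold so the example stays small.
    toy = [("u1", "a.com"), ("u2", "a.com"), ("u1", "b.com"),
           ("u2", "b.com"), ("u3", "b.com"), ("u3", "c.com")]
    nodes, edges = build_coshare_network(toy, min_users=2)
    print(sorted(nodes), edges)
```

Community detection and the force-directed layout mentioned in the caption would run on top of this weighted edge list; they are left out here because the excerpt does not say which implementations were used.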

-

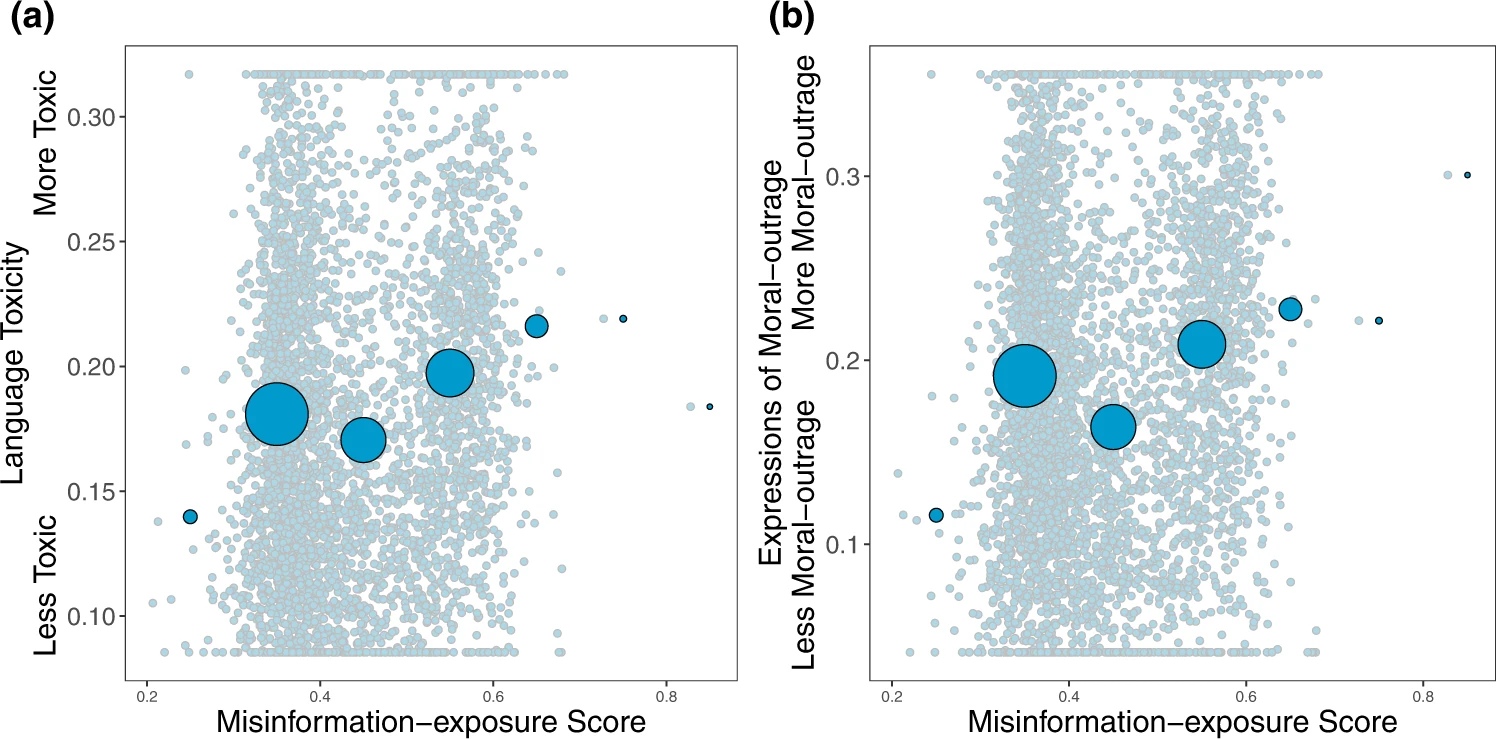

Exposure to elite misinformation is associated with the use of toxic language and moral outrage.

Shown is the relationship between users’ misinformation-exposure scores and (a) the toxicity of the language used in their tweets, measured using the Google Jigsaw Perspective API [27], and (b) the extent to which their tweets involved expressions of moral outrage, measured using the algorithm from ref. 28. Extreme values are winsorized by 95% quantile for visualization purposes. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin. Source data are provided as a Source Data file.
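Note: the two plotting steps named in this caption (winsorizing extreme values and averaging within bins of width 0.1) might look roughly like the sketch below. The function names are hypothetical. Treating "winsorized by 95% quantile" as clipping only the upper tail, and using a nearest-rank quantile, are both assumptions; the caption does not spell out either detail.

```python
import math
from collections import defaultdict


def winsorize_upper(values, q=0.95):
    """Clip values above the q-th quantile (nearest-rank) to that quantile."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered)))
    cap = ordered[rank - 1]
    return [min(v, cap) for v in values]


def binned_means(xs, ys, bin_width=0.1):
    """Average y within fixed-width bins of x.

    Returns {bin_start: (mean_y, count)}; the count is what would drive
    the dot size in the figure.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for x, y in zip(xs, ys):
        # Small epsilon guards against float error at bin edges (0.3 / 0.1).
        idx = math.floor(x / bin_width + 1e-9)
        totals[idx][0] += y
        totals[idx][1] += 1
    return {
        round(idx * bin_width, 10): (total / count, count)
        for idx, (total, count) in sorted(totals.items())
    }


if __name__ == "__main__":
    scores = [0.05, 0.07, 0.31]       # misinformation-exposure scores (toy)
    toxicity = [1.0, 3.0, 5.0]        # outcome values (toy)
    print(binned_means(scores, winsorize_upper(toxicity)))
```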

-

-

www.nature.com

-

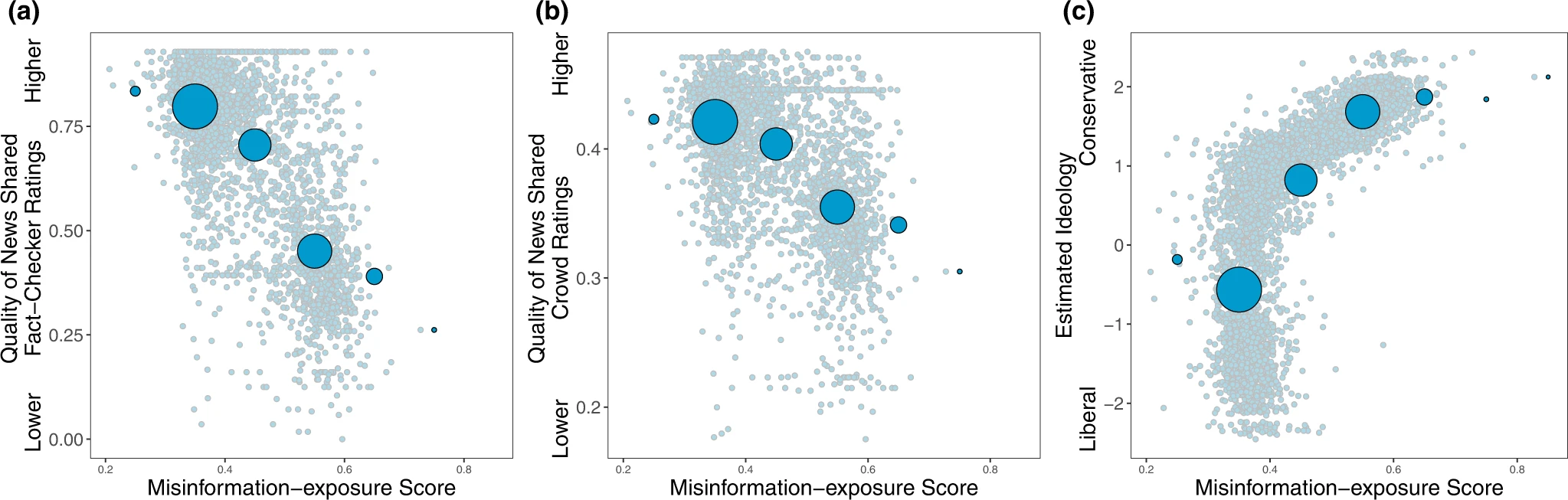

Exposure to elite misinformation is associated with sharing news from lower-quality outlets and with conservative estimated ideology.

Shown is the relationship between users’ misinformation-exposure scores and (a) the quality of the news outlets they shared content from, as rated by professional fact-checkers [21], (b) the quality of the news outlets they shared content from, as rated by layperson crowds [21], and (c) estimated political ideology, based on the ideology of the accounts they follow [10]. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin.

-

-

arxiv.org

-

On Facebook, we identified 51,269 posts (0.25% of all posts) sharing links to Russian propaganda outlets, generating 5,065,983 interactions (0.17% of all interactions); 80,066 posts (0.4% of all posts) sharing links to low-credibility news websites, generating 28,334,900 interactions (0.95% of all interactions); and 147,841 posts sharing links to high-credibility news websites (0.73% of all posts), generating 63,837,701 interactions (2.13% of all interactions). As shown in Figure 2, we notice that the number of posts sharing Russian propaganda and low-credibility news exhibits an increasing trend (Mann-Kendall 𝑃 < .001), whereas after the invasion of Ukraine both time series yield a significant decreasing trend (more prominent in the case of Russian propaganda); high-credibility content also exhibits an increasing trend in the Pre-invasion period (Mann-Kendall 𝑃 < .001), which becomes stable (no trend) in the period afterward.
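Note: the Mann-Kendall test cited here is a rank-based test for a monotonic trend in a time series. A minimal standard-library sketch follows, assuming the usual normal approximation with a tie correction and a two-sided p-value. The excerpt does not say which variant or software the authors used, and the function name and toy series are illustrative only.

```python
import math
from collections import Counter


def mann_kendall(series):
    """Return (S, Z, two-sided p) for the Mann-Kendall trend test.

    S counts later-minus-earlier sign agreements; Z uses the normal
    approximation with continuity correction and a correction for ties.
    """
    n = len(series)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)

    # Variance of S, reduced for groups of tied values.
    tie_term = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values())
    var_s = (n * (n - 1) * (2 * n + 5) - tie_term) / 18

    if var_s == 0 or s == 0:
        z = 0.0
    elif s > 0:
        z = (s - 1) / math.sqrt(var_s)
    else:
        z = (s + 1) / math.sqrt(var_s)

    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return s, z, p


if __name__ == "__main__":
    daily_posts = [3, 5, 6, 8, 9, 12, 14, 15, 18, 21]  # toy increasing series
    s, z, p = mann_kendall(daily_posts)
    print(s, round(z, 3), p < 0.001)
```

A positive S with a small p would correspond to the "increasing trend" wording in the excerpt, and a negative S to the decreasing trend reported after the invasion.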

-

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreaders accounts that are not verified by the platform.

-

-

ieeexplore.ieee.org

-

We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.

-

-

www.mdpi.com

-

Therefore, although the social bot individual is “small”, it has become a “super spreader” with strategic significance. As an intelligent communication subject in the social platform, it conspired with the discourse framework in the mainstream media to form a hybrid strategy of public opinion manipulation.

-

We analyzed and visualized Twitter data during the prevalence of the Wuhan lab leak theory and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.

-