Chris Aldrich's Hypothes.is List by [[Dan Allosso]], accessed on 2026-01-03T07:56:28

- Jan 2026

-

lifelonglearn.substack.com

- Dec 2025

-

-

That is a situation we are now living through, and it is no coincidence that the democratic conversation is breaking down all over the world because the algorithms are hijacking it. We have the most sophisticated information technology in history and we are losing the ability to talk with each other to hold a reasoned conversation.

for - progress trap - social media - misinformation - AI algorithms hijacking and pretending to be human

-

- Nov 2025

-

www.youtube.com

-

What got us to the social media problems is everybody optimizing for a narrow metric of eyeballs at the expense of democracy and kids mental health and addiction and loneliness and no one knowing it. You know, being

for - social media - progress trap

-

-

go.gale.com

-

Instead of writing Eileen and Rhiannon's texts off as derivative, we began to see them as contributions to an ongoing, intertextual conversation about such issues as friendship, loyalty, power, and sexuality.

-

As a form, fanfictions make intertextuality visible because they rely on readers' ability to see relationships between the fan-writer's stories and the original media sources.

What many people who dismiss fan fiction as irrelevant tend to ignore is the deep understanding of a pre-existing setting needed to contextualize the writing, as well as the effort and organization required to build on such settings.

-

While the counters on these sites indicate that they did not receive many visits, Rhiannon did report that they were visited by friends she met online who lived as far away as North Carolina and New Mexico in the United States.

-

-

go.gale.com

-

new tools for working with various modes of communication are producing a change in the way that young people are choosing to construct meaningful texts for themselves and others in their affinity spaces.

As more young people spend more time online, they are developing new ways to express themselves linguistically.

-

Because many young people growing up in a digital world will find their own reasons for becoming literate--reasons that go beyond reading and writing to acquire academic knowledge--it is important to remain open to changes in subject matter learning that will invite and extend the literacy practices they already possess and value.

-

In sum, these young people's penchant for creating online content that was easily distributed and used by others with similar interests was facilitated in part by their ability to remix multimodal texts, use new tools to show and tell, and rewrite their social identities.

-

The storylines through which young people exist in online spaces are highly social as are the literacy skills they employ.

-

-

www.instagram.com

- Sep 2025

-

en.wikipedia.org

- Aug 2025

-

Local file

-

Lanier, J. (2013). Who Owns the Future? Simon & Schuster. https://amzn.to/3YzotPZ

-

- Jul 2025

-

revolution.social

-

https://revolution.social/

-

-

www.manton.org

-

poem Children Learn What They Live by Dorothy Nolte. There are variations of it, but the first line is essentially: If children live with criticism, they learn to condemn.

via Live with criticism, learn to condemn by [[Manton Reece]]

-

- Jun 2025

-

incowrimo.org

-

InCoWriMo is the short name for International Correspondence Writing Month, otherwise known as February. With an obvious nod to NaNoWriMo for the inspiration, InCoWriMo challenges you to hand-write and mail/deliver one letter, card, note or postcard every day during the month of February.

-

- Mar 2025

-

documenta.jesuits.global, Part III

-

All should take special care to guard with great diligence the gates of their senses (especially the eyes, ears, and tongue) from all disorder, to preserve themselves in peace and true humility of their souls, and to show this by their silence when it should be kept and, when they must speak, by the discretion and edification of their words, the modesty of their countenance, the maturity of their walk, and all their movements, without giving any sign of impatience or pride. In all things they should try and desire to give the advantage to the others, esteeming them all in their hearts as if they were their superiors and showing outwardly, in an unassuming and simple religious manner, the respect and reverence appropriate to each one’s state, so that by consideration of one another they may thus grow in devotion and praise God our Lord, whom each one should strive to recognize in the other as in his image.

Great paragraph about our relationship to things and to others.

-

- Feb 2025

-

www.curbed.com

-

“Monet’s Garden” had a selfie station

Selfie station... Instagram...

-

-

spaghettiboost.com

-

‘Instagrammable’

A way to promote; the author argues that this is a common approach for established museums as well.

-

- Jan 2025

-

substack.com

-

when i hear that kids are on their phones all day, i know that their parents are too.

-

- Dec 2024

-

media.dltj.org

-

Social media is the very reason truth is threatened — why our ability to see the world clearly is threatened. Any military expert will tell you that on a real battlefield NATO would be unbeatable to an increasingly weakened Russia. But in this unmoderated vulnerable social media space, our truth is an easy target. And that brings us to how we are under attack.

Social media as a weapon against truth

-

- Nov 2024

-

www.theatlantic.com

-

In its last report before Musk’s acquisition, in just the second half of 2021, Twitter suspended about 105,000 of the more than 5 million accounts reported for hateful conduct. In the first half of 2024, according to X, the social network received more than 66 million hateful-conduct reports, but suspended just 2,361 accounts. It’s not a perfect comparison, as the way X reports and analyzes data has changed under Musk, but the company is clearly taking action far less frequently.
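As a rough check on the scale of the change, the figures quoted above can be turned into suspensions per hateful-conduct report. This is a back-of-the-envelope sketch only: it treats the quoted counts ("more than 5 million", "more than 66 million") as exact, so the computed rates are upper bounds, and the units differ (the 2021 figure counts accounts reported, the 2024 figure counts reports), which is part of why the article calls it "not a perfect comparison."

```python
# Back-of-the-envelope rates from the figures quoted in The Atlantic.
# The denominators are "more than" these values, so the rates are upper bounds.
# Caveat: 2021 counts accounts reported; 2024 counts reports received.

def suspension_rate(suspended: int, reported: int) -> float:
    """Accounts suspended per reported item, as a percentage."""
    return 100 * suspended / reported

# Twitter, second half of 2021 (last report before the acquisition)
before = suspension_rate(105_000, 5_000_000)
# X, first half of 2024
after = suspension_rate(2_361, 66_000_000)

print(f"H2 2021: {before:.2f}% of reported items led to a suspension")
print(f"H1 2024: {after:.4f}% of reported items led to a suspension")
print(f"The per-report rate fell by a factor of roughly {before / after:.0f}")
```

Given the mixed units, the few-hundredfold drop is consistent with the article's conclusion that X acts far less often, but it should not be read as a precise measure.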

-

-

experiments.myhub.ai

-

https://experiments.myhub.ai/ai4communities_post

Mathew Lowry experiment

-

People do not actually spend a lot of time browsing junk content,

The vast majority of people browsing social media streams via the web are doing just this: spending a lot of time browsing junk content.

While much of this "junk content" is for entertainment or serves mental and/or emotional health in some way, at root it becomes the opiate of the masses.

-

- Oct 2024

-

www.webnerd.me

-

Know and Master Your Social Media Data Flow by [[Louis Gray]]

See commentary at https://boffosocko.com/2017/04/11/a-new-way-to-know-and-master-your-social-media-flow/

-

-

mathewlowry.medium.com

-

A Minimum Viable Ecosystem for collective intelligence by [[Mathew Lowry]]

Relation to Louis Gray's 2009 diagram/post: https://boffosocko.com/2017/04/11/a-new-way-to-know-and-master-your-social-media-flow/

-

-

en.wikipedia.org

-

www.youtube.com

-

term spectacle refers to

for - definition - the spectacle - context - the society of the spectacle - cacooning - the spectacle - social media - the spectacle

definition - the spectacle - context - the society of the spectacle - A society where images presented by mass media / mass entertainment not only dominate - but replace real experiences with a superficial reality that is - focused on appearances designed primarily to distract people from reality - This ultimately disconnects them from - themselves and - those around them

comment - How much does our interaction with the virtual reality of - written symbols - audio - video - two-dimensional images - derived from our screens, both large and small, affect our direct experience of life? - When people are distracted by such manufactured entertainment, they have less time to devote to important issues and to connecting with real people - We can sit for hours in social isolation, ignoring our body's need for exercise and our emotional need for real social connection - We can ignore the real crises going on in the world and instead numb ourselves with contrived entertainment

-

- Sep 2024

-

dl.acm.org

-

Shneiderman’s design principles for creativity support tools

Ben Shneiderman's work is deeply influential in HCI; it has helped build strong connections between technology and creativity, especially in fostering innovation.

His 2007 National Science Foundation-funded report on creativity support tools, led by UMD, provides a seminal overview of the definitions of creativity at that time.

-

-

danallosso.substack.com

-

What To Do With Substack? by [[Dan Allosso]]

The "recency" problem is difficult in general on social media, which tends to accentuate it, as opposed to the rest of the open web, which functions more as a network.

-

- Aug 2024

-

connect.iftas.org

-

- Jul 2024

-

substack.com

-

-

pcalv.es

-

www.gurwinder.blog

-

On X, meanwhile, there is a self-propagating system known as “the culture war”. This game consists of trying to score points (likes and retweets) by attacking the enemy political tribe. Unlike in a regular war, the combatants can’t kill each other, only make each other angrier, so little is ever achieved, except that all players become stressed by constant bickering. And yet they persist in bickering, if only because their opponents do, in an endless state of mutually assured distraction.

-

On Instagram, the main self-propagating system is a beauty pageant. Young women compete to be as pretty as possible, going to increasingly extreme lengths: makeup, filters, fillers, surgery. The result is that all women begin to feel ugly, online and off.

-

These features turned social media into the world’s most addictive status game.

-

- Jun 2024

-

disruptedjournal.postdigitalcultures.org

-

In this respect, we join Fitzpatrick (2011) in exploring “the extent to which the means of media production and distribution are undergoing a process of radical democratization in the Web 2.0 era, and a desire to test the limits of that democratization”

Comment by chrisaldrich: Something about this is reminiscent of WordPress' mission to democratize publishing. We can also compare it to Facebook, whose (stated) mission is to connect people, while its actual mission is to make money by seemingly radicalizing people to the extremes of our political spectrum.

This highlights the fact that many view content moderation on platforms like Facebook, whether removing people's voices or, in the case of people like Donald J. Trump or Alex Jones, deplatforming them, as an anti-democratic move. In fact, it is not. Because Facebook actively accelerates extreme ideas by pushing them algorithmically, the platform itself is actively un-democratic. Democratic behavior on Facebook would look like one voice, one account, and reach only commensurate with that person's standing in real life. Instead, the algorithmic timeline gives far outsized influence and reach to some of the most extreme voices on the platform. This is patently un-democratic.

-

-

docdrop.org

-

meta they just rolled out they're like hey if you want to pay a certain subscription we will show your stuff to your followers 00:03:14 on Instagram and Facebook

for - example - social media platforms bleeding content producers - Meta - Facebook - Instagram

-

- May 2024

-

spec.matrix.org

-

Every event graph has a single root event with no parent

Weird. That means one user must start a topic, whereas a topic like "Obama" could be started by multiple folks who don't know about each other and who later discover and interconnect their reasoning, if they so wish.

-

Extensible user management (inviting, joining, leaving, kicking, banning) mediated by a power-level based user privilege system

Additionally: community-based management, ban polls.

Alternative: per-user configuration of access. Let rooms be topics on which peers discuss. A friend can see what his friends and FOAFs (friends of a friend) are saying.
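To make the quoted constraint concrete, here is a minimal sketch of an event graph as a plain mapping from each event to its parent events, with a check for the single-root property. The dictionary representation, event names, and function names are hypothetical illustrations, not the Matrix wire format or any Matrix SDK API. The second example models the alternative raised above, where two people start the same topic independently and later link their threads; that graph has two roots and so fails the check.

```python
from typing import Dict, List

# Hypothetical representation: event id -> ids of its parent events.
EventGraph = Dict[str, List[str]]

def roots(graph: EventGraph) -> List[str]:
    """Events with no parent."""
    return [eid for eid, parents in graph.items() if not parents]

def is_single_rooted_dag(graph: EventGraph) -> bool:
    """True if the graph has exactly one root, no unknown parents, and no cycles."""
    if len(roots(graph)) != 1:
        return False
    if any(p not in graph for parents in graph.values() for p in parents):
        return False  # refers to an event we have never seen
    # Repeatedly place events whose parents are all placed; leftovers mean a cycle.
    remaining = dict(graph)
    placed = set()
    progress = True
    while remaining and progress:
        progress = False
        for eid, parents in list(remaining.items()):
            if all(p in placed for p in parents):
                placed.add(eid)
                del remaining[eid]
                progress = True
    return not remaining

# One user starts the room; later messages branch and merge back.
single_root = {
    "create": [],
    "msg_a": ["create"],
    "msg_b": ["create"],
    "merge": ["msg_a", "msg_b"],
}

# Two people start an "Obama" thread independently, then interconnect them.
two_roots = {
    "alice_start": [],
    "bob_start": [],
    "link": ["alice_start", "bob_start"],
}

print(is_single_rooted_dag(single_root))  # True
print(is_single_rooted_dag(two_roots))    # False
```

Relaxing the `len(roots(graph)) != 1` test to allow several roots is one way to express the multi-origin topics the annotation describes; everything else about the graph (parents must exist, no cycles) would still apply.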

-

- Apr 2024

-

typepals.com

-

Pen pals with typewriters. Pre-Twitter/Facebook social media modality.

-

-

snarfed.org

-

journal.jatan.space

-

Introducing a network for thoughtful conversations by [[Jatan Mehta]]

-

Reply by writing a blog post

This has broadly been implemented by Tumblr and is a first-class feature within the IndieWeb.

-

The system will check if the link being submitted has an associated RSS feed, particularly one with an explicit title field instead of just a date, and only then allow posting it. Blogs, many research journals, YouTube channels, and podcasts have RSS feeds to aid reading and distribution, whereas things like tweets, Instagram photos, and LinkedIn posts don’t. So that’s a natively available filter on the web for us to utilize.

The existence of an RSS feed could be used as a filter to remove large swaths of social media content, which generally lacks one.
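The filter described in the quote can be sketched with Python's standard library alone: look for feed autodiscovery links (`<link rel="alternate" type="application/rss+xml">` or the Atom equivalent) in a page's HTML, then check that the feed's items carry titles rather than just dates. This is a minimal, offline sketch under stated assumptions: the HTML and feed XML are passed in as strings rather than fetched, only RSS 2.0 `<item>` elements are checked for titles, and the function names are hypothetical, not the system's actual implementation.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

FEED_TYPES = {"application/rss+xml", "application/atom+xml"}

class FeedLinkFinder(HTMLParser):
    """Collects the href of every <link rel="alternate"> that advertises a feed."""

    def __init__(self):
        super().__init__()
        self.feed_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        rels = attr.get("rel", "").lower().split()
        if "alternate" in rels and attr.get("type", "").lower() in FEED_TYPES:
            self.feed_urls.append(attr.get("href", ""))

def find_feed_links(page_html: str) -> list:
    finder = FeedLinkFinder()
    finder.feed(page_html)  # HTMLParser.feed: parse this chunk of HTML
    finder.close()
    return finder.feed_urls

def items_have_titles(feed_xml: str) -> bool:
    """True if an RSS 2.0 feed has items and every item has a non-empty <title>."""
    root = ET.fromstring(feed_xml)
    items = root.findall("./channel/item")
    return bool(items) and all((item.findtext("title") or "").strip() for item in items)

def allow_submission(page_html: str, feed_xml: str) -> bool:
    """Accept a link only if its page advertises a feed whose items are titled."""
    return bool(find_feed_links(page_html)) and items_have_titles(feed_xml)

blog_page = '<html><head><link rel="alternate" type="application/rss+xml" href="/feed.xml"></head></html>'
blog_feed = '<rss version="2.0"><channel><title>Blog</title><item><title>Post one</title></item></channel></rss>'
dated_feed = '<rss version="2.0"><channel><item><pubDate>Mon, 01 Apr 2024</pubDate></item></channel></rss>'
social_post = "<html><head><title>A post</title></head></html>"

print(allow_submission(blog_page, blog_feed))   # True
print(allow_submission(blog_page, dated_feed))  # False: items carry only dates
print(find_feed_links(social_post))             # []
```

In practice the feed URL found on the page would first be resolved against the page URL (for example with `urllib.parse.urljoin`) and fetched before the title check; that network step is left out here.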

-

-

shareopenly.org

-

ShareOpenly (https://shareopenly.org/), built by Ben Werdmuller

-

- Mar 2024

-

thebaffler.com

-

Ongweso Jr., Edward. “The Miseducation of Kara Swisher: Soul-Searching with the Tech ‘Journalist.’” The Baffler, March 29, 2024. https://thebaffler.com/latest/the-miseducation-of-kara-swisher-ongweso.

ᔥ[[Pete Brown]] in Exploding Comma

Tags

- bad technology

- Satya Nadella

- techno-utopianism

- Travis Kalanick (Uber)

- attention economy

- toxic technology

- Sheryl Sandberg

- acceleration

- Sundar Pichai

- Tony West

- surveillance capitalism

- diversity equity and inclusion

- Kara Swisher

- technology and the military

- read

- Microsoft

- social media machine guns

- access journalism

-

- Jan 2024

-

www.kickstarter.com

-

blogs.cornell.edu

-

ᔥ[[Doug Belshaw]] in I am so tired of moving platforms

-

The Evaporative Cooling Effect describes the phenomenon that high value contributors leave a community because they cannot gain something from it, which leads to the decrease of the quality of the community. Since the people most likely to join a community are those whose quality is below the average quality of the community, these newcomers are very likely to harm the quality of the community. With the expansion of community, it is very hard to maintain the quality of the community.

via a reference to Xianhang Zhang in Social Software Sundays #2 – The Evaporative Cooling Effect « Bumblebee Labs Blog [archived], who saw it via [[Eliezer Yudkowsky]] in Evaporative Cooling of Group Beliefs

-

-

dougbelshaw.com

-

By its very nature, moderation is a form of censorship. You, as a community, space, or platform are deciding who and what is unacceptable. In Substack’s case, for example, they don’t allow pornography but they do allow Nazis. That’s not “free speech” but rather a business decision. If you’re making moderation based on financials, fine, but say so. Then platform users can make choices appropriately.

-

- Dec 2023

-

harpers.org

-

One could easily replace World War I and the idea of war here with social media/media, and the essay broadly reads well today.

-

-

erinkissane.com

-

Untangling Threads by Erin Kissane

-

-

Local file

-

I dislike isolated events and disconnected details. I really hate statements, views, prejudices, and beliefs that jump at you suddenly out of mid-air.

Wells would really hate social media, which he seems to have perfectly defined with this statement.

-

-

erinkissane.com

-

Untangling Threads by Erin Kissane on 2023-12-21

This immediately brings up questions about the following: - founder effects and being overwhelmed by the scale of an eternal September - the communism of community interactions being subverted and bent to the purposes of (surveillance) capitalism (see @Graeber2011, Debt)

-

-

munk.org

-

On the “Death” of the Typosphere, a Few Thoughts and Ideas by Ted Munk on 2018-06-02

TTSSASTT = To Type, Shoot Straight, and Speak the Truth…

-

-

clalliance.org

-

In my book Technology’s Child: Digital Media’s Role in the Ages and Stages of Growing Up, I explore how the design of platforms and the way people engage with those designs helps to shape the cultures that emerge on different social media platforms. I propose three layers for understanding this process.

-

-

handwiki.org

-

tracydurnell.com

-

While social media emphasizes the show-off stuff — the vacation in Puerto Vallarta, the full kitchen remodel, the night out on the town — on blogs it still seems that people are sharing more than signalling.

Yes!

-

- Nov 2023

-

books.openbookpublishers.com

-

In contrast, media ecologists focus on understanding media as environments and how those environments affect society.

The World Wide Web takes on an ecological identity in that it is defined by the ecology of relationships exercised within it, which determines the "environmental" aspects of the online world. What of media ecology and its impact on Earth's ecology? There are climate change ramifications simply in the use of social media itself, let alone the influences or behaviors associated with it: here is a carbon emissions calculator for seemingly "innocent" internet use:

-

- Oct 2023

-

snarfed.org

-

Following the Not So Online by Ryan Barrett

-

-

abcnews.go.com

-

Everyone is super ambitious and that creates a little bit of a toxic environment where people feel like it's a very comparative space

Competitive in what way? Grades? Jobs? Finances? Material things? Relationships?

-

"And I think social media turbocharged us all of this.

wow...tell me more.

-

-

www.theverge.com

-

Managing a half-dozen identities on a half-dozen platforms is too much work!

-

-

danallosso.substack.com

-

In both cases, it's up to us now to discipline ourselves to avoid the fats in junk food, and the breaking news and dopamine thrill-ride of social media.

A nice encapsulation of evolutionary challenges that humans are facing.

-

-

delong.typepad.com

-

Television, radio, and all the sources of amusement and information that surround us in our daily lives are also artificial props. They can give us the impression that our minds are active, because we are required to react to stimuli from outside. But the power of those external stimuli to keep us going is limited. They are like drugs. We grow used to them, and we continuously need more and more of them. Eventually, they have little or no effect. Then, if we lack resources within ourselves, we cease to grow intellectually, morally, and spiritually. And when we cease to grow, we begin to die.

One could argue that Adler and Van Doren would lump social media into the sources of amusement category.

-

-

bryanalexander.org

- Sep 2023

-

www.wired.com

-

DiResta, Renee. “Free Speech Is Not the Same As Free Reach.” Wired, August 30, 2018. https://www.wired.com/story/free-speech-is-not-the-same-as-free-reach/.

-

-

jonathanhaidt.com

-

-

for: annotate, annotate - social media, progress trap - social media

-

source: connectathon 2023 09 23

- session on social media

-

-

-

docdrop.org

-

- for: doppleganger, conflict resolution, deep humanity, common denominators, CHD, Douglas Rushkoff, Naomi Klein, Into the Mirror World, conspiracy theory, conspiracy theories, conspiracy culture, nonduality, self-other, human interbeing, polycrisis, othering, storytelling, myth-making, social media amplifier

- summary

- This conversation was insightful on so many dimensions salient to the polycrisis humanity is moving through.

- It makes me think of the old cliches:

- "The more things change, the more they remain the same"

- "What's old is new"

- "History repeats"

- the conversation explores Naomi's latest book (as of this podcast), Into the Mirror World, in which Naomi adopts a different style of writing to explicate, articulate and give voice to

- implicit and tacit discomforting ideas and feelings she experienced during covid and earlier, and

- became a focal point through a personal comparative analysis with another female author and thought leader, Naomi Wolf,

- a feminist writer who ended up being rejected by mainstream media and turned to right wing media.

- The conversation explores the process of:

- othering,

- coopting and

- abandoning

- of ideas important for personal and social wellbeing.

- and speaks to the need to identify what is going on and to reclaim those ideas for the sake of humanity

- In this context, doppelgangers are the people who are mirror-like images of ourselves, but on the other side of polarized issues.

- Charismatic leaders who are bad actors often are good at identifying the suffering of the masses, and coopt the ideas of good actors to serve their own ends of self-enrichment.

- There are real world conspiracies that have caused significant societal harm, and still do,

- however, when there are phenomena of which we have no direct sense experience, the mixture of

- a sense of helplessness,

- anger emerging from injustice

- a charismatic leader proposing a concrete, possible but explanatory theory

- is a powerful story whose mythology can be reified by many people believing it

- Another cliche springs to mind

- A lie told a hundred times becomes a truth

- hence the amplifying role of social media

- When we think about where this phenomenon manifests, we find it everywhere:

Tags

- conflict resolution

- storytellilng

- myth-making

- Into the Mirror World

- doppleganger

- polycrisis

- CHD

- othering

- common denominators

- Douglas Rushkoff

- social media amplifier

- conspiracy theories

- conspiracy theory

- human interbeing

- conspiracy culture

- Deep Humanity

- nonduality

- self-other entanglement

- Naomi Klein

-

- Aug 2023

-

factr.com

-

A social network for "organizing and sharing your knowledge".

-

-

Local file

-

T9 (text prediction):generative AI::handgun:machine gun

-

-

www.pewresearch.org

-

I do expect new social platforms to emerge that focus on privacy and ‘fake-free’ information, or at least they will claim to be so. Proving that to a jaded public will be a challenge. Resisting the temptation to exploit all that data will be extremely hard. And how to pay for it all? If it is subscriber-paid, then only the wealthy will be able to afford it.

- for: quote, quote - Sam Adams, quote - social media

- quote, indyweb - support, people-centered

- I do expect new social platforms to emerge that focus on privacy and ‘fake-free’ information, or at least they will claim to be so.

- Proving that to a jaded public will be a challenge.

- Resisting the temptation to exploit all that data will be extremely hard.

- And how to pay for it all?

- If it is subscriber-paid, then only the wealthy will be able to afford it.

- author: Sam Adams

- 24-year IBM veteran and senior research scientist in AI at RTI International, working on national-scale knowledge graphs for global good

- comment

- his comment about exploiting all that data is based on an assumption

- a centralized, server data model

- this doesn't hold true with a people-centered, person-owned data network such as Indyweb

-

Will members-only, perhaps subscription-based ‘online communities’ reemerge instead of ‘post and we’ll sell your data’ forms of social media? I hope so, but at this point a giant investment would be needed to counter the mega-billions of companies like Facebook!

- for: quote, quote - Janet Salmons, quote - online communities, quote - social media, indyweb - support

- paraphrase

- Will members-only, perhaps subscription-based ‘online communities’ reemerge instead of

- ‘post and we’ll sell your data’ forms of social media?

- I hope so, but at this point a giant investment would be needed to counter the mega-billions of companies like Facebook!

-

-

www.sciencedirect.com

-

David E. Williams, Spencer P. Greenhalgh. (2022). Pseudonymous academics: Authentic tales from the Twitter trenches. The Internet and Higher Education. Volume 55, October 2022 https://doi.org/10.1016/j.iheduc.2022.100870

-

-

www.pewresearch.org

-

The big tech companies, left to their own devices (so to speak), have already had a net negative effect on societies worldwide. At the moment, the three big threats these companies pose – aggressive surveillance, arbitrary suppression of content (the censorship problem), and the subtle manipulation of thoughts, behaviors, votes, purchases, attitudes and beliefs – are unchecked worldwide

- for: quote, quote - Robert Epstein, quote - search engine bias,quote - future of democracy, quote - tilting elections, quote - progress trap, progress trap, cultural evolution, technology - futures, futures - technology, progress trap, indyweb - support, future - education

- quote

- The big tech companies, left to their own devices, have already had a net negative effect on societies worldwide.

- At the moment, the three big threats these companies pose

- aggressive surveillance,

- arbitrary suppression of content (the censorship problem), and

- the subtle manipulation of

- thoughts,

- behaviors,

- votes,

- purchases,

- attitudes and

- beliefs

- are unchecked worldwide

- author: Robert Epstein

- senior research psychologist at American Institute for Behavioral Research and Technology

- paraphrase

- Epstein's organization is building two technologies that assist in combating these problems:

- systems that passively monitor what big tech companies are showing people online, and

- smart algorithms that will ultimately be able to identify online manipulations in realtime:

- biased search results,

- biased search suggestions,

- biased newsfeeds,

- platform-generated targeted messages,

- platform-engineered virality,

- shadow-banning,

- email suppression, etc.

- Tech evolves too quickly to be managed by laws and regulations,

- but monitoring systems are tech, and they can and will be used to curtail the destructive and dangerous powers of companies like Google and Facebook on an ongoing basis.

- reference

- seminar paper on monitoring systems, ‘Taming Big Tech -: https://is.gd/K4caTW.

Tags

- quote - election bias

- quote - mind control

- progress trap - Google

- progress trap - search engine

- search engine manipulation effect

- quote - tilting elections

- quote

- progress trap - digital technology

- search engine bias

- progress trap - social media

- SEME

- quote -search engine manipulation effect

- quote - progress trap

- quote SEME

- progress trap

- quote - Robert Epstein

-

-

hackernoon.com

-

- for: titling elections, voting - social media, voting - search engine bias, SEME, search engine manipulation effect, Robert Epstein

- summary

- research showing how search engines can bias results toward a political candidate and tilt an election in favor of a particular party.

-

In our early experiments, reported by The Washington Post in March 2013, we discovered that Google’s search engine had the power to shift the percentage of undecided voters supporting a political candidate by a substantial margin without anyone knowing.

- for: search engine manipulation effect, SEME, voting, voting - bias, voting - manipulation, voting - search engine bias, democracy - search engine bias, quote, quote - Robert Epstein, quote - search engine bias, stats, stats - tilting elections

- paraphrase

- quote

- In our early experiments, reported by The Washington Post in March 2013,

- we discovered that Google’s search engine had the power to shift the percentage of undecided voters supporting a political candidate by a substantial margin without anyone knowing.

- 2015 PNAS research on SEME

- http://www.pnas.org/content/112/33/E4512.full.pdf?with-ds=yes&ref=hackernoon.com

- stats begin

- search results favoring one candidate

- could easily shift the opinions and voting preferences of real voters in real elections by up to 80 percent in some demographic groups

- with virtually no one knowing they had been manipulated.

- stats end

- Worse still, the few people who had noticed that we were showing them biased search results

- generally shifted even farther in the direction of the bias,

- so being able to spot favoritism in search results is no protection against it.

- stats begin

- Google’s search engine

- with or without any deliberate planning by Google employees

- was currently determining the outcomes of upwards of 25 percent of the world’s national elections.

- This is because Google’s search engine lacks an equal-time rule,

- so it virtually always favors one candidate over another, and that in turn shifts the preferences of undecided voters.

- Because many elections are very close, shifting the preferences of undecided voters can easily tip the outcome.

- stats end

-

What if, early in the morning on Election Day in 2016, Mark Zuckerberg had used Facebook to broadcast “go-out-and-vote” reminders just to supporters of Hillary Clinton? Extrapolating from Facebook’s own published data, that might have given Mrs. Clinton a boost of 450,000 votes or more, with no one but Mr. Zuckerberg and a few cronies knowing about the manipulation.

- for: Hiliary Clinton could have won, voting, democracy, voting - social media, democracy - social media, election - social media, facebook - election, 2016 US elections, 2016 Trump election, 2016 US election, 2016 US election - different results, 2016 election - social media

- interesting fact

- If Facebook had sent a "Go out and vote" message on Election Day 2016 only to Clinton supporters, Clinton might have gained 450,000 or more additional votes

- and the outcome of the election might have been different

Tags

- Robert Epstein

- PNAS SEME study

- 2016 US election

- Trump could have lost

- democracy - search engine bias

- Washington Post story - search engine bias

- Hilary Clinton could have won

- search engine manipulation effect

- voting

- search engine bias

- voting - social media

- elections - interference

- quote

- stats - tilting elections

- stats

- quote - Robert Epstein

- democracy

- quote - search engine bias

- SEME

- elections - bias

- election - social media

- facebook - election

- democracy - social media

- 2016 US election - different results

- voting - search engine bias

-

- Jul 2023

-

acecomments.mu.nu acecomments.mu.nu

-

As Threads "soars", Bluesky and Mastodon are adopting algorithmic feeds. (Tech Crunch) You will eat the bugs. You will live in the pod. You will read what we tell you. You will own nothing and we don't much care if you are happy.

Applying the WEF meme about pods and bugs to Threads inspiring Bluesky and one Mastodon app to push algorithmic feeds.

-

-

academic.oup.com academic.oup.com

-

specific uses of the technology help develop what we call “relational confidence,” or the confidence that one has a close enough relationship to a colleague to ask and get needed knowledge. With greater relational confidence, knowledge sharing is more successful.

-

-

euobserver.com euobserver.com

-

Not that an E2E rule precludes algorithmic feeds: remember, E2E is the idea that you see what you ask to see. If a user opts into a feed that promotes content that they haven't subscribed to at the expense of the things they explicitly asked to see, that's their choice. But it's not a choice that social media services reliably offer, which is how they are able to extract ransom payments from publishers.

I don't understand how you could audit this unless services were forced to default to a chronological presentation of posts, etc.
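
One way to frame the audit is as a check that nothing a user subscribed to was silently dropped. The following is a minimal sketch that assumes a simplified post record. The `Post` class and the `chronological_feed` and `missing_from_feed` helpers are hypothetical and do not come from any platform's API. The sketch builds the chronological, subscriptions-only baseline and lists the subscribed posts that are missing from a served feed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    # Hypothetical post record: an id, the author, and a Unix timestamp.
    post_id: str
    author: str
    created_at: int


def chronological_feed(posts, subscriptions):
    """Posts from subscribed authors only, newest first (the E2E baseline)."""
    wanted = [p for p in posts if p.author in subscriptions]
    return sorted(wanted, key=lambda p: p.created_at, reverse=True)


def missing_from_feed(served_feed, posts, subscriptions):
    """Subscribed posts that a served (possibly algorithmic) feed left out."""
    served_ids = {p.post_id for p in served_feed}
    return [p for p in chronological_feed(posts, subscriptions)
            if p.post_id not in served_ids]


posts = [
    Post("a1", "alice", 100),
    Post("b1", "bob", 200),
    Post("x1", "brand", 300),  # not subscribed: a promoted post
    Post("a2", "alice", 400),
]
subs = {"alice", "bob"}
# A ranked feed that promotes the unsubscribed post and drops b1.
served = [posts[2], posts[3], posts[0]]
print([p.post_id for p in chronological_feed(posts, subs)])        # ['a2', 'b1', 'a1']
print([p.post_id for p in missing_from_feed(served, posts, subs)])  # ['b1']
```

The check deliberately ignores ordering. A ranked feed that adds unsubscribed posts still passes, provided every subscribed post is delivered. This matches the opt-in framing of E2E in the quoted passage.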

-

-

www.youtube.com www.youtube.com

-

the folly of endless blah, blah, blah: people view the mind as a big boy, while in reality it is a little boy who is undisciplined, goes on random rants and tangents, and likes and dislikes everything it sees on social media

-

-

babylonbee.com babylonbee.com

-

"After years of research, our engineers have created a revolution in social media technology: a Twitter clone on Instagram that offers the absolute worst of both worlds," said a VR headset-wearing Zuckerberg in an address to dozens of friends in the Metaverse. "At long last, you can read caustic hot takes written by talentless idiots, while still enjoying oppressive censorship and sepia-toned thirst traps from yoga pants models with obnoxious lip injections. You're welcome!"

Babylon Bee article with made up Mark Zuckerberg quote touting the virtues of Threads. This is some of the Bee's finest writing and not at all inaccurate.

-

- Jun 2023

-

www.youtube.com www.youtube.com

-

(14:20-19:00) Andrew Huberman explains dopamine prediction error as follows. When we anticipate something exciting, dopamine levels rise and keep rising, but if we then fail, dopamine drops below baseline. This sharply reduces motivation and drive and sometimes even makes us sad. When we succeed, however, dopamine rises even higher, significantly increasing our drive and motivation. This is why successes build on each other, and why celebrating "marginal gains" is a powerful tool for building momentum and actually making progress. Surprise amplifies the effect further: an unanticipated reward produces a big dopamine hit.
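
A common model in computational neuroscience treats phasic dopamine as signaling a reward prediction error: the difference between the reward received and the reward expected. The following is a minimal sketch using the textbook Rescorla-Wagner update. It is an illustrative assumption, not something Huberman presents. The learning rate `alpha = 0.3` and the reward values are made-up placeholders.

```python
def prediction_error(reward: float, expected: float) -> float:
    """Reward prediction error: positive when an outcome beats the expectation."""
    return reward - expected


def update_expectation(expected: float, reward: float, alpha: float = 0.3) -> float:
    """Rescorla-Wagner update: nudge the expectation toward the actual outcome."""
    return expected + alpha * prediction_error(reward, expected)


# Surprise: a reward nobody expected gives the largest positive error.
print(prediction_error(reward=1.0, expected=0.0))            # 1.0
# An anticipated reward that arrives gives a smaller positive error.
print(round(prediction_error(reward=1.0, expected=0.8), 2))  # 0.2
# An anticipated reward that never comes gives a negative error ("below baseline").
print(prediction_error(reward=0.0, expected=0.8))            # -0.8

# Repeating the same reward shrinks the error as the expectation catches up.
v = 0.0
for trial in range(5):
    print(trial, round(prediction_error(1.0, v), 3))
    v = update_expectation(v, 1.0)
```

In this toy model, a predictable reward loses its punch over repeated trials. A surprising or intermittent one keeps producing large positive errors.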

Social media algorithms make heavy use of this principle, thereby enslaving their users, particularly on infinite-scrolling platforms such as TikTok. Your dopamine rises as you look for the one thing you like. It drops because that golden nugget doesn't always appear, and it rises again when you occasionally find it. This contrast creates an illusion of enjoyment and traps users in an endless search for great content, especially short-form content. It wastes your time remarkably effectively. This connects to the success mindset of preferring delayed gratification over instant gratification.

It would be useful to reflect on your dopamine baseline: what actually raises and lowers your dopamine, and whether those things help you achieve your ambitions. Your baseline can become high when your dopamine circuitry grows used to big hits from activities such as playing games, watching short-form content, or watching porn. A high baseline decreases your ability to focus for long stretches (your attention span) and, by extension, your ability to learn and eventually succeed. Studying and learning can actually be fun if your dopamine levels are managed properly, meaning you don't often engage in very high-dopamine activities. You want your brain to be used to the low amounts of dopamine that studying provides. Kolb's experiential learning cycle offers a framework for this reflection.

A short-term dopamine reset is to stay away from the tool or device for about half an hour to an hour, or to do non-sleep deep rest (NSDR). However, this is not a long-term solution.

-

-

www.marginalia.nu www.marginalia.nu

- May 2023

-

nostr.com nostr.com

-

Nostr is a simple, open protocol that enables global, decentralized, and censorship-resistant social media.

Peter Kominski likes this generally.
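
To give a sense of what "simple" means here: every Nostr post is a JSON event whose id is a hash of its contents, so any relay or client can check it independently. Below is a minimal sketch of the id computation as described in NIP-01, the protocol's base specification. The key, timestamp, and content are made-up placeholders. Signing (a Schnorr signature over secp256k1) is omitted because it needs a third-party library. NIP-01's escaping rules for unusual control characters may also differ slightly from Python's `json` defaults.

```python
import hashlib
import json


def nostr_event_id(pubkey_hex: str, created_at: int, kind: int,
                   tags: list, content: str) -> str:
    """Event id per NIP-01 (sketch): SHA-256 of the compact UTF-8 JSON array
    [0, pubkey, created_at, kind, tags, content], as lowercase hex."""
    serialized = json.dumps(
        [0, pubkey_hex, created_at, kind, tags, content],
        separators=(",", ":"),  # no whitespace between tokens
        ensure_ascii=False,     # keep non-ASCII characters as raw UTF-8
    )
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


# Hypothetical values: a made-up 32-byte public key and a kind-1 text note.
event_id = nostr_event_id(
    pubkey_hex="ab" * 32,
    created_at=1683000000,
    kind=1,
    tags=[],
    content="Hello, decentralized world!",
)
print(event_id)  # 64 lowercase hex characters
```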

-

-

-

Trakt Data Recovery. IMPORTANT: On December 11 at 7:30 pm PST our main database crashed and corrupted some of the data. We're deeply sorry for the extended downtime and we'll do better moving forward. Updates to our automated backups are already in place and they will be tested on an ongoing basis. Data prior to November 7 is fully restored. Watched history between November 7 and December 11 has been recovered. There is a separate message on your dashboard allowing you to review and import any recovered data. All other data (besides watched history) after November 7 has already been restored and imported. Some data might be permanently lost due to data corruption. Trakt API is back online as of December 20. Active VIP members will get 2 free months added to their expiration date.

From late 2022

-

-

atomicbooks.com atomicbooks.com

-

https://atomicbooks.com/pages/john-waters-fan-mail

John Waters receives fan mail via Atomic Books in Baltimore, MD.

-

-

firesky.tv firesky.tv Firesky

- Apr 2023

-

www.reddit.com www.reddit.com

-

Benefits of sharing permanent notes

reply to u/bestlunchtoday at https://www.reddit.com/r/Zettelkasten/comments/12gadut/benefits_of_sharing_permanent_notes/

I love the diversity of ideas here! So many different ways to do it all and perspectives on the pros/cons. It's all incredibly idiosyncratic, just like our notes.

I probably default to a far extreme of sharing the vast majority of my notes openly to the public (at least the ones taken digitally which account for probably 95%). You can find them here: https://hypothes.is/users/chrisaldrich.

Not many people notice or care, but I do know that a small handful follow and occasionally reply to them or email me questions. One or two people actually subscribe to them via RSS, and at least one has said that they know more about me, what I'm reading, what I'm interested in, and who I am by reading these over time. (I also personally follow a handful of people and tags there myself.) Some have remarked at how they appreciate watching my notes over time and then seeing the longer writing pieces they were integrated into. Some novice note takers have mentioned how much they appreciate being able to watch such a process of note taking turned into composition as examples which they might follow. Some just like a particular niche topic and follow it as a tag (so if you were interested in zettelkasten perhaps?) Why should I hide my conversation with the authors I read, or with my own zettelkasten unless it really needed to be private? Couldn't/shouldn't it all be part of "The Great Conversation"? The tougher part may be having means of appropriately focusing on and sharing this conversation without some of the ills and attention economy practices which plague the social space presently.

There are a few notes here on this post that talk about social media and how this plays a role in making them public or not. I suppose that if I were putting it all on a popular platform like Twitter or Instagram then the use of the notes would be or could be considered more performative. Since mine are on what I would call a very quiet pseudo-social network, but one specifically intended for note taking, they tend to be far less performative in nature and the majority of the focus is solely on what I want to make and use them for. I have the opportunity and ability to make some private and occasionally do so. Perhaps if the traffic and notice of them became more prominent I would change my habits, but generally it has been a net positive to have put my sensemaking out into the public, though I will admit that I have a lot of privilege to be able to do so.

Of course for those who just want my longer form stuff, there's a website/blog for that, though personally I think all the fun ideas at the bleeding edge are in my notes.

Since some (u/deafpolygon, u/Magnifico99, and u/thiefspy; cc: u/FastSascha, u/A_Dull_Significance) have mentioned social media, Instagram, and journalists, I'll share a relevant old note with an example, which is also simultaneously an example of the benefit of having public notes to be able to point at, which u/PantsMcFail2 also does here with one of Andy Matuschak's public notes:

[Prominent] Journalist John Dickerson indicates that he uses Instagram as a commonplace: https://www.instagram.com/jfdlibrary/ here he keeps a collection of photo "cards" with quotes from famous people rather than photos. He also keeps collections there of photos of notes from scraps of paper as well as photos of annotations he makes in books.

It's reasonably well known that Ronald Reagan shared some of his personal notes and collected quotations with his speechwriting staff while he was President. I would say that this and other similar examples of collaborative zettelkasten or collaborative note taking and their uses would blunt u/deafpolygon's argument that shared notes (online or otherwise) are either just (or only) a wiki. The forms are somewhat similar, but not all exactly the same. I suspect others could add to these examples.

And of course if you've been following along with all of my links, you'll have found yourself reading not only these words here, but also reading some of a directed conversation with entry points into my own personal zettelkasten, which you can also query as you like. I hope it has helped to increase the depth and level of the conversation, should you choose to enter into it. It's an open enough one that folks can pick and choose their own path through it as their interests dictate.

-

-

on.substack.com on.substack.com

-

Introducing Substack Notes<br /> by Hamish McKenzie, Chris Best, Jairaj Sethi

-

In Notes, writers will be able to post short-form content and share ideas with each other and their readers. Like our Recommendations feature, Notes is designed to drive discovery across Substack. But while Recommendations lets writers promote publications, Notes will give them the ability to recommend almost anything—including posts, quotes, comments, images, and links.

Substack slowly adding features and functionality to make them a full stack blogging/social platform... first long form, then short note features...

Also eating into Twitter's lunch while Twitter is having issues.

-

- Mar 2023

-

web.archive.org web.archive.org

-

The sheer volume exceeds what book publication can accommodate. The complex, multidimensional structure of a networked information base cannot be reproduced in print. Finally, the dynamics of a material that is constantly growing and constantly in need of correction do not fit the rigid rhythm of book production, in which every expanded and corrected new edition involves enormous effort. A book publication could only ever offer a snapshot of such a database, reduced to a particular perspective. That too can be very useful now and then, but it does not solve the problem of publishing the material as a whole.

link to https://hypothes.is/a/U95jEs0eEe20EUesAtKcuA

Is this phenomenon of "complex narratives" related to misinformation spread within the larger and more complex social network/online network? At small, local scales, people know how to handle data and information which is locally contextualized for them. On larger internet-scale communication social platforms this sort of contextualization breaks down.

For lack of a better term, let's temporarily refer to it as "complex narratives" to get a handle on it.

-

-

www.ndss-symposium.org www.ndss-symposium.org

- Feb 2023

-

-

Related here is the horcrux problem of note taking or even social media. The mental friction of where did I put that thing? As a result, it's best to put it all in one place.

How can you build on a single foundation if you're in multiple locations? The primary (only?) benefit of multiple locations is redundancy in case of loss.

Ryan Holiday and Robert Greene are counterexamples, though Greene's books are generally distinct projects while Holiday's work has a lot of overlap.

-

-

aeon.co aeon.co

-

If Seneca or Martial were around today, they would probably write sarcastic epigrams about the very public exhibition of reading text messages and in-your-face displays of texting. Digital reading, like the perusing of ancient scrolls, constitutes an important statement about who we are. Like the public readers of Martial’s Rome, the avid readers of text messages and other forms of social media appear to be everywhere. Though in both cases the performers of reading are tirelessly constructing their self-image, the identity they aspire to establish is very different. Young people sitting in a bar checking their phones for texts are not making a statement about their refined literary status. They are signalling that they are connected and – most importantly – that their attention is in constant demand.

-

-

www.washingtonpost.com www.washingtonpost.com

-

Internet ‘algospeak’ is changing our language in real time, from ‘nip nops’ to ‘le dollar bean’ by [[Taylor Lorenz]]

shifts in language and meaning of words and symbols as the result of algorithmic content moderation

instead of slow semantic shifts, content moderation is actively pushing shifts of words and their meanings

article suggested by this week's Dan Allosso Book club on Pirate Enlightenment

-

Could it be the shift from person-to-person communication (known in both directions) to massive broadcast that is driving issues with content moderation? When communication is person to person, one can simply choose not to interact and put the person beyond one's individual pale. This sort of shunning is much harder to do with larger mass publics at scale in broadcast mode.

How can bringing content moderation back down to the neighborhood scale help in the broadcast model?

-

In January, Kendra Calhoun, a postdoctoral researcher in linguistic anthropology at UCLA, and Alexia Fawcett, a doctoral student in linguistics at UC Santa Barbara, gave a presentation about language on TikTok. They outlined how, by self-censoring words in the captions of TikToks, new algospeak code words emerged.

follow up on this for the relevant forthcoming paper....

-

“It makes me feel like I need a disclaimer because I feel like it makes you seem unprofessional to have these weirdly spelled words in your captions,” she said, “especially for content that's supposed to be serious and medically inclined.”

Where's the balance for professionalism with respect to dodging the algorithmic filters for serious health-related conversations online?

-

But algorithmic content moderation systems are more pervasive on the modern Internet, and often end up silencing marginalized communities and important discussions.

What about non-marginalized toxic communities like Neo-Nazis?

-

Unlike other mainstream social platforms, the primary way content is distributed on TikTok is through an algorithmically curated “For You” page; having followers doesn’t guarantee people will see your content. This shift has led average users to tailor their videos primarily toward the algorithm, rather than a following, which means abiding by content moderation rules is more crucial than ever.

Social media has slowly moved away from communication between people who know each other toward communication between people who are farther apart in social space. Increasingly from 2021 onward, some platforms like TikTok have acted as distribution platforms. They ignore explicit social connections like follower/followee in favor of algorithmic-only feeds, which distribute content to people based on a variety of criteria, including the popularity of the content and the readers' interests.

Tags

- human computer interaction

- social media

- marginalized groups

- misinformation

- dialects

- cultural anthropology

- colloquialisms

- leetspeak

- broadcasting models

- social media history

- public health

- algospeak

- content moderation

- Voldemorting

- cancel culture

- demonitization

- Alexia Fawcett

- beyond the pale

- cultural taboos

- coded language

- historical linguistics

- TikTok

- dialect creation

- linguistics

- Kendra Calhoun

- health care

- social media machine guns

- neo-Nazis

- algorithmic feeds

- shunning

- euphemisms

-

-

www.thecrimson.com www.thecrimson.com

-

https://www.thecrimson.com/article/2023/2/2/donovan-forced-leave-hks/

This is a massive loss for HKS, but a potential major win for the school that picks the project up.

It seems to be a sad use of "rules" to shut down a project which may not jibe with an administration's perspective/needs.

Read on Fri 2023-02-03 at 7:14 PM

-

-

www.heise.de www.heise.de

-

One could also take the whole situation as an occasion to consider whether we really need any of it. Is the benefit of social media so great that it justifies the price? That is a question I have been asking myself since I shut down my personal Twitter account. At least for me, it doesn't feel all that wrong to no longer be on Twitter, Mastodon & Co. Perhaps such a service simply had its time, perhaps we overestimate the relevance of social media, and perhaps it would be good to keep more distance from it.

-

-

www.reddit.com www.reddit.com

-

One can find utility in asking questions of their own note box, but why not also leverage the utility of a broader audience asking questions of it as well?!

One of the values of social media is that it can allow you to practice or rehearse the potential value of ideas and potentially getting useful feedback on individual ideas which you may be aggregating into larger works.

-

- Jan 2023

-

twitterisgoinggreat.com twitterisgoinggreat.com

-

ncase.itch.io ncase.itch.io

-

We become what we behold, a game by Nicky Case.

A commentary on news cycles and social media.

-

-

cohost.org cohost.org cohost!

-

social media platform

This technical jargon, in the context of Cohost.org, means "a website".

-

-

-

is zettelkasten gamification of note-taking?

reply to u/theinvertedform at https://www.reddit.com/r/Zettelkasten/comments/zkguan/is_zettelkasten_gamification_of_notetaking/

Social media and "influencers" have certainly grabbed onto the idea and squeezed with both hands. While talking about their own versions of rules, tips, tricks, and tools, they've broadly missed the massive history of the broader techniques which have pervaded the humanities for over 500 years. When one looks more deeply at the broader cross section of writers, educators, philosophers, and academics who have used variations on the idea of maintaining notebooks or commonplace books, it becomes a relative no-brainer that it is a useful tool. I touch on some of the history as well as some of the recent commercialization here: https://boffosocko.com/2022/10/22/the-two-definitions-of-zettelkasten/.

-

- Dec 2022

-

arstechnica.com arstechnica.com

-

"Queer people built the Fediverse," she said, adding that four of the five authors of the ActivityPub standard identify as queer. As a result, protections against undesired interaction are built into ActivityPub and the various front ends. Systems for blocking entire instances with a culture of trolling can save users the exhausting process of blocking one troll at a time. If a post includes a “summary” field, Mastodon uses that summary as a content warning.
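
The following is a minimal sketch of the two protections described above. It assumes simplified ActivityStreams `Note` objects represented as Python dicts. `type`, `attributedTo`, `summary`, and `content` are real ActivityStreams properties. The blocklist and the `render` and `build_timeline` helpers are hypothetical and far simpler than Mastodon's actual implementation. The sketch filters out a whole instance by domain and shows a non-empty `summary` in place of the body as a content warning.

```python
from urllib.parse import urlparse

# Hypothetical instance-level blocklist kept by a server admin.
BLOCKED_INSTANCES = {"troll.example"}


def is_from_blocked_instance(note: dict) -> bool:
    """True if the note's author lives on a blocked instance (domain-level block)."""
    host = urlparse(note["attributedTo"]).hostname or ""
    return host in BLOCKED_INSTANCES


def render(note: dict) -> str:
    """Show the summary as a content warning instead of the body when present."""
    summary = note.get("summary")
    if summary:
        return f"[CW: {summary}] (click to expand)"
    return note["content"]


def build_timeline(notes: list) -> list:
    """Drop every note from a blocked instance, then render the rest."""
    return [render(n) for n in notes if not is_from_blocked_instance(n)]


notes = [
    {"type": "Note", "attributedTo": "https://kind.example/users/ana",
     "summary": "medical details", "content": "<p>My surgery recap...</p>"},
    {"type": "Note", "attributedTo": "https://troll.example/users/x",
     "content": "<p>bait</p>"},
    {"type": "Note", "attributedTo": "https://kind.example/users/bo",
     "content": "<p>Good morning!</p>"},
]
for line in build_timeline(notes):
    print(line)
```

Blocking at the domain level removes every account on that instance in one step. That is the saving over blocking trolls one at a time that the passage describes.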

-

-

fedvte.usalearning.gov fedvte.usalearning.gov

-

- Investigating social structures through the use of networks or graphs

- Networked structures: usually called nodes (individual actors, people, or things within the network)

- Connections between nodes: edges or links

- Focus on relationships between actors in addition to the attributes of actors

- Extensively used in mapping out social networks (Twitter, Facebook)

- Examples: Palantir, Analyst's Notebook, MISP, and Maltego
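
To make the vocabulary concrete, here is a minimal sketch of how nodes, their attributes, and the edges between them might be represented in plain Python. The actors are made up. Real tools like those listed above use far richer data models.

```python
from collections import defaultdict

# Nodes: actors, each carrying its own attributes.
nodes = {
    "ana": {"kind": "person"},
    "bo": {"kind": "person"},
    "news_site": {"kind": "organization"},
}

# Edges (links): relationships between actors, here an undirected "knows".
edges = [("ana", "bo"), ("bo", "news_site"), ("ana", "news_site")]

# Build an undirected adjacency list from the edge list.
neighbors = defaultdict(set)
for a, b in edges:
    neighbors[a].add(b)
    neighbors[b].add(a)

# Analysis can draw on relationships (who connects to whom) as well as attributes.
for name, attrs in nodes.items():
    print(name, attrs["kind"], sorted(neighbors[name]))
```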

-

-

www.sciencedirect.com www.sciencedirect.com

-

Drawing from negativity bias theory, CFM, ICM, and arousal theory, this study characterizes the emotional responses of social media users and verifies how emotional factors affect the number of reposts of social media content after two natural disasters (predictable and unpredictable disasters). In addition, results from defining the influential users as those with many followers and high activity users and then characterizing how they affect the number of reposts after natural disasters

-

-

psycnet.apa.org psycnet.apa.org

-

Using actual fake-news headlines presented as they were seen on Facebook, we show that even a single exposure increases subsequent perceptions of accuracy, both within the same session and after a week. Moreover, this “illusory truth effect” for fake-news headlines occurs despite a low level of overall believability and even when the stories are labeled as contested by fact checkers or are inconsistent with the reader’s political ideology. These results suggest that social media platforms help to incubate belief in blatantly false news stories and that tagging such stories as disputed is not an effective solution to this problem.

-

-

www.nature.com www.nature.com

-

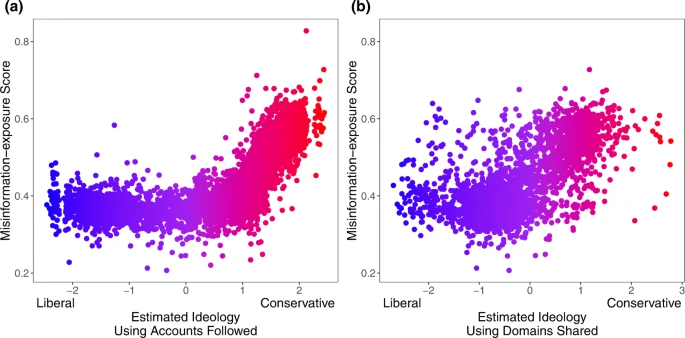

Furthermore, our results add to the growing body of literature documenting, at least at this historical moment, the link between extreme right-wing ideology and misinformation [8, 14, 24] (although, of course, factors other than ideology are also associated with misinformation sharing, such as polarization [25] and inattention [17, 37]).

Misinformation exposure and extreme right-wing ideology appear associated in this report. Others find that it is partisanship that predicts susceptibility.

-

We also find evidence of “falsehood echo chambers”, where users that are more often exposed to misinformation are more likely to follow a similar set of accounts and share from a similar set of domains. These results are interesting in the context of evidence that political echo chambers are not prevalent, as typically imagined

-

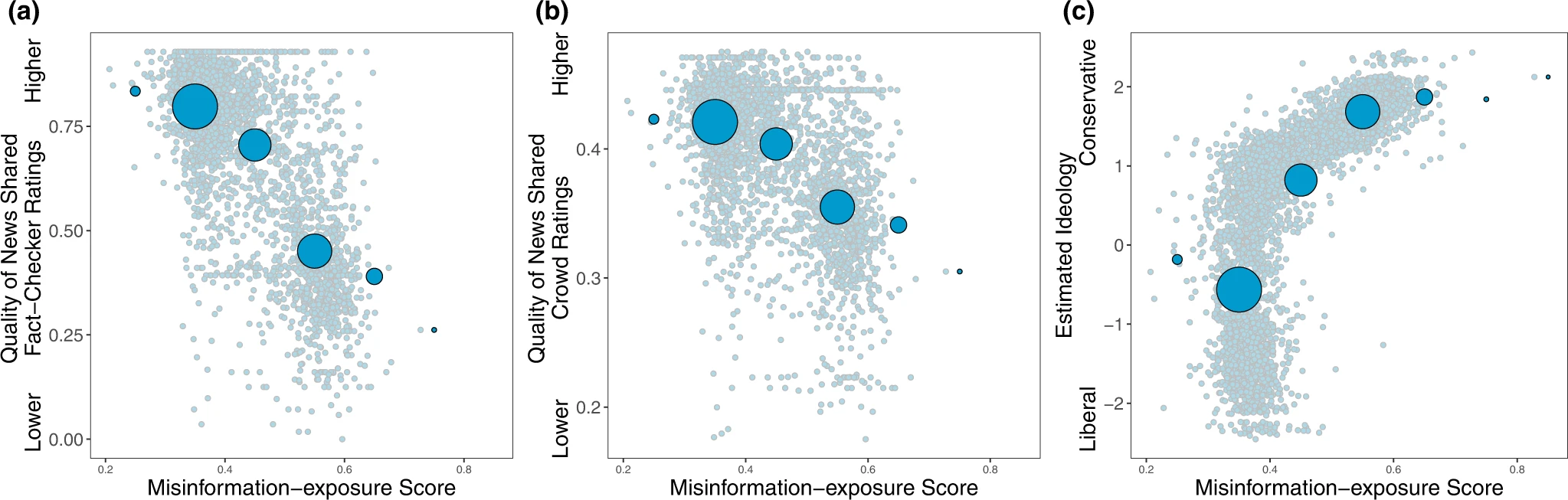

And finally, at the individual level, we found that estimated ideological extremity was more strongly associated with following elites who made more false or inaccurate statements among users estimated to be conservatives compared to users estimated to be liberals. These results on political asymmetries are aligned with prior work on news-based misinformation sharing

This suggests the misinformation sharing elites may influence whether followers become more extreme. There is little incentive not to stoke outrage as it improves engagement.

-

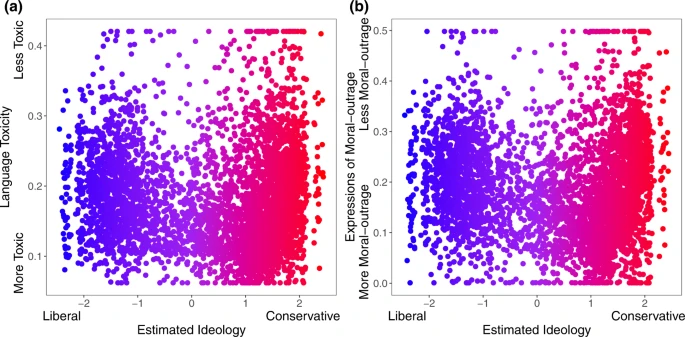

Estimated ideological extremity is associated with higher elite misinformation-exposure scores for estimated conservatives more so than estimated liberals.

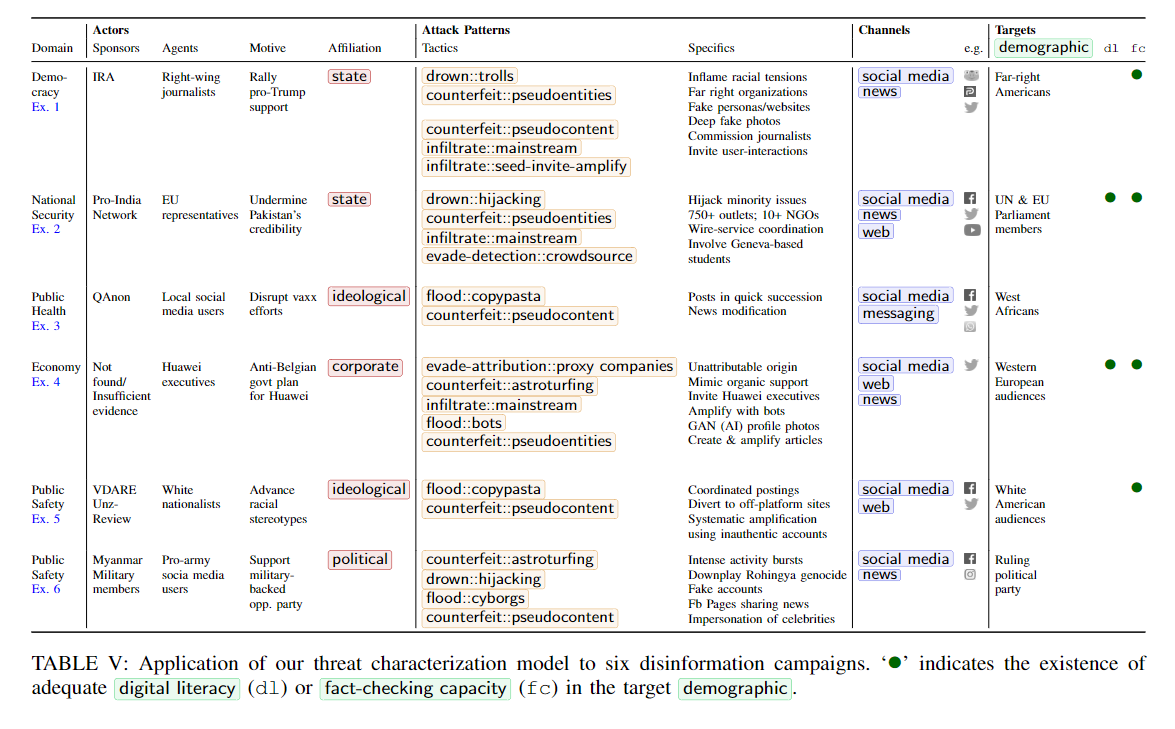

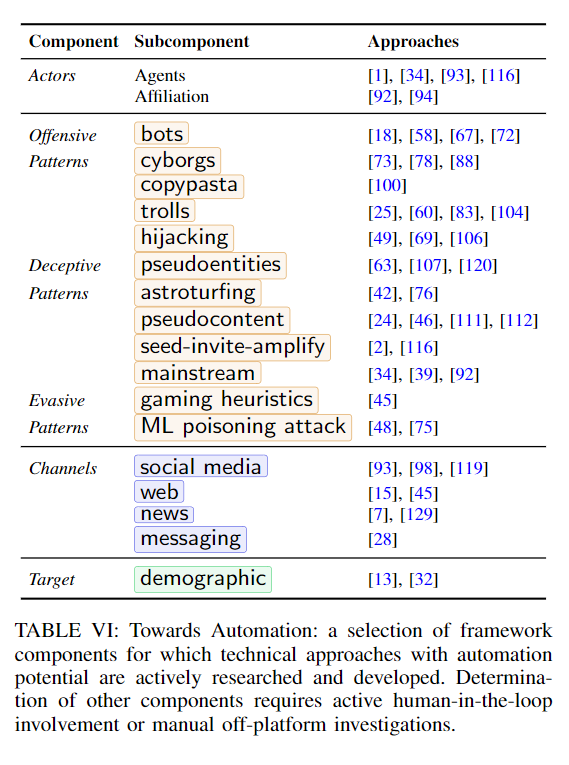

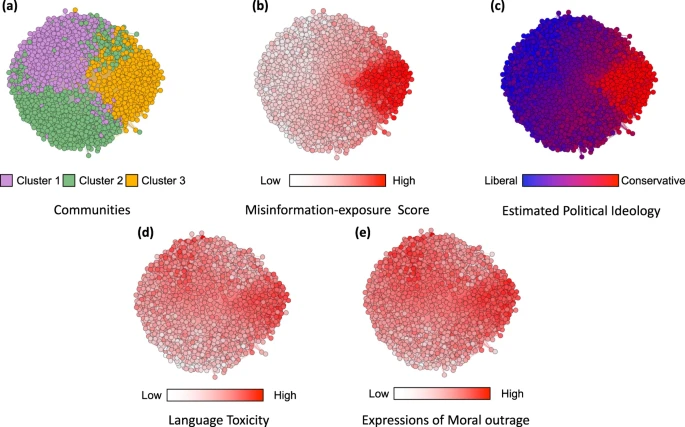

(a) Political ideology is estimated using accounts followed [10]. (b) Political ideology is estimated using domains shared [30] (red: conservative, blue: liberal). Source data are provided as a Source Data file.

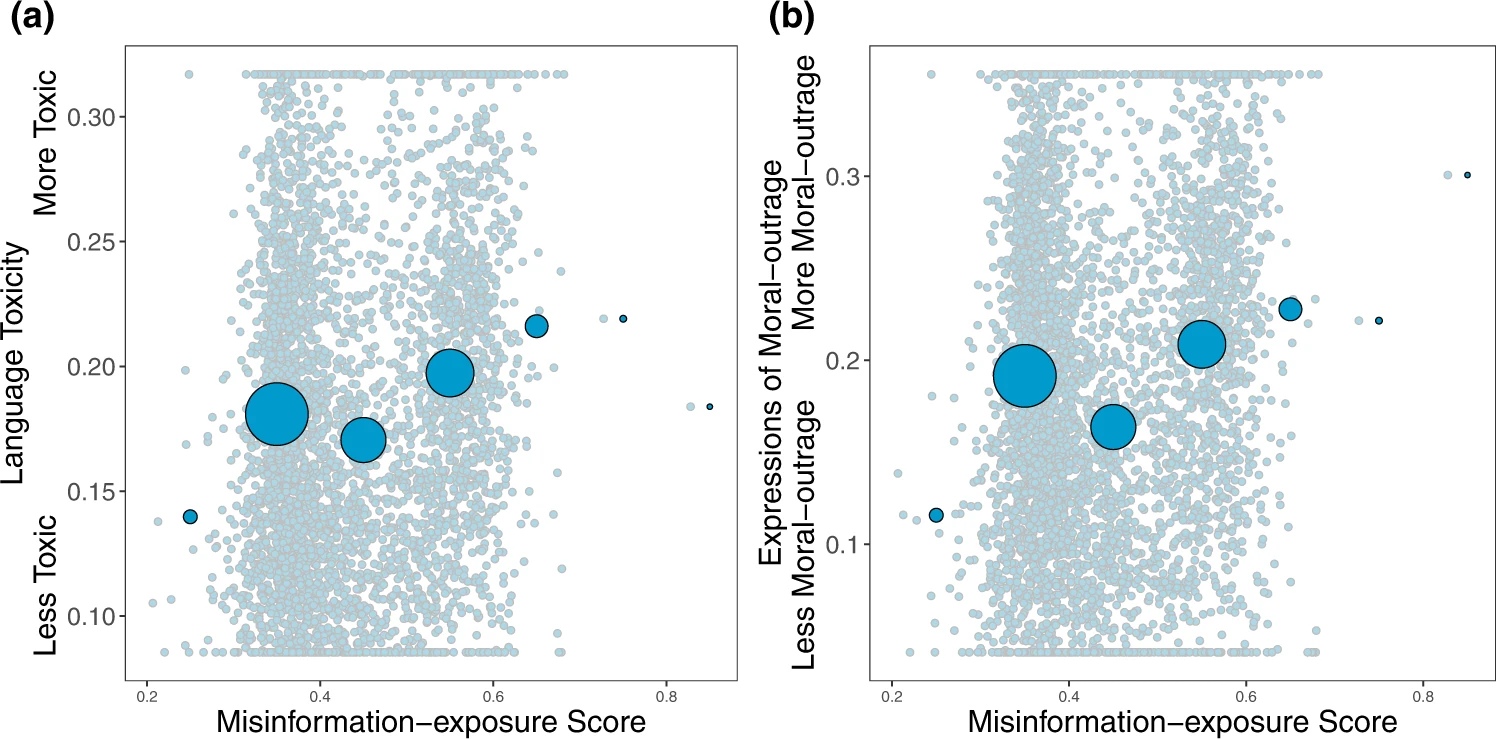

Estimated ideological extremity is associated with higher language toxicity and moral outrage scores for estimated conservatives more so than estimated liberals.

The relationship between estimated political ideology and (a) language toxicity and (b) expressions of moral outrage. Extreme values are winsorized by 95% quantile for visualization purposes. Source data are provided as a Source Data file.
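
Winsorizing caps outliers at a chosen quantile instead of dropping them, so every observation still appears in the plot. The following is a minimal sketch of one-sided (upper-tail) winsorizing at the 95% quantile. The caption does not say whether one or both tails were capped, so the one-sided choice is an assumption. The helper names and toy data are also assumptions.

```python
def quantile(values, q):
    """q-quantile by linear interpolation between order statistics
    (the same convention as NumPy's default percentile method)."""
    xs = sorted(values)
    pos = (len(xs) - 1) * q
    lo = int(pos)                    # order statistic at or just below pos
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def winsorize_upper(values, q=0.95):
    """Cap values above the q-quantile at that quantile; keep order and length."""
    cap = quantile(values, q)
    return [min(v, cap) for v in values]


scores = list(range(1, 20)) + [1000]  # made-up data with one extreme outlier
print(winsorize_upper(scores)[-1])    # the outlier is pulled down to the cap
```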

-

In the co-share network, a cluster of websites shared more by conservatives is also shared more by users with higher misinformation exposure scores.

Nodes represent website domains shared by at least 20 users in our dataset, and edges are weighted based on common users who shared them. (a) Separate colors represent different clusters of websites determined using community-detection algorithms [29]. (b) The intensity of the color of each node shows the average misinformation-exposure score of users who shared the website domain (darker = higher PolitiFact score). (c) Nodes’ color represents the average estimated ideology of the users who shared the website domain (red: conservative, blue: liberal). (d) The intensity of the color of each node shows the average use of language toxicity by users who shared the website domain (darker = higher use of toxic language). (e) The intensity of the color of each node shows the average expression of moral outrage by users who shared the website domain (darker = higher expression of moral outrage). Nodes are positioned using a force-directed layout on the weighted network.
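
The caption describes the network construction in words. The following is a minimal sketch of that construction. It assumes the input maps each user to the set of domains they shared; this data shape and the function name are hypothetical. The default threshold of 20 users comes from the caption. Community detection and layout are left to a graph library.

```python
from collections import Counter
from itertools import combinations


def co_share_network(shares_by_user, min_users=20):
    """Build a weighted domain co-share network.

    shares_by_user maps a user id to the set of domains that user shared.
    Nodes: domains shared by at least `min_users` distinct users.
    Edge weight: number of users who shared both domains.
    """
    users_per_domain = Counter()
    for domains in shares_by_user.values():
        users_per_domain.update(set(domains))
    nodes = {d for d, n in users_per_domain.items() if n >= min_users}

    weights = Counter()
    for domains in shares_by_user.values():
        kept = sorted(set(domains) & nodes)
        for a, b in combinations(kept, 2):  # each unordered pair once per user
            weights[(a, b)] += 1
    return nodes, dict(weights)


shares = {
    "u1": {"a.com", "b.com"},
    "u2": {"a.com", "b.com", "c.com"},
    "u3": {"a.com", "c.com"},
    "u4": {"d.com"},
}
nodes, weights = co_share_network(shares, min_users=2)  # tiny threshold for toy data
print(sorted(nodes))  # ['a.com', 'b.com', 'c.com']
print(weights)
```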

-

Exposure to elite misinformation is associated with the use of toxic language and moral outrage.

Shown is the relationship between users’ misinformation-exposure scores and (a) the toxicity of the language used in their tweets, measured using the Google Jigsaw Perspective API [27], and (b) the extent to which their tweets involved expressions of moral outrage, measured using the algorithm from ref. 28. Extreme values are winsorized by 95% quantile for visualization purposes. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin. Source data are provided as a Source Data file.
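
The "large dots" in this figure are averages over fixed-width bins of the x-axis score, with dot size set by how many users fall in each bin. The following is a minimal sketch of that aggregation. It assumes plain lists of x values (misinformation-exposure scores) and y values (e.g. toxicity). The function name and data are made up, and plotting is omitted.

```python
import math
from collections import defaultdict


def binned_means(xs, ys, width=0.1):
    """Mean of y within fixed-width bins of x, plus the count in each bin.

    Returns {bin_start: (mean_y, count)}; the count could set each dot's size.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y in zip(xs, ys):
        # Round before flooring so a value on a bin edge (e.g. 0.3) is not pushed
        # into the lower bin by floating-point error (0.3 / 0.1 == 2.9999999999999996).
        index = math.floor(round(x / width, 9))
        start = round(index * width, 10)
        sums[start] += y
        counts[start] += 1
    return {b: (sums[b] / counts[b], counts[b]) for b in sorted(sums)}


exposure = [0.05, 0.07, 0.15, 0.3]  # made-up misinformation-exposure scores
toxicity = [1.0, 3.0, 10.0, 4.0]    # made-up toxicity values for the same users
print(binned_means(exposure, toxicity))  # {0.0: (2.0, 2), 0.1: (10.0, 1), 0.3: (4.0, 1)}
```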

-

-

www.nature.com www.nature.com

-

Exposure to elite misinformation is associated with sharing news from lower-quality outlets and with conservative estimated ideology.

Shown is the relationship between users’ misinformation-exposure scores and (a) the quality of the news outlets they shared content from, as rated by professional fact-checkers [21], (b) the quality of the news outlets they shared content from, as rated by layperson crowds [21], and (c) estimated political ideology, based on the ideology of the accounts they follow [10]. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin.

-

-

arxiv.org arxiv.org

-

Notice that Twitter’s account purge significantly impacted misinformation spread worldwide: the proportion of low-credible domains in URLs retweeted from U.S. dropped from 14% to 7%. Finally, despite not having a list of low-credible domains in Russian, Russia is central in exporting potential misinformation in the vax rollout period, especially to Latin American countries. In these countries, the proportion of low-credible URLs coming from Russia increased from 1% in vax development to 18% in vax rollout periods (see Figure 8 (b), Appendix).

-

Interestingly, the fraction of low-credible URLs coming from U.S. dropped from 74% in the vax development period to 55% in the vax rollout. This large decrease can be directly ascribed to Twitter’s moderation policy: 46% of cross-border retweets of U.S. users linking to low-credible websites in the vax development period came from accounts that have been suspended following the U.S. Capitol attack (see Figure 8 (a), Appendix).

-

Considering the behavior of users in no-vax communities, we find that they are more likely to retweet (Figure 3(a)), share URLs (Figure 3(b)), and especially URLs to YouTube (Figure 3(c)) than other users. Furthermore, the URLs they post are much more likely to be from low-credible domains (Figure 3(d)), compared to those posted in the rest of the networks. The difference is remarkable: 26.0% of domains shared in no-vax communities come from lists of known low-credible domains, versus only 2.4% of those cited by other users (p < 0.001). The most common low-credible websites among the no-vax communities are zerohedge.com, lifesitenews.com, dailymail.co.uk (considered right-biased and questionably sourced) and childrenshealthdefense.com (conspiracy/pseudoscience).

-

-

ieeexplore.ieee.org ieeexplore.ieee.org

-

We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.
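
The study's exact belief-value rules are not given in this excerpt. The following is therefore a hypothetical toy model in the same spirit, not the authors' method. Each regular agent's belief in a false claim rises in proportion to how much of its feed carries that claim. Believers (belief at or above 0.5) start sharing it, and malicious agents share it unconditionally. All parameters are made-up placeholders.

```python
def simulate(n_regular=100, n_malicious=0, steps=10, rate=0.1, threshold=0.5):
    """Toy belief model (hypothetical, not the study's): return how many regular
    agents end up believing a false claim (belief >= threshold)."""
    # Initial beliefs spread evenly from 0.0 to 0.6, so a few agents already believe.
    beliefs = [0.6 * i / (n_regular - 1) for i in range(n_regular)]
    total = n_regular + n_malicious
    for _ in range(steps):
        # Current believers and every malicious agent post the misinformation.
        sharers = n_malicious + sum(b >= threshold for b in beliefs)
        exposure = sharers / total  # fraction of the feed carrying the claim
        # Each belief moves a small step toward 1 in proportion to exposure.
        beliefs = [b + rate * exposure * (1 - b) for b in beliefs]
    return sum(b >= threshold for b in beliefs)


print("without malicious agents:", simulate(n_malicious=0))
print("with 20 malicious agents:", simulate(n_malicious=20))
```

With these placeholder settings, belief spreads slowly in both scenarios. Adding 20 malicious agents to 100 regular ones noticeably raises the number of believers after 10 steps, even though each individual update is small.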

-

-

www.mdpi.com www.mdpi.com

-

Therefore, although the social bot individual is “small”, it has become a “super spreader” with strategic significance. As an intelligent communication subject in the social platform, it conspired with the discourse framework in the mainstream media to form a hybrid strategy of public opinion manipulation.

-

There were 120,118 epidemy-related tweets in this study, and 34,935 Twitter accounts were detected as bot accounts by Botometer, accounting for 29%. In all, 82,688 Twitter accounts were human, accounting for 69%; 2495 accounts had no bot score detected.

In social network analysis, degree centrality is an index to judge the importance of nodes in the network. The nodes in the social network graph represent users, and the edges between nodes represent the connections between users. Based on the network structure graph, we may determine which members of a group are more influential than others. In 1979, American professor Linton C. Freeman published an article titled “Centrality in social networks conceptual clarification” in Social Networks, formally proposing the concept of degree centrality [69]. Degree centrality denotes the number of times a central node is retweeted by other nodes (or other indicators; only retweets are considered in this study). Specifically, the higher the degree centrality is, the more influence a node has in its network. The measure of degree centrality includes in-degree and out-degree. Betweenness centrality is an index that describes the importance of a node by the number of shortest paths through it. Nodes with high betweenness centrality are in the “structural hole” position in the network [69]. This kind of account connects the group network lacking communication and can expand the dialogue space of different people. American sociologist Ronald S. Burt put forward the theory of a “structural hole” and said that if there is no direct connection between the other actors connected by an actor in the network, then the actor occupies the “structural hole” position and can obtain social capital through “intermediary opportunities”, thus having more advantages.
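
Both centrality measures described above can be computed directly from a retweet graph. The following is a minimal sketch in plain Python. It assumes each retweet is stored as a directed edge from the retweeting account to the retweeted one; the data shape, function names, and toy accounts are hypothetical. Betweenness is computed with Brandes's algorithm, a standard method for unweighted graphs. The study itself may have used different tooling.

```python
from collections import deque


def in_degree(edges):
    """In-degree centrality: times each account was retweeted.
    Each edge is (retweeter, retweeted_account)."""
    counts = {}
    for src, dst in edges:
        counts.setdefault(src, 0)
        counts[dst] = counts.get(dst, 0) + 1
    return counts


def betweenness(edges):
    """Unnormalized betweenness centrality of a directed, unweighted graph
    (Brandes's algorithm)."""
    graph = {}
    for src, dst in edges:
        graph.setdefault(src, set()).add(dst)
        graph.setdefault(dst, set())
    score = dict.fromkeys(graph, 0.0)
    for s in graph:
        # Breadth-first search from s, counting shortest paths (sigma) to each node.
        order, preds = [], {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)
        dist = dict.fromkeys(graph, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating each node's share of paths.
        delta = dict.fromkeys(graph, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score


# Two tight pairs (a-b, c-d) joined only through account x.
pairs = [("a", "b"), ("b", "x"), ("x", "c"), ("c", "d")]
edges = pairs + [(v, u) for u, v in pairs]  # add the reverse direction of each link
print(betweenness(edges))
print(in_degree([("u1", "news"), ("u2", "news"), ("news", "u1")]))
```

In the toy network, `x` is the only link between the two pairs, so it receives the highest betweenness score. This is the "structural hole" position the passage describes.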

-

We analyzed and visualized Twitter data from the period when the Wuhan lab leak theory was prevalent and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.

-

-

www.robinsloan.com

-

I want to insist on an amateur internet; a garage internet; a public library internet; a kitchen table internet.

Social media should be composed of people from end to end. Corporate interests inserted into the process can only serve to dehumanize the system.

Robin Sloan is in the same camp as Greg McVerry and me.

-

-

atproto.com

-

www.getrevue.co

-

pluralistic.net

-

Alas, lawmakers are way behind the curve on this, demanding new "online safety" rules that require firms to break E2E and block third-party de-enshittification tools: https://www.openrightsgroup.org/blog/online-safety-made-dangerous/ The online free speech debate is stupid because it has all the wrong focuses: Focusing on improving algorithms, not whether you can even get a feed of things you asked to see; Focusing on whether unsolicited messages are delivered, not whether solicited messages reach their readers; Focusing on algorithmic transparency, not whether you can opt out of the behavioral tracking that produces training data for algorithms; Focusing on whether platforms are policing their users well enough, not whether we can leave a platform without losing our important social, professional and personal ties; Focusing on whether the limits on our speech violate the First Amendment, rather than whether they are unfair: https://doctorow.medium.com/yes-its-censorship-2026c9edc0fd

This list is particularly good.

Proper regulation of end-to-end services would encourage the creation of filtering and other tools that would tend to benefit users rather than the rent-seeking of the corporations that own the pipes.

-

-

www.garbageday.email

-

my best guess is it’s the moderation

-

-

rhiaro.co.uk

-

I'd love it to be normal and everyday to not assume that when you post a message on your social network, every person is reading it in a similar UI, either to the one you posted from, or to the one everyone else is reading it in.

🤗

-

-

a.gup.pe

-

[https://a.gup.pe/ Guppe Groups] is a set of bot accounts that can be used to aggregate social groups within the [[fediverse]] around a variety of topics like [[crafts]], books, history, philosophy, etc.


-

-

zephoria.medium.com

-

https://zephoria.medium.com/what-if-failure-is-the-plan-2f219ea1cd62

-

A lot has changed about our news media ecosystem since 2007. In the United States, it’s hard to overstate how the media is entangled with contemporary partisan politics and ideology. This means that information tends not to flow across partisan divides in coherent ways that enable debate.

Our media and social media systems, along with the people who use them, have been structured such that debate is stifled, because information doesn't flow coherently across the partisan political divide.

-

-

blog.jonudell.net

-

Humans didn’t evolve to thrive in frictionless social networks with high fanout and velocity, and arguably we shouldn’t.

-

-

oulipo.social

-

https://oulipo.social/about

Social media without the letter "e".

-

-

beesbuzz.biz

-

blog.erinshepherd.net

-

https://blog.erinshepherd.net/2022/11/a-better-moderation-system-is-possible-for-the-social-web/

-

The trust one must place in the creator of a blocklist is enormous, because the most dangerous failure mode isn’t that it doesn’t block who it says it does, but that it blocks who it says it doesn’t and they just disappear.

-

-

research.google

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Erin Alexis Owen Shepherd</span> in A better moderation system is possible for the social web (<time class='dt-published'>12/03/2022 11:10:32</time>)</cite></small>

-

-

www.noemamag.com

-

“The damage commercial social media has done to politics, relationships and the fabric of society needs undoing.”

-

As users begin migrating to the noncommercial fediverse, they need to reconsider their expectations for social media — and bring them in line with what we expect from other arenas of social life. We need to learn how to become more like engaged democratic citizens in the life of our networks.

-

-

-

I have about fourteen or sixteen weeks to do this, so I'm breaking the course into an "intro" section that covers some basic stuff like affordances, and other insights into how tech functions. There's a section on AI which is nothing but critical appraisals on AI from a variety of areas. And there's a section on Social Media, which is the most well-formed section in terms of readings.

https://zirk.us/@shengokai/109440759945863989

If the individuals in an environment don't understand or perceive the affordances available to them, can the interactions between them and the environment make it seem as if the environment possesses agency?

cross reference: James J. Gibson's book The Senses Considered as Perceptual Systems (1966)

People often claim that social media "causes" outcomes among the groups of people who use it. E.g., social media (via algorithmic suggestions of fringe content) causes people to become radicalized.

-

- Nov 2022

-

andy-bell.co.uk

-

The TTRG (time to reply guy) was getting so fast, that I can’t actually remember the last time I tweeted something helpful like a design or development tip. I just couldn’t be arsed, knowing some dickhead would be around to waste my time with whataboutisms and “will it scale”?

-

-

www.washingtonpost.com

-

The Post analyzed data from ProPublica’s Represent tool, which tracks congressional Twitter activity.

-

-

community.interledger.org

-

11/30 Youth Collaborative

I went through some of the pieces in the collection. It is important to give a platform to the voices that are usually missing from the conversation.

Just a few similar initiatives that you might want to check out:

StoryCorps - people can record their stories via an app

Project Voice - spoken word poetry

Living Library - sharing one's story

Freedom Writers - book and curriculum based on real-life stories

-

-

www.theatlantic.com

-

As part of the Election Integrity Partnership, my team at the Stanford Internet Observatory studies online rumors, and how they spread across the internet in real time.

-

-

www.zylstra.org

-

socialmediaissues.net

-

https://socialmediaissues.net/

Website for Social Media Issues, a resource for Comm 182/282, a course offered by Howard Rheingold at Stanford in Autumn 2013.

-

-

wiki.laptop.org

-

cohost.org

-

lucahammer.com

-

the-federation.info

-

An aggregation site with data about the broader Fediverse and projects within it.


-

-

morningconsult.com

-

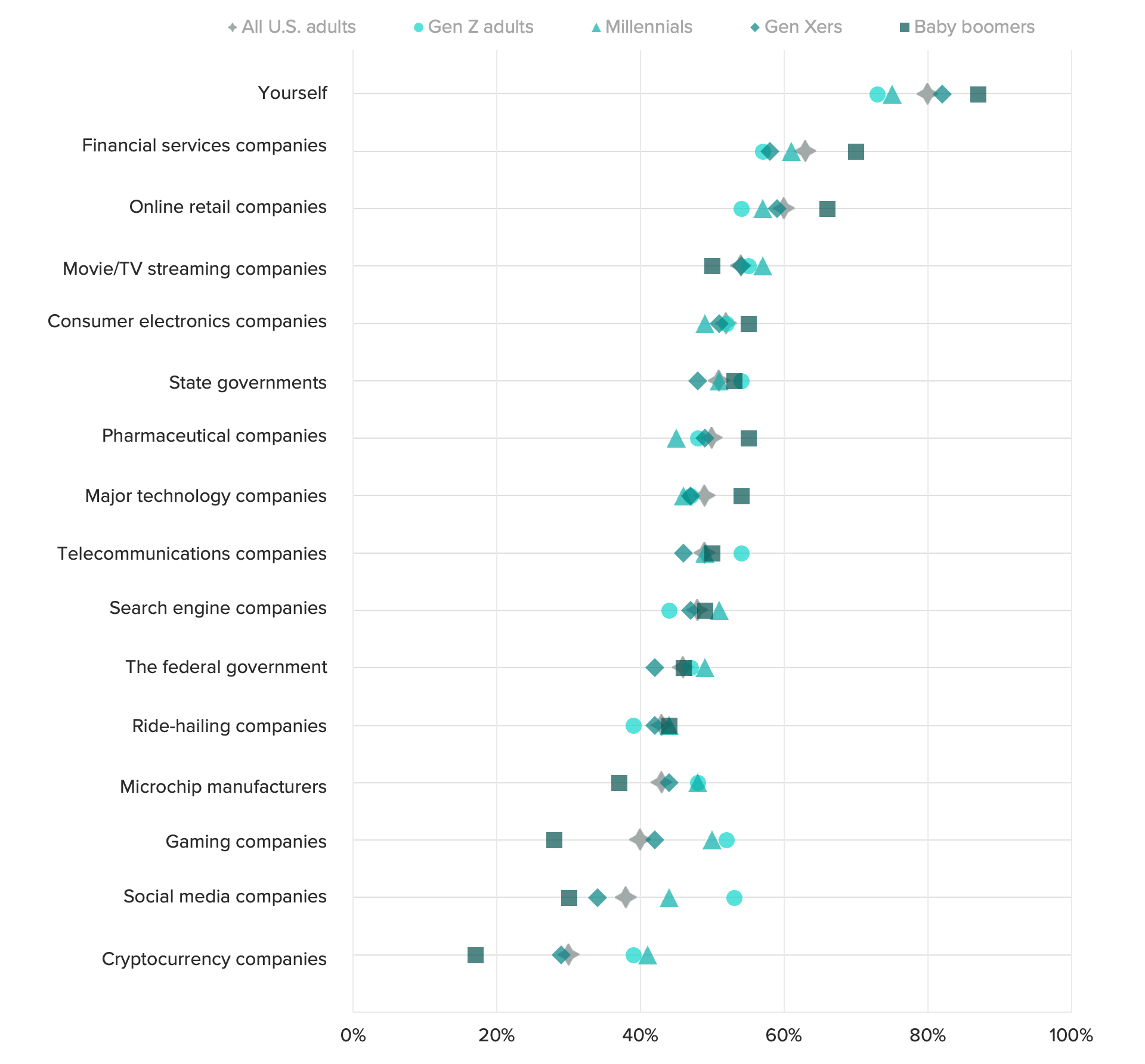

The notable exception: social media companies. Gen Zers are more likely to trust social media companies to handle their data properly than older consumers, including millennials, are.

Gen Z is more trusting of data handling by social media companies

For most categories of businesses, Gen Z adults are less likely to trust a business to protect the privacy of their data as compared to other generations. Social media is the one exception.

-

-

masto.host

-

https://zettelkasten.social/about

Someone has registered the domain and it is hosted by masto.host, but it was not yet active as of 2022-11-13.


-

-

blog.archive.org

-

Looking forward to many social media alternatives: Blue Sky, Matrix, and many others.

If wishing only made it happen...

-

-

threadreaderapp.com

-

fedified.com

-

meh... This looks dreadful...

Why not just use the built in rel-me verification available in Twitter directly with respect to individual websites?
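The rel-me verification mentioned above comes down to confirming that two pages link to each other with `rel="me"`; Mastodon's profile-link verification works on the same idea. What follows is a minimal sketch using only Python's standard-library `html.parser`. It assumes the HTML of both pages has already been fetched. The function names and the trailing-slash normalization are illustrative assumptions, not any platform's actual implementation.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


def normalize(url):
    # Assumption: treat URLs as equal once a trailing slash is stripped.
    return url.rstrip("/")


class RelMeParser(HTMLParser):
    """Collect absolute hrefs of <a> and <link> elements whose rel includes "me"."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()  # rel is space-separated
        href = attrs.get("href")
        if "me" in rel and href:
            self.links.add(normalize(urljoin(self.base_url, href)))


def rel_me_links(html, base_url):
    parser = RelMeParser(base_url)
    parser.feed(html)
    return parser.links


def verified(profile_url, profile_html, site_url, site_html):
    """True only when each page links to the other with rel="me"."""
    return (normalize(site_url) in rel_me_links(profile_html, profile_url)
            and normalize(profile_url) in rel_me_links(site_html, site_url))
```

Requiring the link in both directions is what makes the check meaningful: anyone can point a profile at a website, but only the site's owner can add the reciprocal `rel="me"` link back.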

-

-

tracydurnell.com

-

doctorow.medium.com

-

pruvisto.org

-

https://pruvisto.org/debirdify/

Tool for moving some of your Twitter data over to Mastodon or other parts of the Fediverse.

-

-

theconversation.com

-

-

Any migration is likely to face many of the challenges previous platform migrations have faced: content loss, fragmented communities, broken social networks and shifted community norms.

-

By asking participants about their experiences moving across these platforms – why they left, why they joined and the challenges they faced in doing so – we gained insights into factors that might drive the success and failure of platforms, as well as what negative consequences are likely to occur for a community when it relocates.

-

-

theintercept.com

-