Both the output charge and gate charge are ten times lower than with Si, and the reverse recovery charge is almost zero, which is key for high-frequency operation.

advantage of GaN over Si

Reading may seem like a familiar yet immensely complicated activity for many people, but it becomes unimaginably simple through the profound way author Phan Thanh Dũng conveys it in each of his stories.

The generator and discriminator losses derive from a single measure of distance between probability distributions. In both of these schemes, however, the generator can only affect one term in the distance measure: the term that reflects the distribution of the fake data. So during generator training we drop the other term, which reflects the distribution of the real data.

GAN loss: how the two loss functions work in GAN training
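The split described above can be sketched with the standard minimax losses. This is a minimal sketch assuming the discriminator outputs probabilities D(x) in (0, 1); the function names are illustrative. The generator losses take only the fake-data outputs, since the real-data term does not depend on the generator. The non-saturating variant is a common practical substitute, included for comparison.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Minimax discriminator loss: -[log D(x) + log(1 - D(G(z)))], averaged.

    d_real: discriminator probabilities on real samples.
    d_fake: discriminator probabilities on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss_minimax(d_fake):
    """Generator minimizes log(1 - D(G(z))); the real-data term is dropped."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

def generator_loss_non_saturating(d_fake):
    """Common alternative: maximize log D(G(z)), i.e. minimize -log D(G(z))."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

When the discriminator outputs 0.5 everywhere, its loss is 2 log 2; both generator losses decrease as D(G(z)) moves toward 1.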

So we train the generator with the following procedure:

1. Sample random noise.
2. Produce generator output from the sampled random noise.
3. Get the discriminator's "Real" or "Fake" classification for the generator output.
4. Calculate the loss from the discriminator classification.
5. Backpropagate through both the discriminator and generator to obtain gradients.
6. Use the gradients to change only the generator weights.

GAN: training the generator and discriminator as a whole
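The six generator-training steps can be sketched on a deliberately tiny, hypothetical 1-D GAN: a linear generator G(z) = a*z + b and a logistic discriminator D(x) = sigmoid(w*x + c), with gradients derived by hand. It assumes the non-saturating generator loss -log D(G(z)); the gradient flows through the discriminator's weight w, but only a and b are updated.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def generator_step(gen, disc, batch_size=64, lr=0.05, rng=random):
    """One generator update for a toy 1-D GAN (hypothetical model).

    gen  = {"a": ..., "b": ...}  ->  G(z) = a*z + b
    disc = {"w": ..., "c": ...}  ->  D(x) = sigmoid(w*x + c)
    Only gen is modified; disc is read but never written.
    """
    grad_a = grad_b = 0.0
    for _ in range(batch_size):
        z = rng.gauss(0.0, 1.0)                  # 1. sample random noise
        x = gen["a"] * z + gen["b"]              # 2. generator output
        d = sigmoid(disc["w"] * x + disc["c"])   # 3. discriminator's "real" probability
        # 4. loss L = -log D(G(z)); only its gradient is needed for the update
        # 5. backprop through D into G: dL/dx = (d - 1) * w
        dl_dx = (d - 1.0) * disc["w"]
        grad_a += dl_dx * z                      # dx/da = z
        grad_b += dl_dx                          # dx/db = 1
    # 6. update only the generator weights
    gen["a"] -= lr * grad_a / batch_size
    gen["b"] -= lr * grad_b / batch_size
    return gen
```

With a discriminator that favors larger x (w > 0), one step moves the generator's offset b upward, while the discriminator's parameters stay as they were.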

The portion of the GAN that trains the generator includes:

- random input
- generator network, which transforms the random input into a data instance
- discriminator network, which classifies the generated data
- discriminator output
- generator loss, which penalizes the generator for failing to fool the discriminator

GAN- Generator

Steps or the exact work happening in the network

The generator part of a GAN learns to create fake data by incorporating feedback from the discriminator.

GAN- Generator learns to create fake data by incorporating feedback from discriminator

During discriminator training the generator does not train. Its weights remain constant while it produces examples for the discriminator to train on.

GAN: during discriminator training, the generator stays quiet and does not train.
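The discriminator phase, with the generator frozen and its outputs used as negative examples, can be sketched on the same kind of hypothetical 1-D model (linear generator, logistic discriminator). The loss is assumed to be binary cross-entropy with real samples labeled 1 and generated samples labeled 0.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def discriminator_step(gen, disc, real_batch, lr=0.05, rng=random):
    """One discriminator update for a toy 1-D GAN (hypothetical model).

    Real samples are labeled 1; generator outputs are negatives labeled 0.
    gen is only read: its weights stay constant during this phase.
    """
    n = len(real_batch)
    # The frozen generator produces examples for the discriminator to train on.
    fakes = [gen["a"] * rng.gauss(0.0, 1.0) + gen["b"] for _ in range(n)]
    grad_w = grad_c = 0.0
    for x, label in [(x, 1.0) for x in real_batch] + [(x, 0.0) for x in fakes]:
        d = sigmoid(disc["w"] * x + disc["c"])
        # Gradient of binary cross-entropy w.r.t. the logit is (d - label).
        grad_w += (d - label) * x
        grad_c += d - label
    disc["w"] -= lr * grad_w / (2 * n)
    disc["c"] -= lr * grad_c / (2 * n)
    return disc
```

With real data at 3.0 and a generator centered at 0, the discriminator learns a positive slope w while the generator's parameters never change.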

Both the generator and the discriminator are neural networks. The generator output is connected directly to the discriminator input. Through backpropagation, the discriminator's classification provides a signal that the generator uses to update its weights.

Working of GAN

Generated instances become negative training examples for the discriminator.

GAN: the generator tries to produce the input for the discriminator.

Discriminative models try to draw boundaries in the data space, while generative models try to model how data is placed throughout the space.

GAN: how discriminative and generative networks work

MelNet: A Generative Model for Audio in the Frequency Domain

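The main contributions of this paper are as follows:

It proposes MelNet, a generative model for spectrograms that combines a fine-grained autoregressive model with a multiscale generation procedure, capturing both local and global structure.

It demonstrates MelNet's superior performance in modeling long-range dependencies.

It demonstrates MelNet's strong capability on a variety of audio generation tasks: unconditional speech generation, music generation, and text-to-speech synthesis. On all of these tasks, MelNet is implemented end to end.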

An Introduction to Variational Autoencoders

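[Overview] The variational autoencoder (VAE) is an important generative model. Like generative adversarial networks (GANs), VAEs can be used to generate realistic images and text, but the idea behind VAEs differs greatly from that of GANs. This post introduces a 93-page introduction to VAEs on arXiv, which contains many formula derivations and illustrations.

In recent years, GANs have attracted a great deal of attention from researchers and engineers. Besides GANs, however, the VAE has also been a popular and important generative model. Unlike GANs, which pit a generator against a discriminator, the core components of a VAE are an autoencoder and a KL divergence constraint.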
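The KL constraint can be sketched in closed form under the most common setup, which is an assumption here rather than something stated above: a diagonal-Gaussian encoder output N(mu, sigma^2) parameterized by log-variance, and a standard-normal prior. The helper names are hypothetical.

```python
import math
import random

def gaussian_kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    Closed form per dimension: 0.5 * (mu^2 + sigma^2 - 1 - log sigma^2).
    """
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def reparameterize(mu, log_var, rng=random):
    """Reparameterization trick: z = mu + sigma * eps, eps ~ N(0, 1).

    Written this way, z is a differentiable function of mu and log_var.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def vae_loss(reconstruction_error, mu, log_var):
    """Per-example objective to minimize: reconstruction term plus the KL constraint."""
    return reconstruction_error + gaussian_kl_to_standard_normal(mu, log_var)
```

The KL term is zero exactly when the encoder outputs the prior (mu = 0, log_var = 0) and grows as the posterior drifts away from it.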

Object Discovery with a Copy-Pasting GAN

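Object discovery with a copy-pasting generative adversarial network (GAN). Relja Arandjelovic, Andrew Zisserman

In this paper, the researchers address the problem of object discovery, i.e., segmenting objects in a given input image, while training the system without any direct supervision. They propose a novel copy-pasting GAN framework in which the generator learns to discover objects in an image by compositing an object from one image into another, such that the discriminator cannot tell whether the result is a fake image. After carefully addressing a few subtle issues, such as preventing the generator from "cheating", this game eventually leads the generator to learn to select objects, because copy-pasting objects is the most likely way to fool the discriminator. Results show that the system performs well on four very different datasets, including ones with large variations in object appearance against challenging cluttered backgrounds.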
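The core compositing operation can be sketched as a per-pixel blend. This assumes a soft mask in [0, 1] that, in the paper's setup, the generator would predict; the mask network, the discriminator, and the anti-cheating measures mentioned above are not shown, and the function name is hypothetical.

```python
def copy_paste(source, background, mask):
    """Composite: out = mask * source + (1 - mask) * background, per pixel.

    source, background: 2-D lists of pixel intensities with the same shape.
    mask: 2-D list of values in [0, 1] (1 = pixel copied from source).
    """
    return [
        [m * s + (1.0 - m) * b for s, b, m in zip(s_row, b_row, m_row)]
        for s_row, b_row, m_row in zip(source, background, mask)
    ]
```

A mask that covers the object exactly produces a plausible composite; a mask that grabs background pixels or nothing at all tends to produce images the discriminator can flag.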

Image Generation from Small Datasets via Batch Statistics Adaptation

Image generation from small datasets via batch statistics adaptation: fine-tunes pretrained BigGANs/SNGANs using only 25 face images.

Evolutionary Generative Adversarial Networks

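Because many existing GAN architectures are unstable during training and prone to mode collapse, the authors propose a novel GAN framework called evolutionary generative adversarial networks (E-GAN). Instead of a single generator, they treat the generators as a population in which each generator is an individual, and each individual mutates in a different way. The authors use an evaluation mechanism to measure the quality and diversity of the generated samples, so that only well-performing generators are kept and used for further training. In this way, E-GAN overcomes the limitations of an individual adversarial training objective and always preserves the best individuals, contributing to the progress and success of GANs.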

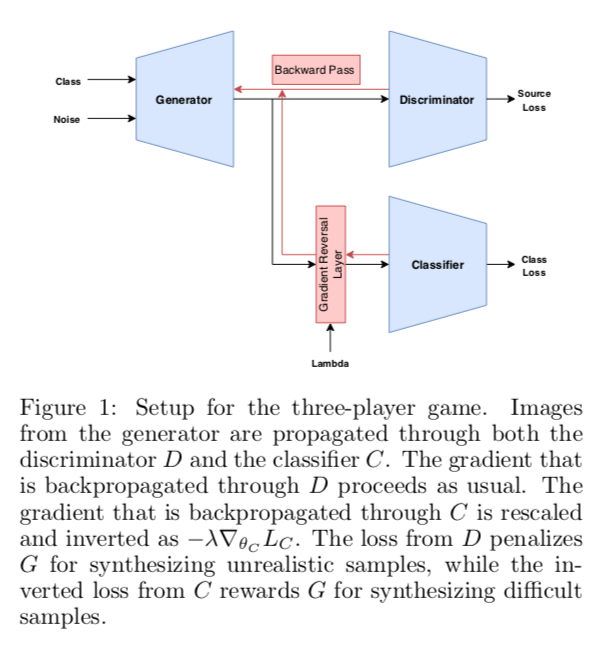

A Three-Player GAN: Generating Hard Samples To Improve Classification Networks

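Three-player GANs introduce competition between the generator and a classifier; the generator's goal is to synthesize samples that are both realistic and hard for the classifier to label.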

O-GAN: Extremely Concise Approach for Auto-Encoding Generative Adversarial Networks

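This paper shows that, by simply modifying the original GAN model, the discriminator can be turned into an encoder, giving the GAN both generation and encoding abilities at almost no extra training cost. The new model is called O-GAN (Orthogonal Generative Adversarial Network) because it is built on an orthogonal decomposition of the discriminator, making the fullest use of the discriminator's degrees of freedom.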

NIPS 2016 Tutorial: Generative Adversarial Networks

Maximum Entropy Generators for Energy-Based Models

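[GAN models from an energy perspective] This article is directly inspired by the Bengio group's new paper "Maximum Entropy Generators for Energy-Based Models". The author gives a clear, intuitive energy picture of GAN/WGAN, discusses how the discriminator (the energy function) is trained and the strategies for doing so, and points out a very elegant and intuitive energy interpretation of the gradient penalty. The article also discusses the choice of optimizer in GANs. http://t.cn/EcBIwqJ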

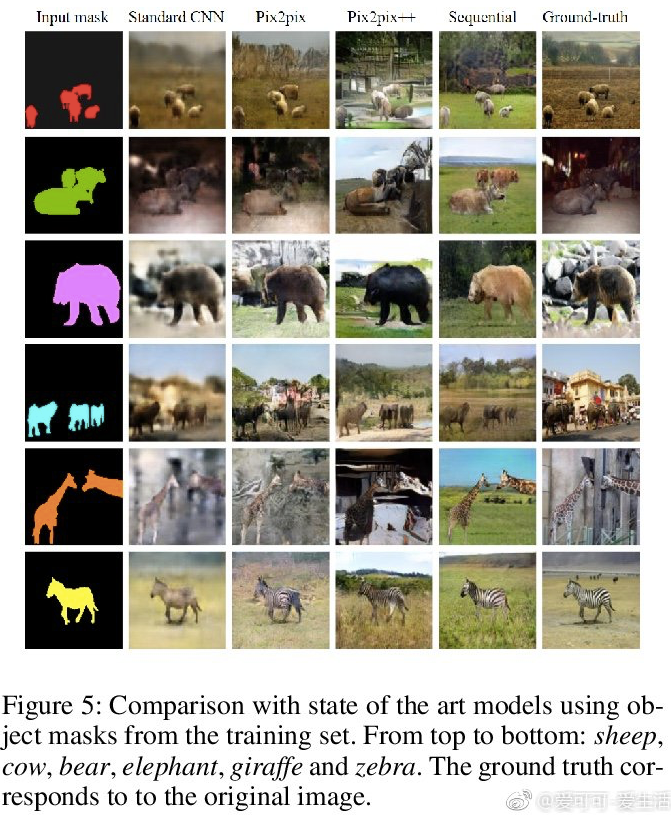

A Layer-Based Sequential Framework for Scene Generation with GANs

Uses a mask as input for "scene generation". Control over GAN generation is becoming more and more effortless~~

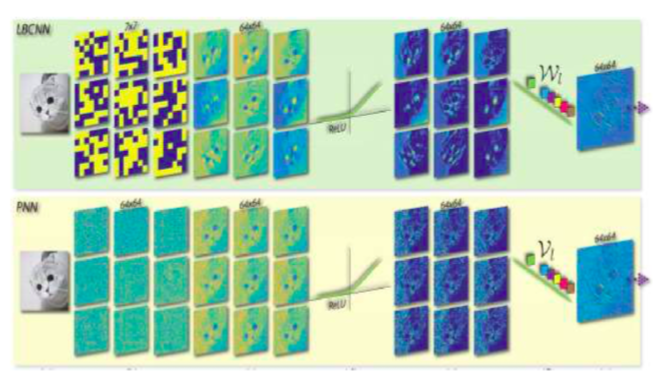

Perturbative GAN: GAN with Perturbation Layers

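The authors propose the better, faster, stronger Perturbative GAN. I remember that last year the CVPR 2018 paper "Perturbative Neural Networks" was questioned by a Reddit user and caused quite a stir, but in the end the doubts were refuted (http://t.cn/EcYUjg4). Now this follow-up work is out: true gold fears no fire!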

An Introduction to Image Synthesis with Generative Adversarial Nets

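More than four years have passed since GANs were born in 2014, and a large number of GAN-centered papers have been published in major journals and conferences: mathematical research to improve and analyze GANs, research to improve GAN generation quality, GAN applications in image generation (controlled image synthesis, text-to-image, image-to-image, video), and GAN applications in NLP and other fields. Image generation is the most studied of these, and research in this area has demonstrated the great potential of GANs for image synthesis. This paper surveys applications of GANs in image generation.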

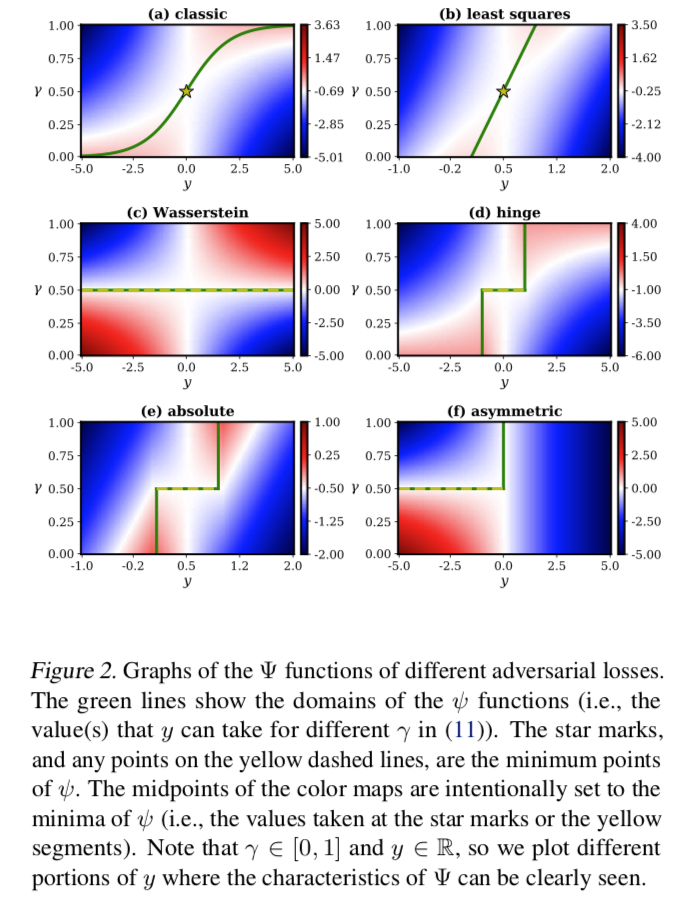

Towards a Deeper Understanding of Adversarial Losses

Studies various losses for adversarial generative training, and also helps to understand what makes one adversarial loss better than another.

Exploring Disentangled Feature Representation Beyond Face Identification

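Accepted by CVPR 2018; a paper from The Chinese University of Hong Kong, Peking University, and SenseTime. It uses an adversarial approach to obtain face representations and trains an attribute-controllable face generation model with an autoencoder structure. Specifically, the encoder uses Inception-ResNet as its backbone, with Distilling and Dispelling branches at the end that learn identity-related and identity-unrelated features, respectively; the decoder takes the concatenation of these two features as input and produces the output image through transposed convolutions and upsampling. The final accuracy on LFW is 99.8%, and the generated faces are also quite good.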

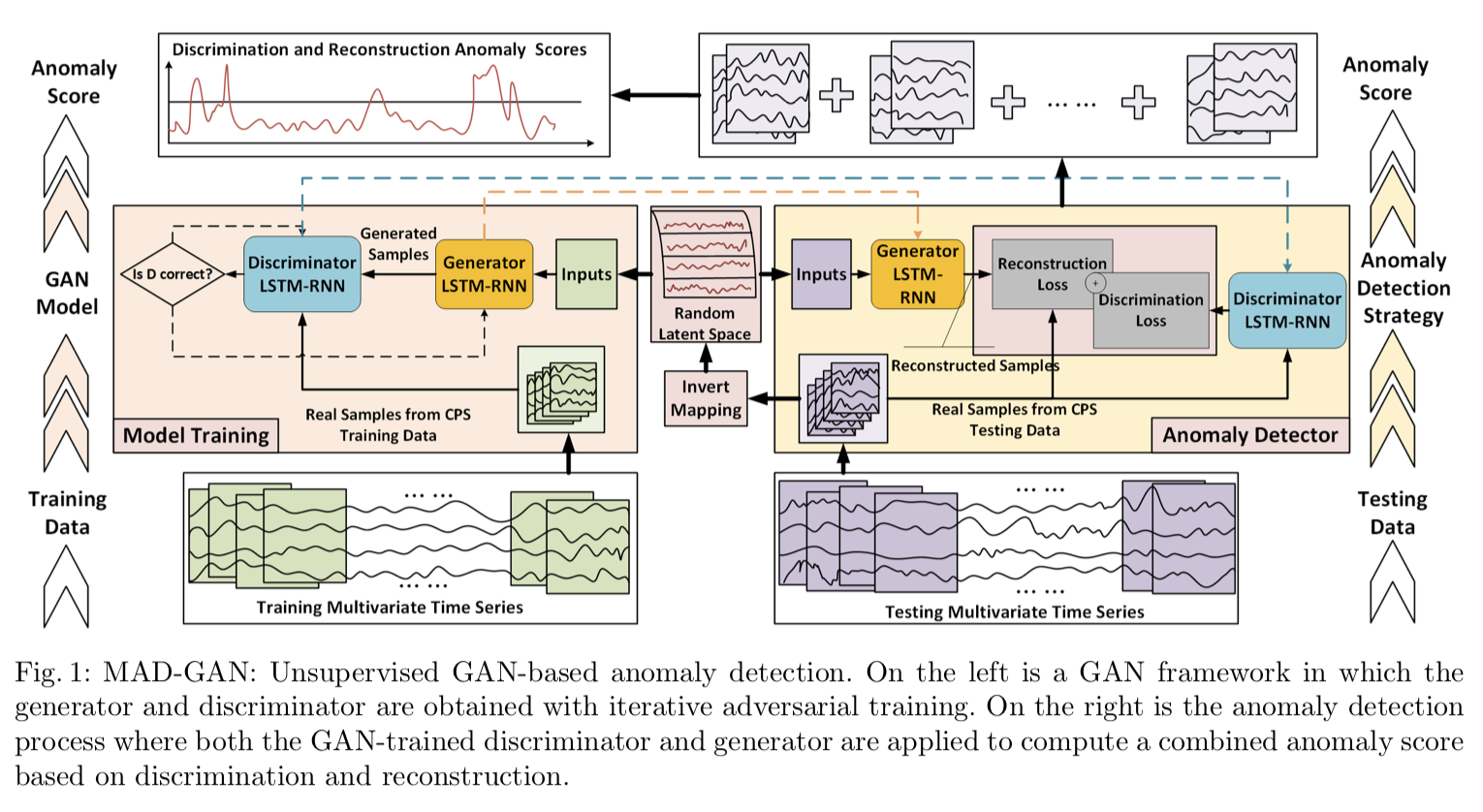

MAD-GAN: Multivariate Anomaly Detection for Time Series Data with Generative Adversarial Networks

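This paper is pretty amazing: it uses GANs for anomaly detection on time-series data. What's amazing is that both G and D are built solely from LSTM-RNNs! Beyond that, it is worth my attention because the model could inspire my own thinking on "template-free gravitational-wave detection"!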

InstaGAN: Instance-aware Image-to-Image Translation

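A paper that has been very popular these past few days: the new InstaGAN not only adversarially encodes an image, but can also encode the instances within it at the same time.

Network architecture diagram: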

Improving Generalization and Stability of Generative Adversarial Networks

GANs with 0-GP (a zero-centered gradient penalty). By redesigning the regularization scheme, it improves GAN performance, making the network more stable and more robust to hyperparameters.
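For comparison, the familiar one-centered penalty (as in WGAN-GP) and a zero-centered penalty can be written side by side. This is a sketch of my reading of the idea, assuming the penalty is evaluated at points interpolated between real samples x_r and generated samples x_g; the paper's exact formulation and choice of λ may differ.

$$
\Omega_{\text{1-GP}} = \lambda \, \mathbb{E}_{\hat{x}}\left[\left(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\right)^2\right],
\qquad
\Omega_{\text{0-GP}} = \lambda \, \mathbb{E}_{\hat{x}}\left[\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2^2\right],
\qquad
\hat{x} = \alpha x_r + (1 - \alpha) x_g,\; \alpha \sim \mathcal{U}[0, 1]
$$

The only difference is the target gradient norm: 0 instead of 1, so the penalty pushes the discriminator toward being flat rather than toward unit slope along those lines.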

Generative Models from the perspective of Continual Learning

A rather interesting experiment! Reading the exchange between the anonymous reviewers and the authors on OpenReview.net is also very rewarding~~

Finger-GAN: Generating Realistic Fingerprint Images Using Connectivity Imposed GAN

A Probe into Understanding GAN and VAE models

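The paper proposes a VAE-GAN model, but, as the authors themselves say, possibly because of insufficient GPU resources, the image quality is not very satisfying, and it uses an FCN rather than a CNN. It mainly uses entropy to quantitatively evaluate generation performance.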

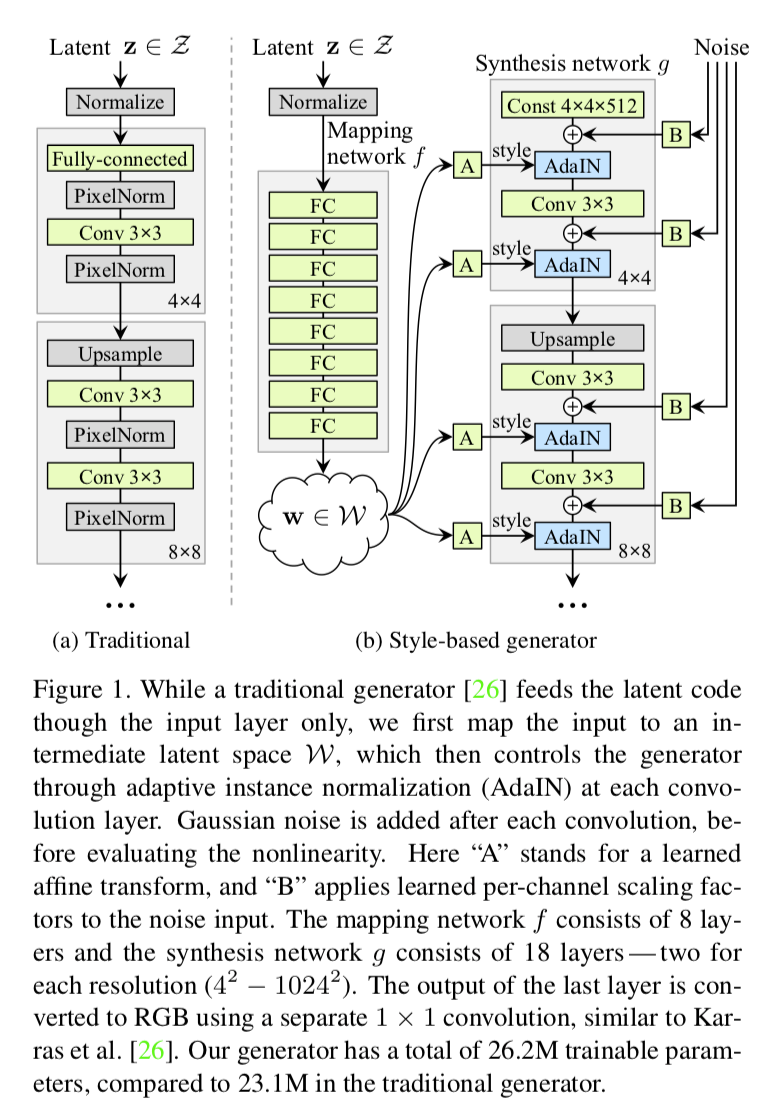

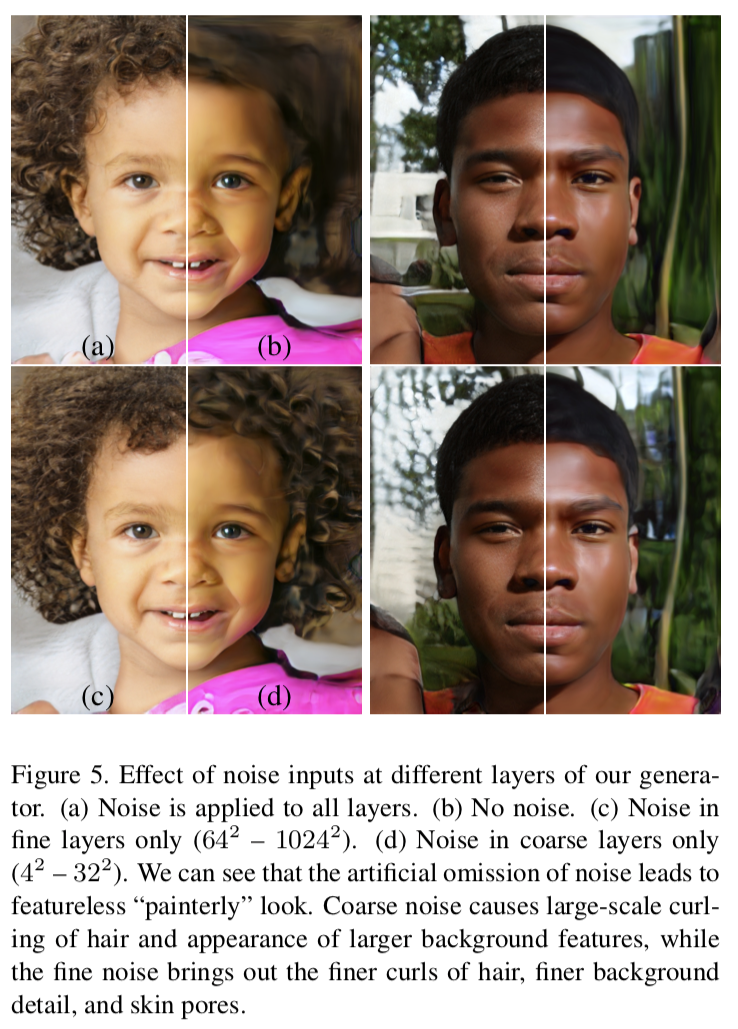

A Style-Based Generator Architecture for Generative Adversarial Networks

"Face-changing" with style variations~ The open-source code for this paper should be well worth looking forward to!

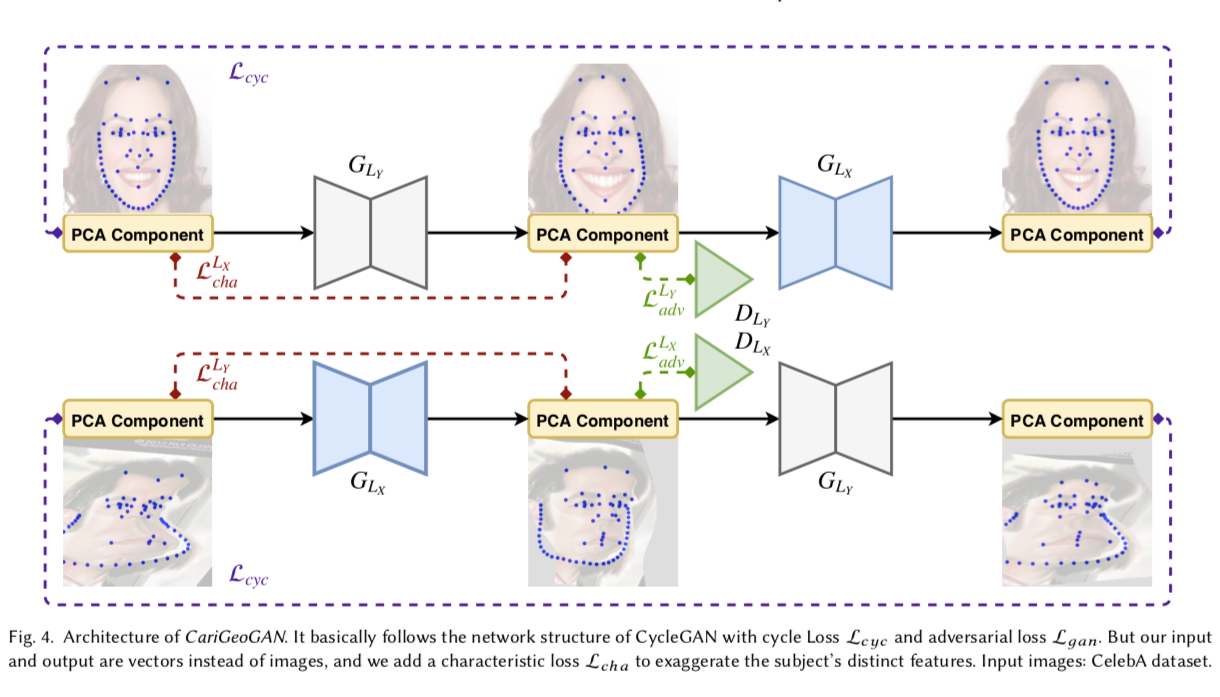

CariGANs: Unpaired Photo-to-Caricature Translation

Turning photos into cartoons...

Generalization and Equilibrium in Generative Adversarial Nets (GANs)

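Reposted from author Yi Zhang's answer on Zhihu: https://www.zhihu.com/question/60374968/answer/189371146

My advisor's talk at Simons: https://www.youtube.com/watch?v=V7TliSCqOwI

This should be the first work to seriously study theoretical guarantees of GANs.

The techniques used are fairly simple, but they lead to some insightful conclusions:

Given only training samples, without knowing the true training data distribution, does the generator's distribution converge to the true training data distribution?

The answer is no. If the discriminator has p parameters, a generator using O(p log p) samples can fool the discriminator, even when there are infinitely many training data.

This is highly counterintuitive, because for typical learning machines, getting more data always helps.

Almost all GAN papers mention that the GAN training procedure is a two-player game and that it computes a Nash equilibrium, but nobody asks whether this equilibrium exists.

It is well known that for pure strategies, an equilibrium doesn't always exist. Unfortunately, the GAN formulation uses exactly pure strategies.

Naturally, we thought of extending GANs to mixed strategies, so that an equilibrium always exists.

In practice, we can only use finitely supported mixed strategies, i.e., training multiple generators and multiple discriminators simultaneously. With this method, we obtained a much higher inception score on CIFAR-10 than DCGAN.

By analyzing GANs' generalization, we found that the GAN training objective doesn't encourage diversity, which is why mode collapse is often observed. However, no paper so far has rigorously defined diversity or analyzed how severe mode collapse is in various models.

This paper does not discuss that point much. We have a follow-up paper that estimates the diversity of various GAN models experimentally, which will be posted on arXiv in the next day or two.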

Improved Techniques for Training GANs

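Training GANs is really a problem of finding a Nash equilibrium, but finding the Nash equilibrium of a high-dimensional, continuous, non-convex problem is very difficult.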
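A classic toy example shows why gradient-based search for a Nash equilibrium is fragile even in the simplest case (a standard illustration, not taken from the paper). In the bilinear game f(x, y) = x * y, player x minimizes and player y maximizes; the unique equilibrium is (0, 0), but simultaneous gradient descent-ascent multiplies the squared distance from it by (1 + lr^2) at every step.

```python
import math

def simultaneous_gda(x, y, lr=0.1, steps=100):
    """Simultaneous gradient descent-ascent on f(x, y) = x * y.

    Player x does gradient descent, player y does gradient ascent,
    both using gradients evaluated at the current point.
    """
    for _ in range(steps):
        gx, gy = y, x                     # df/dx = y, df/dy = x
        x, y = x - lr * gx, y + lr * gy   # both players move at once
    return x, y

x, y = simultaneous_gda(1.0, 1.0)
print(math.hypot(x, y))  # distance from the equilibrium has grown
```

Starting at distance sqrt(2) from the equilibrium, 100 steps with lr = 0.1 end at distance sqrt(2) * 1.01^50: the iterates spiral outward instead of converging.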

The GAN Landscape: Losses, Architectures, Regularization, and Normalization

Saw that Goodfellow personally retweeted this paper~~

A very important review; I plan to write a Paper Summary.

Label-Noise Robust Generative Adversarial Networks

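The authors propose a new type of GAN, the label-noise robust GAN (rGAN), which incorporates a noise transition model so that it can learn a clean-label conditional generative distribution even when the labels are noisy.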
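The noise transition idea can be sketched as a matrix applied to class probabilities. This assumes a known transition matrix T, where T[i][j] is the probability that clean label i is observed as noisy label j; how rGAN estimates T or wires it into the discriminator is not reproduced here, and the function name is hypothetical.

```python
def noisy_label_posterior(clean_posterior, transition):
    """p(noisy = j | x) = sum_i p(clean = i | x) * T[i][j].

    clean_posterior: list of clean-class probabilities for one input.
    transition[i][j]: probability that clean label i is observed as label j.
    """
    num_noisy = len(transition[0])
    return [
        sum(clean_posterior[i] * transition[i][j] for i in range(len(clean_posterior)))
        for j in range(num_noisy)
    ]
```

A model can then predict clean labels internally while its outputs, passed through T, are compared against the observed noisy labels.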

Large Scale GAN Training for High Fidelity Natural Image Synthesis

Goodfellow reposted this BigGAN paper!

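Trains GANs on ImageNet at the largest scale yet and studies the instabilities that arise at this scale. The Inception Score reportedly improves by more than 100, and it applies an "orthogonal regularization" technique~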
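The orthogonal regularization used in BigGAN, as I understand it, is a relaxed version that penalizes only the off-diagonal entries of W^T W (the pairwise similarity between filters) without constraining their norms: R_β(W) = β ||W^T W ⊙ (1 − I)||_F^2, where 1 is the all-ones matrix. Below is a plain-Python sketch for a small matrix given as a list of rows; the default β = 1e-4 is an assumption.

```python
def orthogonal_regularization(weight, beta=1e-4):
    """beta * || W^T W * (1 - I) ||_F^2 for W given as a list of rows.

    (W^T W)[i][j] is the dot product of columns i and j. Masking with
    (1 - I) drops the diagonal, so column norms are left unconstrained.
    """
    cols = len(weight[0])
    penalty = 0.0
    for i in range(cols):
        for j in range(cols):
            if i == j:
                continue  # diagonal entries are masked out
            gram_ij = sum(row[i] * row[j] for row in weight)
            penalty += gram_ij ** 2
    return beta * penalty
```

Mutually orthogonal columns give zero penalty even when their norms differ, which is what makes this version milder than full orthogonality (W^T W = I).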

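It also builds on Spectral Normalization (2018)!

In addition, the appendix thoughtfully includes a page of negative results, a kind of "mistake notebook"... Such attentive and caring authors!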

Paper Summary

Seamless Nudity Censorship: an Image-to-Image Translation Approach based on Adversarial Training

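[Using GANs to automatically "dress" nude women in bikinis]

So many people have expressed admiration and curiosity about this paper's stunning experimental results~ I'll go take a look too...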

Language GANs Falling Short

The Paper Summary for this paper is especially well written!

This paper’s high-level goal is to evaluate how well GAN-type structures for generating text are performing, compared to more traditional maximum likelihood methods. In the process, it zooms into the ways that the current set of metrics for comparing text generation fail to give a well-rounded picture of how models are performing.

In the old paradigm of maximum likelihood estimation, models were both trained and evaluated on maximizing the likelihood of each word, given the prior words in a sequence. That is, models were good when they assigned high probability to true tokens, conditioned on past tokens. However, GANs work in a fundamentally new framework, in that they aren’t trained to increase the likelihood of the next (ground truth) word in a sequence, but to generate a word that will make a discriminator more likely to see the sentence as realistic. Since GANs don’t directly model the probability of token t, given prior tokens, you can’t evaluate them using this maximum likelihood framework.

This paper surveys a range of prior work that has evaluated GANs and MLE models on two broad categories of metrics, occasionally showing GANs to perform better on one or the other, but not really giving a way to trade off between the two.

The first type of metric, shorthanded as “quality”, measures how aligned the generated text is with some reference corpus of text: to what extent your generated text seems to “come from the same distribution” as the original. BLEU, a heuristic frequently used in translation and also leveraged here, measures how many of the n-grams in the generated text also occur in the reference text. N typically goes up to 4, so in addition to comparing the distributions of single tokens in the reference and generated text, BLEU also compares shared bigrams, trigrams, and 4-grams to measure more precise similarity of text.

The second metric, shorthanded as “diversity”, measures how different generated sentences are from one another. If you want to design a model to generate text, you presumably want it to be able to generate a diverse range of text: in probability terms, you want to fully sample from the distribution, rather than just taking the expected or mean value. Linguistically, a lack of diversity would show up as a generator that just generates the same sentence over and over again. This sentence can be highly representative of the original text, but lacks diversity. One metric used for this is the same kind of BLEU score, but for each generated sentence against a corpus of prior generated sentences; here, the goal is for the overlap to be as low as possible.
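Both metrics can be sketched with a small, unsmoothed sentence-level BLEU: clipped n-gram precision (each generated n-gram counts at most as often as it appears in a single reference), a geometric mean over n = 1..4, and a brevity penalty. Self-BLEU then scores each generated sentence against all the other generated sentences and averages. This is an illustrative sketch; published numbers usually come from established BLEU implementations with smoothing and corpus-level aggregation, which can give different values.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(hypothesis, references, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform weights over n = 1..max_n."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hypothesis, n)
        total = sum(hyp_counts.values())
        if total == 0:
            return 0.0
        # Clip each n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(c, max_ref[g]) for g, c in hyp_counts.items())
        if clipped == 0:
            return 0.0  # no smoothing: any zero precision gives BLEU = 0
        log_precision_sum += math.log(clipped / total)
    # Brevity penalty against the reference length closest to the hypothesis length.
    c = len(hypothesis)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1.0 - r / c)
    return bp * math.exp(log_precision_sum / max_n)

def self_bleu(generated, max_n=4):
    """Average BLEU of each generated sentence against all the others (lower = more diverse)."""
    scores = [
        sentence_bleu(hyp, generated[:i] + generated[i + 1:], max_n)
        for i, hyp in enumerate(generated)
    ]
    return sum(scores) / len(scores)
```

A generator that repeats one sentence gets a perfect Self-BLEU of 1.0 (worst diversity), even though each copy may score well on quality, which is exactly the tension described above.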

The trouble with these two metrics is that, in their raw state, they’re pretty incommensurable, and hard to trade off against one another.

For more, read the Paper Summary...

WaveGlow: A Flow-based Generative Network for Speech Synthesis

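A short paper from NVIDIA. The proposed real-time generative network WaveGlow combines features of Glow and WaveNet to achieve faster, more efficient, and more accurate speech synthesis.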

ClusterGAN : Latent Space Clustering in Generative Adversarial Networks

The results look stunning~!

Uses GANs for clustering!

NEMGAN: Noise Engineered Mode-matching GAN

A new GAN whose key feature is being fully unsupervised. It can do conditional generation as well as clustering.

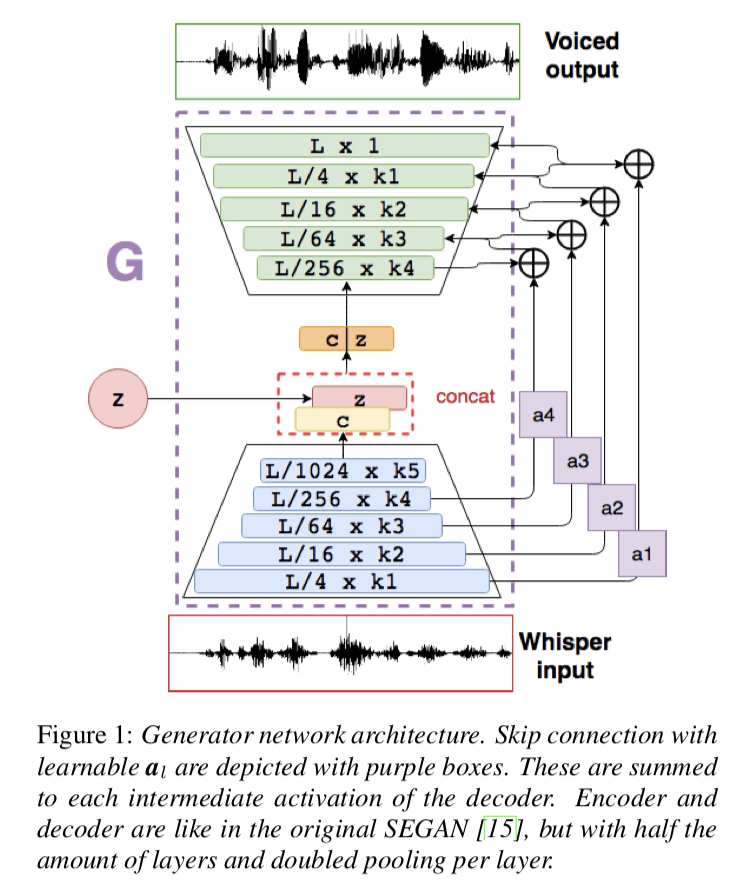

Whispered-to-voiced Alaryngeal Speech Conversion with Generative Adversarial Networks

This paper uses GANs for voiced speech restoration, building on speech enhancement using GANs (SEGAN), which the authors themselves proposed.

For me, there are two highlights:

Do Deep Generative Models Know What They Don't Know?

This DeepMind paper is a breath of fresh air, reminding us never to place blind faith in the results of generative models. Here is a good paper summary:

Generative models might not be as robust as we’ve come to believe.

The DeepMind paper concludes that researchers should be aware of deep generative models’ vulnerabilities and that they will require further improvements in this regard.

“In turn, we must then temper the enthusiasm with which we preach the benefits of generative models until their sensitivity to out-of-distribution inputs is better understood.”

Toward Convolutional Blind Denoising of Real Photographs

Blind denoising on real photographs! As I recall, it uses a GAN~

Time to dutifully prepare a Paper Summary!

Generative adversarial networks and adversarial methods in biomedical image analysis

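This paper reads very much like a review: it introduces GANs and adversarial methods (in the biomedical field) and also discusses the advantages and disadvantages of applying these techniques.

For me, this is a paper well suited for a quick introduction to GAN applications.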

Bias and Generalization in Deep Generative Models: An Empirical Study

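There were parts I didn't quite understand~

Still, the way this paper experimentally controls the training set is well worth learning from!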

The relativistic discriminator: a key element missing from standard GAN

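Based on the idea of the "Turing test", this paper proposes replacing the original discriminator of the standard GAN with a relativistic discriminator, making the generator converge faster and training more stable. In my view, RSGAN has a deeper significance; it could even be seen as having started a new school of GANs.

A paper interpretation from PaperWeekly: http://t.cn/EZg9Gj1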
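The relativistic standard GAN (RSGAN) losses can be sketched from raw critic logits C(x). Assuming real and fake samples are paired one-to-one, the discriminator estimates the probability that a real sample is more realistic than a fake one, sigmoid(C(x_r) - C(x_f)). Unlike the standard generator loss, the generator loss here also depends on the real data. The function names are hypothetical.

```python
import math

def softplus(t):
    """Numerically stable log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def rsgan_losses(c_real, c_fake):
    """Relativistic standard GAN losses from paired critic logits.

    L_D = -mean log sigmoid(C(x_r) - C(x_f))
    L_G = -mean log sigmoid(C(x_f) - C(x_r))
    Uses -log sigmoid(t) = softplus(-t).
    """
    n = len(c_real)
    loss_d = sum(softplus(-(r - f)) for r, f in zip(c_real, c_fake)) / n
    loss_g = sum(softplus(-(f - r)) for r, f in zip(c_real, c_fake)) / n
    return loss_d, loss_g
```

When the critic cannot rank real above fake (equal logits), both losses equal log 2; the generator lowers its loss by raising fake logits relative to real ones.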

Discriminator Rejection Sampling

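Discriminator rejection sampling (DRS): using the GAN discriminator to perform rejection sampling on a trained GAN generator.

One of the authors, Goodfellow, commented on this paper in a tweet: “The discriminator often knows something about the data distribution that the generator didn't manage to capture. By using rejection sampling, it's possible to knock out a lot of bad samples.”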
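The idea behind DRS can be sketched in its idealized form. If the discriminator were optimal, its logit would equal log(p_data(x) / p_gen(x)), so accepting each generated sample with probability exp(logit - max logit) turns generator samples into (approximately) data samples. The paper adds a stabilizing shift to the acceptance rule and estimates the bound from a large batch; those details are not reproduced here, and the function name is hypothetical.

```python
import math
import random

def rejection_sample(candidates, logits, rng=random):
    """Idealized discriminator rejection sampling.

    Assumes logits[i] = log(p_data(x_i) / p_gen(x_i)), as for an optimal
    discriminator. Accepts x_i with probability exp(logits[i] - max_logit),
    i.e. p_data / (M * p_gen) with M estimated as the largest ratio in this batch.
    """
    max_logit = max(logits)
    accepted = []
    for x, logit in zip(candidates, logits):
        if rng.random() < math.exp(logit - max_logit):
            accepted.append(x)
    return accepted
```

Samples the discriminator scores as far less realistic than the best one in the batch are almost always knocked out, which matches the intuition in the quote.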

Refacing: reconstructing anonymized facial features using GANs

A medical-domain paper on generating MRI images. For me, the only thing worth noting is that it evaluates CycleGAN's results using correlation coefficients and structural similarity indices (SSIMs).
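The two evaluation measures can be sketched in plain Python on flattened pixel lists: the Pearson correlation coefficient, and a single-window ("global") SSIM. Standard SSIM averages the same statistic over local Gaussian windows, so this simplified version, with the usual constants C1 = (0.01 L)^2 and C2 = (0.03 L)^2 for data range L, is an approximation rather than what the paper computed.

```python
import math

def _mean(values):
    return sum(values) / len(values)

def pearson_correlation(a, b):
    """Pearson correlation coefficient between two equal-length pixel lists."""
    ma, mb = _mean(a), _mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def global_ssim(a, b, data_range=1.0):
    """SSIM computed once over the whole image instead of over local windows."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    ma, mb = _mean(a), _mean(b)
    var_a = _mean([(x - ma) ** 2 for x in a])
    var_b = _mean([(y - mb) ** 2 for y in b])
    cov = _mean([(x - ma) * (y - mb) for x, y in zip(a, b)])
    return ((2 * ma * mb + c1) * (2 * cov + c2)) / (
        (ma ** 2 + mb ** 2 + c1) * (var_a + var_b + c2)
    )
```

Correlation ignores brightness and contrast shifts (a linear rescaling still scores 1.0), whereas SSIM also penalizes differences in mean and variance.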

The alignment problem is how to make sure that the input image in A is mapped (via image generation) to an analog image in B, where this analogy is not defined by training pairs or in any other explicit way (see Sec. 2).

Well, you are attempting to define it as "the alignment given by the lowest complexity meme".

I think this doesn't address the question, which applies more generally to the whole field of cross-domain mapping. Why aren't the new neural network approaches compared with previous approaches to unlabelled cross-domain mapping, which can be formalized as different forms of alignment between sets of points? I think the main difference here is that in those approaches you typically know the whole target domain, while here we don't quite know it; we just have a few samples from it. But I feel this is a surmountable problem.

Generative adversarial nets (GAN), DCGAN, CGAN, InfoGAN

GAN

Toward Multimodal Image-to-Image Translation

New thing

Adversarial networks provide a strong algorithmic framework for building unsupervised learning models that incorporate properties such as common sense, and we believe that continuing to explore and push in this direction gives us a reasonable chance of succeeding in our quest to build smarter AI.

This demonstration of unsupervised generative models learning object attributes like scale, rotation, position, and semantics was one of the first.

DCGANs are also able to identify patterns and put similar representations together in some dimension space.

Practically, this property of adversarial networks translates to better, sharper generations and higher-quality predictive models.

The adversarial network learns its own cost function — its own complex rules of what is correct and what is wrong — bypassing the need to carefully design and construct one.

This cost function forms the basis of what the neural network learns and how well it learns. A traditional neural network is given a cost function that is carefully constructed by a human scientist.

While previous attempts to use CNNs to train generative adversarial networks were unsuccessful, when we modified their architecture to create DCGANs, we were able to visualize the filters the networks learned at each layer, thus opening up the black box.

This type of optimization is difficult, and if the model weren't stable, we would not find this center point.

Instead of having a neural network that takes an image and tells you whether it's a dog or an airplane, it does the reverse: It takes a bunch of numbers that describe the content and then generates an image accordingly.

An adversarial network has a generator, which produces some type of data — say, an image — from some random input, and a discriminator, which gets input either from the generator or from a real data set and has to distinguish between the two — telling the real apart from the fake.

Would you have me argue that man is entitled to liberty? that he is the rightful owner of his own body? You have already declared it. Must I argue the wrongfulness of slavery? Is that a question for Republicans? Is it to be settled by the rules of logic and argumentation, as a matter beset with great difficulty, involving a doubtful application of the principle of justice, hard to be understood? How should I look to-day, in the presence of Americans, dividing, and subdividing a discourse, to show that men have a natural right to freedom?

In the following three paragraphs, the author uses question marks many times, strongly indicating that he has many questions left without a proper answer from the government, the church, and the law. This is deeply ironic, as he himself says after posing these questions: they seem obvious, yet those who support slavery cannot answer them.

I was born amid such sights and scenes. To me the American slave-trade is a terrible reality. When a child, my soul was often pierced with a sense of its horrors.

The author uses his own experience to demonstrate how serious the problem of slavery was at that time. Through the perspective of a child, readers can feel the emotions much more strongly, since even a child, without realizing how serious the actual conditions are, often feels a sense of horror.

God speed the year of jubilee The wide world o’er When from their galling chains set free, Th’ oppress’d shall vilely bend the knee, And wear the yoke of tyranny Like brutes no more. That year will come, and freedom’s reign, To man his plundered rights again Restore. God speed the day when human blood Shall cease to flow! In every clime be understood, The claims of human brotherhood, And each return for evil, good, Not blow for blow; That day will come all feuds to end. And change into a faithful friend Each foe. God speed the hour, the glorious hour, When none on earth Shall exercise a lordly power, Nor in a tyrant’s presence cower; But all to manhood’s stature tower, By equal birth! That hour will come, to each, to all, And from his prison-house, the thrall Go forth. Until that year, day, hour, arrive, With head, and heart, and hand I’ll strive, To break the rod, and rend the gyve, The spoiler of his prey deprive — So witness Heaven! And never from my chosen post, Whate’er the peril or the cost, Be driven.

Given the social context of the time, closing with a religious poem lends more credibility to his speech and would have made it more convincing to his audience.

The iron shoe, and crippled foot of China must be seen, in contrast with nature. Africa must rise and put on her yet unwoven garment. “Ethiopia shall stretch out her hand unto God.”

The author surprisingly mentions China in this paragraph, perhaps showing that his concern about racial problems extends not only to African Americans but also to other minority groups. He also implies that China and African countries will rise in the future.

Did this law concern the “mint, anise, and cumin” — abridge the right to sing psalms, to partake of the sacrament, or to engage in any of the ceremonies of religion, it would be smitten by the thunder of a thousand pulpits. A general shout would go up from the church, demanding repeal, repeal, instant repeal!

The author ironically points out the injustice of the law, even though the country claims to give people civil and religious liberty. He mentions "mint, anise, and cumin", trivial matters of religious observance: had the law touched these, the church would have demanded its repeal, yet they are the least important things compared with actual human rights. So, in the author's view, these lawmakers and politicians are hypocrites.

Where these are, man is not sacred. He is a bird for the sportsman’s gun. By that most foul and fiendish of all human decrees, the liberty and person of every man are put in peril. Your broad republican domain is hunting ground for men. Not for thieves and robbers, enemies of society, merely, but for men guilty of no crime. Your lawmakers have commanded all good citizens to engage in this hellish sport. Your President, your Secretary of State, our lords, nobles, and ecclesiastics, enforce, as a duty you owe to your free and glorious country, and to your God, that you do this accursed thing.

There is an excellent metaphor in this paragraph. The author compares slaves to birds for the sportsman's gun, with the sportsman standing for republicans and slaveholders. The republican domain is like the hunting ground for these birds, and the other people involved in this hellish business are the supporters of this horrible sport.

Architecture guidelines for stable Deep Convolutional GANs:

- Replace any pooling layers with strided convolutions (discriminator) and fractional-strided convolutions (generator).
- Use batchnorm in both the generator and the discriminator.
- Remove fully connected hidden layers for deeper architectures.
- Use ReLU activation in the generator for all layers except for the output, which uses Tanh.
- Use LeakyReLU activation in the discriminator for all layers.

Concrete guidelines for DCGAN architecture
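The strided/fractional-strided guideline implies simple size arithmetic: each layer halves (discriminator) or doubles (generator) the spatial size. This sketch assumes kernel size 4, stride 2, and padding 1, a common choice in public DCGAN implementations, and lays out a hypothetical 4×4 to 64×64 generator with ReLU in hidden layers and Tanh at the output. Batchnorm is left off the output layer, as the DCGAN paper does.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Output size of a strided convolution (discriminator downsampling, replaces pooling)."""
    return (size + 2 * padding - kernel) // stride + 1

def conv_transpose_out(size, kernel=4, stride=2, padding=1):
    """Output size of a fractional-strided (transposed) convolution (generator upsampling)."""
    return (size - 1) * stride - 2 * padding + kernel

def discriminator_sizes(start=64, layers=4):
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_out(sizes[-1]))
    return sizes

def generator_sizes(start=4, layers=4):
    sizes = [start]
    for _ in range(layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes

def generator_plan(start=4, layers=4):
    """Hypothetical layer plan: (output size, normalization, activation) per generator layer."""
    plan = []
    for i, size in enumerate(generator_sizes(start, layers)[1:]):
        is_output = i == layers - 1
        plan.append((size, "none" if is_output else "batchnorm", "tanh" if is_output else "relu"))
    return plan

print(generator_sizes())      # [4, 8, 16, 32, 64]
print(discriminator_sizes())  # [64, 32, 16, 8, 4]
```

Because no pooling or fully connected hidden layers are involved, the whole resolution schedule is determined by these two formulas.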

Adversarial Variational Bayes: Unifying Variational Autoencoders and Generative Adversarial Networks

Adversarial Feature Learning