I like GitLab

Summary: I Like GitLab

- Initial Adoption: The author originally chose GitLab because it offered free private repositories when GitHub still charged for them, leading to a long-term workflow integration.

- Integrated Container Registry: One of the most valued features is the built-in Docker registry, which eliminates the need for separate accounts, external access tokens, and concerns about Docker Hub pull limits.

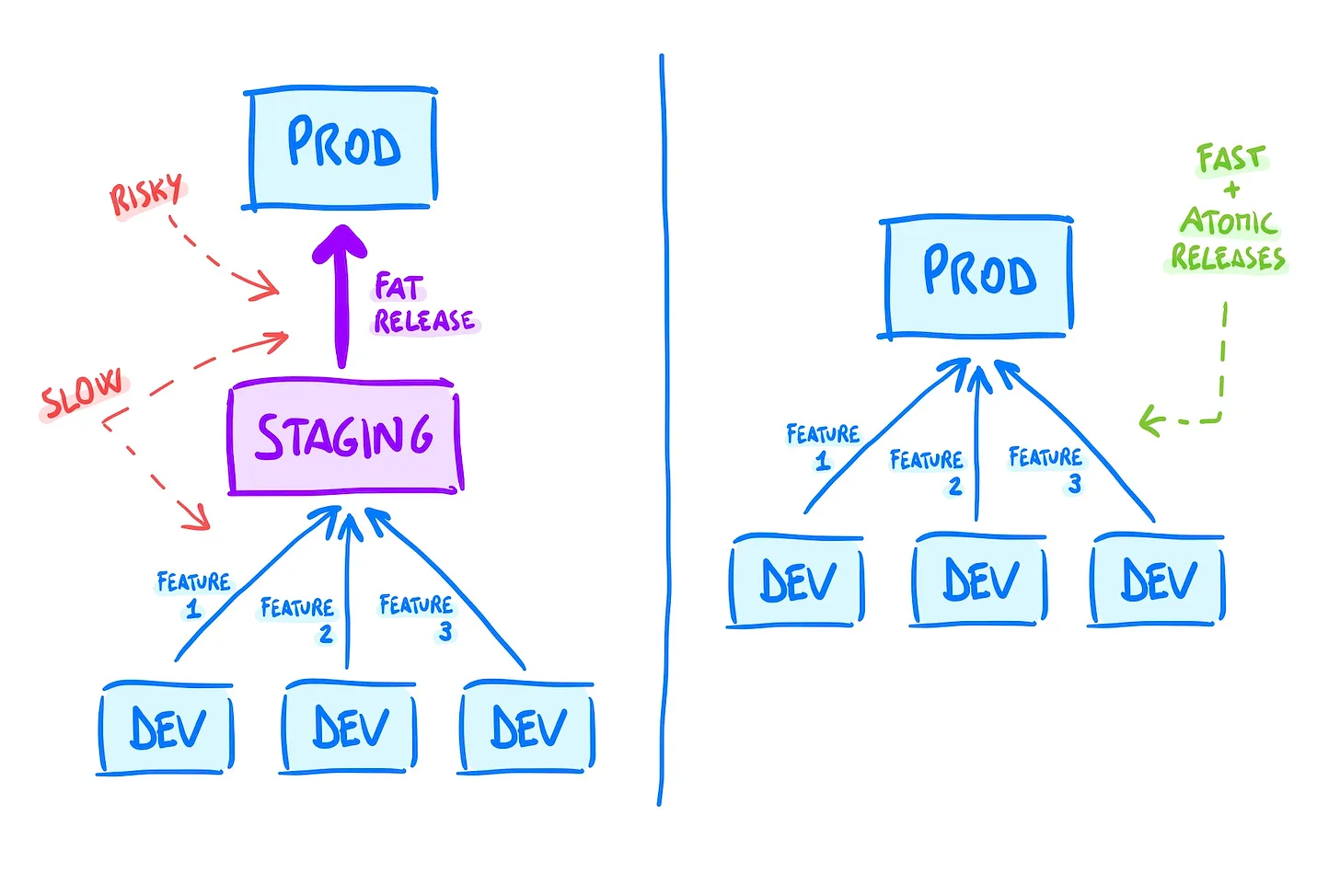

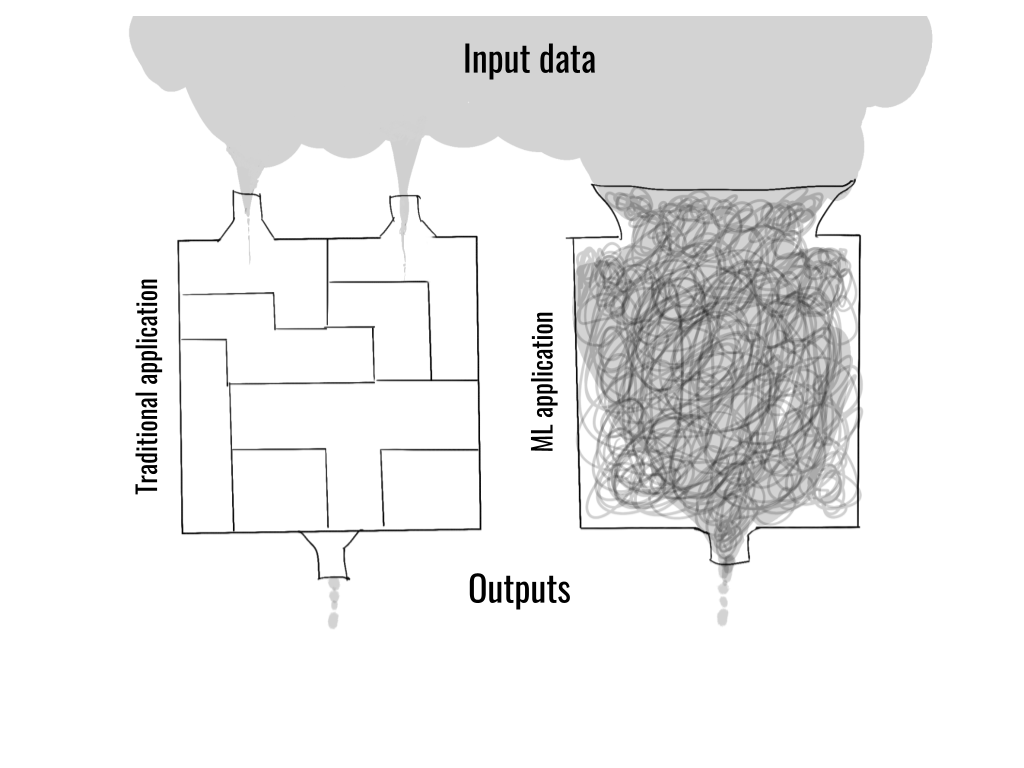

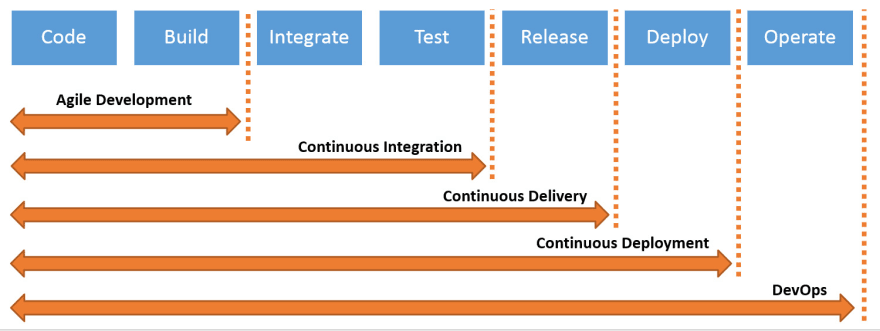

- CI/CD Maturity: GitLab's "config as code" approach (.gitlab-ci.yml) is praised because the pipeline definition is versioned with the repo. The documentation is extensive, though the sheer volume of options can be overwhelming.

- Runner Flexibility: Shared runners are reliable for free workloads, and the author finds setting up custom runners on private VPS instances straightforward.

- Performance Issues: The web interface is consistently described as sluggish compared to GitHub, creating "constant friction" during long sessions.

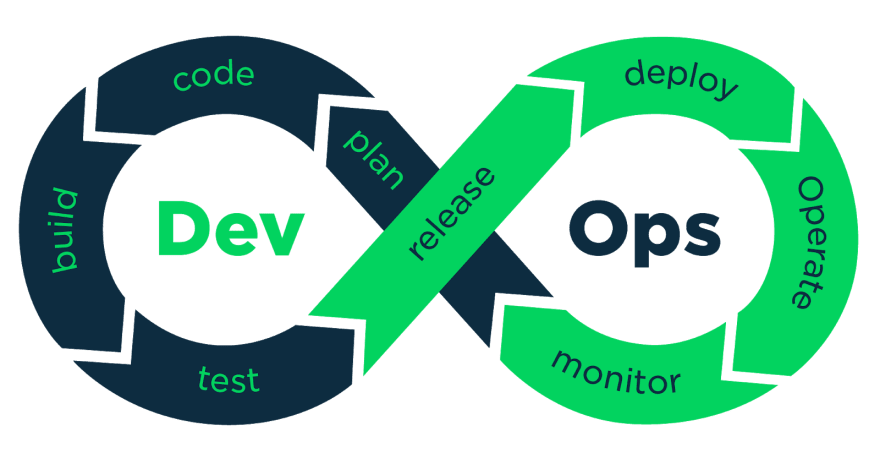

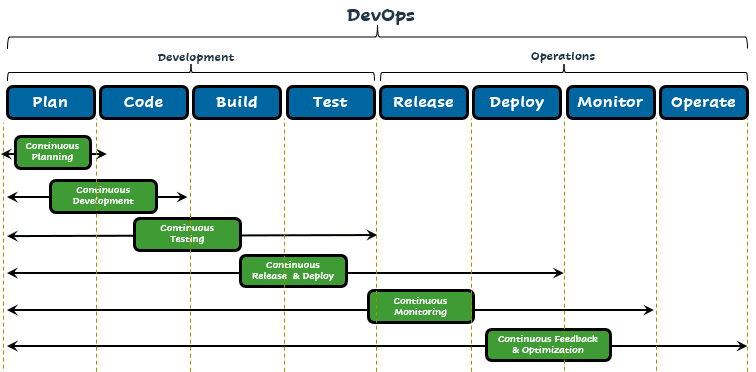

- Feature Bloat: GitLab attempts to be an all-in-one DevOps platform; while the author only uses about 10% of the features, they acknowledge the benefit of having advanced tools (like security scanning) available if needed.

- Workflow Split: The author uses GitLab as a "digital workshop" for private, messy experiments and reserves GitHub for public-facing collaboration and visibility.
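
The integrated registry point above can be made concrete with a small sketch. Inside a GitLab CI job, predefined variables such as CI_REGISTRY, CI_REGISTRY_IMAGE, CI_REGISTRY_USER, and CI_REGISTRY_PASSWORD are provided by the platform, which is why no separate account or external token is needed. The helper names below (`registry_image_ref`, `docker_commands`) are hypothetical and not taken from the original post; this is one possible way to assemble the commands, not the author's setup.

```python
import os


def registry_image_ref(env=None):
    """Build an image reference for the project's built-in registry.

    Assumes the code runs in a GitLab CI job, where CI_REGISTRY_IMAGE
    and CI_COMMIT_SHORT_SHA are predefined variables.
    """
    env = os.environ if env is None else env
    image = env["CI_REGISTRY_IMAGE"]
    # Fall back to "latest" when no commit SHA is available (illustrative choice).
    tag = env.get("CI_COMMIT_SHORT_SHA", "latest")
    return f"{image}:{tag}"


def docker_commands(env=None):
    """Return the docker invocations a job might run, as argument lists.

    The login password (CI_REGISTRY_PASSWORD) is expected on stdin,
    so it never appears in the argument list.
    """
    env = os.environ if env is None else env
    ref = registry_image_ref(env)
    return [
        ["docker", "login", "-u", env["CI_REGISTRY_USER"],
         "--password-stdin", env["CI_REGISTRY"]],
        ["docker", "build", "-t", ref, "."],
        ["docker", "push", ref],
    ]
```

Each argument list could be passed to `subprocess.run`, with CI_REGISTRY_PASSWORD supplied as input to the login step. Returning the commands instead of executing them keeps the sketch easy to inspect outside a CI job.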

Hacker News Discussion

- Corporate Shift & Quality: Several users noted that since its IPO, GitLab seems to prioritize "enterprise checklist" features and AI over fixing long-standing bugs and improving general UI polish.

- The "Sluggishness" Debate: A major point of discussion was GitLab's slow performance. Some attribute this to the "Ruby on Rails tax," though others pointed out that GitHub and Shopify also use Rails successfully, suggesting the issue lies in GitLab's specific architecture.

- Rise of Alternatives: Many commenters mentioned switching to Forgejo or Gitea for self-hosting, citing significantly lower resource requirements (up to 90% less) and near-instant page loads.

- The "80/20" Problem: Critics argued that GitLab often builds 80% of a feature to satisfy marketing requirements but leaves the remaining 20% of "polish" unfinished, leading to a "meme" of finding 5-year-old open bug reports for basic issues.

- Storage Exploits: A technical side-discussion covered the 10GB project limit. Users noted that the limit often appears to apply per layer rather than to the registry as a whole, so it can sometimes be bypassed for very large images.

- Website Appreciation: Many participants took a tangent to praise the blog's design, specifically its minimalist, terminal-like aesthetic and "markdown-as-markdown" presentation.
