I would argue that "whole tree" thinking is enhanced by --follow being the default. What I mean is when I want to see the history of the code within a file, I really don't usually care whether the file was renamed or not, I just want to see the history of the code, regardless of renames. So in my opinion it makes sense for --follow to be the default because I don't care about individual files; --follow helps me to ignore individual file renames, which are usually pretty inconsequential.

- Apr 2025

-

stackoverflow.com stackoverflow.com

-

-

book.originit.top book.originit.top

-

(validBytes - lastIndexEntry > indexIntervalBytes)

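During recover, the index is reset and then every batch is traversed to add index entries. This avoids a problem from the original write path: when several batches were written in a single append, at most one index entry was added for all of them, which made lookups inefficient.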
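The note above can be sketched roughly as follows. This is a simplified model, not Kafka's actual code: `Batch`, `SparseIndex`, and the `recover` function here are hypothetical stand-ins, and only the interval condition is taken from the quoted expression.

```python
from dataclasses import dataclass, field


@dataclass
class Batch:
    base_offset: int  # offset of the first message in the batch
    size_bytes: int   # size of the batch on disk


@dataclass
class SparseIndex:
    # Hypothetical stand-in for an offset index: (offset, byte position) pairs.
    entries: list = field(default_factory=list)

    def reset(self):
        self.entries.clear()

    def append(self, offset, position):
        self.entries.append((offset, position))


def recover(batches, index, index_interval_bytes):
    """Rebuild the sparse index batch by batch; return the valid byte count."""
    index.reset()
    valid_bytes = 0
    last_index_entry = 0
    for batch in batches:
        # Same condition as the quoted expression: add an entry once enough
        # bytes have accumulated since the previous index entry.
        if valid_bytes - last_index_entry > index_interval_bytes:
            index.append(batch.base_offset, valid_bytes)
            last_index_entry = valid_bytes
        valid_bytes += batch.size_bytes
    return valid_bytes


# Ten made-up batches of 1000 bytes each, indexed roughly every 2500 bytes.
batches = [Batch(base_offset=i * 10, size_bytes=1000) for i in range(10)]
index = SparseIndex()
total = recover(batches, index, index_interval_bytes=2500)
```

Because the check runs once per batch, a large multi-batch write ends up with several index entries after recovery instead of one.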

-

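The recover method

When does recover run?

On shutdown, Kafka flushes segments to disk and maintains the checkpoint and the .kafka_cleanshutdown file, one per partition. On restart, these are checked to decide whether recovery is needed.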

-

- Jul 2024

-

www.drupal.org www.drupal.org

-

Users working with Microsoft Office 365, and therefore Outlook, noticed especially often that login was not possible. Closer analysis showed that the MS/Bing crawlers are particularly persistent and repeatedly call the reset links, regardless of server configuration or the like. For this reason, a text field was implemented in the backend via the Drupal State API, in which selected user agents (always one per line) can be entered. 'Shy One Time' checks requests against these; on a match, it redirects to the login form with a 302 status code and does not invalidate the reset link.

-

-

security.stackexchange.com security.stackexchange.com

-

I’ve implemented a form on the landing page that auto-submits (on DOMContentLoaded) and posts the token to the next page. Passwordless login is now working for my client despite their mail scanner.

-

- Apr 2024

-

start.digitalefitheid.nl start.digitalefitheid.nl

-

49:00 Voys has a company wiki. Their rule is: if you don't know something, look it up in the "oracle". Is it not in there? Then add it. ... You can also keep a log of decisions.

-

-

-

Rather than seeing document history as a linear sequence of versions, we could see it as a multitude of projected views on top of a database of granular changes

That would be nice.

As well as preserving where ops come from.

Screams to have gossip-about-gossip. I'm surprised people do not discover it.

-

Another approach is to maintain all operations for a particular document version in an ordered log, and to append new operations at the end when they are generated. To merge two document versions with logs 𝐿1 and 𝐿2 respectively, we scan over the operations in 𝐿1, ignoring any operations that already exist in 𝐿2; any operations that do not exist in 𝐿2 are applied to the 𝐿1 document and appended to 𝐿1 in the order they appear in 𝐿2.

This is much like Chronofold's subjective logs.

Do the docs need to be shared in full? The only thing we need is delta ops.
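A minimal sketch of the log merge described in the quote, under one stated assumption about direction: operations that the first log lacks are appended to it in the order they appear in the second log. The `(op_id, payload)` tuples and the name `merge_logs` are illustrative only, and actually applying each operation to the document is left out.

```python
def merge_logs(l1, l2):
    """Return l1 extended with every op from l2 that l1 does not have yet.

    Each op is an (op_id, payload) tuple. Ops already present in l1 are
    skipped; new ones keep the relative order they had in l2.
    """
    seen = {op_id for op_id, _ in l1}
    merged = list(l1)
    for op_id, payload in l2:
        if op_id in seen:
            continue  # already known, ignore
        merged.append((op_id, payload))
        seen.add(op_id)
    return merged


# Made-up operation IDs: "a*" from one member, "b*" from another.
l1 = [("a1", "insert H"), ("a2", "insert i")]
l2 = [("a1", "insert H"), ("b1", "bold 0..1"), ("b2", "insert !")]
merged = merge_logs(l1, l2)
merged_ids = [op_id for op_id, _ in merged]
```

Only the missing operations (`b1`, `b2`) need to travel between replicas, which is the point raised in the note above about sharing deltas rather than full documents.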

-

Peritext works by capturing a document’s edit history as a log of operations

How that works is not mentioned.

I guess ops are collected into logs, per member, based on their IDs.

One step short of having gossip-about-gossip.

-

- Nov 2023

-

Local file Local file

-

Splitting a “transaction” into a multistage pipeline of stream processors allows each stage to make progress based only on local data; it ensures that one partition is never blocked waiting for communication or coordination with another partition.

Event logs allow for gradual processing of events.

-

A subscriber periodically checkpoints the latest LSN it has processed to stable storage. When a subscriber crashes, upon recovery it resumes processing from the latest checkpointed LSN. Thus, a subscriber may process some events twice (those processed between the last checkpoint and the crash), but it never skips any events. Events in the log are processed at least once by each subscriber.
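The at-least-once behaviour described above can be illustrated with a small simulation. Everything here is an assumption made for the sketch: the `Subscriber` class, the in-memory `checkpoint` field standing in for stable storage, and the `crash_at` parameter used to simulate a failure.

```python
class Subscriber:
    def __init__(self, checkpoint_every):
        self.checkpoint_every = checkpoint_every
        self.checkpoint = 0   # stand-in for stable storage: next LSN to process
        self.processed = []   # every event handled, including repeats

    def run(self, log, crash_at=None):
        """Process from the last checkpoint; optionally crash before crash_at."""
        lsn = self.checkpoint
        while lsn < len(log):
            if crash_at is not None and lsn == crash_at:
                return False  # simulated crash: uncheckpointed progress is lost
            self.processed.append(log[lsn])
            lsn += 1
            if lsn % self.checkpoint_every == 0:
                self.checkpoint = lsn  # periodic checkpoint
        self.checkpoint = lsn
        return True


log = ["e0", "e1", "e2", "e3", "e4", "e5"]
sub = Subscriber(checkpoint_every=3)
sub.run(log, crash_at=5)  # crashes after e4, last checkpoint was at LSN 3
sub.run(log)              # recovery resumes from LSN 3
```

After recovery, `e3` and `e4` have been processed twice and nothing has been skipped, so any side effects of processing need to tolerate repeats.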

-

-

martin.kleppmann.com martin.kleppmann.com

-

Partially ordered event-based systems are well placed to support such branching-and-merging workflows, since they already make data changes explicit in the form of events, and their support for concurrent updates allows several versions of a dataset to coexist side-by-side.

Event-based systems allow for divergent views as first-class citizens.

-

in Git terms, one user can create a branch (a set of commits that are not yet part of the main document version), and another user can choose whether to merge it

Users are empowered to create composed views out of events of their choice.

I.e., collaboration as composition.

I.e., divergent views as first-class citizens.

-

compute the differences between versions of the document to visualise the change history

-

we can reconstruct the state of the document at any past moment in time
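One way to picture reconstructing a past state is to fold the event log up to a chosen timestamp. The event shape `(timestamp, kind, position, argument)` and the function `state_at` are invented for this sketch; the quoted paper does not prescribe them.

```python
def state_at(events, as_of):
    """Rebuild the document text from all events with timestamp <= as_of."""
    doc = ""
    for ts, kind, pos, arg in sorted(events, key=lambda e: e[0]):
        if ts > as_of:
            break
        if kind == "insert":      # arg is the text to insert at pos
            doc = doc[:pos] + arg + doc[pos:]
        elif kind == "delete":    # arg is the number of characters to remove
            doc = doc[:pos] + doc[pos + arg:]
    return doc


# A made-up edit history.
events = [
    (1, "insert", 0, "Hello"),
    (2, "insert", 5, " world"),
    (3, "delete", 0, 6),
]
```

Calling `state_at(events, 2)` replays only the first two events, while `state_at(events, 3)` gives the current text; diffing two such states is one way to visualise the change history.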

-

Moreover, if processing an event may have external side-effects besides updating a replica state – for example, if it may trigger an email to be sent – then the time warp approach requires some way of undoing or compensating for those side-effects in the case where a previously processed event is affected by a late-arriving event with an earlier timestamp. It is not possible to un-send an email once it has been sent, but it is possible to send a follow-up email with a correction, if necessary. If the possibility of such corrections is unacceptable, optimistic replication cannot be used, and SMR or another strongly consistent approach must be used instead. In many business systems, corrections or apologies arise from the regular course of business anyway [27], so maybe occasional corrections due to out-of-order events are also acceptable in practice.

-

If permanent deletion of records is required (e.g. to delete personal data in compliance with the GDPR right to be forgotten [62]), an immutable event log requires extra care.

-

In applications with a high rate of events, storing and replaying the log may be expensive

-

the level of indirection between the event log and the resulting database state adds complexity in some types of applications that are more easily expressed in terms of state mutations

-

it is less familiar to most application developers than mutable-state databases

-

it is easy to maintain several different views onto the same underlying event log if needed

-

If the application developers wish to change the logic for processing an event, for example to change the resulting database schema or to fix a bug, they can set up a new replica, replay the existing event log using the new processing function, switch clients to reading from the new replica instead of the old one, and then decommission the old replica [34].
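The replay step can be sketched as a fold over the same log with two different processing functions. The account-style events and the names `replay`, `apply_v1`, and `apply_v2` are hypothetical and only illustrate the idea of building a new replica with a changed schema.

```python
def replay(event_log, apply_event, initial):
    """Build a replica state by applying every event in log order."""
    state = initial
    for event in event_log:
        state = apply_event(state, event)
    return state


def apply_v1(balance, event):
    # Old processing logic: the replica is a single running balance.
    kind, amount = event
    return balance + amount if kind == "deposit" else balance - amount


def apply_v2(state, event):
    # New processing logic: a changed schema that also counts events.
    kind, amount = event
    delta = amount if kind == "deposit" else -amount
    return {"balance": state["balance"] + delta, "events": state["events"] + 1}


event_log = [("deposit", 100), ("withdraw", 30), ("deposit", 5)]
old_replica = replay(event_log, apply_v1, 0)
new_replica = replay(event_log, apply_v2, {"balance": 0, "events": 0})
```

The log itself is untouched; once `new_replica` has caught up, clients can be pointed at it and the old replica dropped.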

-

well-designed events often capture the intent and meaning of operations better than events that are a mere side-effect of a state mutation [68].

-

- Aug 2023

-

-

Before we move on to uploading an image, let’s give our users the ability to log out

-

- Mar 2023

-

-

There are two main reasons to use logarithmic scales in charts and graphs.

- respond to skewness towards large values / outliers by spreading out the data.

- show multiplicative factors rather than additive (ex: b is twice that of a).

The data values are spread out better with the logarithmic scale. This is what I mean by responding to skewness of large values.

In Figure 2 the difference is multiplicative. Since 2^7 = 2^6 × 2, we see that the revenues for Ford Motor are about double those for Boeing. This is what I mean by saying that we use logarithmic scales to show multiplicative factors.
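Both reasons can be checked with a few lines of arithmetic. The numbers below are placeholders, not the revenues from the article's figures: a skewed series to show the spreading effect, and the powers of two from the example to show that one step on a log2 axis means a factor of two.

```python
import math

# Reason 1: a skewed series becomes evenly spaced on a log10 axis.
skewed = [1, 10, 100, 1000]
positions = [math.log10(v) for v in skewed]

# Reason 2: equal distances on a log axis mean equal ratios.
a, b = 2**6, 2**7                    # placeholder values from the 2^6 vs 2^7 example
gap = math.log2(b) - math.log2(a)    # distance between the two points on a log2 axis
ratio = 2**gap                       # the factor that distance stands for
```

On a linear axis the first three values of `skewed` would be squeezed into the bottom tenth of the chart; on the log axis they sit one unit apart.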

-

-

www.graphpad.com www.graphpad.com

-

Using a logarithmic axis on a bar graph rarely makes sense.

-

- Feb 2023

-

www.ilpost.it www.ilpost.it

-

this onanistic relationship of mine with the Pet Shop Boys persisted.

Reading this article marked the first time I ever came across the term "onanistic", and more generally the concept of onanism. From then on, I have always used it in this sense rather than the literal one. In my case, I mainly had an onanistic relationship with astronomy, and I still have one with computing.

-

- Oct 2022

-

expert.cheekyscientist.com expert.cheekyscientist.com

-

Additionally, make sure to use both forward and side scatter on log scale when measuring microparticles or microbiological samples like bacteria. These types of particles generate dim scatter signals that are close to the cytometer’s noise, so it’s often necessary to visualize signal on a log scale in order to separate the signal from scatter noise.

-

- Sep 2022

-

stackoverflow.com stackoverflow.com

-

If we ever moved a file to a different location or renamed it, all its previous history is lost in git log, unless we specifically use git log --follow. I think usually, the expected behavior is that we'd like to see the past history too, not "cut off" after the rename or move, so is there a reason why git log doesn't default to using the --follow flag?

-

-

stackoverflow.com stackoverflow.com

-

Note: Git 2.6+ (Q3 2015) will propose that in command line: see "Why does git log not default to git log --follow?" Note: Git 2.6.0 has been released and includes this feature. Following path changes in the log command can be enabled by setting the log.follow config option to true as in: git config log.follow true

-

- Aug 2022

-

docs.nginx.com docs.nginx.com

-

Process the log file to determine the spread of data: cat /tmp/sslparams.log | cut -d ' ' -f 2,2 | sort | uniq -c | sort -rn | perl -ane 'printf "%30s %s\n", $F[1], "="x$F[0];'

-

- Jan 2022

-

hackernoon.com hackernoon.com

-

Most developers are familiar with MySQL and PostgreSQL. They are great RDBMSs and can be used to run analytical queries with some limitations. It’s just that most relational databases are not really designed to run queries on tens of millions of rows. However, there are databases specially optimized for this scenario: column-oriented DBMSs. One good example of such a database is ClickHouse.

How to use Relational Databases to process logs

-

Another format you may encounter is structured logs in JSON format. This format is simple to read by humans and machines. It also can be parsed by most programming languages
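As a small illustration of how little code JSON logs need, the sketch below parses one JSON object per line with the standard library. The field names (`ts`, `level`, `msg`) and the sample lines are assumptions for the example, not a fixed format.

```python
import json


def parse_json_logs(text):
    """Parse newline-delimited JSON log records, skipping malformed lines."""
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue  # not valid JSON, e.g. a stray plain-text line
    return records


# Made-up log content with one malformed line in the middle.
raw = """
{"ts": "2022-01-05T10:00:00Z", "level": "INFO", "msg": "started"}
this line is not JSON
{"ts": "2022-01-05T10:00:02Z", "level": "ERROR", "msg": "db timeout"}
"""
records = parse_json_logs(raw)
errors = [r["msg"] for r in records if r["level"] == "ERROR"]
```

Each record is a plain dictionary, so filtering by level or loading the rows into a database is straightforward.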

-

- Sep 2021

-

-

Ciccione, L., Sablé-Meyer, M., & Dehaene, S. (2021). Analyzing the misperception of exponential growth in graphs. PsyArXiv. https://doi.org/10.31234/osf.io/dah3x

-

-

kubernetes.io kubernetes.io

-

-

-

www.sumologic.com www.sumologic.com

-

- Jun 2021

-

devhints.io devhints.io

-

-

stackoverflow.com stackoverflow.com

-

From pretty format documentation: '%w([<w>[,<i1>[,<i2>]]])': switch line wrapping, like the -w option of git-shortlog[1]. And from shortlog: -w[<width>[,<indent1>[,<indent2>]]] Linewrap the output by wrapping each line at width. The first line of each entry is indented by indent1 spaces, and the second and subsequent lines are indented by indent2 spaces. width, indent1, and indent2 default to 76, 6 and 9 respectively. If width is 0 (zero) then indent the lines of the output without wrapping them.

-

- Feb 2021

-

www.globaldatinginsights.com www.globaldatinginsights.com

- Oct 2020

-

noeldemartin.com noeldemartin.com

-

stackoverflow.com stackoverflow.com

-

If you're using ggplot, you can use scales::pseudo_log_trans() as your transformation object. This will replace your -inf with 0.

-

- May 2020

-

github.com github.com

-

I originally did not use this approach because many pages that require translation are behind authentication that cannot/should not be run through these proxies.

-

-

docs.aws.amazon.com docs.aws.amazon.com

-

Amazon S3 event notifications are designed to be delivered at least once. Typically, event notifications are delivered in seconds but can sometimes take a minute or longer.

S3 event notifications can take minutes to arrive.

Also, CloudWatch does not support S3, but CloudTrail does.

-

- Apr 2020

-

psyarxiv.com psyarxiv.com

-

Romano, A., Sotis, C., Dominioni, G., & Guidi, S. (2020). COVID-19 Data: The Logarithmic Scale Misinforms the Public and Affects Policy Preferences [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/42xfm

-

-

keepass.info keepass.info

-

In the case of storing the data in log files, this is the case after seven days at the latest. Further storage is possible; in this case, the IP addresses of the users are erased or anonymized, so that an association of the calling client is no longer possible.

-

-

stackoverflow.com stackoverflow.com

-

- Events can self-trigger based on a schedule; alarms don't do this.

- Alarms invoke actions only for sustained changes.

- Alarms watch a single metric and respond to changes in that metric; events can respond to actions (such as a lambda being created or some other change in your AWS environment).

- Alarms can be added to CloudWatch dashboards, but events cannot.

- Events are processed by targets, with many more options than the actions an alarm can trigger.

Event vs Alarm

-

- Jan 2020

-

www.sthda.com www.sthda.com

-

For a given predictor (say x1), the associated beta coefficient (b1) in the logistic regression function corresponds to the log of the odds ratio for that predictor.

-

If the odds ratio is 2, then the odds that the event occurs (event = 1) are two times higher when the predictor x is present (x = 1) versus x is absent (x = 0).
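The relationship between the coefficient and the odds ratio can be verified numerically. The intercept and coefficient below are made-up values chosen so that the odds ratio comes out as 2; the helper names are illustrative.

```python
import math


def probability(b0, b1, x):
    """Logistic regression with one predictor: P(event = 1 | x)."""
    return 1 / (1 + math.exp(-(b0 + b1 * x)))


def odds(p):
    return p / (1 - p)


b0, b1 = -1.0, math.log(2)   # hypothetical fitted values; b1 is the log odds ratio

odds_present = odds(probability(b0, b1, 1))   # predictor present (x = 1)
odds_absent = odds(probability(b0, b1, 0))    # predictor absent (x = 0)
odds_ratio = odds_present / odds_absent
```

The ratio equals `math.exp(b1)` whatever the intercept is, which is why exponentiating a coefficient gives the odds ratio for that predictor.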

-

- Oct 2019

-

engineering.linkedin.com engineering.linkedin.com

-

It is an append-only, totally-ordered sequence of records ordered by time.

-

- Jul 2019

-

nbviewer.jupyter.org nbviewer.jupyter.org

-

Sidenote: Visually comparing estimated survival curves in order to assess whether there is a difference in survival between groups is usually not recommended, because it is highly subjective. Statistical tests such as the log-rank test are usually more appropriate.

-

- Mar 2019

-

www.thedevelopersconference.com.br www.thedevelopersconference.com.br

-

Can you visualize the health of your application?

Although the certification topics here do not cover exactly this subject, monitoring the health of a system and its applications is the mission of the DevOps professional. Pay attention to the topics:

701 Software Engineering 701.1 Modern Software Development (weight: 6)

and

705.2 Log Management and Analysis (weight: 4)

-

- Dec 2018

-

inst-fs-iad-prod.inscloudgate.net inst-fs-iad-prod.inscloudgate.net

-

Outliers: All data sets have an expected range of values, and any actual data set also has outliers that fall below or above the expected range. (Space precludes a detailed discussion of how to handle outliers for statistical analysis purposes, see: Barnett & Lewis, 1994 for details.) How to clean outliers strongly depends on the goals of the analysis and the nature of the data.

Outliers can be signals of unanticipated range of behavior or of errors.

-

Understanding the structure of the data: In order to clean log data properly, the researcher must understand the meaning of each record, its associated fields, and the interpretation of values. Contextual information about the system that produced the log should be associated with the file directly (e.g., “Logging system 3.2.33.2 recorded this file on 12-3-2012”) so that if necessary the specific code that generated the log can be examined to answer questions about the meaning of the record before executing cleaning operations. The potential misinterpretations take many forms, which we illustrate with encoding of missing data and capped data values.

Context of the data collection and how it is structured is also a critical need.

For example, coding missing info as "0" risks misinterpretation; coding it as NIL, NDN, or something else distinguishable from real data avoids that.
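A tiny numeric example of why the encoding of missing values matters. The dwell-time values are invented; the same five records are stored once with `0` and once with `None` for the two missing measurements.

```python
from statistics import mean

# Hypothetical dwell times in seconds; two measurements are missing.
dwell_zero = [12.0, 0, 8.0, 0, 10.0]         # missing coded as 0
dwell_none = [12.0, None, 8.0, None, 10.0]   # missing coded distinguishably

# With 0, the missing records silently drag the average down.
mean_zero = mean(dwell_zero)

# With None, they can be excluded explicitly (and counted).
present = [v for v in dwell_none if v is not None]
mean_none = mean(present)
missing_count = len(dwell_none) - len(present)
```

The zero-coded average is 6 seconds while the average over actual measurements is 10, and only the second encoding lets the analyst report how many values were missing.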

-

Data transformations: The goal of data-cleaning is to preserve the meaning with respect to an intended analysis. A concomitant lesson is that the data-cleaner must track all transformations performed on the data.

Changes to data during clean up should be annotated.

Incorporate meta data about the "chain of change" to accompany the written memo

-

Data Cleaning: A basic axiom of log analysis is that the raw data cannot be assumed to correctly and completely represent the data being recorded. Validation is really the point of data cleaning: to understand any errors that might have entered into the data and to transform the data in a way that preserves the meaning while removing noise. Although we discuss web log cleaning in this section, it is important to note that these principles apply more broadly to all kinds of log analysis; small datasets often have similar cleaning issues as massive collections. In this section, we discuss the issues and how they can be addressed. How can logs possibly go wrong? Logs suffer from a variety of data errors and distortions. The common sources of errors we have seen in practice include:

Common sources of errors:

• Missing events

• Dropped data

• Misplaced semantics (encoding log events differently)

-

In addition, real world events, such as the death of a major sports figure or a political event can often cause people to interact with a site differently. Again, be vigilant in sanity checking (e.g., look for an unusual number of visitors) and exclude data until things are back to normal.

Important consideration for temporal event RQs in refugee study -- whether external events influence use of natural disaster metaphors.

-

Recording accurate and consistent time is often a challenge. Web log files record many different timestamps during a search interaction: the time the query was sent from the client, the time it was received by the server, the time results were returned from the server, and the time results were received on the client. Server data is more robust but includes unknown network latencies. In both cases the researcher needs to normalize times and synchronize times across multiple machines. It is common to divide the log data up into “days,” but what counts as a day? Is it all the data from midnight to midnight at some common time reference point or is it all the data from midnight to midnight in the user’s local time zone? Is it important to know if people behave differently in the morning than in the evening? Then local time is important. Is it important to know everything that is happening at a given time? Then all the records should be converted to a common time zone.

Challenges of using time-based log data are similar to difficulties in the SBTF time study using Slack transcripts, social media, and Google Sheets
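The "what counts as a day?" question can be made concrete with two timestamps. The fixed UTC offsets and the event times are invented for the sketch (real logs would need proper time zone rules, including daylight saving), and `utc_day` and `local_day` are illustrative helper names.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets used as simple stand-ins for two users' local time zones.
UTC_MINUS_8 = timezone(timedelta(hours=-8))
UTC_PLUS_9 = timezone(timedelta(hours=9))

events = [
    datetime(2012, 12, 3, 23, 30, tzinfo=UTC_MINUS_8),  # late evening for this user
    datetime(2012, 12, 4, 8, 0, tzinfo=UTC_PLUS_9),     # morning for this user
]


def utc_day(ts):
    """Day bucket after converting to a common reference time zone."""
    return ts.astimezone(timezone.utc).date()


def local_day(ts):
    """Day bucket in the user's own time zone."""
    return ts.date()


utc_days = [utc_day(ts).isoformat() for ts in events]
local_days = [local_day(ts).isoformat() for ts in events]
```

The two bucketings disagree on both events, so the choice has to follow the research question: local days for morning-versus-evening behaviour, a common zone for what happened at a given moment.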

-

Log Studies collect the most natural observations of people as they use systems in whatever ways they typically do, uninfluenced by experimenters or observers. As the amount of log data that can be collected increases, log studies include many different kinds of people, from all over the world, doing many different kinds of tasks. However, because of the way log data is gathered, much less is known about the people being observed, their intentions or goals, or the contexts in which the observed behaviors occur. Observational log studies allow researchers to form an abstract picture of behavior with an existing system, whereas experimental log studies enable comparisons of two or more systems.

Benefits of log studies:

• Complement other types of lab/field studies

• Provide a portrait of uncensored behavior

• Easy to capture at scale

Disadvantages of log studies:

• Lack of demographic data

• Non-random sampling bias

• Provide info on what people are doing but not their "motivations, success or satisfaction"

• Can lack needed context (software version, what is displayed on screen, etc.)

Ways to mitigate: Collecting, Cleaning and Using Log Data section

-

Two common ways to partition log data are by time and by user. Partitioning by time is interesting because log data often contains significant temporal features, such as periodicities (including consistent daily, weekly, and yearly patterns) and spikes in behavior during important events. It is often possible to get an up-to-the-minute picture of how people are behaving with a system from log data by comparing past and current behavior.

Bookmarked for time reference.

Mentions challenges of accounting for time zones in log data.

-

An important characteristic of log data is that it captures actual user behavior and not recalled behaviors or subjective impressions of interactions.

Logs can be captured on client-side (operating systems, applications, or special purpose logging software/hardware) or on server-side (web search engines or e-commerce)

-

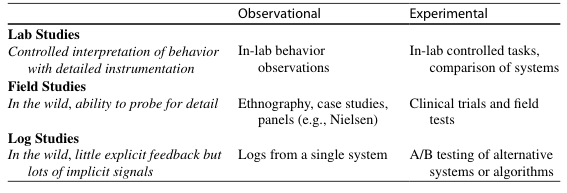

Table 1 Different types of user data in HCI research

-

Large-scale log data has enabled HCI researchers to observe how information diffuses through social networks in near real-time during crisis situations (Starbird & Palen, 2010), characterize how people revisit web pages over time (Adar, Teevan, & Dumais, 2008), and compare how different interfaces for supporting email organization influence initial uptake and sustained use (Dumais, Cutrell, Cadiz, Jancke, Sarin, & Robbins, 2003; Rodden & Leggett, 2010).

Wide variety of uses of log data

-

Behavioral logs are traces of human behavior seen through the lenses of sensors that capture and record user activity.

Definition of log data

-

- Jan 2018

-

engineering.linkedin.com engineering.linkedin.com

-

The Log: What every software engineer should know about real-time data's unifying abstraction

-

- Mar 2017

-

educ-lak17.educ.sfu.ca educ-lak17.educ.sfu.ca

-

Presentation 6C2 (30 min): “Evolving a Process Model for Learning Analytics Implementation” (Practitioner Presentation) by Adam Cooper

Attended on Friday at the LAK17 conference.

-

- May 2015

-

research.microsoft.com research.microsoft.com

-

That is, the human annotators are likely to assign different relevance labels to a document, depending on the quality of the last document they had judged for the same query. In addition to manually assigned labels, we further show that the implicit relevance labels inferred from click logs can also be affected by anchoring bias. Our experiments over the query logs of a commercial search engine suggested that searchers’ interaction with a document can be highly affected by the documents visited immediately beforehand.

-