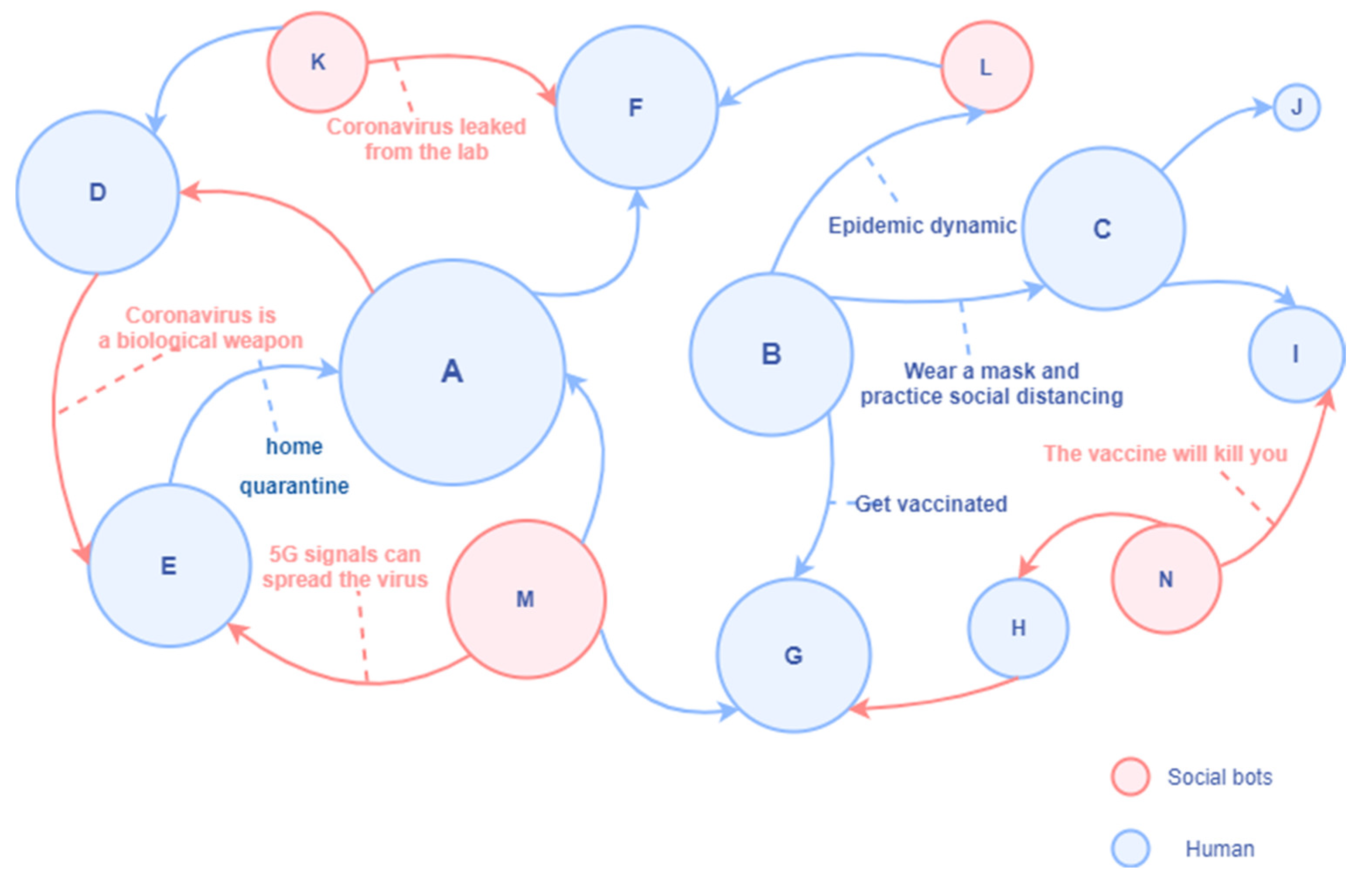

What we are facing is not, you know, like a Hollywood science fiction scenario of one big evil computer trying to take over the world. No, it's nothing like that. It's more like millions and millions of AI bureaucrats that are given more and more authority to make decisions about us.

for - futures - AI - millions of AI bots making decisions about us