https://en.wikipedia.org/wiki/Soundex

There is a close relationship between the Soundex indexing scheme and the scheme behind the Major System!!!

https://en.wikipedia.org/wiki/Soundex

There is a close relationship between the Soundex indexing scheme and the scheme behind the Major System!!!

Subqueries can be used in any place where a table can be used, for example in joins:

Because Read Committed mode starts each command with a new snapshot that includes all transactions committed up to that instant, subsequent commands in the same transaction will see the effects of the committed concurrent transaction in any case. The point at issue above is whether or not a single command sees an absolutely consistent view of the database.

SQL/PGQ reduces the difference in functionality between relational DBMSs and native graph DBMSs. Basically, this new feature makes it easier to query data in tables as if it were in a graph database, providing a possibly more intuitive alternative to writing complex join queries.

Unlike SQL designs that build on K/V stores, this allows to DSQL to do much of the heavy lifting of filtering and finding data right next to the data itself, on the storage replicas, without sacrificing scalability of storage or compute.

This is differeny to cockroachdb then. the KV doesn't really know how to do the filtering, aggregation, projection stuff, because it takes care of reliability instead, but presumably there is a performance cost

ELECT DISTINCT ON (customer) id, customer, total FROM purchases ORDER BY customer, total DESC, id;

The perfect index for the above query would be a multi-column index spanning all three columns in matching sequence and with matching sort order:

WITH recursive temp (n, fact) AS ( SELECT 0, 1 -- Initial Subquery UNION ALL SELECT n+1, (n+1)*fact FROM temp WHERE n < 9 -- Recursive Subquery ) SELECT * FROM temp;

select count(*) filter (where year <= 1945) as pre1945, count(*) filter (where year between 1946 and 1964) as period2, count(*) filter (where year between 1965 and 1974) as period3, ... from ... where ...;

https://web.archive.org/web/20240106230221/https://explainextended.com/2023/12/31/happy-new-year-15/

This seems a very good explainer for how LLMs and GPTs work. And all in 500 lines of sql :D

ON conflict (member_id) DO UPDATE SET

Writing json_populate_record in the FROM clause is good practice, since all of the extracted columns are available for use without duplicate function calls.

SELECT CNPJ, razaoSocialFROM EmpresaWHERE atividade = '4120-4/00

Nesse comando, a tabela "Empresa" é selecionada e as colunas "CNPJ" e "razaoSocial" são especificadas como resultado da consulta. A cláusula WHERE filtra apenas as linhas da tabela "Empresa" que têm a coluna "atividade" igual a '4120-4/00', ou seja, as empresas cuja atividade econômica principal é a construção de edifícios.

SELECT E.CNPJ, E.razaoSocialFROM Empresa EWHERE E.CNPJ NOT IN (SELECT T.CNPJFROM Tem TJOIN Caracteristica C ON (T.cod = C.cod)WHERE C.sigla = 'ESG')

O código SQL acima faz uma consulta em duas tabelas, Empresa e Tem, para retornar apenas as empresas que não têm a característica ESG (governança ambiental, social e corporativa).

A cláusula WHERE filtra as empresas que não estão presentes na subconsulta. A subconsulta retorna os CNPJs das empresas que têm a característica ESG, utilizando a tabela Tem, que relaciona as características com as empresas por meio dos campos CNPJ e cod. A cláusula JOIN é usada para juntar as tabelas Tem e Caracteristica.

Assim, a consulta principal retorna apenas os CNPJs e razões sociais das empresas que não estão na subconsulta, ou seja, que não têm a característica ESG.

Optimized for coding 💻

Most applications do not need to process massive amounts of data. This has led to a resurgence in data management systems with traditional architectures; SQLite, Postgres, MySQL are all growing strongly, while “NoSQL” and even “NewSQL” systems are stagnating.

SQL still shines over NoSQL

Richard was a contractor for Bath Iron Works working on software for the DDG-79 Oscar Austin.

In the Containers pane, expand all nodes of the package container hierarchy, and then clear all check boxes, including the Extract Sample Currency Data check box. Now select the check box for Extract Sample Currency Data to get only the events for this node.

When I completed this step, the nodes of the package container hierarchy did not have checkboxes. Also, the Extract Sample Currency Data checkbox did not respond to my clicks.

There was a message to clear the parent, but since the parent had no checkbox, I was unable to do so.

Drilling down resulted in checkboxes that "appear dimmed" as in the note below.

This appears to be a bug or at least a lapse in the documentation.

EDIT: We found a work-around. You can highlight the checkbox and tick it with the spacebar - but you can't select it with the mouse.

On the Lesson 3 root node, under Select the logs to use for the container: I was able to select the checkbox.

When I navigate deeper though, this option is unchecked and muted or grayed out with a message at the bottom reading:

"⚠️ To configure unique logging options for this container, enable logging for it in the tree view."

Mysql(多级分销)无限极数据库设计方案(邻接表,路径枚举,嵌套集,闭包表),并提供 嵌套集维持完整性程序 源码和SQL脚本

Hibernate在控制台打印sql语句以及参数

Spring Data JPA中多表联合查询最佳实践

常用的分布式事务解决方案有哪些?

数据库MVCC和隔离级别的关系是什么?

乐观锁和 MVCC 的区别?

SQL 语句的查询结果的的顺序是由哪些因素决定?

如何根据sql语句逆向生成回滚语句?

2020年,java持久层怎么选择?

为什么会有人写出几百行的SQL语句啊?这些人的心态是怎样的?为了凸显自己的强大吗?

现在比较好用的漏洞扫描和注入攻击的工具?

ORM是否必要?

从编程语言设计的角度,如何评价SQL语言?

如何优化加快 SQL 查询速度 ?

Post.in_order_of(:type, %w[Draft Published Archived]).order(:created_at).pluck(:name) which generates SELECT posts.name FROM posts ORDER BY CASE posts.type WHEN 'Draft' THEN 1 WHEN 'Published' THEN 2 WHEN 'Archived' THEN 3 ELSE 4 END ASC, posts.created_at ASC

Many popular SQL clients do not use SSL by default. If you aren’t deliberate about choosing encryption, the connection will be unencrypted.

Table with SQL clients and their default SSL mode:

select * from information_schema.innodb_trx where TIME_TO_SEC(timediff(now(),trx_started))>60

this application uses SQLAlchemy, an ORM library for Python that allows the developer to create schemas and queries using mostly database-independent Python constructs.

How if compared with SQLModel?

In my opinion , SQLite is easy/small to host on Cloud platform but PostgreSQL might be difficult if compared. For larger data , SQLite is not suitable.

How does PostgreSQL access a column? It will fetch the row and then dissect this tuple to calculate the position of the desired column inside the row. So if we want to access column #1000 it means that we have to figure out how long those first 999 columns before our chosen one really are. This can be quite complex. For integer we simply have to add 4, but in case of varchar, the operation turns into something really expensive.

Column order matters in PostgreSQL. The time to read 1000th column can be pretty large, especially if there are varchar columns before that.

alter column object type jsonb using object::jsonb;

convert type

Create the new empty table Write to both old and new table Copy data (in chunks) from old to new Validate consistency Switch reads to new table Stop writes to the old table Cleanup old table

7 steps required while migrating to a new table

COUNT(*)表示查询所有列的行数,要注意聚合的计算结果虽然是一个数字,但查询的结果仍然是一个二维表,只是这个二维表只有一行一列,并且列名是COUNT(*)

聚合操作我的理解是“给数据降维”

IF NOT EXISTS (SELECT * FROM spatial_ref_sys) THEN ⋮ ANALYZE spatial_ref_sys;

The lateral keyword allows us to access columns after the FROM statement, and reference these columns "earlier" in the query ("earlier" meaning "written higher in the query").

The difference between a non- lateral and a lateral join lies in whether you can look to the left hand table's row.

The comma ( , ) in the FROM clause is short notation for CROSS JOIN . LATERAL is assumed automatically for table functions.

LEFT OUTER JOIN First, an inner join is performed. Then, for each row in T1 that does not satisfy the join condition with any row in T2, a joined row is added with null values in columns of T2. Thus, the joined table always has at least one row for each row in T1.

A LATERAL item can appear at top level in the FROM list, or within a JOIN tree. In the latter case it can also refer to any items that are on the left-hand side of a JOIN that it is on the right-hand side of.

Unlike with most joins (IIUC), order is important with lateral joins. Weird. Why?

Maybe because it is equivalent to a cross join lateral (see example), and in an explicit cross join, you have a LHS and RHS?

This allows them to reference columns provided by preceding FROM items.

It is often particularly handy to LEFT JOIN to a LATERAL subquery, so that source rows will appear in the result even if the LATERAL subquery produces no rows for them.

Why not just use a join and group by? SELECT AA.ID, COUNT(B.ID) as no_tx, min(B.DATE) as fday_tx, max(B.DATE) as lday_tx, AA.start_date, AA.end_date FROM (SELECT ID, min(DATE) as start_date, max(DATE) as end_date FROM MAIN_TABLE WHERE CODE = 'drugA' GROUP BY ID ) AA LEFT JOIN MAIN_TABLE b ON b.CODE = 'drugB' AND b.DATE > AA.start_date AND b.DATE < AA.end_date GROUP BY AA.ID, AA.start_date, AA.end_date;

window functions: SELECT ID, SUM(CASE WHEN code = 'drugB' THEN 1 ELSE 0 END) as no_tx, MIN(CASE WHEN code = 'drugB' THEN DATE END) as fday_tx, MIN(CASE WHEN code = 'drugB' THEN DATE END) as lday_tx, start_date, end_date FROM (SELECT t.*, MIN(CASE WHEN code = 'drugA' THEN date END) as start_date, MAX(CASE WHEN code = 'drugB' THEN date END) as end_date FROM MAIN_TABLE t ) t WHERE code in ('drugA', 'drugB') AND date between start_date and end_date GROUP BY t.id;

The query to the right of the Lateral would be evaluated for every row of the left table.

SELECT lateral_subquery.* FROM posts JOIN LATERAL ( SELECT comments.* FROM comments WHERE (comments.post_id = posts.id) LIMIT 3 ) lateral_subquery ON true WHERE posts.id

You want the front page to show a few hundred posts along with the top three comments on each post. You’re planning on being very popular, so the front page will need to be very fast. How do you fetch that data efficiently from postgresql using Activerecord?

Making one Comment query per Post is too expensive; it’s N+1 queries (one to fetch the posts, N to fetch the comments). You could use includes to preload all the comments for all the posts, but that requires hydrating hundreds of thousands of records, even though you only need a few hundred for your front page. What you want is some kind of GROUP BY with a LIMIT on each group — but that doesn’t exist, either in Activerecord nor even in postgres. Postgres has a different solution for this problem: the LATERAL JOIN.

Inner Join Venn Diagram

Description of the problem: Select all users from a DB with their parents which have the association by parent_id column. Possible solution: Recursive Common Table Expression.

join = Arel::Nodes::NamedFunction.new('json_b_array_elements', [Arel::Nodes::SqlLiteral.new("subscriptions")]) .as(Arel::Nodes::NamedFunction.new('sd', [Arel::Nodes::SqlLiteral.new("subscription_data")]).to_sql) p = e.project( Arel::Nodes::SqlLiteral.new( Arel::Nodes::Grouping.new( Arel::Nodes::InfixOperation.new('->>', sd[:subscription_data], Arel::Nodes::SqlLiteral.new("'id'"))).to_sql) << '::uuid' ).where( Arel::Nodes::InfixOperation.new('->>', sd[:subscription_data], Arel::Nodes::SqlLiteral.new("'type'").eq( Arel::Nodes::SqlLiteral.new("'Company'") ) ).and(e[:slug].eq(event_slug))) p.join_sources << Arel::Nodes::StringJoin.new( Arel::Nodes::SqlLiteral.new('CROSS JOIN LATERAL')) << join

SELECT "users".* FROM "users" wHERE 'admin' = ANY("users"."roles")

any_role = Arel::Nodes::NamedFunction.new("ANY", [User[:roles]])

any

lateral (select pledged / fx_rate as pledged_usd) pu, lateral (select pledged_usd / backers_count as avg_pledge_usd) apu, lateral (select goal / fx_rate as goal_usd) gu, lateral (select goal_usd - pledged_usd as usd_from_goal) ufg, lateral (select (deadline - launched_at)/86400.00 as duration) dr

Comment[:article_id].in(Arel.sql(articles_sql))

It's redundant. The transaction commit or rollback can happen in the C# code or the sproc but not both.

c# / SQL transactions

So, what’s a better way to illustrate JOIN operations? JOIN diagrams!

Apparently, SQL should be taught using JOIN diagrams not Venn diagrams?

The most important part of this query is the with block. It's a powerful resource, but for this example, you can think of it as a "way to store a variable" that is the path of the contact you need to update, which will be dynamic depending on the record.

It just builds the path as '{1, value}', but we need to convert to text[] because that’s the type expected on the jsonb_path function.

In addition to SQLAlchemy core queries, you can also perform raw SQL queries

Instead of SQLAlchemy core query:

query = notes.insert()

values = [

{"text": "example2", "completed": False},

{"text": "example3", "completed": True},

]

await database.execute_many(query=query, values=values)

One can write a raw SQL query:

query = "INSERT INTO notes(text, completed) VALUES (:text, :completed)"

values = [

{"text": "example2", "completed": False},

{"text": "example3", "completed": True},

]

await database.execute_many(query=query, values=values)

=================================

The same goes with fetching in SQLAlchemy:

query = notes.select()

rows = await database.fetch_all(query=query)

And doing the same with raw SQL:

query = "SELECT * FROM notes WHERE completed = :completed"

rows = await database.fetch_all(query=query, values={"completed": True})

HAVING avg(score) < 0.75 * (SELECT avg(score) FROM performance_reviews)

When you are dealing with an aggregate of aggregates, it needs to be accomplished in two steps. This can be done using a subquery as the FROM clause, essentially giving us a temporary table to then select from, allowing us to find the average of those averages.

Is there a way to select from multiple custom tables using ActiveRecord QueryMethods? I'm trying to replicate this SQL query using Ruby's ActiveRecord Query Methods. select employee.emplid, address.location from (....) employee, (....) address where employee.emplid = address.emplid

over (order by effdt desc) prev

select ... over

This is an important one, as it will enable us to use the aggregate functions that we are familiar when dealing with relational databases, but in the otherwise counter-intuitive environment of JSON data.

database: query builder

like ActiveRecord for node

The clean way to call a set-returning function is LEFT [OUTER] JOIN LATERAL. This includes rows without children. To exclude those, change to a [INNER] JOIN LATERAL

FROM test x1 LEFT JOIN test x2 ON x1.id = (x2.data->>'parent')::INT;

Careful, Instead of != you may need to IS DISTINCT FROM operator which also compares NULL value

SELECT * FROM ( -- build virtual table of all hours between -- a date range SELECT start_ts, start_ts + interval '1 hour' AS end_ts FROM generate_series( '2017-03-01'::date, '2017-03-03'::timestamp - interval '1 hour', interval '1 hour' ) AS t(start_ts) ) AS cal LEFT JOIN ( -- build virtual table of uptimes SELECT * FROM ( VALUES ('2017-03-01 01:15:00-06'::timestamp, '2017-03-01 02:15:00-06'::timestamp), ('2017-03-01 08:00:00-06', '2017-03-01 20:00:00-06'), ('2017-03-02 19:00:00-06', null) ) AS t(start_ts, end_ts) ) AS uptime ON cal.end_ts > uptime.start_ts AND cal.start_ts <= coalesce(uptime.end_ts, current_timestamp)

This is not a problem if your DBMS supports SQL recursion: lots of data can be generated with a single query. The WITH RECURSIVE clause comes to the rescue.

WITH RECURSIVE can help you quickly generate a series of random data.

I prefer not to duplicate the name of the table in any of the columns (So I prefer option 1 above).

So do I.

SQL regular expressions are a curious cross between LIKE notation and common (POSIX) regular expression notation.

The Realm is a new database module that is improving the way databases are used and also supports relationships between objects. If you are part of the SQL development world, then you must be familiar with the Realm.

[:returning] (Postgres-only) An array of attributes that should be returned for all successfully inserted records. For databases that support INSERT ... RETURNING, this will default to returning the primary keys of the successfully inserted records. Pass returning: %w[ id name ] to return the id and name of every successfully inserted record or pass returning: false to omit the clause.

Comparison Time … 🤞

Brief comparison of 8 aspects between SQL vs NoSQL

NoSQL databases typically perform better and are easier to scale due to the nature of their data access and storage

WHERE clause introduces a condition on individual rows; HAVING clause introduces a condition on aggregations, i.e. results of selection where a single result, such as count, average, min, max, or sum, has been produced from multiple rows. Your query calls for a second kind of condition (i.e. a condition on an aggregation) hence HAVING works correctly. As a rule of thumb, use WHERE before GROUP BY and HAVING after GROUP BY. It is a rather primitive rule, but it is useful in more than 90% of the cases.

Difference between WHERE and HAVING in SQL:

WHERE is used for individual rowsHAVING is used for aggregations (result of a selection), such as: COUNT, AVERAGE, MIN, MAX or SUMWHERE before GROUP BY and HAVING after GROUP BY (works in 90% of the cases)Which database technology to choose

Which database to choose (advice from an Amazon employee):

1) Redash and Falcon focus on people that want to do visualizations on top of SQL2) Superset, Tableau and PowerBI focus on people that want to do visualizations with a UI3) Metabase and SeekTable focus on people that want to do quick analysis (they are the closest to an Excel replacement)

Comparison of data analysis tools:

1) Redash & Falcon - SQL focus

2) Superset, Tableau & PowerBI - UI workflow

3) Metabase & SeekTable - Excel like experience

Joins are not expensive. Who said it to you? As basically the whole concept of relational databases revolve around joins (from a practical point of view), these product are very good at joining. The normal way of thinking is starting with properly normalized structures and going into fancy denormalizations and similar stuff when the performance really needs it on the reading side. JSON(B) and hstore (and EAV) are good for data with unknown structure.

Another nice SQL script paired with CRON jobs was the one that reminded people of carts that was left for more than 48 hours. Select from cart where state is not empty and last date is more than or equal to 48hrs.... Set this as a CRON that fires at 2AM everyday, period with less activity and traffic. People wake up to emails reminding them about their abandoned carts. Then sit watch magic happens. No AI/ML needed here. Just good 'ol SQL + Bash.

Another example of using SQL + CRON job + Bash to remind customers of cart that was left (again no ML needed here)

I will write a query like select from order table where last shop date is 3 or greater months. When we get this information, we will send a nice "we miss you, come back and here's X Naira voucher" email. The conversation rate for this one was always greater than 50%.

Sometimes SQL is much more than enough (you don't need ML)

ACID stands for Atomicity (an operation either succeeds completely or fails, it does not leave partial data), Consistency (once an application performs an operation the results of that operation are visible to it in every subsequent operation), Isolation (an incomplete operation by one user does not cause unexpected side effects for other users), and Durability (once an operation is complete it will be preserved even in the face of machine or system failure).

ACID definition

The SELECT part should not contain any columns not referenced in GROUP BY clause, unless it is wrapped with an aggregate function.

Before SQL3 (1999), the selected fields must appear in the GROUP BY clause

Event.joins(:packages).having('array_agg(packages.type) @> array[?]', packages).group(:id)

This illustrates a pretty common challenge with joins: you want them to be used for some of the query but not for other of it.

Which to use? ANY is a later, more versatile addition, it can be combined with any binary operator returning a boolean value. IN burns down to a special case of ANY. In fact, its second form is rewritten internally: IN is rewritten with = ANY NOT IN is rewritten with <> ALL

Single quotes return text strings. Double quotes return (if you can really think of them as “returning” anything) identifiers, but with the case preserved.

if you care at all about storing timestamps in MySQL, store them as integers and use the UNIX_TIMESTAMP() and FROM_UNIXTIME() functions.

MySQL does not store offset

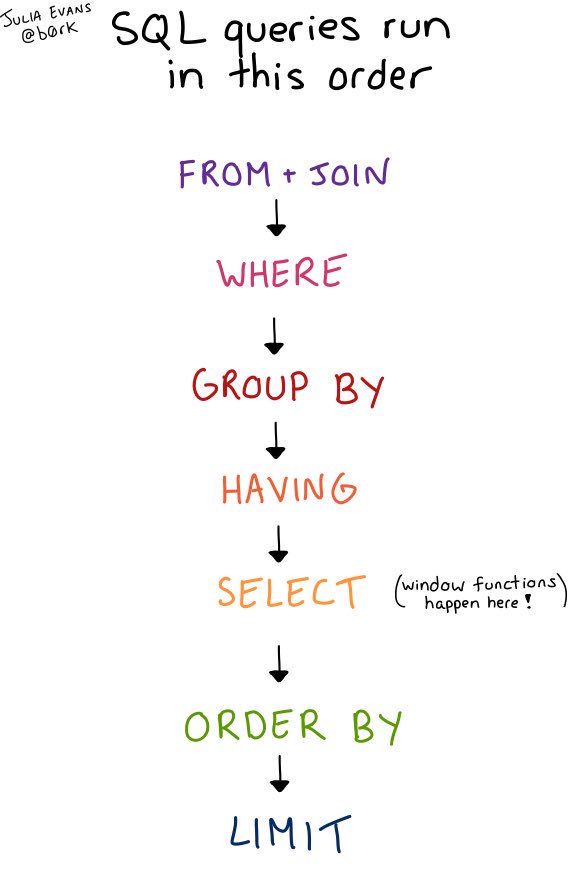

Database engines in practice don’t actually run queries by joining, and then filtering, and then grouping, because they implement a bunch of optimizations reorder things to make the query run faster as long as reordering things won’t change the results of the query

SQL queries are run by database engines in different order than we write them down

SELECT isn’t the first thing, it’s like the 5th thing!

Order of SQL queries:

FROM/JOIN and all the ON conditionsWHEREGROUP BYHAVINGSELECT (including window functions)ORDER BYLIMIT

* 1.

* 1. DBA Por Acaso: RDS, MySQL e Tuning para Iniciantes

Outro assunto que não é explicitamente coberto por nossos tópicos, mas que é base importante para o administrador de sistemas na nuvem - e aqui coberto em um nível introdutório, para não assustar ninguém. E procura por Cloud em https://wiki.lpi.org/wiki/DevOps_Tools_Engineer_Objectives_V1 para ver como esse assunto é importante!

SELECT sj.name , sja.* FROM msdb.dbo.sysjobactivity AS sja INNER JOIN msdb.dbo.sysjobs AS sj ON sja.job_id = sj.job_id WHERE sja.start_execution_date IS NOT NULL AND sja.stop_execution_date IS NULL

View current running jobs SQL

sys.database_mirroring

Check / View SQL Mirror settings

ALTER DATABASE dbName SET PARTNER TIMEOUT 20

Adjust SQL Mirror timeout for fail over

ALTER DATABASE SET trustworthy on

Database may have to me made trustworthy to initiate linked server connection

n the Advanced Security Settings dialog box, make sure that SELF is listed under Permission entries. If SELF is not listed, click Add, and then add SELF.Under Permission entries, click SELF, and then click Edit.In the Permission Entry dialog box, click the Properties tab.On the Properties tab, click This object only in the Apply onto list, and then click to select the check boxes for the following permissions under Permissions:Read servicePrincipalNameWrite servicePrincipalName

Permissions needed for AD account to write SPN name

rant delegation permission to the SQL Server service account domain user account.

Computer and SQL service accounts need to be grated delegation permissions in AD users and computers

The client and server computers must be part of the same Windows domain, or in trusted domains. A Service Principal Name (SPN) must be registered with Active Directory, which assumes the role of the Key Distribution Center in a Windows domain. The SPN, after it is registered, maps to the Windows account that started the SQL Server instance service. If the SPN registration has not been performed or fails, the Windows security layer cannot determine the account associated with the SPN, and Kerberos authentication will not be used.

2 main criteria for linked servers to pass through AD credentials

with recursive rnd_move(move) as ( select *, random() rnd from generate_series(1, 9) move ), winning_positions(a, b, c) as ( values (1, 2, 3), (4, 5, 6), (7, 8, 9), -- rows (1, 4, 7), (2, 5, 8), (3, 6, 9), -- cols (1, 5, 9), (3, 5, 7) -- diagonals ), game as ( select 'O' as who_next, ARRAY['.', '.', '.', '.', '.', '.', '.', '.', '.'] as board union ( select case when who_next = 'X' then 'O' else 'X' end as who_next, board[:move-1] || who_next || board[move+1:] from game, rnd_move where board[move] = '.' order by rnd limit 1 ) ), game_with_winner as ( select *, lag(a is not null) over () as finished, lag(who_next) over () as who from game left join winning_positions on board[a] != '.' and board[a] = board[b] and board[a] = board[c] ) select array_to_string(board[1:3] || chr(10) || board[4:6] || chr(10) || board[7:9] || chr(10), '') board, case when a is not null then who || ' wins' end as winner from game_with_winner where not finished;

hi there learn MSBI in 20 min with handwritten explanation on each and every topics on the Course with real time examples

hi there we have came up with the newly launched Procedural Query in PL sql with Oracle 12 c so please check this out For detailed Description on the Pl Sql from the scratch to advance level:-

sqlite> .mode column sqlite> .headers on

At the start of your session,these will format your sqlite3 output so it is clearer, and add columns headers.

select top 1 * from newsletters where IsActive = 1 order by PublishDate desc

This doesn't require a full table scan or a join operation. That's just COOL

PostgreSQL連接Python

python连接postgresql,非常简单,基本跟mylsql一样,通过cursor来执行

REQUIRED fields are no longer supported in Standard SQL. If you're using Standard SQL (as opposed to Legacy SQL), they recommend you change all your REQUIRED fields to NULLABLE.

significant.

So, there is a performance hit for using + or +=, but not enough for any reasonable string literal in code. Concatenating a long paragraph, use StringBuilder.

Todo o dado (valor atómico) pode ser acedido logicamente (e unicamente) usando o nome da tabela, o valor da chave primária da linha e o nome da coluna

NOME DA TABELA: VALOR CHAVE PRIMARIA: COLUNA

Um banco de dados relacional é um banco de dados que modela os dados de uma forma que eles sejam percebidos pelo usuário como tabelas, ou mais formalmente relações.

tabelas = relações

chaves estrangeiras

A chave estrangeira ocorre quando um atributo de uma relação for chave primária em outra relação. https://pt.wikipedia.org/wiki/Chave_estrangeira

chaves candidatas

identificador único que garante que nenhuma tupla será duplicada; isto faz com que seja criado um relacionamento em algo denominado multiconjunto, porque viola a definição básica de um conjunto. Uma chave pode ser composta, isto é, pode ser formada por vários atributos. https://pt.wikipedia.org/wiki/Chave_candidata

Uma relação é um conjunto desordenado de tuplas.

A relação determina o modo como cada registro de cada tabela se associa a registros de outras tabelas.

Na construção da tabela identificam-se os dados da entidade. A atribuição de valores a uma entidade constrói um registro da tabela.

entidade

conjunto de pares ordenados de domínio e nome que serve como um cabeçalho para uma relação.

Relvar

Os blocos básicos do modelo relacional são o domínio, ou tipo de dado.

todos os dados são representados como relações matemáticas

Uma relação é similar ao conceito de tabela e uma tupla é similar ao conceito de linha.

Uma tupla é um conjunto de atributos que são ordenados em pares de domínio e valor.

Num banco de dados relacional, quando um registro aponta para o outro, dependente deste, há de se fazer regras para que o registro "pai" não possa ser excluído se ele tiver "filhos" (as suas dependências).

Integridade referencial

Exercises for learning PostgreSQL.

SqlResultSetMapping

JPA SQL native query den join ile birden fazla nesne elde etme

2.3.2. Mapping native queries You can also map a native query (ie a plain SQL query). To achieve that, you need to describe the SQL resultset structure using @SqlResultSetMapping (or @SqlResultSetMappings if you plan to define several resulset mappings). Like @NamedQuery, a @SqlResultSetMapping can be defined at class level or in a JPA XML file. However its scope is global to the application.

JPA SQL native query den join ile birden fazla nesne elde etme

@SqlResultSetMappings( { @SqlResultSetMapping(name = "ProfessorWithAddress", entities = { @EntityResult(entityClass = Professor.class), @EntityResult(entityClass = Address.class) }) })

jpa birden fazla tabloyu direk sınıf ile eşleme join table mapping

Use @FieldResult in the SqlResultSetMapping, to link each entity property to its column alias

birden fazla tablonun birleşimini sınıflara eşleştirirken oluşan hatanın giderilmesi