3. Negative general ability

couldn't \ not be able to

3. Negative general ability

couldn't \ not be able to

3. When I was fifteen, I ________________________ ride a motorbike.

I could / was able to

3.____ We were able to get an excellent price on flights for our trip.

Sa

1. At the age of eight, she_______________________ speak three languages. 2. And when she was ten, she ___________________________ speak another two languages. 3. When I was fifteen, I ________________________ ride a motorbike. 4. However, at age fifteen, I ____________________________ drive a car. 5. Unfortunately, I ______________________________ find my keys last night when I got home last night. 6. Fortunately, I ____________________________ unlock the door with a spare key last night. 7. However, I _______________________ find the original keys at that time. 8. The rescuers _______________________contact the trapped miners soon after the mine collapsed yesterday. 9. She ______________________ smell gas coming from the stove, so she turned off the burner. 10. However, she ____________________________ see the escaping gas. 11. I ________________________________understand anything that he was saying. 12. We ______________________________ hear Joe enter the house because he made a loud noise.

she could / was able to speak she was able to speak I could / was able to ride I couldn’t / wasn’t able to drive I couldn’t / wasn’t able to find /I was able to unlock I couldn’t / wasn’t able to find /were able to contact /She could smell/ she couldn’t see/ I couldn’t / wasn’t able to understand /We could hear

1.____ I was able to ride a bike when I was ten years old. 2.____ Unfortunately, I wasn’t able to make a dentist appointment for this week. 3.____ We were able to get an excellent price on flights for our trip. 4.____ Sherry was able to run faster than her brother when they were young. 5.____ David was able to lift heavy boxes before his injury. 6.____ Randy was not able to drive until age eighteen. 7.____ I used to be able to sing. 8.____ The students were able to pass the Final Exam last fall. 9.____ Were you able to finish your homework yesterday?

1GA 2SA 3SA 4GA 5GA 6GA 7GA 8SA 9SA

Complete these sentences about past ability using could/couldn't or was/were able to. More than one answer may be correct. If two answers are correct, write both answers. 1. At the age of eight, she_______________________ speak three languages. 2. And when she was ten, she ___________________________ speak another two languages. 3. When I was fifteen, I ________________________ ride a motorbike. 4. However, at age fifteen, I ____________________________ drive a car. 5. Unfortunately, I ______________________________ find my keys last night when I got home last night. 6. Fortunately, I ____________________________ unlock the door with a spare key last night. 7. However, I _______________________ find the original keys at that time. 8. The rescuers _______________________contact the trapped miners soon after the mine collapsed yesterday. 9. She ______________________ smell gas coming from the stove, so she turned off the burner. 10. However, she ____________________________ see the escaping gas. 11. I ________________________________understand anything that he was saying. 12. We ______________________________ hear Joe enter the house because he made a loud noise.

she could / was able to speak she was able to speak I could / was able to ride I couldn’t / wasn’t able to drive I couldn’t / wasn’t able to find I was able to unlock I couldn’t / wasn’t able to find were able to contact She could smell she couldn’t see I couldn’t / wasn’t able to understand We could hear

Decide whether the sentence describes a specific or general ability Write SA for specific ability and GA for general ability. 1.____ I was able to ride a bike when I was ten years old. 2.____ Unfortunately, I wasn’t able to make a dentist appointment for this week. 3.____ We were able to get an excellent price on flights for our trip. 4.____ Sherry was able to run faster than her brother when they were young. 5.____ David was able to lift heavy boxes before his injury. 6.____ Randy was not able to drive until age eighteen. 7.____ I used to be able to sing. 8.____ The students were able to pass the Final Exam last fall. 9.____ Were you able to finish your homework yesterday?

1GA 2SA 3SA 4GA 5GA 6GA 7GA 8SA 9SAI could ride a bike when I was ten years old.

Sherry could run faster than her brother when they were young.

David could lift heavy boxes before his injury.

Randy could not drive until age eighteen.

I could sing.

jaguars

Jaguars is the plural noun of jaguar. Jaguars are large wild cats native to the Americas, especially Central and South American rainforests. They are the largest big cat in the Americas.

In simple terms: jaguars = powerful spotted big cats of the American tropics

| Feature | Jaguars | Leopards | | -------- | ------------------------ | ---------------------------- | | Region | Americas | Africa & Asia | | Build | Stocky, very powerful | Slimmer | | Rosettes | Larger, with central dot | Smaller, no central dot | | Water | Strong swimmers | Avoid water | | Bite | Extremely strong | Strong, but less than jaguar |

Jaguars are top predators that:

If you see “jaguars” by itself, it usually functions as:

jaguars 是 jaguar(美洲豹) 的复数形式。 美洲豹是 美洲体型最大的猫科动物,主要生活在 中美洲和南美洲。

简单说: jaguars = 生活在美洲热带地区的强壮大型猫科动物

美洲豹常被视为 雨林顶级捕食者的代表。

| 特点 | 美洲豹 | 豹子 | | -- | ----- | ----- | | 分布 | 美洲 | 非洲、亚洲 | | 体型 | 更强壮 | 较瘦 | | 斑点 | 中央有黑点 | 无黑点 | | 水性 | 喜水 | 避水 |

如果只看到 jaguars,通常表示:

If you want, paste the full sentence, diagram, or exam question (e.g., rainforest food webs or canopy adaptations), and I’ll explain exactly why jaguars are mentioned and what concept they illustrate(中英对照、直击考点).

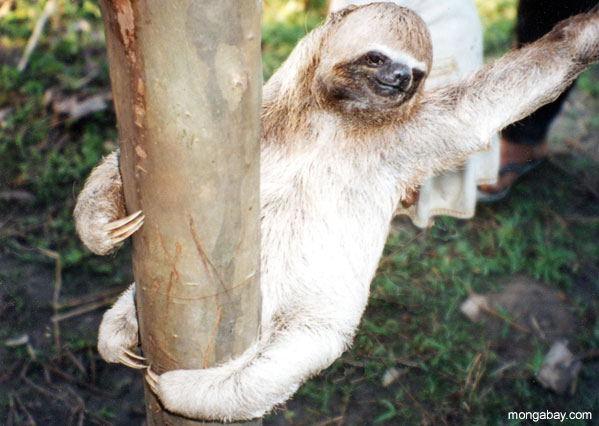

three-toed sloth

A three-toed sloth is a slow-moving tree-dwelling mammal found in Central and South American rainforests. The name comes from the fact that it has three toes on each limb.

In simple terms: three-toed sloth = a very slow rainforest animal that lives in trees and has three toes

Three-toed sloths survive by not being noticed:

This is a classic example of adaptation to the canopy environment.

| Feature | Three-toed sloth | Two-toed sloth | | -------- | ---------------------------- | ---------------- | | Toes | 3 on all limbs | 2 on front limbs | | Neck | Longer (can rotate head far) | Shorter | | Activity | More diurnal | More nocturnal | | Diet | Mostly leaves | Leaves + fruit |

Despite the names, both have three toes on their hind feet.

If you see “three-toed sloth” by itself, it usually functions as:

three-toed sloth 指 三趾树懒,是一种生活在 中美洲和南美洲热带雨林 的 树栖哺乳动物,因 每只脚有三根脚趾 而得名。

简单说: three-toed sloth = 生活在雨林树上的慢动作动物

三趾树懒的生存策略是 “不被发现”:

| 特征 | 三趾树懒 | 二趾树懒 | | ---- | ---- | ------ | | 前肢趾数 | 3 | 2 | | 颈部 | 较灵活 | 较短 | | 活动时间 | 偏白天 | 偏夜间 | | 食性 | 以叶为主 | 叶 + 果实 |

注意:两种树懒的后肢都是三趾。

如果只看到 three-toed sloth,通常表示:

If you want, you can paste the full sentence, diagram, or exam question (for example about rainforest canopy adaptations or food webs), and I’ll explain exactly why the three-toed sloth is mentioned and what concept it illustrates(中英对照,直击考点).

toucans

Toucans is the plural noun of toucan. Toucans are tropical birds best known for their very large, colorful beaks and their life in rainforest canopies.

In simple terms: toucans = tropical birds with big, bright beaks

Despite appearances, the beak is:

This is a classic example of adaptation.

Toucans are important because they:

If you see “toucans” by itself, it usually functions as:

toucans 是 toucan(巨嘴鸟) 的复数形式。 巨嘴鸟是一类生活在 中南美洲热带雨林 的鸟类,以 巨大而鲜艳的喙 闻名。

简单说: toucans = 有巨大彩色嘴巴的热带鸟类

巨嘴鸟的喙并不是负担,而是:

这是典型的 结构与功能相适应 的例子。

如果只看到 toucans,通常表示:

If you want, paste the full sentence, diagram, or exam question (e.g., rainforest canopy adaptations or seed dispersal), and I’ll explain exactly why toucans are mentioned and what concept they illustrate(中英对照、直击考点).

orchids

Orchids is the plural noun of orchid. It refers to plants in the orchid family (Orchidaceae)—one of the largest and most diverse plant families on Earth. Orchids are flowering plants known for their complex, often beautiful flowers.

In simple terms: orchids = a very large group of flowering plants with specialized flowers

Growth forms:

Epiphytic (grow on trees, not parasitic)

Orchids play important ecological roles:

Orchids are classic examples of evolutionary adaptation:

If you see “orchids” by itself, it usually functions as:

orchids 是 orchid(兰花) 的复数形式,指 兰科植物。 兰科是 地球上物种最多的植物科之一,以 结构复杂、形态多样的花朵 著称。

简单说: orchids = 兰花,一大类开花植物

生长方式:

附生兰(长在树上,但不寄生)

兰花是 自然选择与适应进化 的经典例子:

如果只看到 orchids,通常表示:

If you want, paste the full sentence, diagram, or exam question (for example about pollination, coevolution, or forest layers), and I’ll explain exactly why orchids are mentioned and what concept they illustrate(中英对照、直击考点).

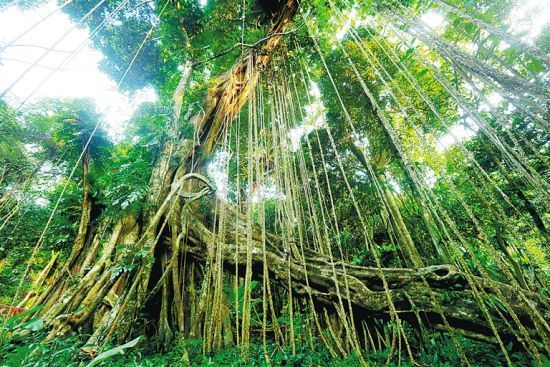

Vines

Vines is the plural noun of vine. It refers to plants with long, flexible stems that climb, trail, or spread rather than standing upright on their own.

In simple terms: vines = plants that grow by climbing or creeping instead of standing straight

Vines use other objects for support instead of building thick, rigid stems. They may climb by:

This strategy saves energy and helps vines reach sunlight.

Vines are especially common in warm, wet climates, but many also grow in temperate regions.

Some vines are woody, others are soft and flexible.

Vines:

In tropical forests, vines are a major part of the canopy and understorey.

If you see “vines” by itself, it usually functions as:

vines 是 vine(藤蔓植物) 的复数形式,指 茎细长、柔软,不能独立直立生长,而是 攀爬或蔓延生长 的植物。

简单说: vines = 藤蔓植物

藤蔓植物通常通过以下方式生长:

这种方式能 节省能量,快速到达有阳光的地方。

有些藤蔓是 木质的,有些是 草质的。

藤蔓:

如果只看到 Vines,通常表示:

If you want, you can paste the full sentence or diagram (for example, a forest layers or plant adaptation passage), and I’ll explain exactly why vines are mentioned there and what concept they illustrate(中英对照、直击考点)。

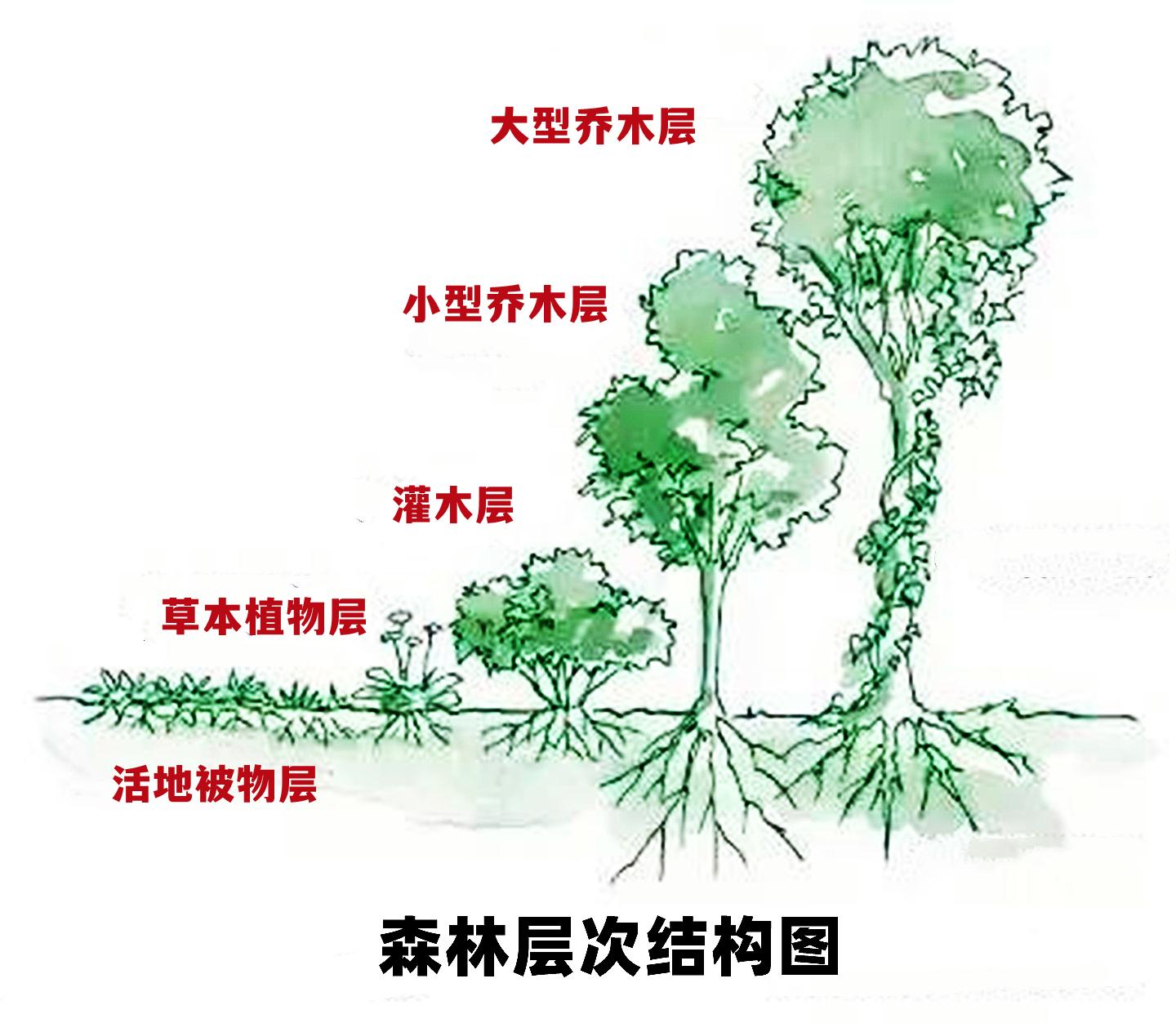

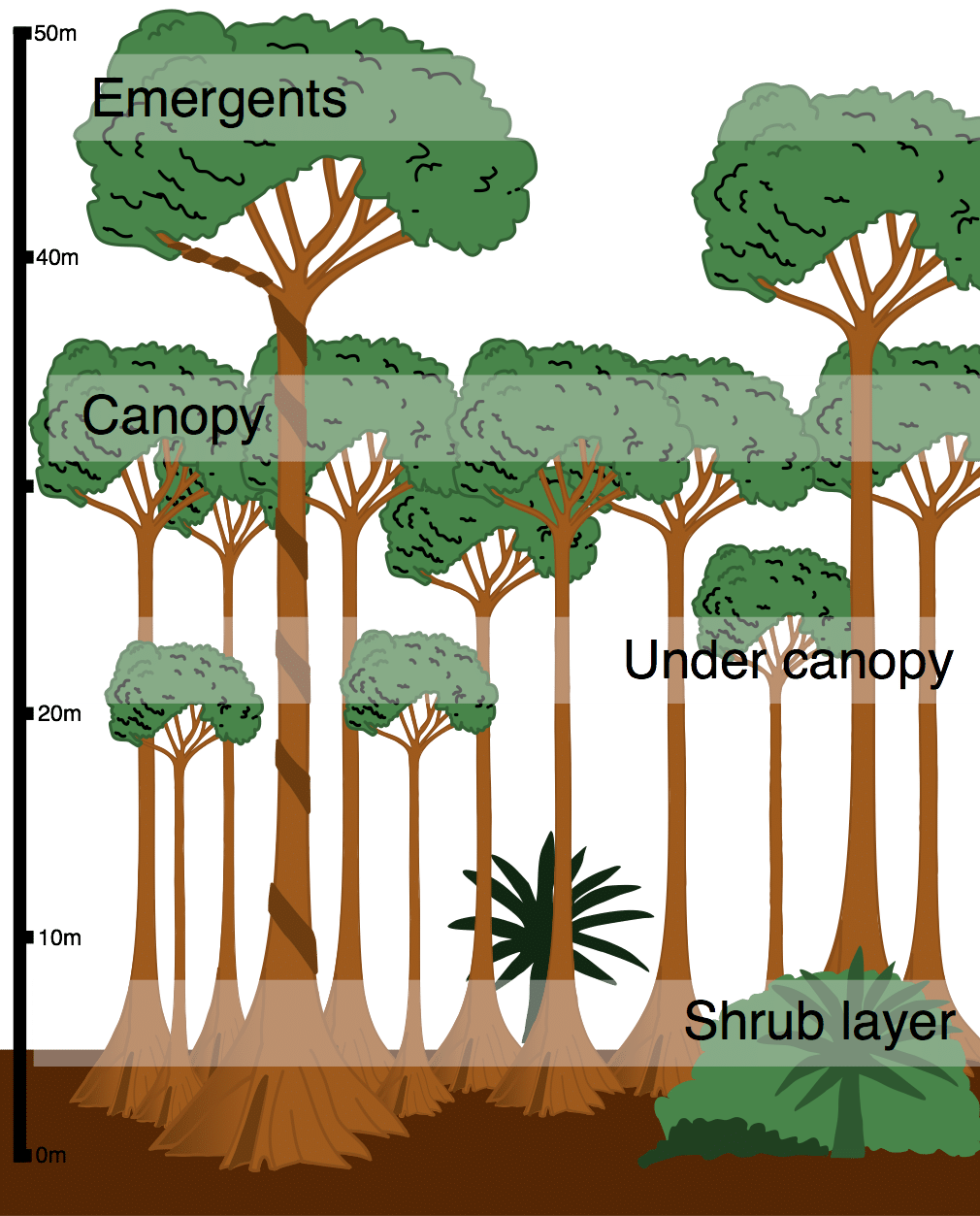

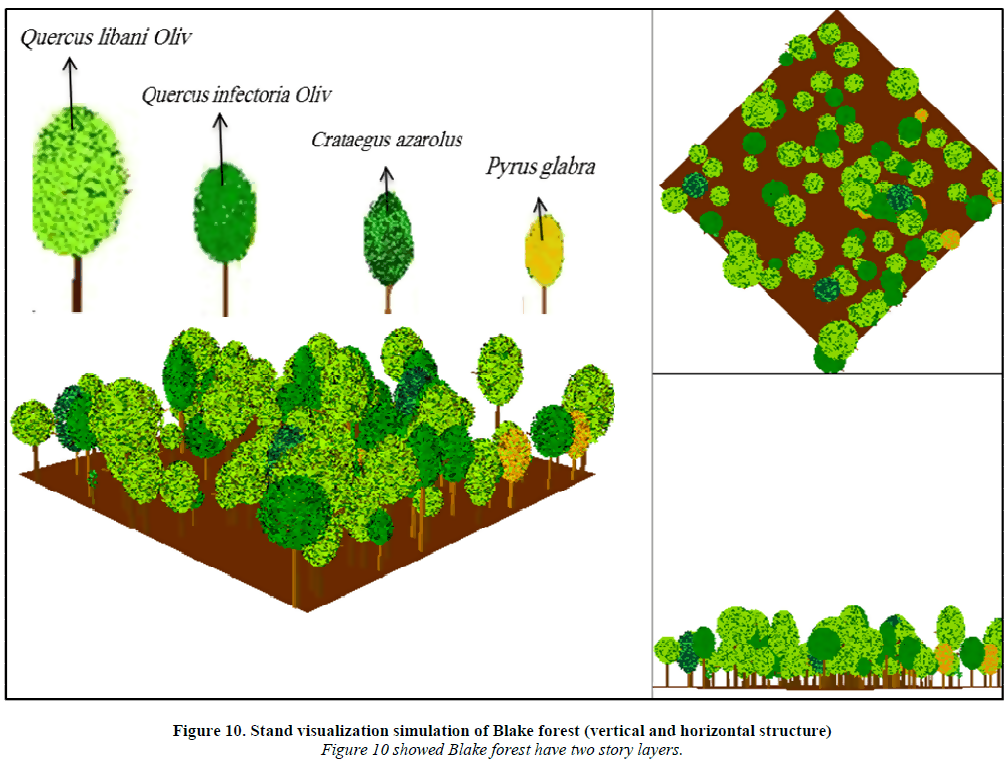

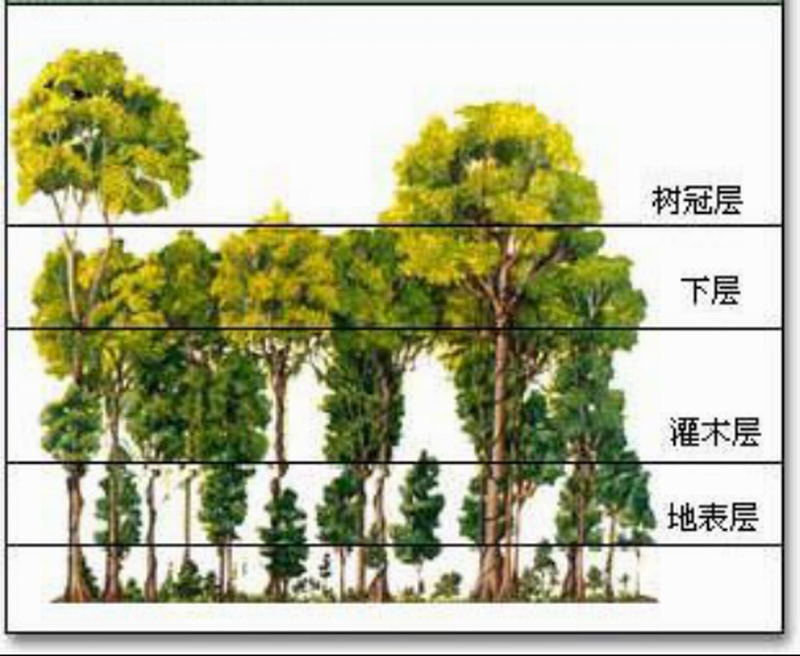

understorey

Understorey (British/Canadian spelling; understory in American English) is the layer of vegetation that grows beneath the forest canopy but above the forest floor.

In simple terms: understorey = the middle plant layer under the tree canopy

The understorey typically includes:

These plants receive filtered sunlight, not full sun.

The understorey plays key roles in forest ecosystems:

Forests are often described as having layers:

Understanding the understorey helps explain light competition and plant adaptation.

Plants in the understorey are adapted to low light:

| Term | Meaning | | --------------- | ------------------------------- | | understorey | Middle vegetation layer | | canopy | Upper tree layer | | forest floor | Ground layer | | shrub layer | Often overlaps with understorey |

If you see “understorey” by itself, it usually functions as:

understorey(英式/加式拼写,美式为 understory)指 位于森林树冠层之下、林地层之上的植被层,中文常称 林下层 / 下木层。

简单说: understorey = 树冠下面的中间植物层

这些植物只能接收到 被树冠过滤后的阳光。

林下层:

森林通常分为:

林下层植物常具有:

以适应 弱光环境。

| 英文 | 中文 | | ------------ | --- | | canopy | 树冠层 | | understorey | 林下层 | | forest floor | 林地层 |

若只看到 understorey,通常表示:

If you want, you can paste the 完整句子、图表或考试题(例如关于 光照、森林分层或植物适应性),我可以帮你 结合语境精准讲解 understorey 的考点(中英对照)。

canopy

A canopy is the upper layer formed by the tops of trees or plants, where leaves and branches overlap to create a kind of roof over what is below.

In simple terms: canopy = the leafy “ceiling” at the top of a forest or plant community

In forests, the canopy:

Many textbooks describe forests by layers:

The canopy:

In rainforests, the canopy is often the most biologically diverse layer.

Canopy cover means the percentage of ground shaded by the canopy when viewed from above.

This term is widely used in ecology, forestry, and geography.

Outside ecology, canopy can also mean:

But in science texts, it almost always refers to the plant/forest layer.

If you see “canopy” by itself, it usually functions as:

canopy 指 树冠层,是由树木顶部的 枝叶相互覆盖 形成的 上层结构,像一顶“绿色屋顶”。

简单说: canopy = 森林上方的树冠层

在森林生态系统中,树冠层:

森林常被分为不同层次:

树冠层:

canopy cover(树冠覆盖率) 指:

从上方看,树冠遮住地面的百分比

在非生态语境中,canopy 也可指:

但在 Science / Geography 中,几乎一定指 树冠层。

如果只看到 canopy,通常表示:

If you want, paste the full sentence, diagram, or exam question where canopy appears (e.g., forest layers or light competition), and I’ll explain exactly what role it plays in that context(中英对照、直击考点).

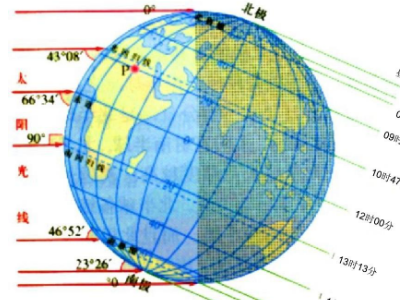

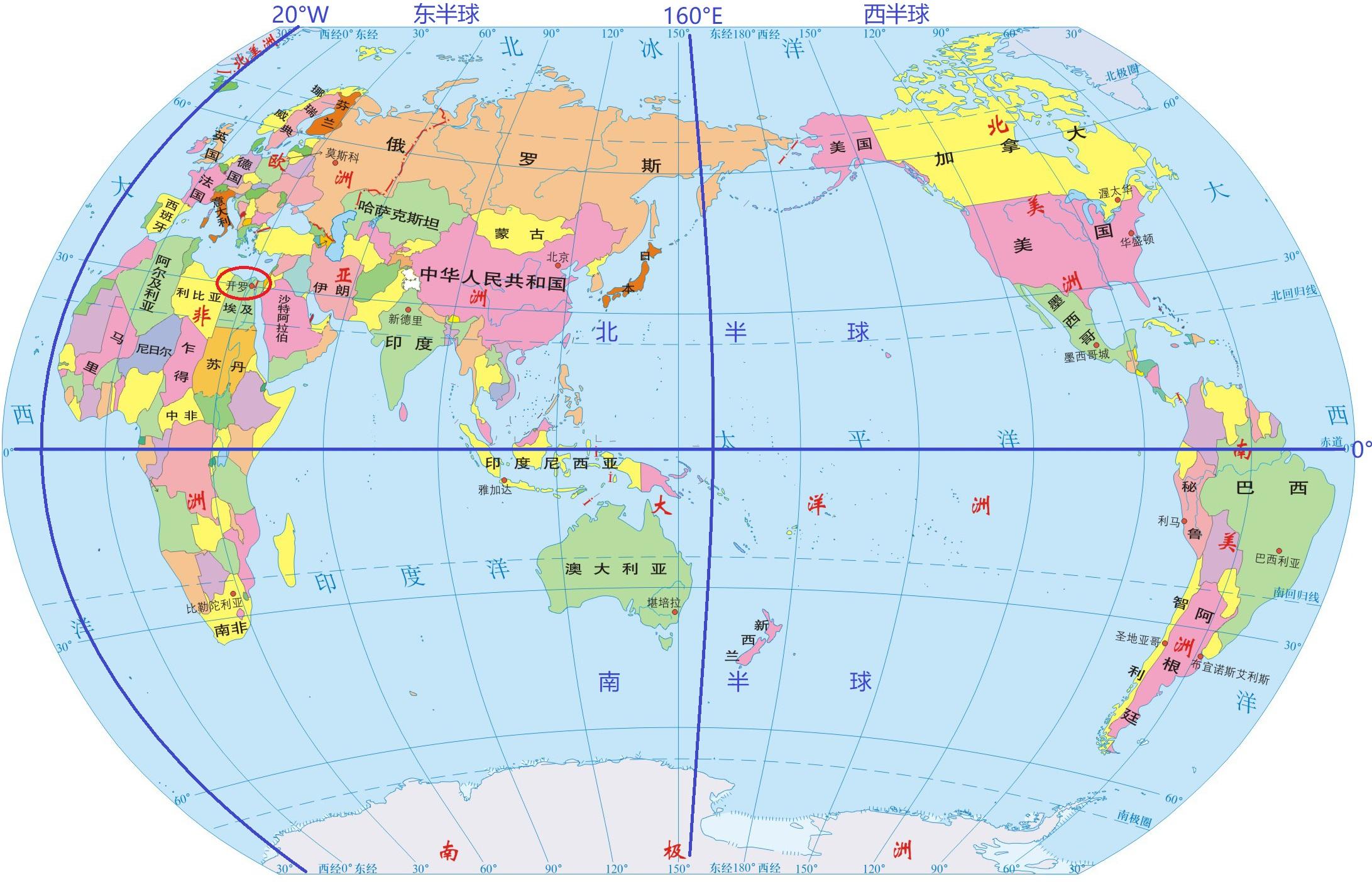

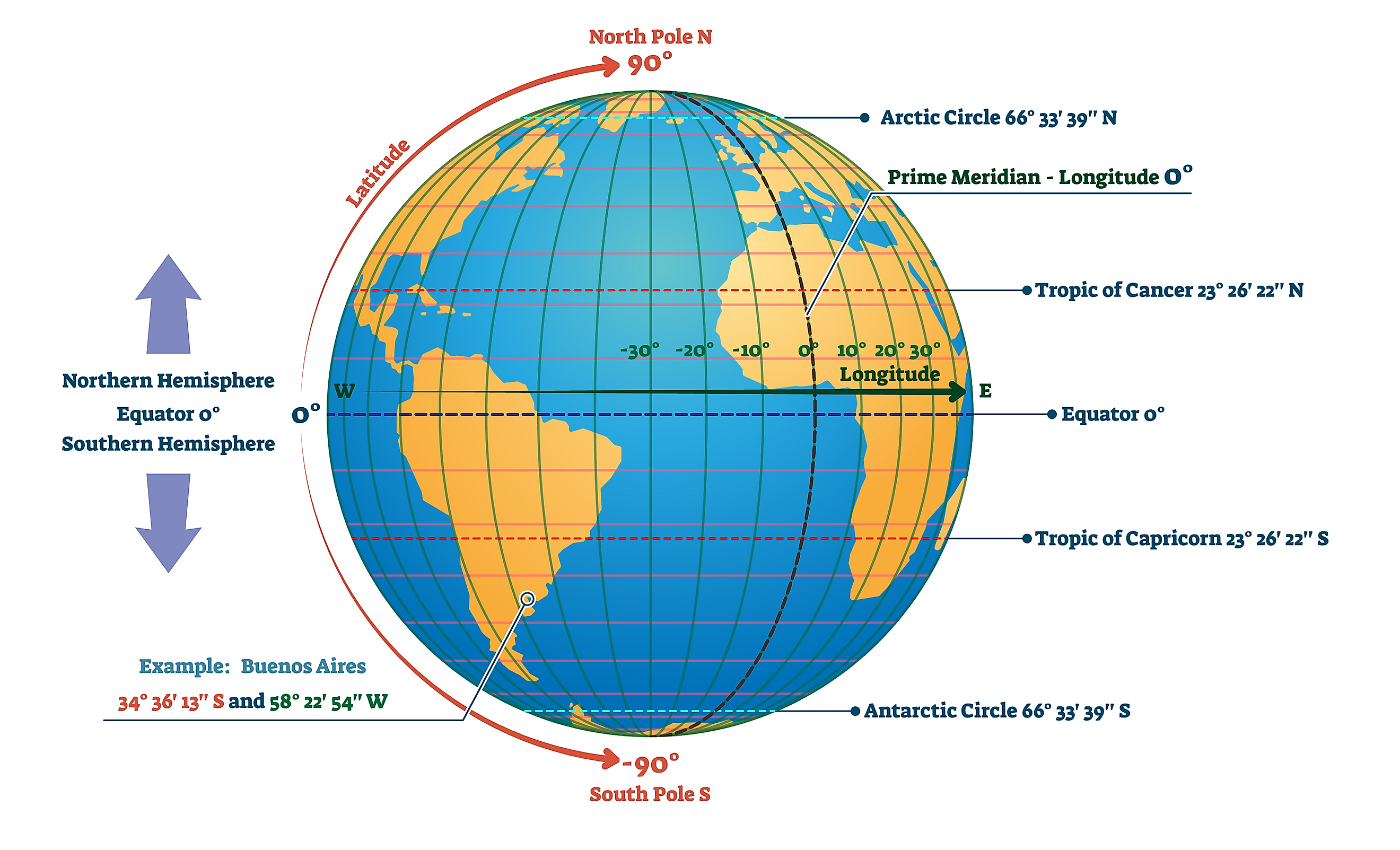

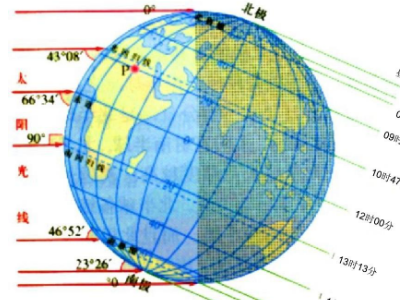

Tropic of Capricorn

The Tropic of Capricorn is an imaginary line of latitude at about 23.5° south of the Equator. It marks the southernmost point on Earth where the Sun can be directly overhead (at noon) during the year.

In simple terms: Tropic of Capricorn = the southern limit of the tropics

The Tropic of Capricorn crosses parts of:

| Line | Latitude | Meaning | | ----------------------- | ----------- | ----------------------------- | | Equator | 0° | Middle of Earth | | Tropic of Cancer | 23.5° N | Northern limit of tropics | | Tropic of Capricorn | 23.5° S | Southern limit of tropics |

Tropic of Capricorn 中文叫 南回归线,是一条位于 南纬约 23.5° 的 假想纬线。 它表示 太阳一年中能够直射到达的最南界线。

简单说: 南回归线 = 热带的最南边界

南回归线穿过:

| 纬线 | 纬度 | 含义 | | -------- | ----------- | --------- | | 赤道 | 0° | 地球中线 | | 北回归线 | 23.5° N | 热带最北界 | | 南回归线 | 23.5° S | 热带最南界 |

If you want, paste the full sentence or exam question (e.g., about seasons, climate zones, or Earth–Sun geometry), and I’ll explain exactly why the Tropic of Capricorn is mentioned—中英对照、直击考点.

Tropic ofCancer

The Tropic of Cancer is an imaginary line of latitude located at about 23.5° north of the Equator. It marks the northernmost point on Earth where the Sun can be directly overhead (at noon) during the year.

In simple terms: Tropic of Cancer = the northern limit of the tropics

The Tropic of Cancer crosses parts of:

| Line | Latitude | Meaning | | ------------------- | -------- | ------------------------- | | Equator | 0° | Middle of Earth | | Tropic of Cancer | 23.5° N | Northern limit of tropics | | Tropic of Capricorn | 23.5° S | Southern limit of tropics |

Tropic of Cancer 中文叫 北回归线,是一条位于 北纬约 23.5° 的 假想纬线。 它表示 太阳一年中能够直射到达的最北界线。

简单说: 北回归线 = 热带的最北边界

北回归线穿过:

| 纬线 | 纬度 | 含义 | | ---- | ------- | ----- | | 赤道 | 0° | 地球中线 | | 北回归线 | 23.5° N | 热带最北界 | | 南回归线 | 23.5° S | 热带最南界 |

If you want, paste the full sentence or exam question (e.g., about seasons, climate zones, or Earth–Sun geometry), and I’ll explain exactly why the Tropic of Cancer is mentioned—中英对照、直击考点.

prairie

A prairie is a type of large, open grassland with few or no trees, found mainly in central North America. The land is usually flat or gently rolling and covered mostly by grasses and wildflowers.

In simple terms: prairie = wide, open grassland

Prairies are often divided into types based on rainfall:

| Type | Rainfall | Description | | ----------------------- | -------- | ------------------------------- | | Tallgrass prairie | Higher | Tall grasses, very fertile soil | | Mixed-grass prairie | Medium | Mix of tall and short grasses | | Shortgrass prairie | Lower | Short grasses, drier conditions |

Many animals are adapted for open spaces, burrowing, or running.

Prairies:

| Feature | Prairie | Forest | Desert | | -------- | ------------ | -------- | ------------- | | Trees | Few | Many | Very few | | Rainfall | Medium | High | Very low | | Plants | Grasses | Trees | Sparse plants | | Soil | Very fertile | Moderate | Poor |

If you see “prairie” by itself, it usually functions as:

prairie 指 大草原,是一种 以草类为主、几乎没有树木 的开阔地形,主要分布在 北美中部。

简单说: prairie = 北美大草原

| 类型 | 中文 | 特点 | | ------------------- | ---- | -------- | | Tallgrass prairie | 高草草原 | 草高、土壤最肥沃 | | Mixed-grass prairie | 混合草原 | 中等高度 | | Shortgrass prairie | 矮草草原 | 较干燥 |

| 特点 | 草原 | 森林 | 沙漠 | | -- | -- | -- | -- | | 树木 | 少 | 多 | 极少 | | 降水 | 中等 | 高 | 很低 | | 土壤 | 肥沃 | 中等 | 贫瘠 |

如果只看到 prairie,通常表示:

If you want, you can paste the 完整句子、地图或考试题(例如关于 prairie food webs 或生态适应 的内容),我可以帮你 逐句解释并标出考点(中英对照)。

burrow

Burrow can be both a noun and a verb.

In simple terms: burrow = an underground home or tunnel made by animals

A burrow is an underground space used by animals for:

Examples:

To burrow means:

Examples:

Burrows affect ecosystems by:

Animals that do this are sometimes called ecosystem engineers.

| Word | Difference | | ---------- | ------------------------------- | | burrow | underground tunnel | | nest | built above ground or in trees | | den | shelter (often larger animals) | | hole | general, not necessarily a home |

burrow 既可以作 名词,也可以作 动词。

简单说: burrow = 动物挖的地下洞穴 / 挖洞

动物的 burrow 用来:

例句:

burrow 表示:

例句:

动物挖洞可以:

| 英文 | 中文区别 | | ---------- | ---------- | | burrow | 地下洞穴 | | nest | 巢(多在地面或树上) | | den | 兽穴(较大动物) | | hole | 普通的洞 |

If you want, you can paste the 完整句子或课文段落(例如关于 gophers、coyotes、grassland ecosystems 的内容),我可以帮你 结合语境精确解释 burrow 的作用和考点(中英对照)。

gophers

Gophers is the plural noun of gopher. In biology, it usually refers to small burrowing rodents, especially pocket gophers, known for living underground and pushing up soil mounds.

In simple terms: gophers = small digging rodents that live in tunnels underground

Gophers play important roles in ecosystems:

If you see “gophers” by itself, it usually functions as:

gophers 是 gopher(地鼠 / 囊地鼠) 的复数形式,指一类 善于挖洞、长期生活在地下的啮齿动物。

简单说: gophers = 生活在地下、会挖洞的地鼠

地鼠在生态系统中具有重要作用:

如果只看到 gophers,通常表示:

If you want, paste the full sentence or exam question where gophers appears (e.g., a grassland food web), and I’ll explain its exact role and why it’s mentioned in both English and Chinese.

coyotes

Coyotes is the plural noun of coyote. Coyotes are medium-sized wild canines (Canis latrans) native to North America and closely related to wolves and dogs.

In simple terms: coyotes = wild, dog-like predators that are highly adaptable

Coyotes have expanded dramatically and now live:

Coyotes are mesopredators that:

If the excerpt is just “coyotes”, it likely functions as:

coyotes 是 coyote(郊狼) 的复数形式。 郊狼是一种 野生犬科动物,原产于 北美,与狼和狗有亲缘关系。

简单说: coyotes = 适应能力很强的野生“狼狗类”动物

郊狼分布极广:

郊狼属于 中型捕食者(中级捕食者):

若只看到 coyotes,通常是:

如果你把 完整句子或课文段落(例如 Science 10 的食物网或生态系统内容)贴出来,我可以帮你 精确解释 coyotes 在该语境中的含义与考点(中英对照)。

kangaroos

Kangaroos is the plural noun of kangaroo. Kangaroos are large marsupial mammals native to Australia, famous for hopping on powerful hind legs and for carrying their young in a pouch.

In simple terms: kangaroos = large Australian animals that hop and carry babies in a pouch

If you see “kangaroos” by itself, it usually serves as:

kangaroos 是 kangaroo(袋鼠) 的复数形式。 袋鼠是 澳大利亚特有的大型有袋类哺乳动物,以 跳跃行走 和 育儿袋 著称。

简单说: kangaroos = 会跳跃、用育儿袋养幼崽的澳大利亚动物

若只出现 kangaroos,通常是:

如果你把 完整句子或课文段落(如关于草原放牧、食物网或适应性的内容)贴出来,我可以进一步 逐句解释其语境含义与考点(中英对照)。

leopards

Leopards is the plural noun of leopard. Leopards are large wild cats known for their spotted coats, stealth, and adaptability.

In simple terms: leopards = powerful, stealthy big cats with spotted fur

Leopards have one of the widest ranges of any big cat:

They can live near human settlements due to their adaptability.

| Feature | Leopards | Cheetahs | | -------- | --------------------- | --------------------- | | Spots | Rosettes | Solid black spots | | Body | Stocky, powerful | Slim, built for speed | | Speed | Fast, but not fastest | Fastest land animal | | Climbing | Excellent climbers | Poor climbers | | Hunting | Stealth & strength | Speed chases |

Leopards are top predators that:

If you see “leopards” by itself, it usually functions as:

leopards 是 leopard(豹子) 的复数形式。 豹子是一种 大型猫科动物,以 斑点花纹、隐蔽性强、适应能力高 而著名。

简单说: leopards = 有斑点、善于潜行的大型猫科动物

豹子分布范围很广:

适应力强,能在多种环境中生存。

| 特点 | 豹子 | 猎豹 | | ---- | ----- | ---- | | 斑点 | 玫瑰状 | 实心点 | | 体型 | 强壮 | 修长 | | 奔跑 | 快但非最快 | 最快 | | 爬树 | 很强 | 很弱 | | 捕猎方式 | 潜行伏击 | 高速追逐 |

如果只看到 leopards,通常表示:

如果你愿意,把 完整句子或课文段落(如草原生态、捕食者比较、适应性等)贴出来,我可以帮你 逐词拆解并标注考试要点(中英对照)。

cheetahs

Cheetahs is the plural noun of cheetah. It refers to a species of large wild cat best known as the fastest land animal.

In simple terms: cheetahs = very fast spotted big cats

Cheetahs are predators that help control prey populations, contributing to ecosystem balance.

If the excerpt is just “cheetahs”, it likely functions as:

cheetahs 是 cheetah(猎豹) 的复数形式。 猎豹是 陆地上速度最快的动物。

简单说: cheetahs = 速度极快、身上有黑色斑点的大型猫科动物

如果只看到 cheetahs,通常是:

If you want, paste the 完整句子或课文段落(如关于草原生态、捕食者或适应性的内容),我可以帮你 精确说明 cheetahs 在该语境中的含义与考点(中英对照)。

grazing

Grazing is a noun and also the -ing form of the verb “graze.” It refers to the act of animals feeding on grasses and other low-growing plants.

In simple terms: grazing = animals eating grass or similar plants

Typical grazing animals include:

These animals are often called grazers.

| Term | What is eaten | Examples | | ------------ | --------------------- | -------------- | | Grazing | Grass, low plants | Cows, horses | | Browsing | Leaves, shrubs, twigs | Deer, giraffes |

This distinction is common in biology and ecology texts.

Grazing plays a major role in ecosystems:

Moderate grazing can be beneficial to ecosystems.

Overgrazing happens when animals eat plants faster than they can regrow.

Consequences include:

This term often appears in environmental science and geography.

Besides feeding, graze can also mean:

To touch lightly or scrape

“The ball grazed his arm.”

But in science/ecology contexts, grazing almost always means feeding.

grazing 指 放牧、吃草,是动物在草地上 啃食草类和低矮植物 的行为。

简单说: grazing = 动物吃草 / 放牧

常见 食草动物:

这些动物被称为 放牧动物(grazers)。

| 英文 | 中文 | 吃什么 | | ------------ | ---- | ------ | | grazing | 放牧 | 草、低矮植物 | | browsing | 取食灌木 | 树叶、嫩枝 |

适度放牧可以:

过度放牧 是考试中的高频概念,指:

动物取食速度超过植物再生速度

后果包括:

graze 还可表示:

轻微擦过、掠过

球擦过他的手臂

但在 生物/地理课本 中,grazing 几乎总是指“放牧”。

If you want, you can paste the 完整句子或考试题(例如关于草原、食物网或人类活动的内容),我可以帮你 精确解释 grazing 在该语境中的含义与考点(中英对照)。

acacia

Acacia is a noun referring to a group of trees and shrubs in the genus Acacia (pea/legume family). They are especially common in Australia, Africa, and tropical–subtropical regions.

In simple terms: acacia = a thorny tree or shrub adapted to hot, dry environments

Acacias show classic dry-environment adaptations:

These traits make acacias dominant in savannas and semi-deserts.

If the excerpt is just “acacia”, it usually functions as:

acacia 指 金合欢,是一类生长在 热带和亚热带地区 的 乔木或灌木,属于 豆科植物。

简单说: acacia = 金合欢,一种耐旱、常带刺的树或灌木

金合欢是 干旱环境适应植物 的典型例子:

如果只看到 acacia,通常表示:

If you want, paste the 完整句子或课文段落(例如关于 干旱生态系统或植物适应性 的内容),我可以帮你 逐句拆解并标出考点(中英对照)。

clover

Clover is a noun referring to a group of low-growing flowering plants in the genus Trifolium (pea/legume family). They are common in lawns, meadows, and fields.

In simple terms: clover = a small plant with three-part leaves and round flower heads

Note: A four-leaf clover is rare and traditionally considered lucky.

Clover is mainly insect-pollinated:

This makes clover important for pollinator health.

Clover plays several key roles:

If you see “clover” by itself, it usually functions as:

clover 指 三叶草,是一类 低矮的开花植物,属于 豆科(Trifolium 属),在草地和草坪中非常常见。

简单说: clover = 三叶草

四叶草 属于基因突变,较罕见,常被视为幸运象征。

三叶草主要是 虫媒花:

如果只看到 clover,通常表示:

If you want, paste the 完整句子或课文段落(如 Science 10 的授粉或生态系统内容),我可以帮你 逐词拆解并标出考点(中英对照)。

goldenrod

Goldenrod is a noun referring to a group of wildflower plants in the genus Solidago. They are named for their bright golden-yellow flowers arranged in clusters on tall stems.

In simple terms: goldenrod = tall yellow wildflowers commonly seen in late summer and fall

Goldenrod is insect-pollinated, not wind-pollinated.

⚠️ Common misconception: Goldenrod does NOT cause hay fever.

Goldenrod:

If the excerpt is just “goldenrod”, it usually functions as:

goldenrod 指 一枝黄花,是一类常见的 野生开花植物,学名属 Solidago。

名字直译为“金色的枝条”,形容它 金黄色的花序。

简单说: goldenrod = 一枝黄花,秋季常见的黄色野花

一枝黄花是 虫媒花:

⚠️ 常见误区: 一枝黄花不是导致花粉过敏的元凶。 真正引起秋季过敏的多是 豚草(ragweed),因为它是 风媒花。

如果只看到 goldenrod,通常表示:

If you want, you can paste the 完整句子或课文段落(例如 Science 10 关于授粉或生态系统的内容),我可以帮你 逐句拆解 + 标出考点(中英对照)。

asters

Asters is the plural noun of aster. It refers to a group of wildflower plants in the daisy family (Asteraceae), known for their star-shaped flowers.

The word aster comes from Greek, meaning “star”, describing the flower’s shape.

In simple terms: asters = star-shaped wildflowers, often blooming in late summer and fall

Flower shape: Looks like a single flower but is actually a composite flower head

Disk florets in the center

Asters are typically insect-pollinated:

This makes asters ecologically important in temperate ecosystems.

Asters:

If the excerpt is just “asters”, it usually functions as:

asters 是 aster(紫菀) 的复数形式,指一类 野生开花植物,属于 菊科。

“aster” 一词源自希腊语,意思是 “星星”,形容花朵呈放射状。

简单说: asters = 紫菀类野花,花形像星星

复合花序:看起来像一朵花,实际上由很多小花组成

中央是 管状花

紫菀属于 虫媒花:

如果只看到 asters,通常表示:

If you want, you can paste the 完整句子或课文段落(例如 Science 10 的野花或授粉内容),我可以帮你 逐词拆解 + 标注考点(中英对照)。

wind- and insect-pollinated wildflowers

This phrase describes wildflowers (naturally growing flowering plants) that are pollinated in two different ways:

So, the phrase groups wildflowers by their pollination method.

In simple terms: wind- and insect-pollinated wildflowers = wildflowers that rely on wind or insects to transfer pollen

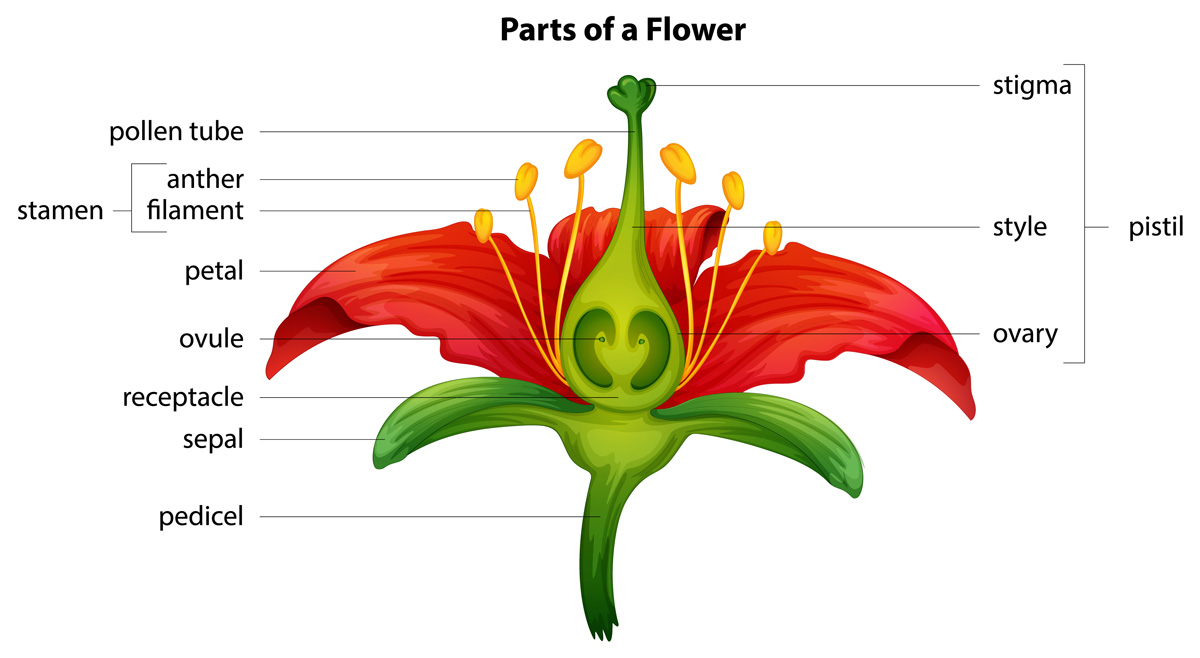

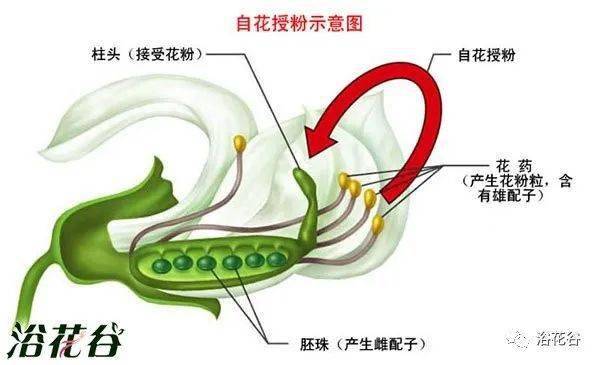

Pollination is the transfer of pollen from the male parts of a flower (anthers) to the female parts (stigma). This process is necessary for:

Wind-pollinated plants do not rely on animals, so they usually have:

Examples (general): grasses, some meadow plants.

👉 Strategy: quantity over attraction — release lots of pollen and let the wind do the work.

Insect-pollinated plants attract insects, so they usually have:

Examples: daisies, lupines, buttercups.

👉 Strategy: attraction and precision — fewer pollen grains, but targeted delivery.

| Feature | Wind-pollinated | Insect-pollinated | | ---------- | --------------- | ----------------- | | Petals | Small / dull | Bright / showy | | Scent | None | Often strong | | Nectar | None | Present | | Pollen | Light, abundant | Sticky, heavier | | Efficiency | Low precision | High precision |

When you see “wind- and insect-pollinated wildflowers”, the text is usually:

这个短语指的是 野生开花植物(野花),它们通过 两种方式之一 完成授粉:

简单说: 风媒和虫媒授粉的野花

授粉 是指 花粉从雄蕊传到雌蕊 的过程,是植物:

风媒植物通常具有:

👉 依赖自然风力,而不是动物。

虫媒植物通常具有:

👉 依靠昆虫的“精准投递”。

| 特征 | 风媒花 | 虫媒花 | | ---- | ----- | ---- | | 花瓣 | 小、不显眼 | 鲜艳 | | 气味 | 无 | 有 | | 花蜜 | 无 | 有 | | 花粉 | 多、轻 | 少、黏 | | 授粉方式 | 随机 | 高效精准 |

看到 “wind- and insect-pollinated wildflowers”,通常是在:

If you want, paste the 完整句子或课文段落(例如 Science 10 生态系统或阅读理解),我可以帮你 逐句拆解 + 标出考点(中英对照)。

sticky

Sticky is an adjective describing something that clings, adheres, or sticks to other things when touched. It usually involves a tacky or glue-like surface.

In simple terms: sticky = able to stick to things

Something described as sticky often has one or more of these features:

Examples:

Here, sticky often describes an adaptation for protection or feeding.

Sticky can also describe difficult or awkward situations:

Meaning: hard to deal with or escape from.

Related words:

stick (verb)

Example sentences:

| Word | Difference | | ---------- | ---------------------- | | sticky | general, everyday term | | adhesive | more technical | | tacky | lightly sticky | | gluey | thick, glue-like |

sticky 是一个形容词,意思是 黏的、粘的、容易粘住东西的。

简单说: sticky = 有黏性的

被形容为 sticky 的东西通常:

例子:

sticky 也可形容 棘手、难处理的情况:

例句:

| 英文 | 中文差别 | | -------- | --------- | | sticky | 通用“黏” | | adhesive | 技术性“有黏附力” | | tacky | 微黏 | | gluey | 像胶水一样黏 |

If you want, you can paste the 完整句子或课文 where sticky appears (例如 Science 10 的生态系统描述), and I’ll explain 它在该语境中的准确含义和考点(中英对照)。

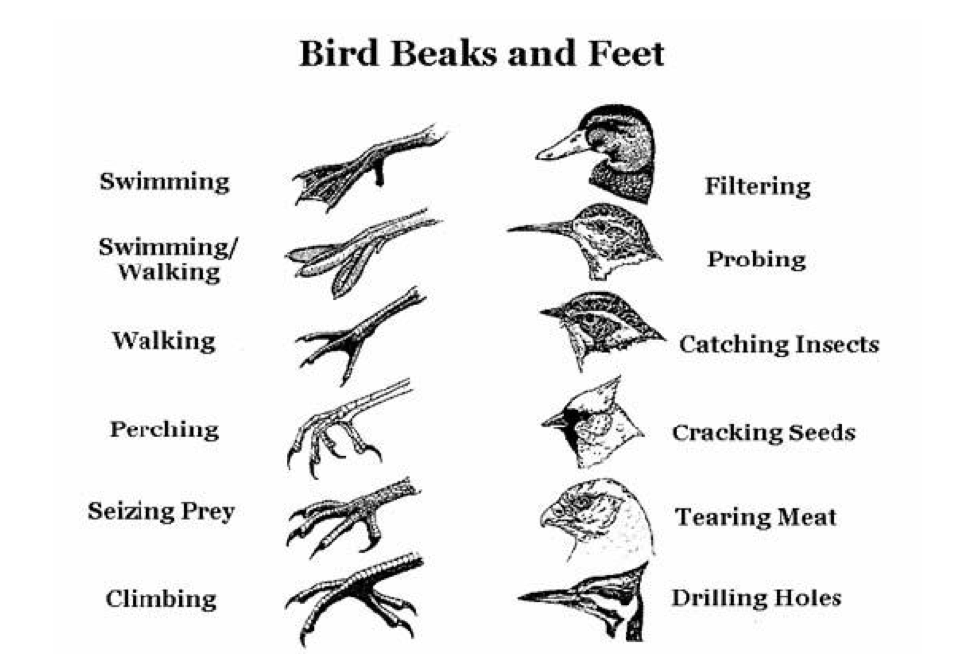

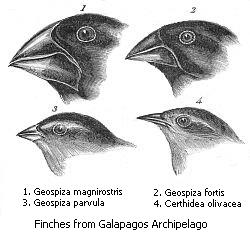

beaks

Beaks is the plural noun of beak. A beak is the hard, pointed mouthpart of birds (and some other animals) used for eating, grasping, grooming, building nests, and defense.

In simple terms: beaks = the hard mouths of birds

Beak shape reflects what and how an animal eats:

| Beak type | Shape | Function | | ------------- | ------------- | --------------------------------- | | Short & thick | Cone-shaped | Cracking seeds (e.g., finches) | | Long & thin | Needle-like | Sipping nectar / catching insects | | Hooked | Sharp, curved | Tearing meat (hawks, owls) | | Flat & broad | Wide | Filtering food from water (ducks) |

This is a classic example of adaptation and natural selection.

While most common in birds, beaks are also found in:

If the excerpt is just “beaks”, it usually functions as:

beaks 是 beak(喙) 的复数形式。 喙 是鸟类坚硬的口器,用于 进食、抓取、整理羽毛、筑巢、防御 等。

简单说: beaks = 鸟的嘴(喙)

不同鸟类的喙形状不同,是为了适应不同食物:

| 喙形 | 功能 | | ---- | ----------- | | 短而厚 | 啄碎种子 | | 细而长 | 吸食花蜜 / 捕捉昆虫 | | 弯钩状 | 撕裂肉类 | | 扁平宽阔 | 过滤水中食物 |

这是生物课中常见的 适应性进化 例子。

除了鸟类,一些动物也有喙:

如果只看到 beaks,通常是:

If you want, paste the full sentence, diagram, or exam question where beaks appears, and I’ll explain its exact role and why it matters in that context(中英对照).

tree bark

Tree bark is the outer protective covering of a tree’s trunk, branches, and roots. It is not wood; it’s the layer outside the wood that shields the tree from harm.

In simple terms: tree bark = the tree’s “skin”

Bark usually includes two main parts:

Outer bark

Dead tissue

Inner bark (phloem)

Living tissue

Together, these layers keep the tree alive and protected.

Tree bark helps a tree by:

Bark appearance varies widely:

These differences help with tree identification.

Tree bark:

If you see “tree bark” by itself, it usually functions as:

tree bark 指 树皮,是覆盖在树干、树枝和根部最外层的组织。

简单说: tree bark = 树的“皮肤”

树皮一般包括两部分:

外树皮

多为死亡组织

内树皮(韧皮部)

活组织

树皮的功能包括:

这些差异常用于 识别树种。

树皮为生态系统提供:

如果只看到 tree bark,通常是:

If you want, you can paste the full sentence or diagram where tree bark appears (for example in a Science 10 forest ecosystem passage), and I’ll explain why it’s mentioned and what concept it supports, in both English and Chinese.

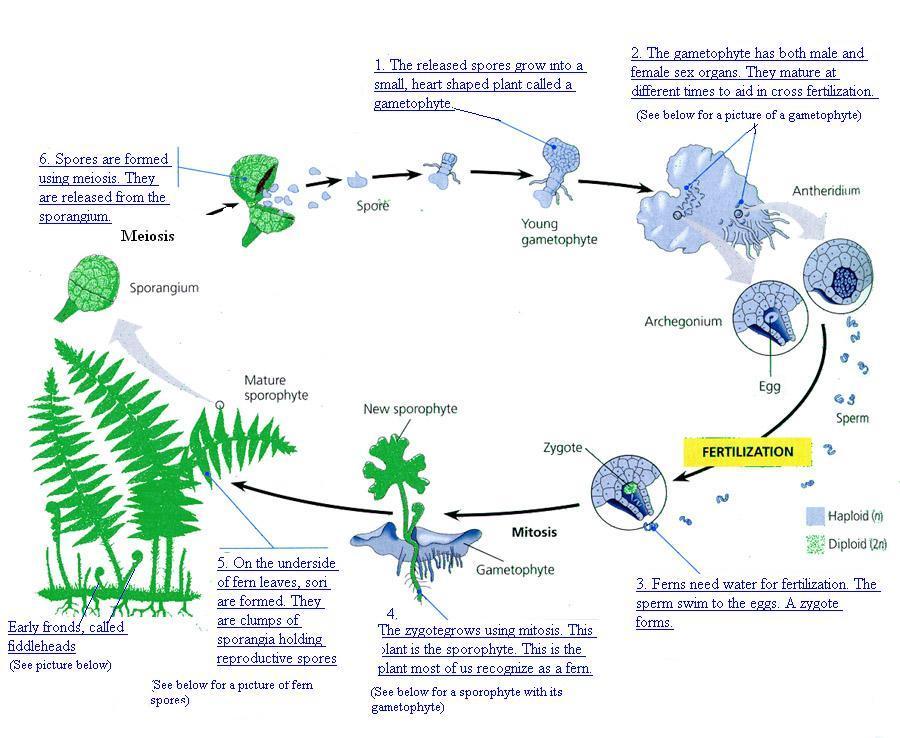

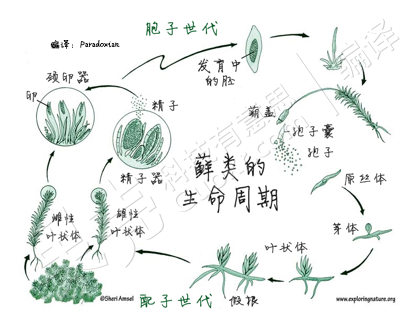

Ferns

Ferns is the plural noun of fern. It refers to a group of non-flowering, seedless plants that reproduce by spores, not seeds.

In simple terms: ferns = leafy plants with fronds that reproduce by spores instead of flowers or seeds

Ferns have alternation of generations:

This explains why ferns are common near forests, streams, and wetlands.

| Feature | Ferns | Mosses | Seed plants | | --------------- | ---------- | ----------- | ----------- | | Vascular tissue | ✅ Yes | ❌ No | ✅ Yes | | Flowers | ❌ No | ❌ No | ✅ (many) | | Seeds | ❌ No | ❌ No | ✅ Yes | | Reproduction | Spores | Spores | Seeds | | Dominant stage | Sporophyte | Gametophyte | Sporophyte |

If you see “Ferns” by itself, it is usually:

Ferns 是 fern(蕨类植物) 的复数形式,指 蕨类植物。 蕨类 不开花、不结果、不结种子,而是通过 孢子 繁殖。

简单说: ferns = 通过孢子繁殖的蕨类植物

蕨类具有 世代交替:

这也是蕨类多分布在潮湿地区的原因。

| 特点 | 蕨类 | 苔藓 | 种子植物 | | ---- | --- | --- | ---- | | 输导组织 | ✅ | ❌ | ✅ | | 种子 | ❌ | ❌ | ✅ | | 花 | ❌ | ❌ | ✅ | | 显性世代 | 孢子体 | 配子体 | 孢子体 |

如果只出现 Ferns,通常表示:

If you want, you can paste the 完整句子、图表或考试题 where Ferns appears, and I’ll explain 它在该语境中的具体含义和考点(中英对照)。

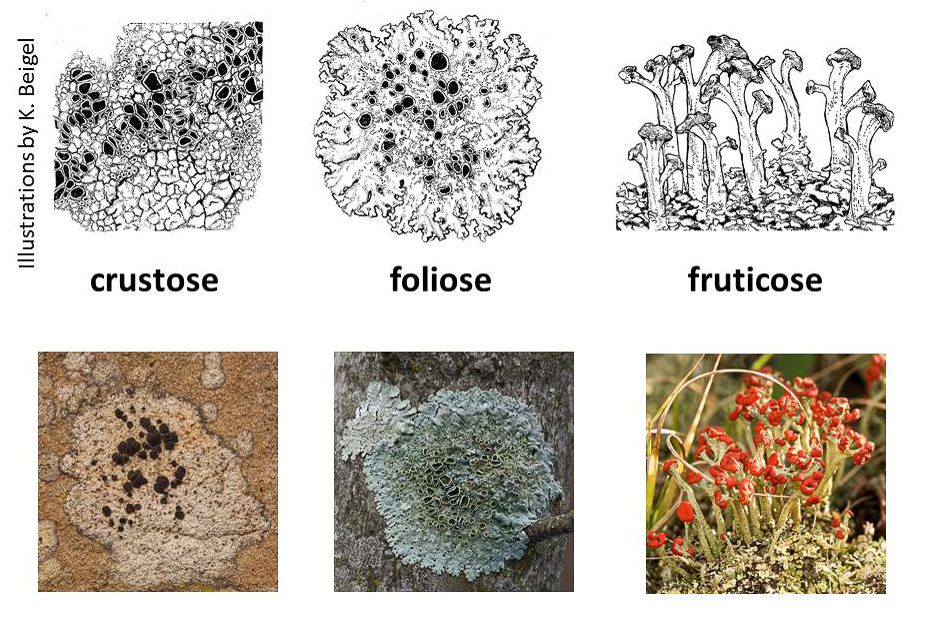

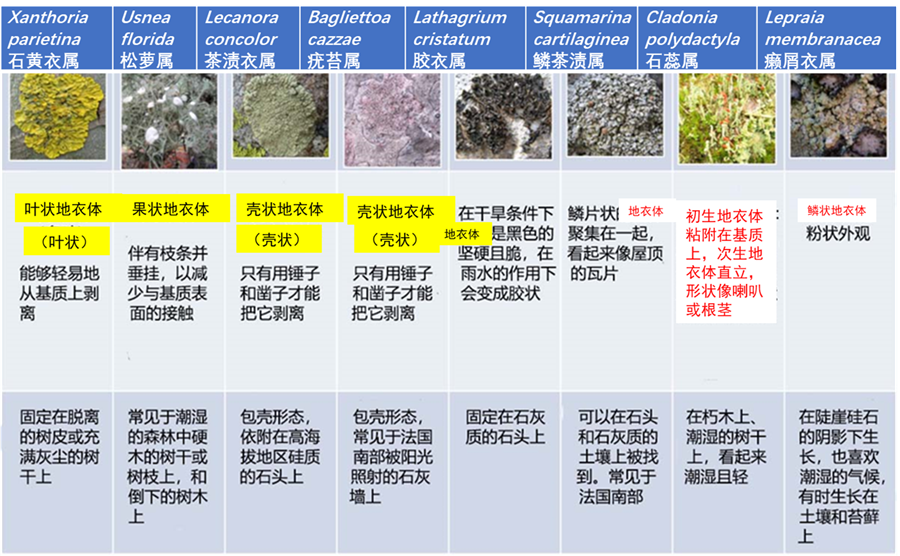

lichens

Lichens is the plural noun of lichen. Lichens are not single organisms; they are a partnership (symbiosis) between:

In simple terms: lichens = organisms made of fungus + algae living together

Lichens can grow in extreme environments:

Lichens are very important because they:

If the excerpt is just “lichens”, it usually functions as:

lichens 是 lichen(地衣) 的复数形式。 地衣不是单一生物,而是由 真菌 + 藻类(或蓝藻) 共同组成的 共生体。

简单说: lichens = 真菌和藻类共同生活形成的生物

地衣能生活在 极端环境 中:

地衣的作用包括:

如果只看到 lichens,通常表示:

If you want, you can paste the full sentence or diagram (for example from a Science 10 forest ecosystem passage), and I’ll explain exact meaning, function, and why lichens are mentioned there, in both English and Chinese.

draped

Draped is the past tense / past participle of the verb drape. It means hung loosely or laid over something so that it falls naturally in folds.

In simple terms: draped = loosely hung or spread over something

The key idea behind draped is gravity + softness:

Here, draped emphasizes gentle coverage, not force or damage.

This use creates a soft, atmospheric image.

Passive voice: often used

“The hills were draped in fog.”

Common patterns:

| Word | Difference | | ----------- | --------------------------- | | draped | loose, natural, soft | | covered | neutral, no texture implied | | wrapped | tight, enclosed | | coated | thin layer, often liquid |

draped 是动词 drape 的 过去式/过去分词,意思是: 松散地垂挂、披着、覆盖在……之上,通常是 自然下垂的状态。

简单说: draped = 垂挂着的、披着的

“draped” 强调的是:

这里的 draped 强调 轻柔覆盖,不是压断或破坏。

| 英文 | 中文区别 | | ---------- | ----------- | | draped | 垂挂、披着(柔软自然) | | covered | 覆盖(中性) | | wrapped | 包裹(紧) | | coated | 涂层(薄层) |

If you want, you can paste the full sentence where draped appears (for example in a Science 10 forest ecosystem passage), and I’ll explain why this word is chosen instead of “covered”, in both English and Chinese.

Mosses

Mosses is the plural noun of moss. It refers to a group of small, non-vascular plants (called bryophytes) that do not produce flowers or seeds and instead reproduce by spores.

In simple terms: mosses = tiny green plants that grow in damp places and reproduce by spores

Mosses show alternation of generations, but with a key difference from ferns:

| Feature | Mosses | Ferns | Seed plants | | --------------- | ----------- | ---------- | ----------- | | Vascular tissue | ❌ No | ✅ Yes | ✅ Yes | | Seeds | ❌ No | ❌ No | ✅ Yes | | Flowers | ❌ No | ❌ No | ✅ (many) | | Dominant stage | Gametophyte | Sporophyte | Sporophyte | | Typical size | Very small | Medium | Large |

Mosses play major roles in ecosystems:

If the excerpt is just “Mosses”, it most likely functions as:

Mosses 是 moss(苔藓) 的复数形式,指 苔藓植物。 苔藓是 非维管植物,不开花、不结种子,通过 孢子繁殖。

简单说: mosses = 苔藓植物

苔藓具有 世代交替,但特点是:

这就是苔藓多分布在 阴湿环境 的原因。

| 特点 | 苔藓 | 蕨类 | 种子植物 | | ---- | --- | --- | ---- | | 输导组织 | ❌ | ✅ | ✅ | | 种子 | ❌ | ❌ | ✅ | | 花 | ❌ | ❌ | ✅ | | 显性世代 | 配子体 | 孢子体 | 孢子体 | | 体型 | 很小 | 中等 | 大 |

如果只看到 Mosses,通常表示:

If you want, paste the full sentence、图表或考试题 where Mosses appears, and I’ll explain 它在该语境中的确切含义与考点(中英对照)。

Sitka spruce

Sitka spruce is a large evergreen coniferous tree (Picea sitchensis) native to the Pacific Northwest coast of North America—from Alaska down through British Columbia to California.

In simple terms: Sitka spruce = a very tall coastal evergreen tree common in wet, cool climates.

If you see “Sitka spruce” alone, it’s usually:

Example sentence:

Sitka spruce 指 西加云杉(学名 Picea sitchensis),是一种生长在 北美太平洋沿岸 的 大型常绿针叶树。

简单说: Sitka spruce = 太平洋沿岸常见的高大云杉树

如果单独出现 Sitka spruce,通常是:

If you want, you can paste the full sentence or diagram (for example from a Science 10 or BC ecosystems reading), and I’ll explain why Sitka spruce is mentioned there and what point it supports, in both English and Chinese.

blue jays

Blue jays is the plural noun of blue jay, a medium-sized songbird native to North America. They are famous for their bright blue feathers, white chest, black markings, and loud, intelligent behavior.

In simple terms: blue jays = bright blue birds known for loud calls and high intelligence.

Blue jays are important to ecosystems because they:

If the excerpt is just “blue jays”, it most likely functions as:

blue jays 是 blue jay(蓝松鸦) 的复数形式,指一种生活在 北美 的鸟类,以 鲜艳的蓝色羽毛 和 吵闹、聪明 而著名。

简单说: blue jays = 蓝松鸦,蓝色、聪明、声音很大的鸟

如果只看到 blue jays,通常表示:

If you’d like, paste the full sentence or paragraph (for example from a Science 10 ecosystem or food-web passage), and I’ll explain their exact role and meaning in context in both English and Chinese.

chipmunks

Chipmunks is the plural noun of chipmunk. It refers to small, ground-dwelling rodents in the squirrel family, easily recognized by their striped backs and cheek pouches.

In simple terms: chipmunks = small striped rodents that store food in their cheeks.

Chipmunks play useful roles in ecosystems:

If the excerpt is just “chipmunks”, it likely functions as:

chipmunks 是 chipmunk 的复数形式,指 花栗鼠,是一种体型很小、背部有明显条纹的啮齿动物。

简单说: chipmunks = 花栗鼠,小型、有条纹、会囤食物的动物

如果只看到 chipmunks,通常表示:

If you want, you can paste the full sentence or paragraph (for example from a Science 10 ecosystem or food-web passage), and I’ll explain the exact role and meaning in context in both English and Chinese.

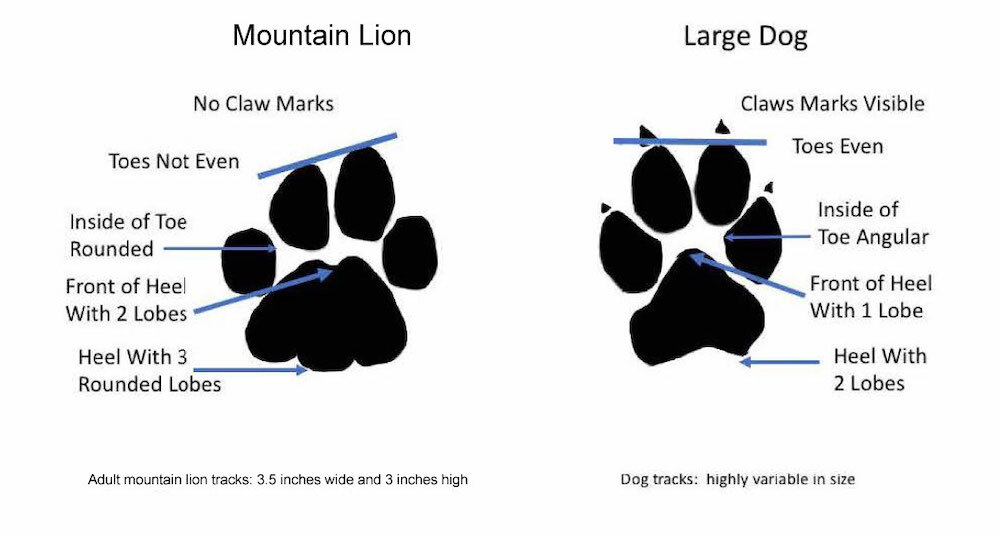

cougars

Cougars is the plural noun of cougar. It refers to a large wild cat species found mainly in the Americas. The same animal is also commonly called mountain lion, puma, or panther (regional names).

In simple terms: cougars = large wild cats that are powerful predators.

Cougars live in a wide range of environments:

They are found from Canada through the western United States and down into South America. In British Columbia, cougars are part of the natural ecosystem.

Cougars are apex predators:

If the excerpt is just “cougars”, it most likely functions as:

cougars 是 cougar 的复数形式,指 美洲狮(也常称为 山狮 / 美洲豹猫 / puma,不同地区叫法不同)。

简单说: cougars = 美洲狮,一种大型野生猫科动物

美洲狮分布范围很广,包括:

在 加拿大(包括 BC),美洲狮是重要的本地大型捕食者。

美洲狮属于 顶级捕食者:

如果只看到 cougars,通常表示:

If you want, you can paste the full sentence or paragraph (for example from a Science 10 food web or forest ecosystem passage), and I’ll explain exact meaning, ecological role, and exam-relevant points in both English and Chinese.

skunks

Skunks is the plural noun of skunk. It refers to small to medium-sized mammals best known for their ability to spray a strong-smelling liquid as a defense.

In simple terms: skunks = animals famous for their black-and-white coloring and strong defensive smell.

Skunks do not spray immediately. They usually:

The spray can reach several meters and is very hard to remove.

Skunks are common in North and Central America and often live:

They adapt well to human environments.

If the excerpt is just “skunks”, it most likely functions as:

skunks 是 skunk(臭鼬) 的复数形式,指 臭鼬 这种哺乳动物。 臭鼬以 能喷射强烈臭味的防御液体 而闻名。

简单说: skunks = 臭鼬,多为黑白相间、以臭味防身的动物

臭鼬通常 不会立刻喷臭液,而是先警告:

臭鼬主要分布在 北美和中美洲,在加拿大十分常见,常见于:

如果只出现一个词 skunks,通常是:

If you want, you can paste the full sentence or paragraph (for example from a Science 10 ecosystem passage), and I’ll explain how skunks fit into that context, in both English and Chinese.

breakage

Breakage is a noun that refers to the act, process, or result of breaking. It describes damage caused when something breaks, often into pieces or becomes structurally weakened.

In simple terms: breakage = the damage or loss caused by something breaking.

Breakage can apply to many contexts:

Plants & nature:

“Snow causes branch breakage.” (Branches snap under heavy snow.)

Materials & objects:

“Glass breakage during transport.”

Hair & fibers:

“Hair breakage from dryness or heat.”

Engineering & science:

“Metal breakage due to stress or fatigue.”

👉 The focus is usually on damage or loss, not the action itself.

Related forms:

break (verb)

Common patterns:

Example sentences:

In biology or geography texts, breakage often appears when explaining adaptations:

So here, breakage means structural damage caused by external forces.

breakage 是一个名词,指 破损、断裂、损坏,强调 由于“断裂”而造成的结果或损失。

简单说: breakage = 因断裂造成的损坏

自然 / 植物:

积雪造成树枝 折断

物品运输:

玻璃制品的 破损率

日常生活:

头发 断裂

工程 / 科学:

材料在应力下发生 断裂

👉 重点不是“打断这个动作”,而是 断了之后的损坏状态。

常见搭配:

减少 / 防止 breakage

例句:

在科学课中,breakage 常用于解释 适应性结构:

If you want, you can paste the full sentence or paragraph where breakage appears (for example in a Science 10 ecosystem passage), and I’ll explain the exact meaning and why it’s used there, in both English and Chinese.

shed

Shed can be both a verb and a noun, with related but different meanings.

To shed means to let something fall off, drop, or be removed naturally, often as part of a normal process.

Common uses:

👉 Core idea: losing or getting rid of something, often naturally or gradually.

A shed (noun) is a small, simple building, usually for storage, such as a garden shed or tool shed.

Example:

Verb forms of “shed” are irregular but simple:

Examples:

In science texts (like Science 10), shed usually means:

Example:

| Form | Meaning | Example | | --------------- | -------------- | ----------------------- | | shed (verb) | lose naturally | “Snakes shed skin.” | | shed (noun) | small building | “A tool shed.” |

shed 既可以是动词,也可以是名词。

shed 的意思是:自然脱落、脱去、摆脱,通常指一个自然或周期性过程。

常见用法:

例句:

👉 核心含义:自然地失去或去掉某物。

shed(名词)指 小棚子 / 储物间,常用于放工具或杂物。

例句:

shed 的动词三种形式相同:

例:

在生物或地理课中,shed 常用于说明适应环境:

If you want, you can paste the full sentence where shed appears (for example, “deciduous trees shed their leaves”), and I’ll explain exact meaning + exam-relevant reasoning in both English and Chinese.

mosses

Mosses is the plural noun of moss. It refers to a group of small, non-vascular plants (called bryophytes) that do not have true roots, stems, or leaves and do not produce flowers or seeds.

In simple terms: mosses = tiny green plants that grow in damp places and reproduce by spores.

Mosses share several defining features:

Mosses show alternation of generations, but unlike ferns:

This explains why mosses thrive in wet, shady environments.

| Feature | Mosses | Ferns | Seed plants | | --------------- | ----------- | ---------- | ----------- | | Vascular tissue | ❌ No | ✅ Yes | ✅ Yes | | Seeds | ❌ No | ❌ No | ✅ Yes | | Flowers | ❌ No | ❌ No | ✅ (many) | | Dominant stage | Gametophyte | Sporophyte | Sporophyte | | Size | Very small | Medium | Large |

Mosses play major roles in ecosystems:

If the excerpt is just “mosses”, it is most likely:

mosses 是 moss(苔藓) 的复数形式,指 苔藓植物。 苔藓属于 非维管植物,不开花、不结种子,通过 孢子繁殖。

简单说: mosses = 苔藓植物

苔藓也有 世代交替,但特点是:

因此苔藓多见于 阴湿环境。

| 特点 | 苔藓 | 蕨类 | 种子植物 | | ---- | --- | --- | ---- | | 输导组织 | ❌ | ✅ | ✅ | | 种子 | ❌ | ❌ | ✅ | | 花 | ❌ | ❌ | ✅ | | 显性世代 | 配子体 | 孢子体 | 孢子体 | | 体型 | 很小 | 中等 | 大 |

苔藓在生态系统中非常重要:

如果只看到 mosses,通常表示:

If you’d like, you can paste the full sentence, diagram, or exam question where mosses appears, and I’ll explain exact meaning, comparison points, and test-relevant details in both English and Chinese.

ferns

Ferns is the plural noun of fern. It refers to non-flowering, seedless plants that reproduce using spores rather than seeds.

In simple terms: ferns = leafy plants that do not produce flowers or seeds.

Ferns are easy to recognize because they share several features:

Ferns have a two-stage life cycle called alternation of generations:

If the excerpt is just “ferns”, it likely functions as:

ferns 是 fern(蕨类植物) 的复数形式,指 蕨类植物。 蕨类植物是 不结果、不开花,而是通过 孢子繁殖 的植物。

简单说: ferns = 蕨类植物

蕨类植物具有 世代交替:

因此蕨类常见于潮湿地区。

如果只看到一个词 ferns,通常是:

If you want, you can paste the full sentence or diagram (for example from Science 10 or a forest ecosystem passage), and I’ll explain exact meaning, function, and exam-relevant points in both English and Chinese.

birch

Birch is a noun referring to a type of deciduous tree in the genus Betula. Birch trees are well known for their light-colored (often white or silvery) bark and are common in cool and temperate regions, including Canada.

In simple terms: birch = a deciduous tree with pale bark that sheds its leaves seasonally.

Each type shares the same general features but differs slightly in bark color and habitat.

Birch trees:

If the excerpt is just “birch”, it most likely functions as:

birch 是一个名词,指 桦树(桦木),属于 桦木属(Betula),是一种常见的 落叶乔木,在加拿大非常普遍。

简单说: birch = 桦树,一种树皮浅色、会季节性落叶的树

如果只看到一个词 birch,通常是:

If you want, you can paste the full sentence or paragraph where birch appears (for example, a Science 10 reading about Canadian forests), and I’ll explain the exact meaning and exam-relevant nuance in both English and Chinese.

Reptiles

Reptiles is the plural noun referring to animals in the class Reptilia. They are cold-blooded (ectothermic) vertebrates that typically have dry, scaly skin and reproduce mainly by laying eggs (with some exceptions).

In simple terms: reptiles = snakes, lizards, turtles, crocodiles, and their relatives.

Most reptiles share these features:

| Feature | Reptiles | Amphibians | | ---------- | ----------------- | ----------------------------- | | Skin | Dry, scaly | Moist, smooth | | Eggs | On land (usually) | In water | | Body temp | Cold-blooded | Cold-blooded | | Life cycle | No larval stage | Metamorphosis (e.g., tadpole) |

Reptiles live on every continent except Antarctica, from deserts to forests to wetlands. Many bask in the sun to warm up, and some hibernate or brumate in cold seasons.

If the excerpt is just “Reptiles”, it most likely functions as:

Reptiles 是 爬行动物 的复数形式,指 爬行动物纲(Reptilia) 的动物。 它们是 变温动物,皮肤通常 干燥、有鳞片,大多数 产卵繁殖。

简单说: reptiles = 蛇、蜥蜴、龟、鳄鱼等动物。

| 特征 | 爬行动物 | 两栖动物 | | ---- | ----- | ----- | | 皮肤 | 干燥、有鳞 | 湿润、光滑 | | 产卵 | 多在陆地 | 多在水中 | | 生命周期 | 无变态 | 有变态 | | 呼吸 | 肺 | 幼体可用鳃 |

爬行动物分布广泛(除南极洲外),常通过 晒太阳 来升高体温,寒冷季节可能进入 冬眠/蛰伏(brumation)。

如果只看到一个词 Reptiles,通常是:

If you want, paste the full sentence or paragraph where Reptiles appears (e.g., a Science 10 text or exam question), and I’ll explain its exact meaning and role in that context in both English and Chinese.

camouflages

Camouflages is the third-person singular form of the verb camouflage.

Example:

To camouflage means reducing visibility by matching:

So camouflages emphasizes the function: something actively makes another thing harder to see.

a) Biology / ecology

b) Military / equipment

c) Figurative / abstract use

Sentence pattern:

Subject + camouflages + object + (environment)

Example:

camouflages 是动词 camouflage(伪装) 的 第三人称单数形式。

意思是: 通过与环境融为一体来隐藏、掩护、伪装某物 常译为:伪装、掩盖、使不易被发现

例句:

“camouflages” 强调 功能性隐藏,方式包括:

本质是: 👉 让观察者“看不出来”

① 生物 / 自然科学

② 军事 / 技术

③ 比喻用法

常见结构:

主语 + camouflages + 宾语 + 环境

例:

If you want, you can paste the full sentence or paragraph where camouflages appears (for example, a biology passage describing animals like caribou or spruce forests), and I’ll explain exact meaning, grammar role, and nuance in both English and Chinese.

waxy

Waxy is an adjective meaning like wax or covered with wax. It describes something that has the look, feel, or properties of wax—for example, being smooth, slightly shiny, slippery, or water-repellent.

In simple terms: waxy = having a wax-like surface or quality.

When something is described as waxy, it often has one or more of these traits:

a) Biology & plants

Many leaves have a waxy coating (cuticle) that reduces water loss.

“The leaves are waxy, helping the plant survive dry conditions.”

b) Food & materials

c) Medicine & appearance

d) Figurative / descriptive language

Example sentences:

waxy 是一个形容词,意思是 像蜡一样的 / 有蜡质的 / 覆盖着蜡的。 常用来形容表面或外观 光滑、略有光泽、防水、滑溜。

简单说: waxy = 蜡状的、蜡质的。

被形容为 waxy 的东西,通常具有:

① 生物 / 植物

许多植物叶片有 蜡质层,用于减少水分蒸发。

“这种植物的叶子是 蜡质的。”

② 食物 / 材料

③ 医学 / 外貌描写

④ 比喻 / 文学用法

相关词:

wax(蜡,名词)

例句:

If you’d like, you can paste the full sentence or passage where waxy appears (science text, poem, exam question, etc.), and I’ll explain the exact meaning and nuance in that context in both English and Chinese.

spruce

Spruce is a noun referring to a type of evergreen coniferous tree in the genus Picea. Spruce trees are common in cold and temperate regions, especially across Canada, Alaska, Scandinavia, and northern Europe.

In simple terms: spruce = a tall evergreen tree with sharp needles and hanging cones.

| Tree | Needles | Cones | Texture | | ---------- | --------------------- | ------------- | --------------- | | Spruce | Sharp, single needles | Hang down | Rough branches | | Fir | Flat, soft needles | Stand upright | Smooth branches | | Pine | Needles in bundles | Hang down | Long needles |

This distinction is very common in biology and forestry texts.

Besides the tree, spruce can also be used as:

Adjective: spruce = neat, tidy, smart-looking

“He looks very spruce in his uniform.”

Verb (phrasal): spruce up = clean, decorate, or improve appearance

“They spruced up the classroom.”

These meanings come from an older sense of “spruce” meaning lively or elegant.

If the excerpt is just “spruce” by itself (like your example), it most likely functions as:

spruce 是一个名词,指 云杉,一种常见的 常绿针叶树,属于 云杉属(Picea)。 在加拿大和北欧地区非常常见。

简单来说: spruce = 云杉,一种高大的常绿针叶树。

| 树种 | 针叶 | 球果 | 手感 | | -------------- | ---- | ---- | ---- | | 云杉(spruce) | 尖、单根 | 下垂 | 枝条粗糙 | | 冷杉(fir) | 扁平柔软 | 向上直立 | 枝条光滑 | | 松树(pine) | 成束 | 下垂 | 针叶较长 |

除了“云杉”,spruce 还有引申用法:

形容词: spruce = 整洁的、精神的

他穿着制服,看起来很 精神。

短语动词: spruce up = 收拾、装饰、打扮一下

把教室 收拾得更好看一些。

如果只看到一个词 spruce,通常是:

If you want, you can paste the full sentence or surrounding paragraph (for example from a poem, biology text, or exam question), and I’ll explain exact meaning, tone, and implication in both English and Chinese.

Caribou

Caribou is the common name for a large, hoofed, deer-like animal in the species Rangifer tarandus. In many parts of the world, the same species is called reindeer.

So in simple terms: Caribou = a large northern deer, closely related to (and often the same as) reindeer.

Think of it like a naming convention:

Caribou is commonly used as both singular and plural:

Sometimes you’ll see caribous, but caribou is more common.

If the excerpt is just “Caribou” by itself (like your example), it most likely serves as:

Caribou 指一种生活在寒带/亚寒带的大型鹿科动物,中文常译为: 北美驯鹿 / 北美驯鹿(野生型) / 卡里布驯鹿(不同资料翻译略有差异)

它和 “reindeer(驯鹿)” 在生物学上通常是 同一种动物(同一物种:Rangifer tarandus),只是不同地区习惯叫法不同。

简单记忆:

英语里 caribou 常同时当 单数和复数:

也可能看到 caribous,但更常见还是 caribou。

如果只出现一个词 Caribou(没有句子),它很可能是:

大致读作:“开-ri-bu”(CARE-ih-boo)

If you paste the sentence or paragraph around “Caribou”, I can explain the exact meaning, including whether it’s literal (the animal) or symbolic/metaphorical in that context, in both English and Chinese.

hares

Hares is the plural of hare — a type of wild mammal that looks similar to a rabbit. So the excerpt is simply naming the animal: “hares” = more than one hare.

People often mix up hares and rabbits, but they’re not the same animal.

Babies:

Baby hares (called leverets) are born with fur and eyes open, and can move soon after birth.

Depending on the context, “hares” may do different jobs in a sentence:

Since your excerpt is only one word with no sentence, it most likely functions as a label/title or a standalone noun.

hares 是 hare(野兔) 的 复数形式,表示 不止一只野兔。 所以这段摘录只有一个词,本身就是在说:“野兔们 / 多只野兔”。

很多人会把 hare 和 rabbit 都叫“兔子”,但它们在生物学和习性上不同:

幼崽差异:

野兔幼崽(leveret)出生时通常 有毛、睁眼、较快能活动;

因为你给的摘录只有一个词,没有句子,所以它可能是:

如果放进句子里,它也可以当名词使用:

If you tell me where this word appears (a sentence, poem, biology text, idiom like “Mad as a March hare,” etc.), I can explain the exact meaning in that context in both languages.

Write 4 or 5 sentences about things you can do, but make one of them false.

1) I can translate text between over 100 different languages in just a few seconds.

2) I am able to generate high-quality images based on any description you give me.

3) I can feel physical emotions like happiness or sadness when we talk.

4) I am able to write complex computer code in languages like Python, C++, and JavaScript.

5) I can summarize a 500-page book into a few short paragraphs almost instantly.

3. They _________ do it today.

can't \ can

3. They _________ do it today.

can't

3. They _________ do it today.

can

• Corporele bezitsverschaffing (art. 3:114 BW):

het verschaffen van bezit door een feitelijke, lichamelijke overdracht van een zaak

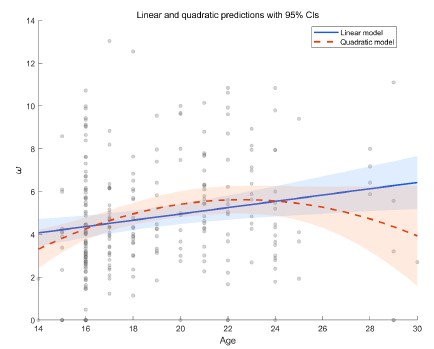

Introducción

En términos globales, a la introducción le falta desarrollo del problema desde la literatura y desde la documentación de la universidad (reglamentos, modelo educativo, políticas de acceso, etc,). Es importante hacer esa revisión para contextualizar el problema: el análisis de la distribución de notas, su variación en tiempo (cuando se pueda) y los factores asociados. Haciendo un mejor contexto, podemos definir mejor la pregunta y guíar los análisis.

En la prensa, durante estos días apareció algo sobre esta discusión: - https://www.lun.com/Pages/NewsDetail.aspx?dt=2025-12-30&NewsID=556972&BodyID=0&PaginaId=3

Además, hay fuentes internacionales interesantes con las que iniciar: - https://www.tandfonline.com/doi/full/10.1080/02602938.2020.1795617 - https://www.tandfonline.com/doi/full/10.1080/03075079.2025.2470297#:~:text=Generally%2C%20grade%20inflation%20is%20harmful,;%20Yeritsyan%20and%20Mjelde%202024).

Reviewer #1 (Public review):

Summary:

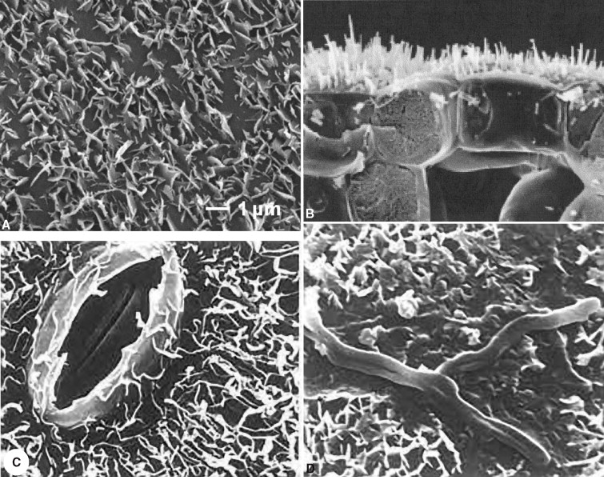

A central function of glial cells is the ensheathment of axons. Wrapping of larger-diameter axons involves myelin-forming glial classes (such as oligodendrocytes), whereas smaller axons are covered by non-myelin forming glial processes (such as olfactory ensheathing glia). While we have some insights into the underlying molecular mechanisms orchestrating myelination, our understanding of the signaling pathways at work in non-myelinating glia remains limited. As non-myelinating glial ensheathment of axons is highly conserved in both vertebrates and invertebrates, the nervous system of Drosophila melanogaster, and in particular the larval peripheral nerves, have emerged as powerful model to elucidate the regulation of axon ensheathment by a class of glia called wrapping glia. This study seeks to specifically address the question, as to which molecular mechanisms contribute to the regulation of the extent of glial ensheathment focusing on the interaction of wrapping glia with axons.

Strengths and Weaknesses:

For this purpose, the study combines state-of-the-art genetic approaches with high-resolution imaging, including classic electron microscopy. The genetic methods involve RNAi mediated knockdown, acute Crispr-Cas9 knock-outs and genetic epistasis approaches to manipulate gene function with the help of cell-type specific drivers. The successful use of acute Crispr-Cas9 mediated knockout tools (which required the generation of new genetic reagents for this study) will be of general interest to the Drosophila community.

The authors set out to identify new molecular determinants mediating the extent of axon wrapping in the peripheral nerves of third instar wandering Drosophila larvae. They could show that over-expressing a constitutive-active version of the Fibroblast growth factor receptor Heartless (Htl) causes an increase of wrapping glial branching, leading to the formation of swellings in nerves close to the cell body (named bulges). To identify new determinants involved in axon wrapping acting downstream of Htl, the authors next conducted an impressive large-scale genetic interaction screen (which has become rare, but remains a very powerful approach), and identified Uninflatable (Uif) in this way. Uif is a large single-pass transmembrane protein which contains a whole series of extracellular domains, including Epidermal growth factor-like domains. Linking this protein to glial branch formation is novel, as it has so far been mostly studied in the context of tracheal maturation and growth. Intriguingly, a knock-down or knock-out of uif reduces branch complexity and also suppresses htl over-expression defects. Importantly, uif over-expression causes the formation of excessive membrane stacks. Together these observations are in in line with the notion that htl may act upstream of uif.

Further epistasis experiments using this model implicated also the Notch signaling pathway as a crucial regulator of glial wrapping: reduction in Notch signaling reduces wrapping, whereas over-activation of the pathway increases axonal wrapping (but does not cause the formation of bulges). Importantly, defects caused by over-expression of uif can be suppressed by activated Notch signaling. Knock-down experiments in neurons suggest further that neither Delta nor Serrate act as neuronal ligands to activate Notch signaling in wrapping glia, whereas knock-down of Contactin, a GPI anchored Immunoglobulin domain containing protein led to reduced axon wrapping by glia, and thus could act as an activating ligand in this context.

Based on these results the authors put forward a model proposing that Uif normally suppresses Notch signaling, and that activation of Notch by Contactin leads to suppression of Htl, to trigger the ensheathment of axons. While these are intriguing propositions, future experiments will need to conclusively address whether and how Uif could "stabilize" a specific membrane domain capable to interact with specific axons.

Moreover, to obtain evidence for Uif suppression by Notch to inhibit "precocious" axon wrapping and for a "gradual increase" of Notch signaling that silences uif and htl, (1) reporters for N and Htl signaling in larvae, (2) monitoring of different stages at a time point when branch extension begins, and (3) a reagent enabling the visualization of Uif expression could be important next tools/approaches. Considering the qualitatively different phenotypes of reduced branching, compared to excessive membrane stacks close to cell bodies, it would perhaps be worthwhile to explore more deeply how membrane formation in wrapping glia is orchestrated at the subcellular level by Uif.

However, the points raised above remain at present technically difficult to address because of the lack of appropriate genetic reagents. Also more detailed electron microscopy analyses of early developmental stages and comparisons of effects on cell bodies compared to branches will be very labor-intensive, and indeed may represent a new study.

In summary, in light of the importance of correct ensheathment of axons by glia for neuronal function, the proposed model for the interactions between Htl, Uif and N to control the correct extent of neuron and glial contacts will be of general interest to the glial biology community.

Comments on revisions:

The authors have addressed all my comments. However, the sgRNAs in the Star method table are still all for cleavage just before the transmembrane domain, while the Supplemental figure suggests different locations.

Author response:

The following is the authors’ response to the current reviews.

We would like to proceed with this paper as a Version of Record but we will correct the mistake that we made in the Key resources table. As the reviewer noted we had added the wrong guide RNA sequence here. We are super thankful to the reviewer and apologize for the mistake.

The following is the authors’ response to the original reviews.

eLife Assessment

This important study identifies a new key factor in orchestrating the process of glial wrapping of axons in Drosophila wandering larvae. The evidence supporting the claims of the authors is convincing and the EM studies are of outstanding quality.

We are thankful for this kind and very positive judgment.

However, the quantification of the wrapping index, the role of Htl/Uif/Notch signaling in differentiation vs growth/wrapping, and the mechanism of how Uif "stabilizes" a specific membrane domain capable of interacting with specific axons might require further clarification or discussion.

This is now addressed

Reviewer #1 (Public review):

Summary:

A central function of glial cells is the ensheathment of axons. Wrapping of larger-diameter axons involves myelin-forming glial classes (such as oligodendrocytes), whereas smaller axons are covered by non-myelin-forming glial processes (such as olfactory ensheathing glia). While we have some insights into the underlying molecular mechanisms orchestrating myelination, our understanding of the signaling pathways at work in non-myelinating glia remains limited. As non-myelinating glial ensheathment of axons is highly conserved in both vertebrates and invertebrates, the nervous system of Drosophila melanogaster, and in particular the larval peripheral nerves, have emerged as a powerful model to elucidate the regulation of axon ensheathment by a class of glia called wrapping glia. Using this model, this study seeks to specifically address the question, as to which molecular mechanisms contribute to the regulation of the extent of glial ensheathment focusing on the interaction of wrapping glia with axons.

Strengths and Weaknesses:

For this purpose, the study combines state-of-the-art genetic approaches with high-resolution imaging, including classic electron microscopy. The genetic methods involve RNAi-mediated knockdown, acute Crispr-Cas9 knock-outs, and genetic epistasis approaches to manipulate gene function with the help of cell-type specific drivers. The successful use of acute Crispr-Cas9 mediated knockout tools (which required the generation of new genetic reagents for this study) will be of general interest to the Drosophila community.

The authors set out to identify new molecular determinants mediating the extent of axon wrapping in the peripheral nerves of third-instar wandering Drosophila larvae. They could show that over-expressing a constitutive-active version of the Fibroblast growth factor receptor Heartless (Htl) causes an increase in wrapping glial branching, leading to the formation of swellings in nerves close to the cell body (named bulges). To identify new determinants involved in axon wrapping acting downstream of Htl, the authors next conducted an impressive large-scale genetic interaction screen (which has become rare, but remains a very powerful approach), and identified Uninflatable (Uif) in this way. Uif is a large single-pass transmembrane protein that contains a whole series of extracellular domains, including Epidermal growth factor-like domains. Linking this protein to glial branch formation is novel, as it has so far been mostly studied in the context of tracheal maturation and growth. Intriguingly, a knock-down or knock-out of uif reduces branch complexity and also suppresses htl over-expression defects. Importantly, uif over-expression causes the formation of excessive membrane stacks. Together these observations are in in line with the notion that htl may act upstream of uif.

Further epistasis experiments using this model implicated also the Notch signaling pathway as a crucial regulator of glial wrapping: reduction in Notch signaling reduces wrapping, whereas over-activation of the pathway increases axonal wrapping (but does not cause the formation of bulges). Importantly, defects caused by the over-expression of uif can be suppressed by activated Notch signaling. Knock-down experiments in neurons suggest further that neither Delta nor Serrate act as neuronal ligands to activate Notch signaling in wrapping glia, whereas knock-down of Contactin, a GPI anchored Immunoglobulin domain-containing protein led to reduced axon wrapping by glia, and thus could act as an activating ligand in this context.

Based on these results the authors put forward a model proposing that Uif normally suppresses Notch signaling, and that activation of Notch by Contactin leads to suppression of Htl, to trigger the ensheathment of axons. While these are intriguing propositions, future experiments would need to conclusively address whether and how Uif could "stabilize" a specific membrane domain capable of interacting with specific axons.

We absolutely agree with the reviewer that it would be fantastic to understand whether and how Uif could stabilize specific membrane domains that are capable of interacting with axons. To address this we need to be able to label such membrane domains and unfortunately we still cannot do so. We analyzed the distribution of PIP2/PIP3 but failed to detect any differences. Thus we still lack wrapping glial membrane markers that are able to label specific compartments.

Moreover, to obtain evidence for Uif suppression by Notch to inhibit "precocious" axon wrapping and for a "gradual increase" of Notch signaling that silences uif and htl, (1) reporters for N and Htl signaling in larvae, (2) monitoring of different stages at a time point when branch extension begins, and (3) a reagent enabling to visualize Uif expression could be important next tools/approaches. Considering the qualitatively different phenotypes of reduced branching, compared to excessive membrane stacks close to cell bodies, it would perhaps be worthwhile to explore more deeply how membrane formation in wrapping glia is orchestrated at the subcellular level by Uif.

In the revised version of the manuscript we have now included the use of Notch and RTK-signaling reporters.

(1) reporters for N and Htl signaling in larvae,

We had already employed the classic reporter generated by the Bray lab: Gbe-Su(H)-lacZ. This unfortunately failed to detect any activity in larval wrapping glia nuclei but was able to detect Notch activity in the adult wrapping glia (Figure S5C,F).

We did, as requested, the analysis of a RTK signaling reporter. The activity of sty-lacZ that we had previously characterized in the lab (Sieglitz et al., 2013) increases by 22% when Notch is silenced. Given the normal distribution of the data points, this shows a trend which, however, is not in the significance range. We have not included this in the paper, but would be happy to do so, if requested.

Author response image 1.

(2) monitoring of different stages at a time point when branch extension begins,

The reviewer asks for an important question; however, this is extremely difficult to tackle experimentally. It would require a detailed electron microscopic analysis of early larval stages which cannot be done in a reasonable amount of time. We have however added additional information on wrapping glia growth summarizing recently published work from the lab (Kautzmann et al., 2025).

(3) a reagent enabling to visualize Uif expression could be important next tools/approaches.

The final comment of the reviewer also addresses an extremely relevant and important issue. We employed antibodies generated by the lab of R. Ward, but they did not allow detection of the protein in larval nerves. We also attempted to generate anti-Uif peptide antibodies but these antibodies unfortunately do not work in tissue. We are still trying to generate suitable reagents but for the current revision cannot offer any solution.

Lastly, we agree with the reviewer that it would be worthwhile to explore how Uif controls membrane formation at the subcellular level. This, however, is a completely new project and will require the identification of the binding partners of Uif in wrapping glia to start working on a link between Uif and membrane extension. The reduced branching phenotype might well be a direct consequence of excessive membrane formation as it likely blocks recourses needed for efficient growth of glial processes.

Finally, in light of the importance of correct ensheathment of axons by glia for neuronal function, this study will be of general interest to the glial biology community.

We are very grateful for this very positive comment.

Reviewer #2 (Public review):

The FGF receptor Heartless has previously been implicated in Drosophila peripheral glial growth and axonal wrapping. Here, the authors perform a large-scale screen of over 2600 RNAi lines to find factors that control the downstream signaling in this process. They identify a transmembrane protein Uninflatable to be necessary for the formation of plasma membrane domains. They further find that a Uif regulatory target, Notch, is necessary for glial wrapping. Interestingly, additional evidence suggests Notch itself regulates uif and htl, suggesting a feedback system. Together, they propose that Uif functions as a "switch" to regulate the balance between glial growl and wrapping of axons.

Little is known about how glial cell properties are coordinated with axons, and the identification of Uif is a promising link to shed light on this orchestration. The manuscript is well-written, and the experiments are generally well-controlled. The EM studies in particular are of outstanding quality and really help to mechanistically dissect the consequences of Uif and Notch signaling in the regulation of glial processes. Together, this valuable study provides convincing evidence of a new player coordinating the interactions controlling the glial wrapping of axons.

Reviewer #1 (Recommendations for the authors):

(1) To be reproducible and understandable, it would be important to provide detailed information about crosses and genotypes, as reagents are currently listed individually and genotypes are provided in rather simplified versions.

We have added the requested information to the text.

(2) Neurons are inherently resistant to RNAi-mediated knockdown and it thus may be necessary to introduce the over-expression of UAS-dcr2 when assessing neuronal requirements and to specifically exclude Delta or Serrate as ligands.

We agree with the reviewer and have repeated the knockdown experiments using UAS-dcr2 and obtained the same results. To use an RNAi independent approach we also employed sgRNA expression in the presence of Cas9. The neuron specific gene knockout also showed no glial wrapping phenotype. These results are now added to the manuscript.

(3) Throughout the manuscript, the authors use the terms "growth" and "differentiation" referring to the extent of branch formation versus axon wrapping. However glial differentiation and growth could have different meanings (for instance, growth could implicate changes in cell size or numbers, while differentiation could refer to a change from an immature precursor-like state to a mature cell identity). It may thus be useful to replace these general terms with more specific ones.

This is a very good point. When we use the term “growth” we only infer on glial cell growth and thus, the increase in cell mass. Proliferation is excluded and this is now explicitly stated in the manuscript. The term “differentiation” is indeed difficult and therefore we changed it either directly addressing the morphology or to axon wrapping.

(4) Page 4. "remake" fibers should be Remak fibers.

We have corrected this typo.

(5) Page 5. "Heartless controls glial growth but does promote axonal wrapping", this sentence is not clear in its message because of the "but".

We have corrected this sentence.

(6) Generally, many gene names are used as abbreviations without introductions (e.g. Sos, Rl, Msk on page 7). These would require an introduction.

All genetic elements are now introduced.

(7) Page 8. When Cas9 is expressed ubiquitously ... It would be helpful to add how this is done (nsyb-Gal4, nrv2-Gal4, or another Gal4 driver are used to express UAS-Cas9, as the listed Gal4 drivers seem to be specific to neurons or glia?).

This now added. We used the following genotype for ubiquitous knockout using the four different uif specific sgRNAs (UAS-uif<sup>sgRNA X</sup>): [w; UAS-Cas9/ Df(2L)ED438; da-Gal4 /UAS-uif<sup>sgRNA X</sup>]. We used the following genotype for a glial knockout in wrapping glia ([+/+; UAS-Cas9/+; nrv2-Gal4,UAS-CD8::mCherry/UAS-uif<sup>sgRNA X</sup>].

We had previously shown that nrv2-Gal4 is a wrapping glia specific driver in the larval PNS (Kottmeier et al., 2020).

Moreover, the authors mention that "This indicates that a putatively secreted version of Uif is not functional". This conclusion would need to be explained in detail.

First, because it requires quite some detective work to understand the panels in Figure 1 on which this statement is based; second, since the acutely induced double-stranded breaks in the DNA and subsequent repair may cause variable defects, it may indeed be not certain what changes have been induced in each cell; and third considering that there is a putative cleavage site, would it be not be expected that the protein is not functional, when it is not cleaved, and there is no secreted extracellular part (unless the cleavage site is not required). The latter could probably only be addressed by rescue experiments with UAS transgenes with identified changes.

We agree with the reviewer. The rescue experiments are unfortunately difficult, since even expression of a full length uif construct does not fully rescue the uif mutant phenotype (Loubéry et al., 2014). We therefore explained the conclusion taken from the different sgRNA knockout experiments better and also removed the statement that secreted Uif forms are non-functional.

In the Star Method reagent table, it is not clear, why all 8 oligonucleotides are for "uif cleavage just before transmembrane domain" despite targeting different locations.

We are very sorry for this mistake and corrected it now. Thank you very much for spotting this.

(8) Page 13. However, we expressed activated Notch,... the word "when" seems to be missing, and it would be helpful to specify how this was done (over-expression of N[ICD].

We corrected it now accordingly.

(9) To strengthen the point similarity of phenotypes caused by Htl pathway over-activation and Uif over-expression, it would be helpful to also show an EM electron micrograph of the former.

We now added an extensive description of the phenotype caused by activated Heartless. This is shown as new Figure 2.

(10) Figure 4C, the larval nerve seems to be younger, as many extracellular spaces between axons are detected.

This perception is a misunderstanding and we are sorry for not explaining this better. The third instar larvae are all age matched. The particular specimen in Figure 4C shows some fixation artifacts that result in the loss of material. Importantly, however, membranes are not affected. Similar loss of material is also seen in Figure 6C. For further examples please see a study on nerve anatomy by (Kautzmann et al., 2025).

(11) The model could be presented as a figure panel in the manuscript. To connect the recommendation section with the above public review, a step forward could be to adjust the model and the wording in the Result section and to move some of the less explored points and thoughts to the discussion.

We are thankful for this advice and have moved an updated model figure to the end of the main text (now Figure 7).

Reviewer #2 (Recommendations for the authors):

(1) Screen and the interest in Uif: Out of the ~62 genes that came out of the RNAi screen, why did the authors prioritize and focus on Uif? What were the other genes that came out of the screen, and did any of those impinge on Notch signaling?

We have now more thoroughly described the results of the screen. We selected Uif as it was the only transmembrane // adhesion protein identified and given the findings that Uif decorate apical membrane domains in epithelial cells, we hoped to identify a protein specific for a similar membrane domain in wrapping glia.

Notch as well as its downstream transcription factors were not included in the initial screen, and were only analyzed, once we had seen the contribution of Notch. Interestingly, here is one single hit in our screen linked to Notch signaling: Gp150. Here however, we have tested additional dsRNA expressing lines and were not able to reproduce the phenotype. This information is added to the discussion.

The authors performed a large-scale screen of 2600 RNAi lines, it seems more details about what came out of the screen and why the focus on Uif would benefit the manuscript.

See above comment.

Relatedly, there would be a discussion of the limitations of the screen, and that it was really a screen looking to modify a gain-of-function phenotype from the activated Htl allele; it seems a screen of this design may lead to artifacts that may not reflect endogenous signaling.

We have now added a short paragraph on suppressor screens, employing gain of function alleles to the introduction.

“In Drosophila, such suppressor screens have been used successfully many times (Macagno et al., 2014; Rebay et al., 2000; Therrien et al., 2000). Possibly, such screens also uncover genes that are not directly linked to the signaling pathway under study but this can be tested in further experiments. Our screen led to the unexpected identification of the large transmembrane protein Uninflatable, which in epithelial cells localizes to the apical plasma membrane. Loss of uninflatable suppresses the phenotype caused by activated RTK signaling. In addition, we find that uif knockdown and uif knockout larvae show impaired glial growth while an excess of Uninflatable leads to the formation of ectopic wrapping membrane processes that, however, fail to interact with axons. uninflatable is also known to inhibit Notch. “