The role of the state in capitalism is to regulate competition between capitalists. Without the state's mediation, there is no way to bring order to this competition. And now we are seeing a weakening of the state's capacity to moderate this competition. The new capitalists change the rules themselves and thereby acquire a certain kind of sovereignty.

- Jan 2026

-

jacobin.de

-

- Feb 2025

-

blog.joinmastodon.org

-

It doesn’t matter if the offending account is on your server or a different one, these measures are contained within your server, which is how servers with different policies can co-exist on the network:

In Mastodon, offending accounts are reported to the server where the reporting account resides. It is this server that decides what to do with the offending account's content. Reports can be forwarded to the offending account's server, but this is optional.
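The point that moderation stays "contained within your server" can be shown with a toy model. This is a minimal sketch under stated assumptions, not Mastodon's code: `Server`, `Report`, `timeline` and `forward_report` are hypothetical names, and real servers exchange ActivityPub messages rather than Python objects.

```python
from dataclasses import dataclass, field

# Toy model of server-local moderation; every name here is hypothetical.
Report = tuple[str, str]  # (reporting account, offending account)


@dataclass
class Server:
    domain: str
    suspended: set[str] = field(default_factory=set)
    reports: list[Report] = field(default_factory=list)

    def receive_report(self, report: Report) -> None:
        # A report lands on the reporter's own server, which decides what to do.
        self.reports.append(report)

    def suspend(self, account: str) -> None:
        # The decision only changes what *this* server shows its own users.
        self.suspended.add(account)

    def timeline(self, posts: list[tuple[str, str]]) -> list[str]:
        # posts are (author, text) pairs that may arrive from any server
        return [text for author, text in posts if author not in self.suspended]


def forward_report(report: Report, offender_server: Server) -> None:
    # Optional step: pass the report on to the offending account's home server.
    offender_server.receive_report(report)
```

Both servers receive the same posts; only the server that acted on the report filters them, which is how servers with different policies can coexist.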

-

- Jan 2025

-

www.linkedin.com

-

Holy Moly. Zuckerberg goes all out Elon, joins hands with Trump and Meta content is heading to pretty much ‘anything goes’

for - post - LinkedIn - misinformation - Meta joins Elon Musk and Twitter no moderation - 2025, Jan 8

-

- Aug 2024

-

connect.iftas.org


-

- Apr 2024

-

snarfed.org

-

Moderate people, not code by [[Ryan Barrett]]

-

Moderate people, not code.

-

- Jan 2024

-

www.reddit.com

-

u/taurusnoises

Nice to see that Bob Doto has joined the list of moderators at r/zettelkasten.

-

-

dougbelshaw.com

-

By its very nature, moderation is a form of censorship. You, as a community, space, or platform are deciding who and what is unacceptable. In Substack’s case, for example, they don’t allow pornography but they do allow Nazis. That’s not “free speech” but rather a business decision. If you’re making moderation based on financials, fine, but say so. Then platform users can make choices appropriately.

-

- Dec 2023

-

www.theatlantic.com

- Mar 2023

-

deliverypdf.ssrn.com

-

content-moderation subsidiarity. Just as the general principle of political subsidiarity holds that decisions should be made at the lowest organizational level capable of making such decisions,[15] content-moderation subsidiarity devolves decisions to the individual instances that make up the overall network.

Content-moderation subsidiarity

In the fediverse, content moderation decisions are made at low organizational levels (the instance level) rather than on a global scale.


-

-

nymag.com

-

OpenAI also contracted out what’s known as ghost labor: gig workers, including some in Kenya (a former British Empire state, where people speak Empire English) who make $2 an hour to read and tag the worst stuff imaginable — pedophilia, bestiality, you name it — so it can be weeded out. The filtering leads to its own issues. If you remove content with words about sex, you lose content of in-groups talking with one another about those things.

OpenAI’s use of human taggers

-

- Feb 2023

-

-

https://www.unddit.com/r/Zettelkasten/comments/115hvkj/_/j91xw9b/#comment-info

TIL: There's an archived version of deleted/edited Reddit fora...

-

-

deliverypdf.ssrn.com

-

Rozenshtein, Alan Z., Moderating the Fediverse: Content Moderation on Distributed Social Media (November 23, 2022). 2 Journal of Free Speech Law (2023, Forthcoming), Available at SSRN: https://ssrn.com/abstract=4213674 or http://dx.doi.org/10.2139/ssrn.4213674

Found via Nathan Schneider

Abstract

Current approaches to content moderation generally assume the continued dominance of “walled gardens”: social media platforms that control who can use their services and how. But an emerging form of decentralized social media—the "Fediverse"—offers an alternative model, one more akin to how email works and that avoids many of the pitfalls of centralized moderation. This essay, which builds on an emerging literature around decentralized social media, seeks to give an overview of the Fediverse, its benefits and drawbacks, and how government action can influence and encourage its development.

Part I describes the Fediverse and how it works, beginning with a general description of open versus closed protocols and then proceeding to a description of the current Fediverse ecosystem, focusing on its major protocols and applications. Part II looks at the specific issue of content moderation on the Fediverse, using Mastodon, a Twitter-like microblogging service, as a case study to draw out the advantages and disadvantages of the federated content-moderation approach as compared to the current dominant closed-platform model. Part III considers how policymakers can encourage the Fediverse, whether through direct regulation, antitrust enforcement, or liability shields.

-

-

www.washingtonpost.com

-

Internet ‘algospeak’ is changing our language in real time, from ‘nip nops’ to ‘le dollar bean’ by [[Taylor Lorenz]]

shifts in language and meaning of words and symbols as the result of algorithmic content moderation

instead of slow semantic shifts, content moderation is actively pushing shifts of words and their meanings

article suggested by this week's Dan Allosso Book club on Pirate Enlightenment

-

“you’ll never be able to sanitize the Internet.”

-

Could it be the shift from person-to-person communication (known in both directions) to massive broadcast that is driving the issues with content moderation? When it's person to person, one can simply choose not to interact and put the person beyond one's individual pale. This sort of shunning is much harder to do with larger mass publics at scale in broadcast mode.

How can bringing content moderation back down to the neighborhood scale help in the broadcast model?

-

“Zuck Got Me For,” a site created by a meme account administrator who goes by Ana, is a place where creators can upload nonsensical content that was banned by Instagram’s moderation algorithms.

-

“The reality is that tech companies have been using automated tools to moderate content for a really long time and while it’s touted as this sophisticated machine learning, it’s often just a list of words they think are problematic,” said Ángel Díaz, a lecturer at the UCLA School of Law who studies technology and racial discrimination.

-

Is algorithmic content moderation creating a new sort of cancel culture online?

-

But algorithmic content moderation systems are more pervasive on the modern Internet, and often end up silencing marginalized communities and important discussions.

What about non-marginalized toxic communities like Neo-Nazis?

Tags

- internet

- leetspeak

- structural racism

- beyond the pale

- discrimination

- social media machine guns

- technology

- euphemisms

- cultural taboos

- banning

- algorithmic feeds

- colloquialisms

- human computer interaction

- quotes

- Ángel Díaz

- algorithms

- content moderation

- community organizing

- social media

- marginalized groups

- shadow banning

- neo-Nazis

- Zuck Got Me For

- cancel culture

- dialect creation

- cultural anthropology

- Evan Greer

- shunning

- block lists

- broadcasting models

- historical linguistics

- Voldemorting

- demonitization

- dialects


-

-

wordcraft-writers-workshop.appspot.com

-

LaMDA's safety features could also be limiting: Michelle Taransky found that "the software seemed very reluctant to generate people doing mean things". Models that generate toxic content are highly undesirable, but a literary world where no character is ever mean is unlikely to be interesting.

-

-

www.politifact.com

-

PolitiFact - People are using coded language to avoid social media moderation. Is it working? by Kayla Steinberg, November 4, 2021

-

- Dec 2022

-

www.techdirt.com

-

techpolicy.press

-

Although there is no server governance census, in my own research I found very few examples of more participatory approaches to server governance (social.coop keeps a list of collectively owned instances)

Tarkowski has seen only a few more participatory server governance set-ups; most are 'benevolent dictator' style. Same here. I have seen several growing instances that have changed from a 'dictator' to a group of moderators making the decisions. You could democratise those roles by having users propose and vote on moderators. It's what we do offline in communal structures.

-

The Fediverse is, by design, fractured by server-level decisions that block and cancel access to other parts of the network.

Indeed, and this is what many don't seem to take into account, e.g. in calls for various centralisation efforts. The way 'out' of being under 'benevolent dictators' is to fragment further, down to the level where you are your own dictator and moderation decisions at the individual and server level are identical, or where you have a cohesive circle of trust in which those decisions take place (i.e. small servers with small groups).

-

-

www.theatlantic.com

-

The subjectivity of moderation decisions across the social web poses tremendous and complicated problems—which is precisely why journalists and academics have paid close attention to it for more than a decade

-

The trolling is paramount. When former Facebook CSO and Stanford Internet Observatory leader Alex Stamos asked whether Musk would consider implementing his detailed plan for “a trustworthy, neutral platform for political conversations around the world,” Musk responded, “You operate a propaganda platform.” Musk doesn’t appear to want to substantively engage on policy issues: He wants to be aggrieved.

-

-

techcrunch.com

-

Twitter has, like its fellow social media platforms, been working for years to make the process of moderation efficient and systematic enough to function at scale. Not just so the platform isn’t overrun with bots and spam, but in order to comply with legal frameworks like FTC orders and the GDPR.

-

-

www.garbageday.email

-

The most typical way users encounter trending content is when a massively viral tweet — or subtweets about that tweet or the discourse it created — enters their feed. Then it’s up to them to figure out what kind of account posted the tweet, what kind of accounts are sharing the tweet, and what accounts are saying about the tweet.

-

The most successful, I’d argue, is Reddit

-

my best guess is it’s the moderation

-

-

news.ycombinator.com

-

The hypothesis is that hate speech is met with other speech in a free marketplace of ideas. That hypothesis only functions if users are trapped in one conversational space. What happens instead is that users choose not to volunteer their time and labor to speak around or over those calling for their non-existence (or for the non-existence of their friends and loved ones) and go elsewhere... Taking their money and attention with them. As those promulgating the hate speech tend to be a much smaller group than those who leave, it is in the selfish interest of most forums to police that kind of signal jamming to maximize their possible user-base. Otherwise, you end up with a forum full mostly of those dabbling in hate speech, which is (a) not particularly advertiser friendly, (b) hostile to further growth, and (c) not something most people who get into this gig find themselves proud of.

Battling hate speech is different when users aren't trapped

When targeted users are not trapped on a platform, they have the choice to leave rather than explain themselves and/or overwhelm the hate speech. When those users leave, the platform becomes less desirable for others (the concentration of hate speech increases) and it becomes a vicious cycle downward.


-

-

blog.erinshepherd.net

-

The trust one must place in the creator of a blocklist is enormous, because the most dangerous failure mode isn’t that it doesn’t block who it says it does, but that it blocks who it says it doesn’t and they just disappear.
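One way a subscriber could guard against the silent-addition failure mode described here is to diff every blocklist update and hold back additions until a human has looked at them. This is a minimal sketch under stated assumptions: `diff_blocklists` and `apply_update` are hypothetical helpers, and treating removals as safe to apply immediately is a design choice, not an established practice.

```python
def diff_blocklists(previous: set[str], current: set[str]) -> dict[str, list[str]]:
    """List the domains a blocklist update adds and removes."""
    return {
        "added": sorted(current - previous),
        "removed": sorted(previous - current),
    }


def apply_update(previous: set[str], current: set[str],
                 approved: set[str]) -> tuple[set[str], list[str]]:
    """Apply removals immediately, but hold back additions nobody has reviewed.

    Returns the blocklist to enforce and the additions still awaiting review.
    """
    changes = diff_blocklists(previous, current)
    pending = [d for d in changes["added"] if d not in approved]
    enforced = (previous & current) | (set(changes["added"]) & approved)
    return enforced, pending
```

An addition such as `friendly.example` then shows up as pending instead of quietly disappearing from view.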

-

- Nov 2022

-

stackoverflow.com

-

This question was removed from Stack Overflow for reasons of moderation.

-

-

gitlab.com

-

for the safety of the LGBTQ community here we refused to engage in mass server blocking and instead encouraged our users to block servers on an individual basis and provided access to block lists for them to do so

This instance encourages their account holders to actively block for themselves. Pushing agency into their hands, also by providing existing blocklists to make that easier. After all it isn't pleasant to have to experience abuse first before you know whom to block.

-

In fact we added a feature just for them called subscriptions which allowed them to monitor accounts without following them so they could do so anonymously.

Providing lurking opportunities for security reasons. Very sensible. Example of actively providing tools that create agency for groups to protect themselves.

-

specifically from the LGBTQ community, onto our server. It turns out many people relied on us not-blocking for their physical safety. There were big name bigots (like Milo Yiannopoulos) who were on the network. They used their accounts here to watch his account for doxing so they could warn themselves and their community and protect themselves accordingly

Having the ability to see what known bigots get up to on social media is a security feature.

-

allowed people read content from any server (but with strict hate speech rules)

Blocking means your account holders don't see that part of the fediverse, you're taking away their overview. A decision you're making about them, without them. A block decision isn't only about the blocked server, it impacts your account holders too, and that needs to be part of the considerations.

-

So there are some servers out there that demand every server in the network block every instance they do, and if a server doesn't block an instance they block, then they block you in retaliation. Their reason for this is quite flawed, but it goes like this: if we federate with a bad actor instance and we boost one of their posts, then their users will see it and defeat the purpose of the block. The problem is, this isn't how it actually works. If they block a server and we boost it, they won't see the boost; that's how blocks work.

There are M instances that block servers that don't block the same servers they do. That seems to defeat the entire concept of federating (and the rationale isn't correct).
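Why a boost cannot leak blocked content can be illustrated with a simplified model of the receiving side: the check looks at the original author of a boosted post, not only at who delivered it. This is a sketch under stated assumptions; `Activity`, `domain_of` and `accept` are hypothetical names and do not reproduce Mastodon's actual filtering code.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    sender: str            # account that delivered it, e.g. the booster
    original_author: str   # author of the underlying post
    text: str


def domain_of(handle: str) -> str:
    # "user@host.example" -> "host.example"
    return handle.rsplit("@", 1)[-1]


def accept(activity: Activity, blocked_domains: set[str]) -> bool:
    """Reject the activity if either the sender or the original author is blocked."""
    return (domain_of(activity.sender) not in blocked_domains
            and domain_of(activity.original_author) not in blocked_domains)
```

Under this model, a server that blocks `bad.example` drops a boost of a `bad.example` post even when the booster's server federates with it, so retaliatory blocking buys nothing.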

-

-

www.techdirt.com

-

www.theverge.com

-

The problem when the asset is people is that people are intensely complicated, and trying to regulate how people behave is historically a miserable experience, especially when that authority is vested in a single powerful individual.

-

The essential truth of every social network is that the product is content moderation, and everyone hates the people who decide how content moderation works.

-

- Oct 2022

-

www.thebureauinvestigates.com

-

Some social media platforms struggle with even relatively simple tasks, such as detecting copies of terrorist videos that have already been removed. But their task becomes even harder when they are asked to quickly remove content that nobody has ever seen before. “The human brain is the most effective tool to identify toxic material,” said Roi Carthy, the chief marketing officer of L1ght, a content moderation AI company. Humans become especially useful when harmful content is delivered in new formats and contexts that AI may not identify. “There’s nobody that knows how to solve content moderation holistically, period,” Carthy said. “There’s no such thing.”

Marketing officer for an AI content moderation company says it is an unsolved problem

-

-

www.theverge.com

-

Running Twitter is more complicated than you think.

-

The essential truth of every social network is that the product is content moderation, and everyone hates the people who decide how content moderation works.

-

-

www.theverge.com

-

If the link is in a Proud Boys forum, would you not take any action against it, even if it’s like, “Click this link to help plan”? Are you asking if we have people out there clicking every link and checking if the forum comports with the ideological position that Signal agrees with? Yeah. I think in the most abstract way, I’m asking if you have a content moderation team. No, we don’t have that. We are also not a social media platform. We don’t amplify content. We don’t have Telegram channels where you can broadcast to thousands and thousands of people. We have been really careful in our product development side not to develop Signal as a social network that has algorithmic amplification that allows that “one to millions” amplification of content. We are a messaging platform. We don’t have a content moderation team because (1) we are fully private, we don’t see your content, we don’t know who you’re talking about; and (2) we are not a content platform, so it is a different paradigm.

Signal president Meredith Whittaker on Signal's product vision and the difference between Signal and Telegram.

They deliberately steered the product away from "one to millions" amplification of content, like for example Telegram's channels.

-

- Jul 2022

-

herman.bearblog.dev

-

https://herman.bearblog.dev/a-better-ranking-algorithm/

-

- Jun 2022

-

web.hypothes.is

-

We will continue to listen and work to make Hypothesis a safe and welcoming place for expression and conversation on the web

What has been done to improve this situation since this post six years ago?

-

-

blogs.timesofisrael.com

- May 2022

-

www.niemanlab.org

-

I'm curious if any publications have experimented with the W3C Webmention spec for notifications as a means of handling comments? Coming out of the IndieWeb movement, Webmention allows people to post replies to online stories on their own websites (potentially where they're less likely to spew bile and hatred in public) and send notifications to the article that they've mentioned. The receiving web page (an article, for example) can then choose to show all or even a portion of the response in the page's comments section. Other types of interaction beyond comments can also be supported here, including receiving "likes", "bookmarks", "reads" (indicating that someone actually read the article), etc. There are also tools like Brid.gy which bootstrap Webmention onto social media sites like Twitter to make them send notifications to an article which might have been mentioned in social spaces. I've seen many personal sites supporting this and one or two small publications supporting it, but I'm as yet unaware of larger newspapers or magazines doing so.
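The sending side of Webmention is small: per the W3C Recommendation, the sender discovers the target page's endpoint (an HTTP `Link` header, or a `<link>`/`<a>` element with `rel="webmention"`) and POSTs a form-encoded `source` and `target` to it. The sketch below is illustrative, not a complete client: it checks only the HTML (the spec gives the `Link` header precedence), builds the request without sending it, and the `*.example` URLs are placeholders.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urlencode, urljoin
from urllib.request import Request


class _EndpointFinder(HTMLParser):
    """Records the href of the first <link> or <a> whose rel includes "webmention"."""

    def __init__(self) -> None:
        super().__init__()
        self.endpoint: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if self.endpoint is not None or tag not in ("link", "a"):
            return
        values = dict(attrs)
        rels = (values.get("rel") or "").lower().split()
        if "webmention" in rels and values.get("href") is not None:
            self.endpoint = values["href"]


def discover_endpoint(target_url: str, html: str) -> Optional[str]:
    """Find the Webmention endpoint declared in the target page's HTML, if any."""
    finder = _EndpointFinder()
    finder.feed(html)
    if finder.endpoint is None:
        return None
    # Relative endpoints resolve against the target page's URL.
    return urljoin(target_url, finder.endpoint)


def build_webmention(endpoint: str, source: str, target: str) -> Request:
    """Prepare (but do not send) the form-encoded POST notifying `target` of `source`."""
    body = urlencode({"source": source, "target": target}).encode("ascii")
    return Request(endpoint, data=body, method="POST",
                   headers={"Content-Type": "application/x-www-form-urlencoded"})
```

Passing the returned request to `urllib.request.urlopen` would deliver it; moderating what actually gets displayed stays with the receiving publication.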

-

The Seattle Times turns off comments on “stories that are of a sensitive nature,” said Michelle Matassa Flores, executive editor of The Seattle Times. “People can’t behave on any story that has to do with race.” Comments are turned off on stories about race, immigration, and crime, for instance.

The Seattle Times turns off comments on stories about race, immigration, and crime because as their executive editor Michelle Matassa Flores says, "People can't behave on any story that has to do with race."

-

- Apr 2022

-

- Dec 2021

-

twitter.com

-

Richard Hodkinson 💙. (2021, December 1). @Twitter why are you promoting civil war #Bürgerkrieg in Germany? @TwitterSupport Can you try to be at least slightly responsible about ot promoting these antivaxers? Https://t.co/iXTdktPLRn [Tweet]. @richardhod. https://twitter.com/richardhod/status/1466111888027271171

-

-

www.vice.com

-

How the Far-Right Is Radicalizing Anti-Vaxxers. (n.d.). Retrieved December 2, 2021, from https://www.vice.com/en/article/88ggqa/how-the-far-right-is-radicalizing-anti-vaxxers

Tags

- neo-Nazi

- online community

- vaccine

- nationalist

- protest

- COVID-19

- anti-lockdown

- USA

- vaccine hesitancy

- far-right

- disinformation

- misinformation

- extremism

- right-wing

- lang:en

- Telegram

- UK

- British National Party

- mandate

- anti-vaxxer

- antisemitism

- is:webpage

- anti-government

- social media

- radicalization

- conspiracy theory

- moderation

- anti-vaccine

- ideology


-

- Nov 2021

-

meta.stackoverflow.com

- Sep 2021

-

stackoverflow.com

-

Mod note: This question is about why XMLHttpRequest/fetch/etc. on the browser are subject to the Same-Origin Policy restrictions (you get errors mentioning CORB or CORS) while Postman is not. This question is not about how to fix a "No 'Access-Control-Allow-Origin'..." error. It's about why they happen.

-

Please stop posting:

- CORS configurations for every language/framework under the sun. Instead, find your relevant language/framework's question.
- 3rd party services that allow a request to circumvent CORS
- Command line options for turning off CORS for various browsers
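The "why" the mod note asks about comes down to who performs the check: the browser, on behalf of the page, decides whether script may read a cross-origin response, while Postman is not a browser and performs no such check. The function below is a deliberately simplified, hypothetical model of that decision; it ignores preflight requests, credentialed requests (where `*` is not accepted) and every header other than `Access-Control-Allow-Origin`.

```python
from typing import Optional


def browser_exposes_response(page_origin: str, target_origin: str,
                             allow_origin: Optional[str]) -> bool:
    """Simplified CORS read check a browser applies before page script sees a response.

    allow_origin is the response's Access-Control-Allow-Origin header (None if absent).
    Preflight and credentialed requests are deliberately ignored in this model.
    """
    if page_origin == target_origin:
        return True  # same-origin request: no CORS check is involved
    if allow_origin is None:
        return False  # the server answered, but the browser withholds the response
    return allow_origin in ("*", page_origin)
```

In this model the server's reply is the same in both tools; only the browser refuses to hand it to the page, which is why the fix belongs in the server's headers rather than in the client.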

-

- Jul 2021

-

-

Yasseri, T., & Menczer, F. (2021). Can the Wikipedia moderation model rescue the social marketplace of ideas? ArXiv:2104.13754 [Physics]. http://arxiv.org/abs/2104.13754

-

-

today.law.harvard.edu

-

Schmitt, C. E. (2020, November 7). ‘Be the Twitter that you want to see in the world’. Harvard Law Today. Retrieved 1 March 2021, from https://today.law.harvard.edu/be-the-twitter-that-you-want-to-see-in-the-world/

-

- Apr 2021

-

datatogether.org

-

ᔥ Internet Archive in Why Trust A Corporation to Do a Library’s Job? - YouTube (04/28/2021 11:46:41)

-

-

arxiv.org

-

Yang, K.-C., Pierri, F., Hui, P.-M., Axelrod, D., Torres-Lugo, C., Bryden, J., & Menczer, F. (2020). The COVID-19 Infodemic: Twitter versus Facebook. ArXiv:2012.09353 [Cs]. http://arxiv.org/abs/2012.09353

-

- Mar 2021

-

docdrop.org

-

Take control of it for yourself.

quite in contrast to the 2021 Congressional Investigation into Online Misinformation and Disinformation which places the responsibility on major platforms (FB, Twitter, YouTube) to moderate and control content.

-

-

-

-

Q: So, this means you don’t value hearing from readers? A: Not at all. We engage with readers every day, and we are constantly looking for ways to hear and share the diversity of voices across New Jersey. We have built strong communities on social platforms, and readers inform our journalism daily through letters to the editor. We encourage readers to reach out to us, and our contact information is available on this How To Reach Us page.

We have built strong communities on social platforms

They have? Really?! I think it's more likely the social platforms have built strong communities which happen to be talking about and sharing the paper's content. The paper doesn't have any content moderation or control capabilities on any of these platforms.

Now it may be the case that there is a broader diversity of voices on those platforms than in their own comments sections. This means that a small proportion of potential trolls won't drown out the signal with noise, as may happen in their comments sections online.

If the paper is really listening on the other platforms, how are they doing it? Isn't reading some or all of it a large portion of content moderation? How do they get notifications of people mentioning them (is it only direct @mentions)?

Couldn't/wouldn't an IndieWeb version of this help them or work better?

-

ᔥ Inquirer.com in Why we’re removing comments on most of Inquirer.com (03/18/2021 19:32:19)

-

-

www.inquirer.com

-

-

Many news organizations have made the decision to eliminate or restrict comments in recent years, from National Public Radio, to The Atlantic, to NJ.com, which did a nice job of explaining the decision when comments were removed from its site.

A list of journalistic outlets that have removed comments from their websites.

-

Experience has shown that anything short of 24-hour vigilance on all stories is insufficient.

-

-

www.technologyreview.com

-

Meanwhile, the algorithms that recommend this content still work to maximize engagement. This means every toxic post that escapes the content-moderation filters will continue to be pushed higher up the news feed and promoted to reach a larger audience.

This and the prior note are also underpinned by the fact that only 10% of people are going to be responsible for the majority of posts, so if you can filter out the velocity that accrues to these people, you can effectively dampen down the crazy.

-

In his New York Times profile, Schroepfer named these limitations of the company’s content-moderation strategy. “Every time Mr. Schroepfer and his more than 150 engineering specialists create A.I. solutions that flag and squelch noxious material, new and dubious posts that the A.I. systems have never seen before pop up—and are thus not caught,” wrote the Times. “It’s never going to go to zero,” Schroepfer told the publication.

The one thing many of these types of noxious content WILL have in common are the people at the fringes who are regularly promoting it. Why not latch onto that as a means of filtering?
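The suggestion to key moderation on people rather than on individual pieces of content (together with the earlier note about damping the velocity of the few accounts that produce most posts) could look roughly like this. It is a hypothetical sketch, not any platform's method: the `(author, engagement_score)` input, the `min_flags` threshold and the `damping` factor are all assumed values chosen for illustration.

```python
from collections import Counter


def repeat_offenders(flagged_authors: list[str], min_flags: int = 3) -> set[str]:
    """Accounts whose posts were flagged at least `min_flags` times (hypothetical threshold)."""
    counts = Counter(flagged_authors)
    return {author for author, n in counts.items() if n >= min_flags}


def rank(posts: list[tuple[str, float]], offenders: set[str],
         damping: float = 0.1) -> list[tuple[str, float]]:
    """Re-rank (author, engagement_score) pairs, damping posts from repeat offenders.

    New, never-before-seen content from a known offender is damped too, which is
    the point of filtering on people rather than on content.
    """
    scored = [(author, score * damping if author in offenders else score)
              for author, score in posts]
    return sorted(scored, key=lambda p: p[1], reverse=True)
```

Damping instead of deleting keeps the posts available while removing the engagement-driven amplification the quoted article describes.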

-

-

-

-

Lori Morimoto, a fandom academic who was involved in the earlier discussion, didn’t mince words about the inherent hypocrisy of the controversy around STWW. “The discussions of the fic were absolutely riddled with people saying they wished you could block and/or ban certain users and fics on AO3 altogether because this is obnoxious,” she wrote to me in an email, “and nowhere (that I can see) is there anyone chiming in to say, ‘BUT FREE SPEECH!!!’” Morimoto continued: But when people suggest the same thing based on racist works and users, suddenly everything is about freedom of speech and how banning is bad. When it’s about racism, every apologist under the sun puts in an appearance to fight for our rights to be racist assholes, but if it’s about making the reading experience less enjoyable (which is basically what this is — it’s obnoxious, but not particularly harmful except to other works’ ability to be seen), then suddenly our overwhelming concern with free speech seems to just disappear in a poof of nothingness.

This is an interesting example of people papering over racism in the name of free speech.

-

- Feb 2021

-

threadreaderapp.com

-

maggieappleton.com

-

What we're after is a low-friction way for website owners to let other people link to their ideas and creations, offering a rich contextual reading experience for audiences, without letting bad actors monopolise the system.

It is crucial to have an open standard, and I think the people from IndieWeb have already done a lot of the work. Webmentions are just the communication layer, but microformats may be a great tool to keep in mind.

-

-

www.nytimes.com

-

The solution, he said, was to identify “super-spreaders” of slander, the people and the websites that wage the most vicious false attacks.

This would be helpful for tackling disinformation in general, from a journalistic perspective too.

-

- Jan 2021

-

www.facebook.com

-

Group Rules from the Admins

1. NO POSTING LINKS INSIDE OF POST - FOR ANY REASON
   We've seen way too many groups become a glorified classified ad & members don't like that. We don't want the quality of our group negatively impacted because of endless links everywhere. NO LINKS
2. NO POST FROM FAN PAGES / ARTICLES / VIDEO LINKS
   Our mission is to cultivate the highest quality content inside the group. If we allowed videos, fan page shares, & outside websites, our group would turn into spam fest. Original written content only
3. NO SELF PROMOTION, RECRUITING, OR DM SPAMMING
   Members love our group because it's SAFE. We are very strict on banning members who blatantly self promote their product or services in the group OR secretly private message members to recruit them.
4. NO POSTING OR UPLOADING VIDEOS OF ANY KIND
   To protect the quality of our group & prevent members from being solicited products & services - we don't allow any videos because we can't monitor what's being said word for word. Written post only.

Wow, that's strict.

-

-

github.com

-

This has some interesting research which might be applied to better design for an IndieWeb social space.

I'd prefer a more positive framing rather than this likely more negative one.

-

-

www.cigionline.org

-

Sarah Roberts’s new book Behind the Screen: Content Moderation in the Shadows of Social Media (2019)

-

What will it take to break this circuit, where white supremacists see that violence is rewarded with amplification and infamy? While the answer is not straightforward, there are technical and ethical actions available.

How can this be analogized to newspapers in the early 1900s that didn't give oxygen to the KKK as a means of preventing recruiting?

-

- Oct 2020

-

www.newyorker.com

-

This is the story of how Facebook tried and failed at moderating content. The article cites many sources (employees) who were tasked with flagging posts according to platform policies. Things started to get complicated when high-profile people (such as Trump) started posting hate speech on their profiles.

Moderators have no way of getting honest remarks from Facebook. Moreover, they are badly treated and exploited.

The article cites examples from different countries, not only the US, including extreme right groups in the UK, Bolsonaro in Brazil, the massacre in Myanmar, and more.

In the end, the only thing that changes Facebook behavior is bad press.

-

- Sep 2020

-

discuss.rubyonrails.org

-

You’re doing some things I’d like you to stop doing:

-

-

www.theverge.com

-

What were the “right things” to serve the community, as Zuckerberg put it, when the community had grown to more than 3 billion people?

This is just one of the contradictions of having a global medium/platform of communication being controlled by a single operator.

It is extremely difficult to create global policies to moderate the conversations of 3 billion people across different languages and cultures. No team, no document, is qualified for such a task, because so much is dependent on context.

The approach to moderation taken by federated social media like Mastodon makes a lot more sense. Communities moderate themselves, based on their own codes of conduct. In smaller servers, a strict code of conduct may not even be necessary - moderation decisions can be based on a combination of consensus and common sense (just like in real life social groups and social interactions). And there is no question of censorship, since their moderation actions don't apply to the whole network.

-

- Jul 2020

-

www.reddit.com

-

Overall, the process of moderating individual comments is really really really fucking hard.

There is a dire need for some IBIS (Issue-Based Information System) or similar, where people can make structured arguments and branch into threads, rather than this endlessly growing log of comments that pick up wherever they like and push every which way.

We need higher-fidelity information to begin moderating effectively.

This is a really nicely written thread from one of the most productive, highest-quality coders on the planet, detailing the challenges moderators face and how they are equipped with only (a) moderation of individual comments, (b) locking threads, and (c) bans, all of them acts by fiat.

-

-

bb2.uhd.edu

-

A moderator provides motivation and inertia to an asynchronous computer conference, encouraging interaction between participants while creating a supportive and comfortable environment for discussion.

So: restarting the conversation where it may have stalled. This might require asking questions or adding to the topic. In other places, the same act of questioning might cause people to slow down. You can say, let's reflect on this statement, or can we have a source, and could we interpret this in another way?

-

- Jun 2020

-

web.hypothes.is

-

The creator of a Hypothesis group

According to this issue on GitHub, in an LMS environment the creator of the group would be the first instructor-user in a course who creates and launches a Hypothesis-enabled reading. Could someone confirm this? Is it documented anywhere else?

-

- May 2020

-

www.webpurify.com

-

www.webpurify.com

- Apr 2020

-

www.openannotation.org

-

Meta-moderators are chosen by their reputation in the associated area. By domain proximity.

Meta-moderation: a second level of comment moderation, in which a user is invited to rate a moderator's decision.

-

-

www.tandfonline.com

-

students responded to messages more actively and engaged in more in-depth discussions when discussions were moderated by a peer.

This could be a good argument for pushing Hypothes.is to introduce some sort of moderation, combined with the finding that annotation threads tend to be rare and shallow (Wolfe & Neuwirth, 2001).

-

-

-

There are paywalls and cookie walls. Should we call this a "comprehension wall"?

-

-

github.com

-

-

moderating entities.

But do these entities have to be central and monolithic? Can't they be distributed and collaborative?

I like to think of the Reddit model as a proposal for moderating web annotation. Reddit is quite flexible about what is allowed and what is not (this has, of course, sparked heated debates in the past). But Reddit has multiple subreddits (as web annotation may have multiple groups or sublayers), each with its own set of rules, administered and moderated by one or more people.

Do you like the moderation rules of one subreddit? You can join and even help with moderation. You don't like them? Then don't join and find another one you feel more comfortable with.

-

- Dec 2019

-

-

fairness and transparency in YouTube’s moderation practices

I wonder what kind of algorithmic rules are behind this...

-

- Aug 2019

-

www.smashingmagazine.com

-

Comments are moderated and will only be made live if they add to the discussion in a constructive way. If you disagree with a point, be polite. This should be a conversation between professional people with the aim that we all learn.

-

- Nov 2018

-

www.zylstra.org

-

They can spew hate amongst themselves for eternity, but without amplification it won’t thrive.

This is a key point. Social media and the way it amplifies almost anything for the benefit of clicks towards advertising is one of its most toxic features. Too often the extreme voice draws the most attention instead of being moderated down by more civil and moderate society.

-

- Oct 2018

-

motherboard.vice.com

-

"I am really pleased to see different sites deciding not to privilege aggressors' speech over their targets'," Phillips said. "That tends to be the default position in so many online 'free speech' debates which suggest that if you restrict aggressors' speech, you're doing a disservice to America—a position that doesn't take into account the fact that antagonistic speech infringes on the speech of those who are silenced by that kind of abuse."

-

- Jul 2017

-

nearthespeedoflight.com

-

Comments sections often become shouting matches or spam-riddled.

They can also fill up with "me too" commentary that adds nothing substantive to the conversation.

See also the "Why Did you Delete my comment" page at http://www.math.columbia.edu/~woit/wordpress/?page_id=4338

-

- Apr 2016

-

www.theverge.com

-

Several content moderation experts point to Pinterest as an industry leader. Microsoft’s Tarleton Gillespie, author of the forthcoming Free Speech in the Age of Platform, says the company is likely doing the most of any social media company to bridge the divide between platform and user, private company and the public. The platform’s moderation staff is well-funded and supported, and Pinterest is reportedly breaking ground in making its processes transparent to users. For example, Pinterest posts visual examples to illustrate the site’s "acceptable use policy" in an effort to help users better understand the platform’s content guidelines and the decisions moderators make to uphold them.

-

- Sep 2015

-

groups.google.com


-

- Aug 2015

-

people.stanford.edu

-

Hegemonic online voting systems are not useful mechanisms for the creation of equitable online communities, which is a prerequisite for more nuanced and sophisticated collaborative textual interpretation.

So true. Early design drafts of Hypothesis assumed the typical up/down voting, but I've been pretty opposed to adding it.

-

- Jul 2015

-

twentyfour.fibreculturejournal.org

-

http://ssrn.com/abstract=2588493

Grimmelmann, James. "The Virtues of Moderation." 17 Yale J.L. & Tech. 42 (2015). U of Maryland Legal Studies Research Paper No. 2015-8. SSRN, April 1, 2015. http://ssrn.com/abstract=2588493. Keywords: moderation, online communities, semicommons, peer production, Wikipedia, MetaFilter, Reddit.

-

- Jun 2015

-

Local file

-

semicommons: a resource that is owned and managed as private property at one level but as a commons at another, and in which “both common and private uses are important and impact significantly on each other.”[42]

Yes, this acknowledgement of the largely private space of the online world is far too often overlooked in utopian views of the Internet as a "commons."

-

shared infrastructure with limited capacity

Does it?

-

well-moderated community will have low costs

If the moderators are unpaid community members?

-

moderation can increase access to online communities.

But doesn't deleting someone's stuff make it less open (at least to them)?

-

participation in moderation and in setting moderation

So transparency is critical.

-

the unlucky YouTube employees who manually review flagged videos.[24]

This isn't automated?

-

or when a community is torn between participants with incompatible goals (e.g., amateur and professional photographers).

Are expert and amateur always incompatible in this way? I'm thinking here of how to let anyone hold a conversation on a page using annotation while also surfacing expert voices for discovery...

-

moderation by flagging unwanted posts for deletion because they enjoy being part of a thriving community

Motivating users to take ownership seems key. A simple flag feature could make an active user all the more involved.

-

Thus, even though it is not particularly helpful to talk about Google as a community in its own right,[21] it and other search engines play an important role in the overall moderation of the Web.[22]

Indeed, Google search organizes communities from their inception: which entry points are immediately discoverable and which are not.

-

ex ante versus ex post

Before versus after the event. In terms of online community moderation, this likely distinguishes systems that prevent bad behavior from systems that punish it after the fact.

-

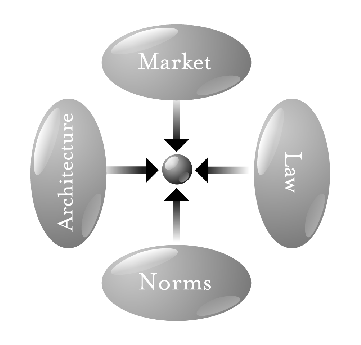

norms versus architecture

From "pathetic dot" theory, popularized by Larry Lessig in Code and Other Laws of Cyberspace.

"Architecture" refers to the technical infrastructures that regulate individual behavior. In this case, I suppose that would be the design of online communities?

-

When they do their job right, they create the conditions under which cooperation is possible.

This is an obvious point, but one that I think is not necessarily emphasized in discussion of the problem of moderation: it's not just about deleting bad content, it's about enabling good content creation.

-

-

www.theatlantic.com

-

called on designers and social scientists to ethically embrace their role as the web's “civil servants,”

I still need to read the article itself, but civil servants are civil servants because they are employed by the government, not because they think of themselves that way. I love the idea, but I worry that without something more official in place, this ethos cannot be institutionalized or even broadly applied.

-

dependent on those who use them and on the subjective judgments of the people who provide mutual aid.

As in "real life," what do we do about the George Zimmermans of the world: rogue "moderators" claiming a kind of "mutual aid" through their neighborhood watch, yet deeply problematic in their views and actions?

-

-

www.wired.com

-

They won’t continue to log on if they find their family photos sandwiched between a gruesome Russian highway accident and a hardcore porn video.

Conjecture!


-