Consumer Data Industry Association

This may be a front group. Investigate, find additional sources, and leave research notes in the comments.

a tax plan

Simplify the tax code.

Evolve public accounting/finance into a more real-time, open, and interactive public service. Transaction-level financial data should be available internally and externally.

Participatory budgeting and other forms of public input should be well-factored into the public-planning process. 21st century government participation can be simplified and enriched at the same time.

loopholes proliferated, and the tax code grew more complex

correlated? causative?

Complexity in law leads to more logic to parse and process, and therefore to more potential ambiguity in human processing.

Do software engineering practices around code complexity (or the lack thereof) have fruitful applications here?

14.08

Look at this!

The Echo Look suffers from two dovetailing issues: the overwhelming potential for invasive data collection, and Amazon’s lack of a clear policy on how it might prevent that.

Important to remember. Amazon shares very little about what it collects and what it does with what it collects.

The Impact of False and Misleading Economic Data

Countries falsifying economic data: How statistics reveal fraudulent figures

By producing free (or very accessible) services that perform well and add high value to the data they produce, these companies capture a gigantic share of users' digital activities. They thereby become the main service providers that governments must deal with if they want to enforce the law, particularly in the context of population surveillance and security operations.

That is why the GAFAM are as powerful as (or even more powerful than) states.

How Uber Uses Psychological Tricks to Push Its Drivers’ Buttons

Persuasion

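I have not seen the full text of the original draft of Legislator Hsu's (許委員) "Digital Economy Basic Act" (數位經濟基本法). Several issues came up during the discussion, for example the basic definition of the digital economy. The data industry was not addressed, so Mr. Chan's (詹先生) picture of the state preserving data may underestimate the technical and administrative procedures involved, and that ends up inappropriately reflected in the data-retention provisions and elsewhere. I do not know whether this has since been revised.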

Furthermore, the results could focus on drawing the user into the virtual app space (immersive) or could use the portable nature of tablet to extend the experience into the physical space inhabited by the user (something I have called ’emersive’).

Generative (emersive): Books that project coloured ambient light and/or audio into a darkened space.

Generative (immersive): Books that display abstracted video/audio from cameras/microphone, collaged or augmented with pre-designed content. Books that contain location specific content from the internet combined with pre-authored/designed content.

These lines and the following ones define an interesting set of possibilities for digital publications. How can we bootstrap them from what we already have? (e.g., Grafoscopio and the Data Week).

Some key themes arise from the two NNG reports on iPad usability: App designers should ensure perceived affordances / discoverability. There is a lack of consistency between apps, with lots of ‘wacky’ interaction methods. Designers should draw upon existing conventions (either OS or web) or users won’t know what to do. These are practical interaction design observations, but from a particular perspective, that of perceptual psychology. These conclusions are arrived at through a linear, rather than lateral process. By giving weight to building upon existing conventions, because they are familiar to the user, there is a danger that genuinely new ideas (and the kind of ambition called for by Bret Victor) within tablet design will be suppressed. Kay’s vision of the Dynabook came from lateral thinking, and thinking about how children learn. Shouldn’t the items that we design for this device be generated in the same way?

The idea of lateral thinking is the key one here. Can informatics be designed by nurturing lateral thinking? That seems related to the Jonas flopology.

A first list of projects is available here, but more can be found by interacting with mentors from the Pharo community. Join the dedicated channel #gsoc-students for general interactions with students on the Pharo Slack. To get an invitation for pharoproject.slack.com, visit here. Discuss with mentors the complexity and skills required for the different projects. Please help fix bugs, open relevant issues, suggest changes and additional features, help build a roadmap, and interact with mentors on the mailing list and/or Slack to get a better insight into projects. The better the contributions, the better the chances of selection. Before applying, you should have: knowledge of OOP; a basic idea of Pharo & Smalltalk syntax and ongoing projects; past experience with Pharo & Smalltalk; and interaction with the organisation. You can start with the Pharo MOOC: http://files.pharo.org/mooc/

Corporate thought leaders have now realized that it is a much greater challenge to actually apply that data. The big takeaways in this topic are that data has to be seen to be acknowledged, tangible to be appreciated, and relevantly presented to have an impact. Connecting data on the macro level across an organization and then bringing it down to the individual stakeholder on the micro level seems to be the key in getting past the fact that right now big data is one thing to have and quite another to unlock.

Simply possessing pools of data is of limited utility. It's like having a space ship but your only access point to it is through a pin hole in the garage wall that lets you see one small, random glint of ship; you (think you) know there's something awesome inside but that sense is really all you've got. Margaret points out that it has to be seen (data visualization), it has to be tangible (relevant to audience) and connected at micro and macro levels (storytelling). For all of the machine learning and AI that helps us access the spaceship, these key points are (for now) human-driven.

wanted there to be a continuum, a narrative, that tracks the history of people disseminating, collecting, sharing data.

Back to the question: Can data help tell the story? or does it obscure the humanity of the narrative?

…ail system and the telegraph and made Council Bluffs an enduring anchor of the sharing of information.

The history of data points is on the ground, first, and then in the air, and then in the wires, and now, in the wireless.

The Justice Department has announced charges against four people, including two Russian security officials, over cybercrimes linked to a massive hack of millions of Yahoo user accounts. [500M accounts, in 2014]

Two of the defendants — Dmitry Dokuchaev and his superior Igor Sushchin — are officers of the Russian Federal Security Service, or FSB. According to court documents, they "protected, directed, facilitated and paid" two criminal hackers, Alexsey Belan and Karim Baratov, to access information that has intelligence value. Belan also allegedly used the information obtained for his personal financial gain.

Prophet: a forecasting tool for time-series data, released as open source by Facebook and written in R and Python.

Open-source statistics tool in Python; it can also be used from R.

Either we own political technologies, or they will own us. The great potential of big data, big analysis and online forums will be used by us or against us. We must move fast to beat the billionaires.

You can delete the data. You can limit its collection. You can restrict who sees it. You can inform students. You can encourage students to resist. Students have always resisted school surveillance.

The first three of these can be tough for the individual faculty member to accomplish, but informing students and raising awareness around these issues can be done and is essential.

Great course

with the publication of the “New Oxford Shakespeare”, they have shaped the debate about authorship in Elizabethan England.

Interesting how the technology improves.

In addition, Neylon suggested that some low-level TDM goes on below the radar. ‘Text and data miners at universities often have to hide their location to avoid auto cut-offs of traditional publishers. This makes them harder to track. It’s difficult to draw the line between what’s text mining and what’s for researchers’ own use, for example, putting large volumes of papers into Mendeley or Zotero,’ he explained.

Without a clear understanding of what reference managers can do and what text and data mining is, it seems that some publishers will block the download of full texts on their platforms.

Best States Rankings

rankings of US states based on 7 criteria

A company that sells internet-connected teddy bears that allow kids and their far-away parents to exchange heartfelt messages left more than 800,000 customer credentials, as well as two million message recordings, totally exposed online for anyone to see and listen.

Compliance, Privacy, and Security

on data compliance, privacy and security in EDU

Between 2013 and 2015 we accepted fewer than 25% of the total number of applications we received

I'd love to see some stats on what the most common reasons for rejection are. Show me the data!

Not in the right major. Not in the right class. Not in the right school. Not in the right country.

There's a bit of a slippery slope here, no? Maybe it's Audrey on that slope, maybe it's data-happy schools/companies. In either case, I wonder if it might be productive to lay claim to some space on that slope, short of the dangers below, aware of them, and working to responsibly leverage machine intelligence alongside human understanding.

All along the way, or perhaps somewhere along the way, we have confused surveillance for care. And that’s my takeaway for folks here today: when you work for a company or an institution that collects or trades data, you’re making it easy to surveil people and the stakes are high. They’re always high for the most vulnerable. By collecting so much data, you’re making it easy to discipline people. You’re making it easy to control people. You’re putting people at risk. You’re putting students at risk.

Ed-Tech in a Time of Trump

in order to facilitate advisors holding more productive conversations about potential academic directions with their advisees.

Conversations!

Each morning, all alerts triggered over the previous day are automatically sent to the advisor assigned to the impacted students, with a goal of advisor outreach to the student within 24 hours.

Key that there's still a human and human relationships in the equation here.

A single screen for each student offers all of the information that advisors reported was most essential to their work,

Did students have access to the same data?

and Georgia State's IT and legal offices readily accepted the security protocols put in place by EAB to protect the student data.

So it's not as if this was done willy-nilly.

In his spare time, the documentary photographer had been scraping information on Airbnb listings across the city and displaying them in interactive maps on his website, InsideAirbnb.com.

Quite an undertaking!

After a brief training session, participants spent six hours archiving environmental data from government websites, including those of the National Oceanic and Atmospheric Administration and the Interior Department.

A worthwhile effort.

An anonymous donor has provided storage on Amazon servers, and the information can be searched from a website at the University of Pennsylvania called Data Refuge. Though the Federal Records Act theoretically protects government data from deletion, scientists who rely on it say they would rather be safe than sorry.

Data refuge.

In the node-list format, the first node in each row is ego, and the remaining nodes in that row are the nodes to which ego is connected (alters).

Please don't do this!
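
A minimal sketch of reading the node-list format described above and turning it into an explicit edge list, with one (ego, alter) pair per connection. It assumes whitespace-separated node labels; the function name and the sample rows are made up for illustration and do not come from the quoted source.

```python
def node_list_to_edges(rows):
    """Convert node-list rows (ego followed by its alters) into (ego, alter) pairs."""
    edges = []
    for row in rows:
        nodes = row.split()
        if not nodes:
            continue  # skip blank rows
        ego, alters = nodes[0], nodes[1:]
        edges.extend((ego, alter) for alter in alters)
    return edges


# Hypothetical three-row node list: each row is "ego alter alter ..."
example_rows = ["A B C", "B A", "C A"]
edges = node_list_to_edges(example_rows)
```

In an edge list every row has the same two columns, whereas node-list rows vary in length with the number of alters.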

prospective interviewee,

Just a side spiel: In terms of an interviewee and data, everything really is data. I'll be interviewing freshmen next semester with other SLU students and some things I have already told the group to take note of in notebooks (ha ha) are the different responses the interviewee gives. In a way, the sad little freshmen turn into our experiment. Everyone in the group records a different response. These responses include the obvious oral responses, body language, and tone of voice.

Thousands of poorly secured MongoDB databases have been deleted by attackers recently. The attackers offer to restore the data in exchange for a ransom -- but they may not actually have a copy.

evidence about obtaining higher productivity by using Agile methods

If higher productivity came from including stakeholders in the frequent development releases, running a complementary scrum team on UX analysis should lead to improvement in quality.

‘In the past, if you were an alcohol distiller, you could throw up your hands and say, look, I don’t know who’s an alcoholic,’ he said. ‘Today, Facebook knows how much you’re checking Facebook. Twitter knows how much you’re checking Twitter. Gaming companies know how much you’re using their free-to-play games. If these companies wanted to do something, they could.’

sites such as Facebook and Twitter automatically and continuously refresh the page; it’s impossible to get to the bottom of the feed.

Well, it is not. A web scraping technique used for the Data Selfies project reaches the end of the scrolling page for Twitter (after scrolling through almost 3k tweets), which is useful for certain valid uses of scraping (like oversight of political discourse on Twitter).

So reaching the end of an infinite scroll can be useful, but it is not allowed by default on these social networks. Could we change the way information is visualized to get an overview of it, instead of being focused on small details all the time on an infinite-scroll treadmill?

Smalltalk doesn’t have to be pragmatic, because it’s better than its imitators and the things that make it different are also the things that give it an advantage.

Preserving

Really love the proposal overall and look forward to seeing what comes of the project(s).

One slight thing I'd like to mention here, in the interest of furthering the critical diversity is that, in addition to our need to preserve data/archives, I'm increasingly being persuaded of the need to construct data prevention policies and techniques that would allow many people--protestors, youth, citizens, hospital patients, insurance beneficiaries, et al--much needed space to present clean-ish slates.

Northeast Ocean Data Portal

This is so cool.

Data should extend our senses, not be a substitute for them. Likewise, analytics should augment rather than replace our native sense-making capabilities.

Federally Funded Research Results Are Becoming More Open and Accessible

A large database of blood donors' personal information from the AU Red Cross was posted on a web server with directory browsing enabled, and discovered by someone scanning randomly. It is unknown whether anyone else downloaded the file before it was removed.

My hope is that the book I’ve written gives people the courage to realize that this isn’t really about math at all, it’s about power.

because of the judiciary’s concern that such data could be used to single out judges, who were freed from restrictive sentencing guidelines in 2005

So why is everyone talking about getting rid of mandatory minimums? This makes it sound like they've already been gotten rid of.

Outside of the classroom, universities can use connected devices to monitor their students, staff, and resources and equipment at a reduced operating cost, which saves everyone money.

Devices connected to the cloud allow professors to gather data on their students and then determine which ones need the most individual attention and care.

Machine learning:

(courses.csail.mit.edu/18.337/2015/docs/50YearsDataScience.pdf)

nice reference !

For G Suite users in primary/secondary (K-12) schools, Google does not use any user personal information (or any information associated with a Google Account) to target ads.

In other words, Google does use everyone’s information (Data as New Oil) and can use such things to target ads in Higher Education.

But ultimately you have to stop being meta. As Jeff Kaufman — a developer in Cambridge who's famous among effective altruists for, along with his wife Julia Wise, donating half their household's income to effective charities — argued in a talk about why global poverty should be a major focus, if you take meta-charity too far, you get a movement that's really good at expanding itself but not necessarily good at actually helping people.

"Stop being meta" could be applied in some sense to meta systems like Smalltalk and Lisp, because of their tendency to develop meta tools used mostly by developers, instead of "tools" used by mostly everyone else. Blurring the distinction between "everyone else" and developers in their ability to build/use meta tools means delivering tools and practices that can be a bridge to meta-tools. This is something we're trying to do with Grafoscopio and the Data Week.

(Crazy app uptake + riding data + math wizardry = many surprises in store.)

Like Waze for public transit? Way to merge official Open Data from municipal authorities with the power of crowdsourcing mass transportation.

all intellectual property rights, shall remain the exclusive property of the [School/District],

This is definitely not the case. Even in private groups would it ever make sense to say this?

Access

This really just extends the issue of "transfer" mentioned in 9.

Data Transfer or Destruction

This is the first line item I don't feel like we have a proper contingency for or understand exactly how we would handle it.

It seems important to address not just due to FERPA but to contracts/collaborations like that we have with eLife:

What if eLife decides to drop h? Would we, could we delete all data/content related to their work with h? Even outside of contract termination, would we/could we transfer all their data back to them?

The problem for our current relationship with schools is that we don't have institutional accounts whereby we might at least technically be able to collect all related data.

Students could be signing up for h with personal email addresses.

They could be using their h account outside of school so that their data isn't fully in the purview of the school.

Question: if AISD starts using h on a big scale, 1) would we delete all AISD related data if they asked--say everything related to a certain email domain? 2) would we share all that data with them if they asked?

Data cannot be shared with any additional parties without prior written consent of the User except as required by law.”

Something like this should probably be added to our PP.

Data Collection

I'm really pleased with how hypothes.is addresses the issues on this page in our Privacy Policy.

There is nothing wrong with a provider using de-identified data for other purposes; privacy statutes, after all, govern PII, not de-identified data.

Key point.

Application Modern higher education institutions have unprecedentedly large and detailed collections of data about their students, and are growing increasingly sophisticated in their ability to merge datasets from diverse sources. As a result, institutions have great opportunities to analyze and intervene on student performance and student learning. While there are many potential applications of student data analysis in the institutional context, we focus here on four approaches that cover a broad range of the most common activities: data-based enrollment management, admissions, and financial aid decisions; analytics to inform broad-based program or policy changes related to retention; early-alert systems focused on successful degree completion; and adaptive courseware.

Perhaps even more than other sections, this one recalls the trope:

The difference probably comes from the impact of (institutional) “application”.

the risk of re-identification increases by virtue of having more data points on students from multiple contexts

Very important to keep in mind. Not only do we realise that re-identification is a risk, but this risk is exacerbated by the increase in “triangulation”. Hence some discussions about Differential Privacy.
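
A toy illustration of the "triangulation" point, not a Differential Privacy mechanism: it counts how many records share the same combination of attributes, in the spirit of k-anonymity. All records and column choices below are invented for the example. The smaller the smallest group, the easier it is to single someone out.

```python
from collections import Counter

# Hypothetical, made-up records: (major, birth_year, postcode)
students = [
    ("Biology", 1998, "A1"),
    ("Biology", 1998, "B2"),
    ("Biology", 1999, "A1"),
    ("Biology", 1999, "A1"),
    ("History", 1998, "A1"),
    ("History", 1998, "A1"),
    ("History", 1999, "B2"),
    ("History", 1999, "B2"),
]


def smallest_group(records, columns):
    """Size of the smallest group of records sharing the same values in `columns`."""
    groups = Counter(tuple(rec[i] for i in columns) for rec in records)
    return min(groups.values())


k_major = smallest_group(students, [0])          # one data point per student
k_major_year = smallest_group(students, [0, 1])  # two data points
k_all = smallest_group(students, [0, 1, 2])      # three data points
```

With this sample the smallest group shrinks from 4 to 2 to 1 as attributes are combined, so at least one student becomes unique once all three data points are joined.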

the automatic collection of students’ data through interactions with educational technologies as a part of their established and expected learning experiences raises new questions about the timing and content of student consent that were not relevant when such data collection required special procedures that extended beyond students’ regular educational experiences

Useful reminder. Sounds a bit like “now that we have easier access to data, we have to be particularly careful”. Probably not the first reflex of most researchers before they start sending forms to their IRBs. Important for this to be explicitly designated as a concern, in IRBs.

Responsible Use

Again, this is probably a more felicitous wording than “privacy protection”. Sure, it takes as a given that some use of data is desirable. And the preceding section makes it sound like Learning Analytics advocates mostly need ammun… arguments to push their agenda. Still, the notion that we want to advocate for responsible use is more likely to find common ground than this notion that there’s a “data faucet” that should be switched on or off depending on certain stakeholders’ needs. After all, there exists a set of data use practices which are either uncontroversial or, at least, accepted as “par for the course” (no pun intended). For instance, we probably all assume that a registrar should receive the grade data needed to grant degrees and we understand that such data would come from other sources (say, a learning management system or a student information system).

Data sharing over open-source platforms can create ambiguous rules about data ownership and publication authorship, or raise concerns about data misuse by others, thus discouraging liberal sharing of data.

Surprising mention of “open-source platforms”, here. Doesn’t sound like these issues are absent from proprietary platforms. Maybe they mean non-institutional platforms (say, social media), where these issues are really pressing. But the wording is quite strange if that is the case.

captures values such as transparency and student autonomy

Indeed. “Privacy” makes it sound like a single factor, hiding the complexity of the matter and the importance of learners’ agency.

Activities such as time spent on task and discussion board interactions are at the forefront of research.

Really? These aren’t uncontroversial, to say the least. For instance, discussion board interactions often call for careful, mixed-method work with an eye to preventing instructor effect and confirmation bias. “Time on task” is almost a codeword for distinctions between models of learning. Research in cognitive science gives very nuanced value to “time spent on task” while the Malcolm Gladwells of the world usurp some research results. A major insight behind Competency-Based Education is that it can allow for some variance in terms of “time on task”. So it’s kind of surprising that this summary puts those two things to the fore.

Research: Student data are used to conduct empirical studies designed primarily to advance knowledge in the field, though with the potential to influence institutional practices and interventions. Application: Student data are used to inform changes in institutional practices, programs, or policies, in order to improve student learning and support. Representation: Student data are used to report on the educational experiences and achievements of students to internal and external audiences, in ways that are more extensive and nuanced than the traditional transcript.

Ha! The Chronicle’s summary framed these categories somewhat differently. Interesting. To me, the “application” part is really about student retention. But maybe that’s a bit of a cynical reading, based on an over-emphasis in the Learning Analytics sphere towards teleological, linear, and insular models of learning. Then, the “representation” part sounds closer to UDL than to learner-driven microcredentials. Both approaches are really interesting and chances are that the report brings them together. Finally, the Chronicle made it sound as though the research implied here were less directed. The mention that it has “the potential to influence institutional practices and interventions” may be strategic, as applied research meant to influence “decision-makers” is more likely to sway them than the type of exploratory research we so badly need.

often private companies whose technologies power the systems universities use for predictive analytics and adaptive courseware

the use of data in scholarly research about student learning; the use of data in systems like the admissions process or predictive-analytics programs that colleges use to spot students who should be referred to an academic counselor; and the ways colleges should treat nontraditional transcript data, alternative credentials, and other forms of documentation about students’ activities, such as badges, that recognize them for nonacademic skills.

Useful breakdown. Research, predictive models, and recognition are quite distinct from one another and the approaches to data that they imply are quite different. In a way, the “personalized learning” model at the core of the second topic is close to the Big Data attitude (collect all the things and sense will come through eventually) with corresponding ethical problems. Though projects vary greatly, research has a much more solid base in both ethics and epistemology than the kind of Big Data approach used by technocentric outlets. The part about recognition, though, opens the most interesting door. Microcredentials and badges are a part of a broader picture. The data shared in those cases need not be so comprehensive and learners have a lot of agency in the matter. In fact, when then-Ashoka Charles Tsai interviewed Mozilla executive director Mark Surman about badges, the message was quite clear: badges are a way to rethink education as a learner-driven “create your own path” adventure. The contrast between the three models reveals a lot. From the abstract world of research, to the top-down models of Minority Report-style predictive educating, all the way to a form of heutagogy. Lots to chew on.

“We need much more honesty, about what data is being collected and about the inferences that they’re going to make about people. We need to be able to ask the university ‘What do you think you know about me?’”

The importance of models may need to be underscored in this age of “big data” and “data mining”. Data, no matter how big, can only tell you what happened in the past. Unless you’re a historian, you actually care about the future — what will happen, what could happen, what would happen if you did this or that. Exploring these questions will always require models. Let’s get over “big data” — it’s time for “big modeling”.

Readers are thus encouraged to examine and critique the model. If they disagree, they can modify it into a competing model with their own preferred assumptions, and use it to argue for their position. Model-driven material can be used as grounds for an informed debate about assumptions and tradeoffs. Modeling leads naturally from the particular to the general. Instead of seeing an individual proposal as “right or wrong”, “bad or good”, people can see it as one point in a large space of possibilities. By exploring the model, they come to understand the landscape of that space, and are in a position to invent better ideas for all the proposals to come. Model-driven material can serve as a kind of enhanced imagination.

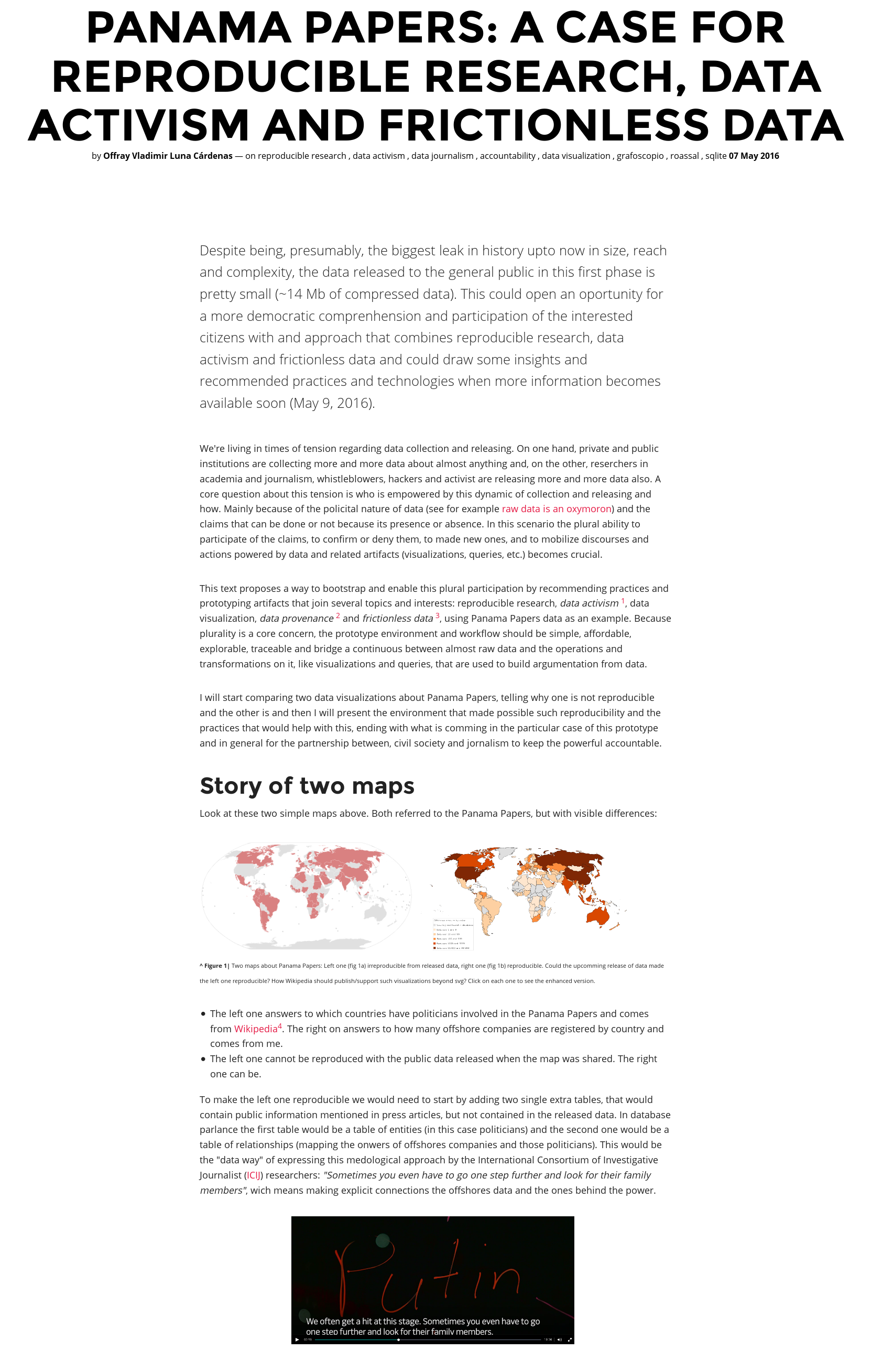

This is a part where my previous comments on data activism and data journalism (see 1, 2 & 3), and on more plural computing environments for engaging concerned citizens in the important issues of our time, could intersect with Victor's discourse.

The Gamma: Programming tools for data journalism

(b) languages for novices or end-users, [...] If we can provide our climate scientists and energy engineers with a civilized computing environment, I believe it will make a very significant difference.

But data journalists, and in fact data activists, social scientists, and so on, could be a "different type of novice", one that is more critically and politically involved (in the broader sense of the word "political").

The wider dialogue on important matters that is mediated, backed up and understood by dealing with data (such as climate change) requires more voices than the ones involved today. Because those voices need to reason and argue using data, we need to go beyond climate scientists or energy engineers as the only ones who need a "civilized computing environment" to participate in the important, complex and urgent matters of today's world. Previously, these more critical voices (activists, journalists, scientists) have helped to make policy makers accountable and more sensitive to other important and urgent issues.

In that sense, my work with reproducible research on the Panama Papers, as a prototype of a data continuum environment, or other work, like Gamma, could serve as an exploration, invitation and early implementation of what is possible to enrich this data/computing-enhanced dialogue.

I say this despite the fact that my own work has been in much the opposite direction as Julia. Julia inherits the textual interaction of classic Matlab, SciPy and other children of the teletype — source code and command lines.

The idea of a tradition of technologies which are "children of the teletype" is related to the comparison we make in the Data Week workshop/hackathon. In our case we talk about "unix fathers" versus "dynabook children" and the bifurcation/recombination points of these technologies:

If efficiency incentives and tools have been effective for utilities, manufacturers, and designers, what about for end users? One concern I’ve always had is that most people have no idea where their energy goes, so any attempt to conserve is like optimizing a program without a profiler.

The catalyst for such a scale-up will necessarily be political. But even with political will, it can’t happen without technology that’s capable of scaling, and economically viable at scale. As technologists, that’s where we come in.

Maybe we come in earlier, by enabling this conversation (as said previously). The political agenda is currently co-opted by economic interests far removed from a sustainable planet or the common good. Feedback loops can be a place to insert counter-hegemonic discourse to enable a more plural and rational dialogue between civil society and government, beyond the short-term economic interests of current incumbents.

This is aimed at people in the tech industry, and is more about what you can do with your career than at a hackathon. I’m not going to discuss policy and regulation, although they’re no less important than technological innovation. A good way to think about it, via Saul Griffith, is that it’s the role of technologists to create options for policy-makers.

Nice to see this conversation happening between technology and broader socio-political problems so explicit in Bret's discourse.

What we're doing, in fact, is enabling this conversation between technologists and policy-makers first. We're highlighting it via hackathons/workshops, but not reducing it only to what happens there (an interesting critique of the techno-solutionist hackathon is here), and using the feedback loops in social networks, but with the intention of mobilizing a setup that goes beyond them. One example is our Twitter data selfies (picture/link below). The necessity of addressing urgent problems that involve complex techno-socio-political entanglements is felt more in the Global South.

^ Up | Twitter data selfies: a strategy to increase the dialogue between technologists/hackers and policy makers (click here for details).

DATA GOVERNANCE

Data governance suggests a public administration (PA) acting as a single thinking and decision-making body. The concept is easy to absorb, but it often does not reflect the real architecture of large PAs such as, for example, the municipalities of provincial capitals.

Data governance starts from a PA that has designed or implemented its IT platform for 1) managing internal workflows and 2) managing the services delivered to users, in such a way as to eliminate paper entirely and to allow only data entry, both internally by the offices and by the users who request public services from public bodies. Data governance can be implemented adequately and effectively only if the PA takes these elements into account. On this point I take the opportunity to mention the 7 ICT platforms that the 14 large Italian metropolitan cities must build under PON METRO. This is an opportunity for the 14 large Italian cities to adopt the same DATA GOVERNANCE, since the 7 ICT platforms are required to be interoperable with one another. Data governance is created together with the design of the IT platforms that allow the PA to "function" in its territories. Data governance is inseparably tied to "data entry". Data entry does not involve scanning paper or handling non-open working formats. In its day-to-day operating procedures, data governance is the foundation of a quality open data policy. A PA's data governance in 2016-17-... can no longer rest on a public employee manually building a CSV file and manually publishing it. Data governance should take into account that dataset publication procedures must be automatic and derive from the functions of the management applications (ICT platforms) already in use in the PA, with no human intervention except in the phase of filtering/redacting data that concern the privacy of individuals.
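The automatic publication step argued for above can be illustrated with a minimal sketch. It assumes that records arrive from a management application as dictionaries and that the privacy-sensitive columns are known in advance; the field names, the sample rows, and the function name `export_open_csv` are all hypothetical and are not taken from PON METRO or any real platform.

```python
import csv
import io

# Assumption: which columns hold personal data. These names are hypothetical.
PRIVATE_FIELDS = {"applicant_name", "tax_code"}


def export_open_csv(records, private_fields=PRIVATE_FIELDS):
    """Return CSV text for `records`, omitting the privacy-sensitive columns."""
    if not records:
        return ""
    # Keep the column order of the first record, minus the private columns.
    columns = [name for name in records[0] if name not in private_fields]
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=columns,
        extrasaction="ignore",  # skip the private columns present in each row
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical rows standing in for what a management application would hold.
records = [
    {"permit_id": "P-001", "applicant_name": "Mario Rossi", "tax_code": "RSSMRA",
     "district": "Centro", "issued": "2016-03-01"},
    {"permit_id": "P-002", "applicant_name": "Anna Bianchi", "tax_code": "BNCNNA",
     "district": "Nord", "issued": "2016-03-04"},
]

open_data = export_open_csv(records)
print(open_data)
```

The only human decision left in this sketch is the content of `PRIVATE_FIELDS`, which mirrors the point that human intervention should be limited to the filtering/redaction phase.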

Credibull score = 9.60 / 10


Page 122

Borgman on terms used by the humanities and social sciences to describe data and other types of analysis

Humanists and social scientists frequently distinguish between primary and secondary information based on the degree of analysis. Yet this ordering sometimes conflates data, sources, and resources, as exemplified by a report that distinguishes "primary resources, e.g., books" from "secondary resources, e.g., catalogs". Resources also categorized as primary were sensor data, numerical data, and field notebooks, all of which would be considered data in the sciences. Rarely would books, conference proceedings, and theses that the report categorizes as primary resources be considered data, except when used for text- or data-mining purposes. Catalogs, subject indices, citation indexes, search engines, and web portals were classified as secondary resources. These are typically viewed as tertiary resources in the library community because they describe primary and secondary resources. The distinctions between data, sources, and resources vary by discipline and circumstance. For the purposes of this book, primary resources are data, secondary resources are reports of research, whether publications or interim forms, and tertiary resources are catalogs, indexes, and directories that provide access to primary and secondary resources. Sources are the origins of these resources.

Page XVIII

Borgman notes that no social framework exists for data that is comparable to the framework that exists for analysis. Cf. Kitchin 2014, who argues that before big data we privileged analysis over data to the point that we threw away the data afterwards. This is what creates the holes in our archives.

These wondrous capabilities [of data management] must be compared to the remarkably stable scholarly communication system in which they exist. The reward system continues to be based on publishing journal articles, books, and conference papers. Peer review legitimizes scholarly work. Competition and cooperation are carefully balanced. The means by which scholarly publishing occurs is in an unstable state, but the basic functions remain relatively unchanged. While capturing and managing the "data deluge" is a major driver of scholarly infrastructure developments, no social framework for data exists that is comparable to that for publishing.

Page 220

Humanistic research takes place in a rich milieu that incorporates the cultural context of artifacts. Electronic text and models change the nature of scholarship in subtle and important ways, which have been discussed at great length since the humanities first began to contemplate the scholarly application of computing.

Page 217

Methods for organizing information in the humanities follow from their research practices. Humanists do not rely on subject indexing to locate material to the extent that the social sciences or sciences do. They are more likely to be searching for new interpretations that are not easily described in advance; the journey through texts, libraries, and archives often is the research.

Page 223

Borgman is discussing here the difference between the way humanists handle data and the way scientists and social scientists do:

When generating their own data such as interviews or observations, humanists' efforts to describe and represent data are comparable to those of scholars in other disciplines. Often humanists are working with materials already described by the originator or holder of the records, such as libraries, archives, government agencies, or other entities. Whether or not the desired content already is described as data, scholars need to explain its evidentiary value in their own words. That report often becomes part of the final product. While scholarly publications in all fields set data within a context, the context and interpretation are scholarship in the humanities.

Pages 220-221

Digital humanities projects result in two general types of products. Digital libraries arise from scholarly collaborations and the initiatives of cultural heritage institutions to digitize their sources. These collections are popular for research and education. … The other general category of digital humanities products consists of assemblages of digitized cultural objects with associated analyses and interpretations. These are the equivalent of digital books in that they present an integrated research story, but they are much more, as they often include interactive components and direct links to the original sources on which the scholarship is based. … Projects that integrate digital records for widely scattered objects are a mix of a digital library and an assemblage.

Page 219

In the humanities, it is difficult to separate artifacts from practices or publications from data.

Page 219

Humanities scholars integrate and aggregate data from many sources. They need tools and services to analyze digital data, as others do in the sciences and social sciences, but also tools that assist them in interpretation and contemplation.

Page 215

What seems a clear line between publications and data in the sciences and social sciences is a decidedly fuzzy one in the humanities. Publications and other documents are central sources of data to humanists. … Data sources for the humanities are innumerable. Almost any document, physical artifact, or record of human activity can be used to study culture. Humanities scholars value new approaches, and recognizing something as a source of data (e.g., high school yearbooks, cookbooks, or wear patterns in the floor of public places) can be an act of scholarship. Discovering heretofore unknown treasures buried in the world's archives is particularly newsworthy. … It is impossible to inventory, much less digitize, all the data that might be useful to scholarship communities. Also distinctive about humanities data is their dispersion and separation from context. Cultural artifacts are bought and sold, looted in wars, and relocated to museums and private collections. International agreements on the repatriation of cultural objects now prevent many items from being exported, but items that were exported decades or centuries ago are unlikely to return to their original site. … Digitizing cultural records and artifacts makes them more malleable and mutable, which creates interesting possibilities for analyzing, contextualizing, and recombining objects. Yet digitizing objects separates them from their origins, exacerbating humanists’ problems in maintaining the context. Removing text from its physical embodiment in a fixed object may delete features that are important to researchers, such as line and page breaks, fonts, illustrations, choices of paper, bindings, and marginalia. Scholars frequently would like to compare such features in multiple editions or copies.

Page 214

Borgman on information artifacts and communities:

Artifacts in the humanities differ from those of the sciences and social sciences in several respects. Humanists use the largest array of information sources, and as a consequence, the distinction between documents and data is the least clear. They also have a greater number of audiences for the data and the products of the research. Whereas scientific findings usually must be translated for a general audience, humanities findings often are directly accessible and of immediate interest to the general public.

Page 204

Borgman on the different types of data in the social sciences:

Data in the social sciences fall into two general categories. The first is data collected by researchers through experiments, interviews, surveys, observations, or similar means, analogous to scientific methods. … The second category is data collected by other people or institutions, usually for purposes other than research.

Page 202

Borgman on information artifacts in the social sciences

Like the sciences, the social sciences create and use minimal information. Yet they differ in the sources of the data. While almost all scientific data are created by and for scientific purposes, a significant portion of social scientific data consists of records created for other purposes, by other parties.

Borgman, Christine L. 2007. Scholarship in the Digital Age: Information, Infrastructure, and the Internet. Cambridge, Mass: MIT Press.

Page 147

Borgman on the challenges facing the humanities in the age of Big Data:

Text and data mining offer similar grand challenges in the humanities and social sciences. Gregory Crane provides some answers to the question "What do you do with a million books?" Two obvious answers include the extraction of information about people, places, and events, and machine translation between languages. As digital libraries of books grow through scanning efforts such as Google Print, the Open Content Alliance, the Million Books Project, and comparable projects in Europe and China, and as more books are published in digital form, technical advances in data description, analysis, and verification are essential. These large collections differ from earlier, smaller efforts on several dimensions. They are much larger in scale, the content is more heterogeneous in topic and language, the granularity increases when individual words can be tagged, they are noisier than their well-curated predecessors, and their audiences are more diverse, reaching the general public in addition to the scholarly community. Computer scientists are working jointly with humanists, language specialists, and other domain specialists to parse texts, extract named entities and places, augment optical character recognition techniques, and advance the state of the art of information retrieval.

Page 137

Borgman discusses here the case of NASA, which lost the original video recording of the first moon landing in 1969. Backups exist, apparently, but they are of lower quality than the originals.

Page 122

Here Borgman suggests that there is some confusion, or lack of overlap, between the words that humanists and social scientists use to distinguish types of information and the language used to describe data.

Humanists and social scientists frequently distinguish between primary and secondary information based on the degree of analysis. Yet this ordering sometimes conflates data, sources, and resources, as exemplified by a report that distinguishes "primary resources, e.g., books" from "secondary resources, e.g., catalogs". Resources also categorized as primary were sensor data, numerical data, and field notebooks, all of which would be considered data in the sciences. But rarely would books, conference proceedings, and theses that the report categorizes as primary resources be considered data, except when used for text- or data-mining purposes. Catalogs, subject indices, citation indexes, search engines, and web portals were classified as secondary resources.

Pages 119 and 120

Here Borgman discusses the various definitions of data showing them working across the fields

The following definition of data is widely accepted in this context: a reinterpretable representation of information in a formalized manner suitable for communication, interpretation, or processing. Examples of data include a sequence of bits, a table of numbers, the characters on a page, the recording of sounds made by a person speaking, or a moon rock specimen. Definitions of data often arise from individual disciplines, but can apply to data used in science, technology, the social sciences, and the humanities: data are facts, numbers, letters, and symbols that describe an object, idea, condition, situation, or other factors.... The terms data and facts are treated interchangeably, as is the case in legal contexts. Sources of data include observations, computations, experiments, and record-keeping. Observational data include weather measurements... and attitude surveys... or involve multiple places and times. Computational data result from executing a computer model or simulation.... Experimental data include results from laboratory studies such as measurements of chemical reactions or from field experiments such as controlled behavioral studies.... Records of government, business, and public and private life also yield useful data for scientific, social scientific, and humanistic research.

Pages 117 to 119

Here Borgman discusses the ability to go back and forth between data and reports on data; she cites Bourne 2005 on this for biomedicine. She also discusses how, in the pre-digital era, data was understood as a support mechanism for final publication and as a result was allowed to deteriorate or be destroyed after the publications based on it appeared.

Page 115

Borgman makes the point here that while there is a commons in the infrastructure of scholarly publishing, there is less of a commons in the infrastructure for data across disciplines.

The infrastructure of scholarly publishing bridges disciplines: every field produces journal articles, conference papers, and books, albeit in differing ratios. Libraries select, collect, organize, and make accessible publications of all types, from all fields. No comparable infrastructure exists for data. A few fields have major mechanisms for publishing data in repositories. Some fields are in the stage of developing standards and practices to aggregate their data resources and make them more widely accessible. In most fields, especially outside the sciences, data practices remain local, idiosyncratic, and oriented to current usage rather than preservation, curation, and access. Most data collections, where they exist, are managed by individual agencies within disciplines, rather than by libraries or archives. Data managers usually are trained within the disciplines they serve. Only a few degree programs in information studies include courses on data management. The lack of infrastructure for data amplifies the discontinuities in scholarly publishing. Despite common concerns, independent debates continue about access to publications and data.

Page 41

Discussions of digital scholarship tend to distinguish implicitly or explicitly between data and documents. Some view data and documents as a continuum rather than a dichotomy. In this sense, data such as numbers, images, and observations are the initial products of research, and publications are the final products that set research findings in context.

A great paragraph here on the value of interconnection

Scholarly data and documents are of most value when they are interconnected rather than independent. The outcomes of a research project could be understood most fully if it were possible to trace an important finding from a grant proposal, to data collection, to a data set, to its publication, to its subsequent review and comment. Journal articles are more valuable if one can jump directly from the article to those it cites and to later articles that cite the source article. Articles are even more valuable if they provide links to data on which they are based. Some of these capabilities already are available, but their expansion depends more on the consistency of the data description, access arrangements, and intellectual property agreements than on technological advances.

I think here of the line from Jim Gray: "May all your problems be technical."

p. 8 (actually this is linked to p. 7, since p. 8 is excluded)

Another trend is the blurring of the distinction between primary sources, generally viewed as unprocessed or unanalysed data, and secondary sources that set data in context.

Good point about how this is a new thing. On the next page she discusses how we are now collapsing the traditional distinction between primary and secondary sources.

Retrieval methods designed for small databases decline rapidly in effectiveness as collections grow...

This is an interesting point that is missed in the distant reading controversies: it's all very well to say that you prefer close reading, but close reading doesn't scale. Or rather, the methodologies used to decide what to close read were developed when big data didn't exist. How do you combine that with being able to read everything? I.e., you close read Dickens because he's what survived the 19th century as being worth reading. But now, if we could recover everything from the 19th century, how do you justify methodologically not looking more widely?

Page 14

Rockwell and Sinclair note that corporations are mining text including our email; as they say here:

More and more of our private textual correspondence is available for large-scale analysis and interpretation. We need to learn more about these methods to be able to think through the ethical, social, and political consequences. The humanities have traditions of engaging with issues of literacy, and big data should not be an exception. How to analyze, interpret, and exploit big data are big problems for the humanities.

Page 14

Rockwell and Sinclair note that HTML and PDF documents account for 17.8% and 9.2% of (I think) all data on the web while images and movies account for 23.2% and 4.3%.

“knowledge creation”.

The "business" of the university?

big data

Algorithms need supposedly neutral data... This rather goes along with the promotional discourse surrounding these algorithms and recommendation services, which treats their data as "natural", without intrinsic value. (see Bonenfant 2015)

Initially, the digital humanities consisted of the curation and analysis of data that were born digital, and the digitisation and archiving projects that sought to render analogue texts and material objects into digital forms that could be organised and searched and be subject to basic forms of overarching, automated or guided analysis, such as summary visualisations of content or connections between documents, people or places. Subsequently, its advocates have argued that the field has evolved to provide more sophisticated tools for handling, searching, linking, sharing and analysing data that seek to complement and augment existing humanities methods, and facilitate traditional forms of interpretation and theory building, rather than replacing traditional methods or providing an empiricist or positivistic approach to humanities scholarship.

summary of history of digital humanities

Data are not useful in and of themselves. They only have utility if meaning and value can be extracted from them. In other words, it is what is done with data that is important, not simply that they are generated. The whole of science is based on realising meaning and value from data. Making sense of scaled small data and big data poses new challenges. In the case of scaled small data, the challenge is linking together varied datasets to gain new insights and opening up the data to new analytical approaches being used in big data. With respect to big data, the challenge is coping with its abundance and exhaustivity (including sizeable amounts of data with low utility and value), timeliness and dynamism, messiness and uncertainty, high relationality, semi-structured or unstructured nature, and the fact that much of big data is generated with no specific question in mind or is a by-product of another activity. Indeed, until recently, data analysis techniques have primarily been designed to extract insights from scarce, static, clean and poorly relational datasets, scientifically sampled and adhering to strict assumptions (such as independence, stationarity, and normality), and generated and analysed with a specific question in mind.

Good discussion of the different approaches allowed/required by small v. big data.

25% of data was stored in digital form in 2000 (the rest analogue); 94% by 2007.

Kitchin, Rob. 2014. The Data Revolution. Thousand Oaks, CA: SAGE Publications Ltd.


digital data

are there non-digital data?

The visualisation may look like data, but it is a snapshot of how I am connected, it is my rhizomatic digital landscape. For me it reinforces the fact that digital is people.

Really nice way to end the article.

I love Data = People :)

Everyone should acquire the skills to understand data, and analytics.

AMEN!

what do we do with that information?

Interestingly enough, a lot of teachers either don’t know that such data might be available or perceive very little value in monitoring learners in such a way. But a lot of this can be negotiated with learners themselves.

turn students and faculty into data points

Data=New Oil

E-texts could record how much time is spent in textbook study. All such data could be accessed by the LMS or various other applications for use in analytics for faculty and students.”

not as a way to monitor and regulate

Replication data for this study can be found in Harvard's Dataverse

demanded by education policies — for more data

data being collected about individuals for purposes unknown to these individuals

Data collection on students should be considered a joint venture, with all parties — students, parents, instructors, administrators — on the same page about how the information is being used.

there is some disparity and implicit bias

The arrival of quantified self means that it's no longer just what you type that is being weighed and measured, but how you slept last night, and with whom.

Limit retention to what is useful.

So what data does h retain?

Do annotations count as data?

Even if you trust everyone spying on you right now, the data they're collecting will eventually be stolen or bought by people who scare you. We have no ability to secure large data collections over time.

Fair enough.

And "Burn!!" on Microsoft with that link.

Data in Digital Scholarship 23

Data in digital scholarship

Annotation can help us weave that web of linked data.

This pithy statement brings together all sorts of previous annotations. Would be neat to map them.

dynamic documents

A group of experts got together last year at Dagstuhl and wrote a white paper about this.

Basically the idea is that the data, the code, the protocol/analysis/method, and the narrative should all exist as equal objects on the appropriate platform: code in a code repository like GitHub; data in a data repo that understands data formats, like Mendeley Data (my company) and Figshare; protocols somewhere like protocols.io; and the narrative, which ties it all together, still at the publisher. Discussion and review can take the form of comments or, even better, annotations just like I'm doing now.

In a sample of 2,101 scientific papers published between 1665 and 1800, Beaver and Rosen found that 2.2% described collaborative work. Notable was the degree of joint authorship in astronomy, especially in situations where scientists were dependent upon observational data.

Astronomy was an area of collaboration because astronomers needed to share data.

What type of team do you need to create these visualisations? OpenDataCity has a special team of really high-level nerds. Experts on hardware, servers, software development, web design, user experience and so on. I contribute the more mathematical view on the data. But usually a project is done by just one person, who is chief and developer, and the others help him or her. So, it's not like a group project. Usually, it's a single person and a lot of help. That makes it definitely faster, than having a big team and a lot of meetings.

This strengthens the idea that data visualization is a field where a personal approach is still viable, as is also shown by the many individuals who are highly valued as data visualizers.

List of publications on open access research data

nice bibliography!

After graduating from MIT at the age of 29, Loveman began teaching at Harvard Business School, where he was a professor for nine years. While at Harvard, Loveman taught Service Management and developed an interest in the service industry and customer service. He also launched a side career as a speaker and consultant after a 1994 paper he co-authored, titled "Putting the Service-Profit Chain to Work", attracted the attention of companies including Disney, McDonald's and American Airlines. The paper focused on the relationship between company profits and customer loyalty, and the importance of rewarding employees who interact with customers. In 1997, Loveman sent a letter to Phil Satre, the then-chief executive officer of Harrah's Entertainment, in which he offered advice for growing the company. Loveman, who had done some consulting work for the company in 1991, again began to consult for Harrah's and, in 1998, was offered the position of chief operating officer. He initially took a two-year sabbatical from Harvard to take on the role of COO of Harrah's, at the end of which Loveman decided to remain with the company.

Putting the Service-Profit Chain to Work

the most important figures that one needs for management are unknown or unknowable (Lloyd S. Nelson, director of statistical methods for the Nashua corporation), but successful management must nevertheless take account of them.

Distinguish clearly between the data that can be known and the data that cannot be known.

From Bits to Narratives: The Rapid Evolution of Data Visualization Engines

It was an amazing presentation by Mr Cesar A. Hidalgo. It was an eye-opener for me in the area of data visualisation. As a national-level organisation, we have huge amounts of data, but we never thought about data visualisation. Your projects, particularly Pantheon and Immersion, are marvelous, and I came to know that you are using D3. It is a great job.

Around 40% of Swiss research is open access

The entirely quantitative methods and variables employed by Academic Analytics -- a corporation intruding upon academic freedom, peer evaluation and shared governance -- hardly capture the range and quality of scholarly inquiry, while utterly ignoring the teaching, service and civic engagement that faculty perform,

What is missing and trends.

Cough GigaScience Cough. See integrated GigaDB repo http://database.oxfordjournals.org/content/2014/bau018.full

SocialBoost is a tech NGO that promotes open data and coordinates the activities of more than 1,000 IT enthusiasts, the biggest IT companies and government bodies in Ukraine through hackathons for socially meaningful IT projects related to e-government, e-services, data visualization and open government data. SocialBoost has developed dozens of public services, interactive maps, websites for niche communities, as well as state projects such as data.gov.ua and ogp.gov.ua. SocialBoost builds the bridge between civic activists, government and the IT industry through technology. Its main goal is to make government more open by crowdsourcing the creation of innovative public services with the help of civil society.

Great Principles of Computing, by Peter J. Denning and Craig H. Martell

This is a book about the whole of computing—its algorithms, architectures, and designs.

Denning and Martell divide the great principles of computing into six categories: communication, computation, coordination, recollection, evaluation, and design.

"Programmers have the largest impact when they are designers; otherwise, they are just coders for someone else's design."

We should have control of the algorithms and data that guide our experiences online, and increasingly offline. Under our guidance, they can be powerful personal assistants.

Big business has been very militant about protecting their "intellectual property". Yet they regard every detail of our personal lives as theirs to collect and sell at whim. What a bunch of little darlings they are.

OER Data Report

Is it possible to add information to a resource without touching it?

That’s something we’ve been doing, yes.

preferably

Delete "preferably". Limiting the scope of text mining to exclude societal and commercial purposes limits the usefulness to enterprises (especially SMEs that cannot mine on their own) as well as to society. These limitations have ramifications in terms of limiting the research questions that researchers can and will pursue.

Encourage researchers not to transfer the copyright on their research outputs before publication.

This statement is more generally applicable than just to TDM. Besides, "Encourage" is too weak a word here, and from a societal perspective, it would be far better if researchers were to retain their copyright (where it applies), but make their copyrightable works available under open licenses that allow publishers to publish the works, and others to use and reuse it.

To maximize its utility

The unusual data release strategy, involving crowdsourcing on Twitter, is discussed in more detail in this blog: http://blogs.biomedcentral.com/gigablog/2011/08/03/notes-from-an-e-coli-tweenome-lessons-learned-from-our-first-data-doi/

In December 2014, FitBit released a pledge stating that it “is deeply committed to protecting the security of your data.” Still, we may soon be obliged to turn over the sort of information the device is designed to collect in order to obtain medical coverage or life insurance. Some companies currently offer incentives like discounted premiums to members who volunteer information from their activity trackers. Many health and fitness industry experts say it is only a matter of time before all insurance providers start requiring this information.

Related manuscripts:

See also this population genomics study in Nature Genetics that uses this data: http://www.nature.com/ng/journal/v45/n1/full/ng.2494.html See also this blog posting on data citation of this data (and related problems): http://blogs.biomedcentral.com/gigablog/2012/12/21/promoting-datacitation-in-nature/

Accession codes

The panda and polar bear datasets should have been included in the data section rather than hidden in the URLs section. Production removed the DOIs and used (now dead) URLs instead, but for the working links and insight see the following blog: http://blogs.biomedcentral.com/gigablog/2012/12/21/promoting-datacitation-in-nature/

doi:10.1016/j.cell.2014.03.054

More on the backstory and other papers using and citing this data before the Cell publication in this blog posting: http://blogs.biomedcentral.com/gigablog/2014/05/14/the-latest-weapon-in-publishing-data-the-polar-bear/

To date 5'-cytosine methylation (5mC) has not been reported in Caenorhabditis elegans, and using ultra-performance liquid chromatography/tandem mass spectrometry (UPLC-MS/MS) the existence of DNA methylation in T. spiralis was detected, making it the first 5mC reported in any species of nematode.

As a novel and potentially controversial finding, the huge amounts of supporting data are deposited here to assist others to follow on and reproduce the results. This won the BMC Open Data Prize, as the judges were impressed by the numerous extra steps taken by the authors in optimizing the openness and easy accessibility of this data, and were keen to emphasize that the value of open data for such breakthrough science lies not only in providing a resource, but also in conferring transparency to unexpected conclusions that others will naturally wish to challenge. You can see more in the blog posting and interview with the authors here: http://blogs.biomedcentral.com/gigablog/2013/10/02/open-data-for-the-win/

Giga Science Database

For more about GigaDB, see the paper in Database Journal: http://database.oxfordjournals.org/content/2014/bau018.full

The New Politics of Educational Data

Ranty Blog Post about Big Data, Learning Analytics, & Higher Ed

There is a human story behind every data point and as educators and innovators we have to shine a light on it.

three-dimensional inversion recovery-prepped spoiled grass coronal series

ID: BPwPsyStructuralData SubjectGroup: BPwPsy Acquisition: Anatomical DOI: 10.18116/C6159Z

ID: BPwoPsyStructuralData SubjectGroup: BPwoPsy Acquisition: Anatomical DOI: 10.18116/C6159Z

ID: HCStructuralData SubjectGroup: HC Acquisition: Anatomical DOI: 10.18116/C6159Z

ID: SZStructuralData SubjectGroup: SZ Acquisition: Anatomical DOI: 10.18116/C6159Z

Open data

Sadly, there may not be much work on opening up data in Higher Education. For instance, there was only one panel at last year’s international Open Data Conference. https://www.youtube.com/watch?v=NUtQBC4SqTU

Looking at the interoperability of competency profiles, been wondering if it could be enhanced through use of Linked Open Data.

American Statistical Association statement on p-values

right to privacy, while allowing them to make an informed choice about taking reasonable risks to their privacy in order to help advance research

As Big-Data Companies Come to Teaching, a Pioneer Issues a Warning

federally funded research publicly accessible are becoming the norm

I read my first books on data mining back in the early 1990's and one thing I read was that "80% of the effort in a data mining project goes into data cleaning."

"It comes down to what is the reason for our existence? It's to accelerate science, not to make money."

Books on data science and R programming by Roger D. Peng of Johns Hopkins.

Great explanation of 15 common probability distributions: Bernoulli, Uniform, Binomial, Geometric, Negative Binomial, Exponential, Weibull, Hypergeometric, Poisson, Normal, Log Normal, Student's t, Chi-Squared, Gamma, Beta.
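
Several of these distributions are closely related. As a small illustration (my own sketch, not taken from the linked explanation), the Python below compares the Binomial probability mass function with the Poisson one for a large number of trials and a small success probability, a standard case where the two nearly coincide. The function names and the values of `n` and `p` are arbitrary choices for the example.

```python
import math


def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p): k successes in n independent trials."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam): k events when lam are expected on average."""
    return math.exp(-lam) * lam**k / math.factorial(k)


if __name__ == "__main__":
    # Illustrative values only: many trials, small success probability.
    n, p = 1000, 0.003
    for k in range(7):
        print(k, round(binomial_pmf(k, n, p), 4), round(poisson_pmf(k, n * p), 4))
```

The two columns stay close because the Poisson rate is set to `n * p`, the expected number of successes under the Binomial.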

Since its start in 1998, Software Carpentry has evolved from a week-long training course at the US national laboratories into a worldwide volunteer effort to improve researchers' computing skills. This paper explains what we have learned along the way, the challenges we now face, and our plans for the future.

http://software-carpentry.org/lessons/<br> Basic programming skills for scientific researchers.<br> SQL, and Python, R, or MATLAB.

http://www.datacarpentry.org/lessons/<br> Managing and analyzing data.

The journal will accommodate data but should be presented in the context of a paper. The Winnower should not act as a forum for publishing data sets alone. It is our feeling that data in absence of theory is hard to interpret and thus may cause undue noise to the site.

This will be the case also for the data visualizations showed here, once the data is curated and verified properly. Still data visualizations can start a global conversation without having the full paper translated to English.

50 Years of Data Science, David Donoho<br> 2015, 41 pages

This paper reviews some ingredients of the current "Data Science moment", including recent commentary about data science in the popular media, and about how/whether Data Science is really different from Statistics.

The now-contemplated field of Data Science amounts to a superset of the fields of statistics and machine learning which adds some technology for 'scaling up' to 'big data'.

The explosion of data-intensive research is challenging publishers to create new solutions to link publications to research data (and vice versa), to facilitate data mining and to manage the dataset as a potential unit of publication. Change continues to be rapid, with new leadership and coordination from the Research Data Alliance (launched 2013): most research funders have introduced or tightened policies requiring deposit and sharing of data; data repositories have grown in number and type (including repositories for “orphan” data); and DataCite was launched to help make research data cited, visible and accessible. Meanwhile publishers have responded by working closely with many of the community-led projects; by developing data deposit and sharing policies for journals, and introducing data citation policies; by linking or incorporating data; by launching some pioneering data journals and services; by the development of data discovery services such as Thomson Reuters’ Data Citation Index (page 138).

It doesn’t work if we think the people who disagree with us are all motivated by malice, or that our political opponents are unpatriotic. Democracy grinds to a halt without a willingness to compromise; or when even basic facts are contested, and we listen only to those who agree with us.

C'mon, civic technologists, government innovators, open data advocates: this can be a call to arms. Isn't the point of "open government" to bring people together to engage with their leaders, provide the facts, and allow more informed, engaged debate?

"A friend of mine said a really great phrase: 'remember those times in early 1990's when every single brick-and-mortar store wanted a webmaster and a small website. Now they want to have a data scientist.' It's good for an industry when an attitude precedes the technology."

UT Austin SDS 348, Computational Biology and Bioinformatics. Course materials and links: R, regression modeling, ggplot2, principal component analysis, k-means clustering, logistic regression, Python, Biopython, regular expressions.

paradox of unanimity - Unanimous or nearly unanimous agreement doesn't always indicate the correct answer. When agreement would be unlikely if everyone were judging independently, unanimity points to a problem with the system.

Witnesses who only saw a suspect for a moment are not likely to be able to pick them out of a lineup accurately. If several witnesses all pick the same suspect, you should be suspicious that bias is at work. Perhaps these witnesses were cherry-picked, or they were somehow encouraged to choose a particular suspect.
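
The lineup reasoning can be sketched with Bayes' rule. This is a toy model of my own, not the analysis from the source: it assumes each witness independently picks the suspect with probability `p_pick` when the lineup is fair, that a biased lineup makes every witness pick the suspect, and that bias is rare a priori. All three numbers are made-up assumptions.

```python
def posterior_bias(n_witnesses, p_pick=0.6, prior_bias=0.01, p_pick_if_biased=1.0):
    """Probability that the lineup is biased, given that all n witnesses agree.

    p_pick:           chance one witness picks the suspect in a fair lineup (assumed)
    prior_bias:       chance the lineup is biased before seeing any witnesses (assumed)
    p_pick_if_biased: chance one witness picks the suspect in a biased lineup (assumed)
    """
    likelihood_biased = p_pick_if_biased ** n_witnesses
    likelihood_fair = p_pick ** n_witnesses
    numerator = prior_bias * likelihood_biased
    return numerator / (numerator + (1 - prior_bias) * likelihood_fair)


if __name__ == "__main__":
    for n in (1, 3, 5, 10, 20):
        print(n, round(posterior_bias(n), 4))
```

Under these assumptions, the chance of unanimous agreement from a fair lineup shrinks geometrically with each added witness, so a long unanimous run is increasingly better explained by bias than by everyone independently getting it right.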

Python interface to the R programming language.<br> Use R functions and packages from Python.<br> https://pypi.python.org/pypi/rpy2

Guidelines for publishing GLAM data (galleries, libraries, archives, museums) on GitHub. It applies to publishing any kind of data anywhere.

https://en.wikipedia.org/wiki/Open_Knowledge<br> http://openglam.org/faq/

Set Semantics: This tool is used to set semantics in EPUB files. Semantics are simply, links in the OPF file that identify certain locations in the book as having special meaning. You can use them to identify the foreword, dedication, cover, table of contents, etc. Simply choose the type of semantic information you want to specify and then select the location in the book the link should point to. This tool can be accessed via Tools->Set semantics.

Though it’s described in such a simple way, there might be hidden power in adding these tags, especially when we bring eBooks to the Semantic Web. Though books are the prime example of a “Web of Documents”, they can also contribute to the “Web of Data”, if we enable them. It might take long, but it could happen.
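
To make those "links in the OPF file" concrete, here is a minimal Python sketch that reads them back out. It assumes the EPUB 2 convention, where such links live in a `<guide>` element as `<reference type="..." title="..." href="..."/>` entries. The sample OPF and the `read_semantics` helper are hypothetical, written for illustration, and not taken from any particular book or tool.

```python
import xml.etree.ElementTree as ET

# Namespace used by OPF package documents.
OPF_NS = {"opf": "http://www.idpf.org/2007/opf"}

# Hypothetical, heavily trimmed OPF file (metadata, manifest and spine omitted).
SAMPLE_OPF = """<package xmlns="http://www.idpf.org/2007/opf" version="2.0">
  <guide>
    <reference type="cover" title="Cover" href="cover.xhtml"/>
    <reference type="toc" title="Table of Contents" href="toc.xhtml"/>
    <reference type="dedication" title="Dedication" href="front.xhtml#dedication"/>
  </guide>
</package>"""


def read_semantics(opf_text):
    """Return a dict mapping each semantic type to the location it points at."""
    root = ET.fromstring(opf_text)
    semantics = {}
    for ref in root.findall("opf:guide/opf:reference", OPF_NS):
        semantics[ref.get("type")] = ref.get("href")
    return semantics


if __name__ == "__main__":
    for kind, href in read_semantics(SAMPLE_OPF).items():
        print(kind, "->", href)
```

Once extracted like this, each typed link is already close to a statement of the form "this book has a dedication at this location", which is the kind of thing that could be republished as data.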

The idea was to pinpoint the doctors prescribing the most pain medication and target them for the company’s marketing onslaught. That the databases couldn’t distinguish between doctors who were prescribing more pain meds because they were seeing more patients with chronic pain or were simply looser with their signatures didn’t matter to Purdue.

Users publish coursework, build portfolios or tinker with personal projects, for example.

Useful examples. Could imagine something like Wikity, FedWiki, or other forms of content federation to work through this in a much-needed upgrade from the “Personal Home Pages” of the early Web. Do see some connections to Sandstorm and the new WordPress interface (which, despite being targeted at WordPress.com users, also works on self-hosted WordPress installs). Some of it could also be about the longstanding dream of “keeping our content” in social media. Yes, as in the reverse from Facebook. Multiple solutions exist to do exports and backups. But it can be so much more than that and it’s so much more important in educational contexts.

(Not surprisingly, none of the bills provide for funding to help schools come up to speed.)