for - paper - Characteristic processes of human evolution caused the Anthropocene and may obstruct its global solutions (2023) - author - Timothy M. Waring - Zachary T. Wood - Eörs Szathmáry

SRG comment - validation that cultural evolution must make a dramatic shift because - the patterns of cultural evolution that brought human civilization to modernity and the Anthropocene - could end up destroying it - progress trap - cultural evolution - patterns of existing cultural evolution and progress could be our ultimate progress trap

- Dec 2025

-

royalsocietypublishing.org

-

-

We conclude that our species must alter longstanding patterns of cultural evolution to avoid environmental disaster and escalating between-group competition.

for - cultural evolution - futures - directional change - our species must alter longstanding patterns of cultural evolution to avoid - environmental disaster and - escalating between-group competition

- SRG comment - validation for cultural change from traditional patterns that brought us to the anthropocene

Tags

- Zachary T. Wood

- paper - Characteristic processes of human evolution caused the Anthropocene and may obstruct its global solutions (2023)

- cultural evolution - futures - directional change

- Timothy M. Waring

- progress trap - cultural evolution

- SRG comment - validation for cultural change from traditional patterns that brought us to the anthropocene

- SRG comment - validation that cultural evolution must make a dramatic shift

- Eörs Szathmáry


-

- Oct 2025

-

view-su2.highspot.com

-

Some educators and administrators still see professional skills as a bonus, or a logical outcome from learning technical skills. They also assume they’re too subjective to teach or measure (which we’ve proven is not the case thanks to tools like SJTs). But as this report has shown, the lack of professional skills development has graduates struggling to communicate, adapt, and lead in today’s workforce, skills that are particularly important in today’s AI-driven workforce.

The Opportunity: Provide faculty and staff with professional development that underscores the importance of professional skills and equips them with effective methods to assess and develop these skills. By learning how to measure and develop these skills, faculty can better integrate professional skills evaluation into coursework, and ensure graduates have the competencies employers demand.

The idea that professional skills are difficult to assess (and impossible to assess at scale) is proving to be a myth. It's doable; we just have to want to.

-

- Mar 2025

-

superforms.rocks

-

-

www.youtube.com

-

when you constantly supply your body with animal protein, it never fully switches on its recycling system. This doesn't mean you need to become vegetarian, but considering having a few meat-free days each week might actually help boost your body's natural cleaning process

for - adjacency - autophagy - transition to plant-based diet - validation for flexitarian diet - TP cafe

-

- Jan 2025

-

www.youtube.com

-

like it or not, fate has placed the current generation in a position where it will determine whether we march on to disaster or whether the human species and much other life on Earth can be saved from a terrible, indescribable fate

for - rapid whole system change - Deep Humanity - Tipping Point Festival - validation for - Indyweb - Stop Reset Go - source - Youtube - The End of Organized Humanity - Noam Chomsky - 2024, Dec

-

- Dec 2024

-

www.resilience.org

-

Transcending a global culture of deep complexity and diversity is not a singular project, but can only be the outcome of an enormous multitude of projects, each individual, specific, appropriate to time, place and desire, at all scales. Diversity and multiplicity of approach are not failings but necessities.

for - A Transcender Manifesto - validation for - Indyweb - diversity

-

we cannot proceed effectively alone

for - A Transcender Manifesto - validation for - Indyweb - individual / collective gestalt - evolutionary learning

-

developing our individual and collective heuristic capacities to judge ‘life-like’ characteristics to be the most fundamental educational endeavour

for - validation - for Indyweb

validation - for Indyweb - The Indyweb is designed for the individual / collective gestalt - for individual evolutionary learning intertwingled with - collective evolutionary learning

-

-

medium.com

-

I feel like sharing with you some of my observations as a frame analyst, as someone who analyzes semantic frames and how they structure a discourse to, in this case, to disempower us and keep us embedded within a conversation that is primarily about the actions of corporations and nation-states and that disengages us from direct grassroots action and taking power into our own hands

for - adjacency / validation - for justifying Tipping Point Festival - TPF - bottom up, grassroots direct action Vs - top down, corporate, policy action - Joe Brewer - framing analysis - using cognitive linguistics

adjacency / validation - between - justifying Tipping Point Festival - TPF - bottom up, grassroots direct action - top down, corporate, policy action - Joe Brewer - framing analysis - cognitive linguistics - adjacency relationship - We need both bottom-up and top-down action, but Joe's framing analysis explains why there isn't more bottom-up direct action - It requires a lot of skill to find the leverage points, and the weakness of people power is its lack of money - To awaken the sleeping giant of the commons is the purpose of the Tipping Point Festival (TPF)


-

- Nov 2024

-

illuminem.com

-

Rogan Hallam’s award-winning research at King’s College London demonstrates that when people sit in small circles to discuss a social issue (with biscuits on the table!) for most of a public meeting, 80% leave feeling empowered. In contrast, only 20% feel empowered after a conventional meeting with a series of speakers and no small group discussion.

for - TPC network - validation

-

-

www.nature.com

-

Adhering to the designated community’s metadata and curation standards, along with providing stewardship of the data holdings e.g. technical validation, documentation, quality control, authenticity protection, and long-term persistence.

TRSP Desirable Characteristics

-

- Sep 2024

- Aug 2024

-

www.youtube.com

-

even those people who are deeply involved in introspection find that their minds raise objections which prevent them from going deeper into their experiential investigation, and I feel that the work you're doing is making a very significant contribution to those people as well

for - recognizing true nature - validation of conceptual method - Rupert Spira

-

I try to validate the effort I make by paying attention to a specific group of people, people more or less like me, who do not allow themselves to open up to the introspective path unless and until they have some kind of conceptual model that validates that introspective path. If the head doesn't allow the heart to have the experience by direct acquaintance, then in those people the heart doesn't get there. The brain is the bouncer of the heart.

for - recognizing true nature - validation of conceptual approach - brain is the bouncer for the heart - Bernardo Kastrup

-

-

www.sciencedaily.com

-

for - climate change impacts - marine life - citizen-science - potential project - climate departure - ocean heating impacts - marine life - marine migration - migrating species face collapse - migration to escape warming oceans - population collapse

main research findings - Study involved 146 species of temperate or subpolar fish and 2,572 time series - Extremely fast-moving species (17 km/year) showed large declines in population, while - fish that did not shift showed negligible decline - Those on the northernmost edge experienced the largest declines - There is speculation that the fastest-moving species are also the ones with the fewest evolutionary adaptations for new environments

-

-

www.swissre.com

-

Inventories of species remain incomplete – mainly due to limited field sampling – to provide an accurate picture of the extent and distribution of all components of biodiversity (Purvis/Hector 2000, MEA 2003).

for - open source, citizen science biodiversity projects - validation - open source, citizen science climate departure project - validation

open source, citizen science biodiversity projects - validation - Inventories of species remain incomplete - mainly due to limited field sampling to provide an accurate picture of the extent and distribution of all components of biodiversity - Purvis/Hector 2000, MEA 2003

-

-

-

support cross-divisional thinking and that the best ideas are already in a company and it's just a matter of sort of getting people together

for - neuroscience - validation for Stop Reset Go open source participatory system mapping for design innovation

neuroscience - validation for Stop Reset Go open source participatory system mapping for design innovation - bottom-up collective design efficacy - What Henning Beck validates for companies can also apply to using Stop Reset Go participatory system mapping within an open space to de-silo and be as inclusive as possible of many different silo'd transition actors

-

- Jul 2024

-

-

you can't, as one person, you know, solve a global problem like this; it starts at a 00:34:58 community-wide level

for - validation - cosmolocal community organization - validation - TPF - validation - Living Cities Earth

-

we are going to need decentralized networks of communities figuring out how to support one another

for - validation - cosmolocal community organization - validation - TPF - validation - Living Cities Earth

-

if you could get everyone on the planet to do one thing what would it be and she said stay exactly where you are and figure out 00:33:30 what it is that you can do in your local

for - cosmolocal movement - validation - Jay Griffith - leverage point - cosmolocal - validation - TPF - validation - Living Cities Earth

-

- Jun 2024

-

coevolving.com

-

Thus, as in the case of natural languages, the pattern language is generative. It not only tells us the rules of arrangement, but shows us how to construct arrangements as many as we want which satisfy the rules.

-

-

-

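Isn't combining these two approaches just the classic pairing of defaults plus custom extensions?

ValidatorContext is responsible for producing customized validators; Validator Factory is responsible for producing the default validators.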

-

- Jan 2024

-

-

the contents of your mind, your self model, your model of the outside world, where the boundary between you and the outside world is (so where do you end and the outside world begins), all of these things are constantly being constructed 00:00:36 and created.

for - quote - Michael Levin - quote - Human INTERBeCOMing

- quote

- ()

- the contents of your mind,

- your self model,

- your model of the outside world,

- where the boundary between you and the outside world is

- so where do you end and the outside world begins

- all of these things are constantly being constructed and created.

- ()

validation for - Human INTERBeCOMing - Levin validates the Deep Humanity redefinition of human being as human INTERbeCOMing: a verb, an ongoing evolutionary process rather than a fixed, static object

- quote

-

- Dec 2023

-

docdrop.org

-

we have to be very careful when we respond to climate change that we're not exacerbating the other ones that are there, and 00:12:34 ideally we want to try and respond to all of these challenges at the same time. There are a lot of crossovers between them, but there are also real risks that sometimes you solve one thing and cause another. Now, in contemporary society we have been very 00:12:47 good at reductionist thinking, at silos of thinking, fixing one bit and then causing another problem elsewhere. We don't have that opportunity anymore; we have to start to think of these issues at a system level

-

for: progress trap - Kevin Anderson

-

validation: SRG mapping tool, Indyweb

-

-

- Aug 2023

-

ajv.js.org

-

Ajv generates code to turn JSON Schemas into super-fast validation functions that are efficient for v8 optimization.
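Ajv gets its speed by turning a schema into ordinary JavaScript once and then reusing the resulting function. The sketch below illustrates that compile-once idea on a deliberately tiny, hypothetical subset of JSON Schema: an object schema with `required` and per-property `type`. `compile` and `typeCheck` are illustrative names. This is neither Ajv's API nor the code Ajv actually generates.

```javascript
// Toy "compile once, validate many times" sketch in the spirit of Ajv.
// Hypothetical subset only: an object schema with `required` and
// per-property `type`. Not Ajv's API or its generated code.
function typeCheck(expr, type) {
  switch (type) {
    case "string":
    case "number":
    case "boolean":
      return `(typeof ${expr} === "${type}")`;
    case "integer":
      return `Number.isInteger(${expr})`;
    case "array":
      return `Array.isArray(${expr})`;
    case "object":
      return `(${expr} !== null && typeof ${expr} === "object" && !Array.isArray(${expr}))`;
    default:
      throw new Error(`unsupported type: ${type}`);
  }
}

function compile(schema) {
  // Assumes the top-level schema describes an object.
  const lines = [`if (!${typeCheck("data", "object")}) return false;`];
  for (const key of schema.required || []) {
    lines.push(
      `if (!Object.prototype.hasOwnProperty.call(data, ${JSON.stringify(key)})) return false;`
    );
  }
  for (const [key, sub] of Object.entries(schema.properties || {})) {
    const expr = `data[${JSON.stringify(key)}]`;
    // Absent properties are left to `required`; present ones must match.
    lines.push(`if (${expr} !== undefined && !${typeCheck(expr, sub.type)}) return false;`);
  }
  lines.push("return true;");
  // The source is generated once; the returned function is reused per call.
  return new Function("data", lines.join("\n"));
}

const validateUser = compile({
  type: "object",
  required: ["name"],
  properties: { name: { type: "string" }, age: { type: "integer" } },
});
validateUser({ name: "Ada", age: 36 }); // true
validateUser({ age: 36 }); // false: missing name
```

The cost of building the function is paid once, and each later call is a straight run of `if` checks with no schema walking.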

-

- May 2023

-

en.wikipedia.org

-

Rose therefore recommended "explicit consistency checks in a protocol ... even if they impose implementation overhead".

-

- Jan 2023

-

stackoverflow.com

- Sep 2022

-

docs.openvalidation.io

-

openVALIDATION enables programming of complex validation rules using natural language, such as German or English.


-

-

rororo.readthedocs.io

-

"detail": [ { "loc": [ "body", "name" ], "message": "Field required" }, { "loc": [ "body", "email" ], "message": "'not-email' is not an 'email'" } ]

Not compliant with Problem Details (RFC 7807), which requires "detail" to be a string.
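One way to keep the per-field list while satisfying Problem Details is to put a human-readable summary in `detail` and move the structured list into an extension member, which RFC 7807 allows. A minimal sketch follows. It assumes a hypothetical `errors` extension member, a placeholder `type` URI, and a 422 status. None of these come from rororo.

```javascript
// Turn field errors shaped like the rororo example into an RFC 7807
// problem document. `detail` stays a string; the structured list goes
// into a hypothetical `errors` extension member.
function toProblemDetails(fieldErrors) {
  const detail = fieldErrors
    .map((e) => `${e.loc.join(".")}: ${e.message}`)
    .join("; ");
  return {
    type: "https://example.com/probs/validation-error", // placeholder URI
    title: "Request validation failed", // assumed wording
    status: 422, // assumed status code
    detail,
    errors: fieldErrors,
  };
}

const problem = toProblemDetails([
  { loc: ["body", "name"], message: "Field required" },
  { loc: ["body", "email"], message: "'not-email' is not an 'email'" },
]);
// problem.detail:
// "body.name: Field required; body.email: 'not-email' is not an 'email'"
```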

-

-

docs.openvalidation.io

-

x-ov-rules:
  culture: en
  rule: |
    the location of the applicant MUST be Dortmund

-

-

github.com

-

One of the reasons I initially pushed back on the creation of a JSON Schema for V3 is because I feared that people would try to use it as a document validator. However, I was convinced by other TSC members that there were valid uses of a schema beyond validation.

annotation meta: may need new tag: fear would be used for ... valid uses for it beyond ...

-

I'd also love to see a JSON schema along with the specification. I don't really trust myself to be able to accurately read the spec in its entirety, so for 2.0 I fell back heavily on using the included schemas to verify that what I'm generating is actually intelligible (and it worked; they caught many problems).

-

-

-

allOf takes an array of object definitions that are validated independently but together compose a single object.
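Put another way, a value passes `allOf` only if it passes each subschema on its own. The minimal interpreter sketch below shows two partial object definitions composing into one. It assumes a hypothetical subset of keywords (`type: "object"`, `required`, `allOf`) and uses an illustrative `isValid` function.

```javascript
const isObject = (v) => v !== null && typeof v === "object" && !Array.isArray(v);

// Tiny interpreter for a hypothetical subset of JSON Schema.
function isValid(schema, value) {
  if (schema.type === "object" && !isObject(value)) return false;
  // As in JSON Schema, `required` only constrains object instances.
  if (isObject(value)) {
    for (const key of schema.required || []) {
      if (!Object.prototype.hasOwnProperty.call(value, key)) return false;
    }
  }
  // allOf: each subschema is checked independently against the same value.
  for (const sub of schema.allOf || []) {
    if (!isValid(sub, value)) return false;
  }
  return true;
}

const person = {
  allOf: [
    { type: "object", required: ["name"] },
    { type: "object", required: ["email"] },
  ],
};
isValid(person, { name: "Ada", email: "ada@example.com" }); // true
isValid(person, { name: "Ada" }); // false: second subschema fails
```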

-

- Jun 2022

-

docs.angularjs.org

-

Lots of info on form validation


-

-

webflow.com

-

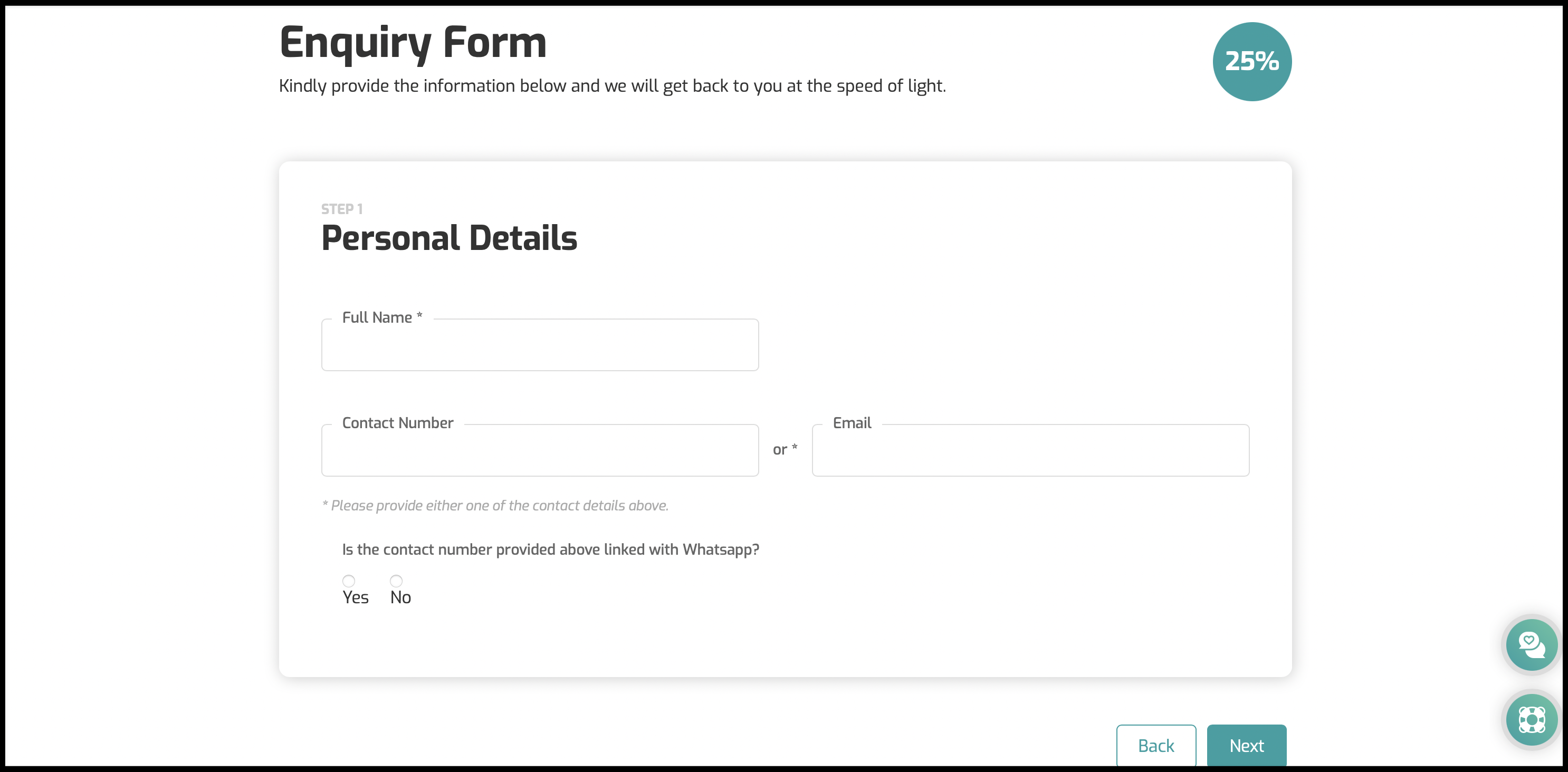

Webflow MultiStep Form - DEMO 2

- Webflow Showcase Link: https://webflow.com/website/Advanced-Form-with-Multi-Steps-and-Conditional-Logic

- View in Webflow Designer: Link here

- Live Site Link: https://sketchzlab-test-1.webflow.io

-

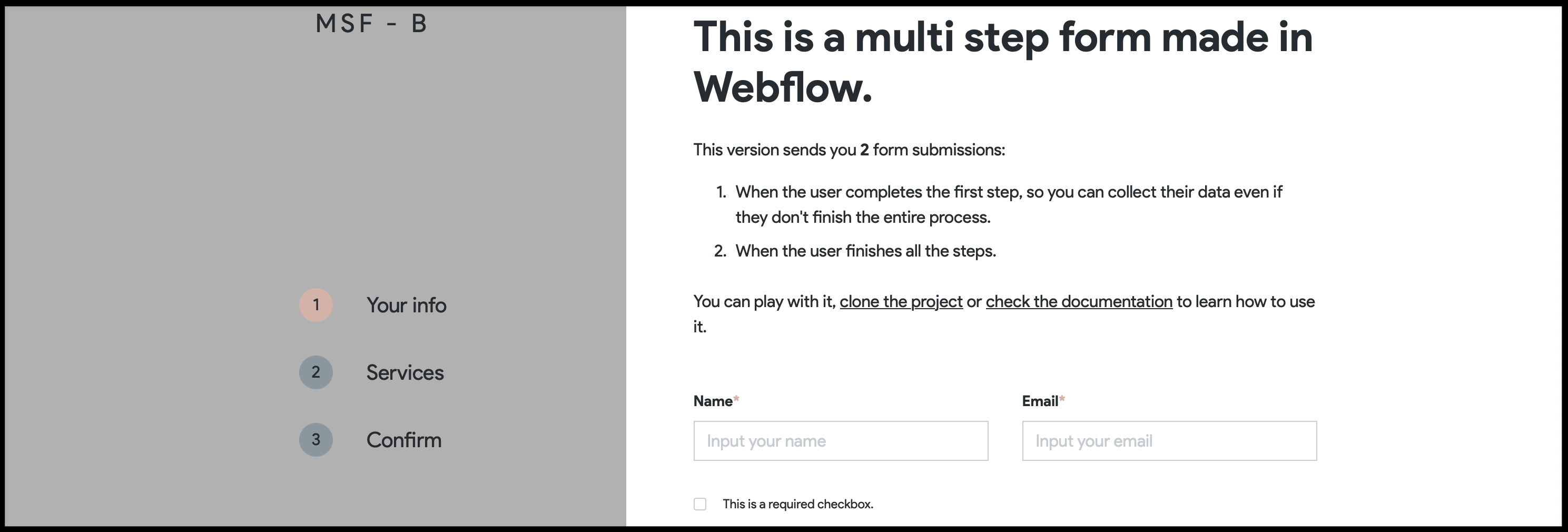

Webflow MultiStep Form - DEMO

- Webflow Showcase Link: https://webflow.com/website/Multi-Step-Form-with-Input-Validation

-

View In Webflow Designer: Link Here

-

Live Site Link: https://brota-msf.webflow.io/variant-b

-

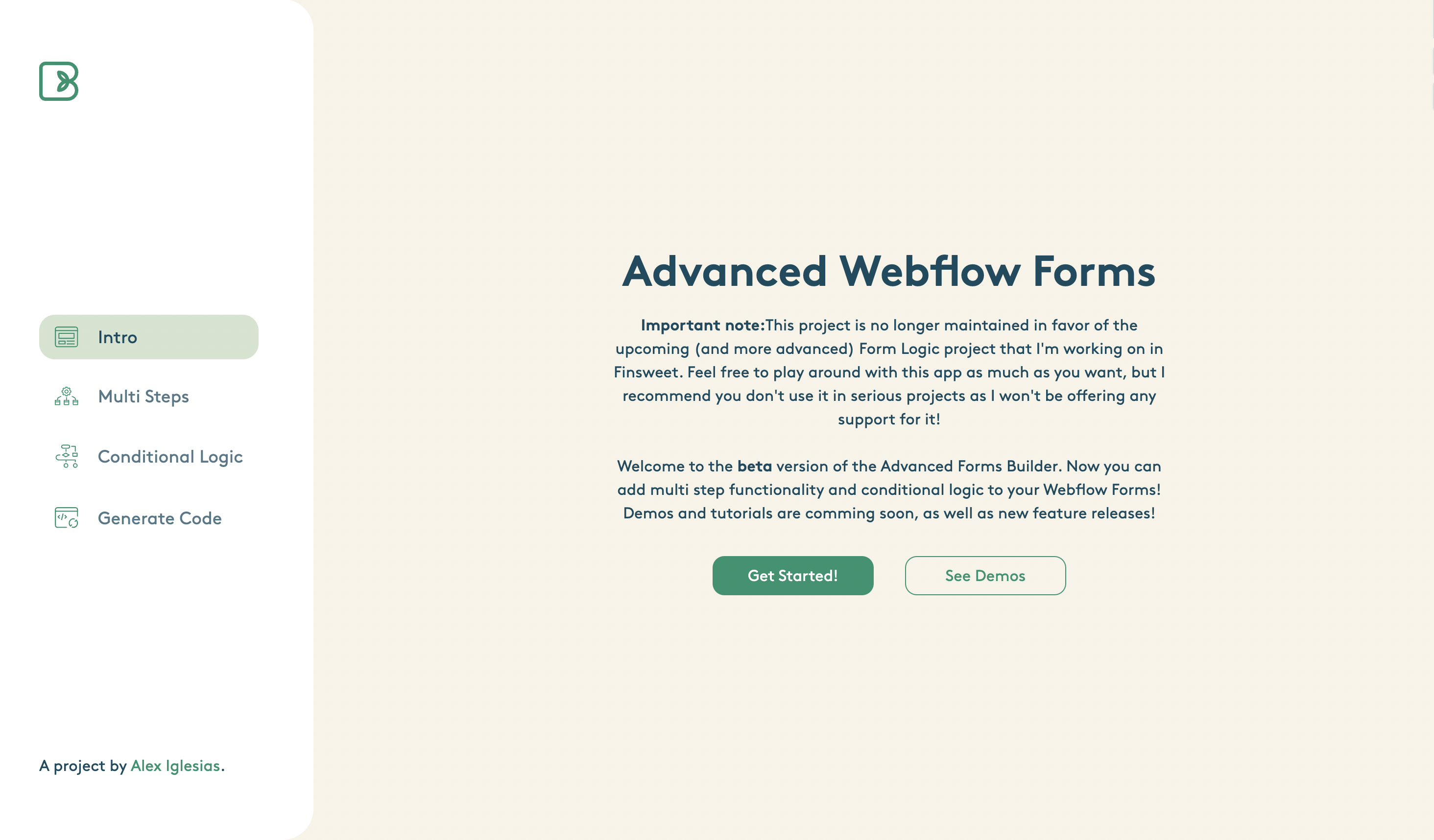

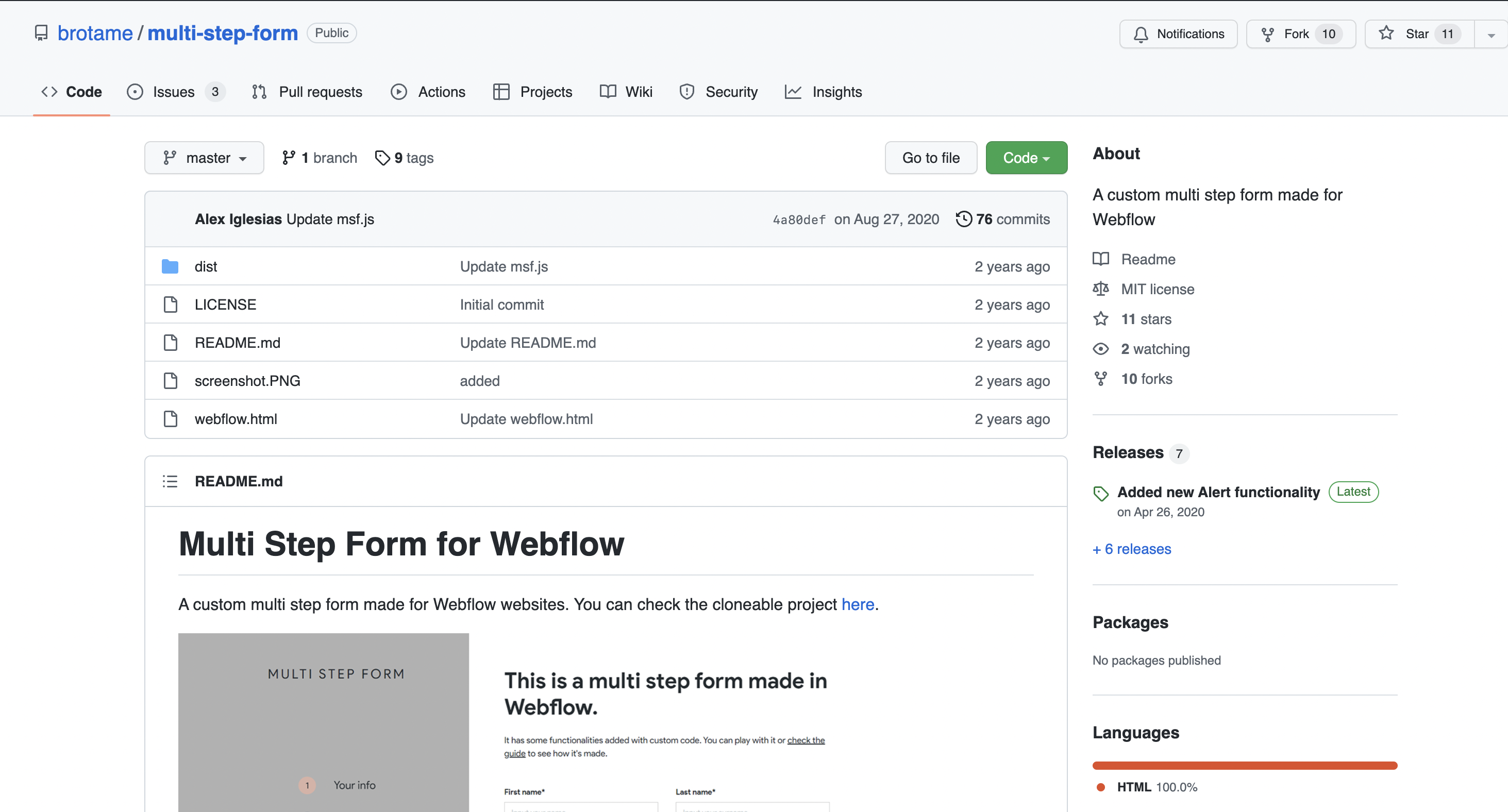

Webflow MultiStep Form - CODE GENERATOR

Live Site: https://advanced-forms.webflow.io/

Github Documentation: https://github.com/brotame/multi-step-form

-

-

webflow.com

-

Form Validation

- Webflow Edit Link: https://webflow.com/website/Easy-Form-Validation

- Live Page: https://easy-form-validation.webflow.io/

-

- Apr 2022

-


-

- Feb 2022

-

www.jacobsen.no

-

Hence an email address/mailbox/addr-spec is "local-part@domain"; "local-part" is composed of one or more of 'word' and periods; "word" can be an "atom" which can include anything except "specials", control characters or blank/space; and specials (the *only* printable ASCII characters [other than space, if you call space "printable"] *excluded* from being a valid "local-part") are: ()<>@,;:\".[] Therefore by the official standard for email on the internet, the plus sign is as much a legal character in the local-part of an email address as "a" or "_" or "-" or most any other symbol you see on the main part of a standard keyboard.
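The grammar quoted above translates fairly directly into a check. The sketch below (hypothetical function names) accepts a local-part made of dot-separated atoms. An atom is one or more printable ASCII characters other than space and the listed specials. The sketch deliberately ignores RFC 822 quoted strings, so in that one respect it is stricter than the full standard.

```javascript
// RFC 822 "specials": these (plus space and control characters) may not
// appear inside an atom.
const SPECIALS = new Set('()<>@,;:\\".[]');

function isAtom(s) {
  if (s.length === 0) return false;
  for (const ch of s) {
    const code = ch.codePointAt(0);
    // Printable ASCII excluding space is 33..126.
    if (code < 33 || code > 126 || SPECIALS.has(ch)) return false;
  }
  return true;
}

// local-part = word *("." word); here each word must be an atom
// (quoted strings are not handled in this sketch).
function isAtomLocalPart(localPart) {
  return localPart.split(".").every(isAtom);
}

isAtomLocalPart("jacobsen+spam"); // true: "+" is an ordinary atom character
isAtomLocalPart("john doe"); // false: space is not allowed in an atom
```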

-

There's a common design flaw on many many websites that require an email address to register; most recently I came across this bug on CNet's download.com site: for some reason they don't accept me when I try to register an email address containing a "+", and they then send me back saying my address is invalid. It isn't!

-

"+" is a completely valid character in an email address; as defined by the internet messaging standard published in 1982(!) RFC 822 (page 8 & 9)... Any website claiming anything else is wrong by definition, plus they are prohibiting me and many fellow anti-spam activists from tracking where inbound spam comes from:

-

- Nov 2021

-

twitter.com

-

World Health Organization (WHO) on Twitter. (n.d.). Twitter. Retrieved 3 November 2021, from https://twitter.com/WHO/status/1455869992965181441

-

- Sep 2021

-

- Aug 2021

-

softwareengineering.stackexchange.com

-

Input check = Tests the user input, as opposed to some internal data structure or the output of a function.

-

- Jul 2021

-

www.reddit.com

-

u/dawnlxh. (2021). Reviewing peer review: does the process need to change, and how?. r/BehSciAsk. Reddit

-

- Jun 2021

-

psyarxiv.com

-

Agarwal, A. (2021). COVID19 Stress Scale—Nepali Version. PsyArXiv. https://doi.org/10.31234/osf.io/374hx

-

- May 2021

-

covid19vaccine.health.ny.gov

-

Excelsior Pass. (n.d.). COVID-19 Vaccine. Retrieved 12 May 2021, from https://covid19vaccine.health.ny.gov/excelsior-pass

-

-

-

Fisman, D., Greer, A. L., & Tuite, A. (2020). Derivation and Validation of Clinical Prediction Rule for COVID-19 Mortality in Ontario, Canada. MedRxiv, 2020.06.21.20136929. https://doi.org/10.1101/2020.06.21.20136929

-

-

twitter.com

-

Maarten van Smeden on Twitter. (n.d.). Twitter. Retrieved 4 March 2021, from https://twitter.com/MaartenvSmeden/status/1328093246829064192

-

- Mar 2021

-

final-form.org

-

Your validation functions should also treat undefined and '' as the same. This is not too difficult since both undefined and '' are falsy in javascript. So a "required" validation rule would just be error = value ? undefined : 'Required'.
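The quoted rule fits in one line. One caveat: `0` and `false` are also falsy in JavaScript, so a bare truthiness test rejects them too. The sketch below (function names hypothetical) shows the quoted rule next to a variant that treats only `undefined`, `null`, and `''` as empty. The variant may suit numeric or checkbox fields better.

```javascript
// The rule as quoted: any falsy value counts as missing.
const required = (value) => (value ? undefined : "Required");

// Variant: only undefined, null and '' count as missing,
// so a numeric 0 or a boolean false still passes.
const requiredStrict = (value) =>
  value === undefined || value === null || value === "" ? "Required" : undefined;

required(0); // "Required" (probably not what a quantity field wants)
requiredStrict(0); // undefined, i.e. no error
```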

-

-

-

Using these attributes will show validation errors, or limit what the user can enter into an <input>.

-

The HTML5 form validation techniques in this post only work on the front end. Someone could turn off JavaScript and still submit jank data to a form with the tightest JS form validation. To be clear, you should still do validation on the server.

-

With these JavaScript techniques, the display of server validation errors could be a lot simpler if you expect most of your users to have JS enabled. For example, Rails still encourages you to dump all validation errors at the top of a form, which is lulzy in this age of touchy UX. But you could do that minimal thing with server errors, then rely on HTML5 validation to provide a good user experience for the vast majority of your users.

-

-

-

-

-

We don’t want to invalidate the input if the user removes all text. They may need a moment to think, but the invalidated state sets off an unnecessary alarm.

-

-

stackoverflow.com

-

If you would like to make “empty” include values that consist of spaces only, you can add the attribute pattern=.*\S.*.
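The leading and trailing `.*` matter because the `pattern` attribute has to match the entire value. Browsers wrap it as `^(?:` + pattern + `)$` and skip the check when the value is empty. The sketch below emulates that behavior. The function name is hypothetical, and the `u` flag stands in for the Unicode-aware flag the HTML standard specifies.

```javascript
// Approximate how a browser applies <input pattern="...">: the pattern is
// anchored to the whole value, and an empty value is not checked by
// `pattern` at all (that is the job of `required`).
function matchesPatternAttribute(pattern, value) {
  if (value === "") return true;
  const re = new RegExp(`^(?:${pattern})$`, "u");
  return re.test(value);
}

const NOT_BLANK = ".*\\S.*"; // the attribute value .*\S.*
matchesPatternAttribute(NOT_BLANK, "   "); // false: spaces only
matchesPatternAttribute(NOT_BLANK, "  hi "); // true
```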

-

-

css-tricks.com

-

Positively indicate valid input values Let users know a field has been entered correctly. The browser can give us this information through the :valid CSS selector:

-

-

stackoverflow.com

-

Currently, there is no way to style those little validation tooltips.

-

-

www.the-art-of-web.com

-

Website: <input type="url" name="website" required pattern="https?://.+"> Now our input box will only accept text starting with http:// or https:// and at least one additional character
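`type="url"` and `pattern` are separate constraints: the value must first parse as an absolute URL, and only then is the anchored pattern applied. The rough sketch below runs in Node or a browser. It uses the WHATWG `URL` constructor as a stand-in for the browser's own URL check, which is an approximation, and `isValidWebsite` is a hypothetical name.

```javascript
// Rough emulation of <input type="url" required pattern="https?://.+">.
function isValidWebsite(value) {
  if (value === "") return false; // `required`
  try {
    new URL(value); // type="url": must parse as an absolute URL
  } catch {
    return false;
  }
  // `pattern` must match the whole value.
  return /^(?:https?:\/\/.+)$/u.test(value);
}

isValidWebsite("https://example.com"); // true
isValidWebsite("ftp://example.com"); // false: a URL, but fails the pattern
```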

-

-

stackoverflow.com

-

var applicationForm = document.getElementById("applicationForm");
if (applicationForm.checkValidity()) {
  applicationForm.submit();
} else {
  applicationForm.reportValidity();
}

-

-

www.html5rocks.com

-

-

Therefore client side validation should always be treated as a progressive enhancement to the user experience; all forms should be usable even if client side validation is not present.

-

It's important to remember that even with these new APIs client side validation does not remove the need for server side validation. Malicious users can easily workaround any client side constraints, and, HTTP requests don't have to originate from a browser.

-

Since you have to have server side validation anyways, if you simply have your server side code return reasonable error messages and display them to the end user you have a built in fallback for browsers that don't support any form of client side validation.
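Here is a minimal sketch of that fallback, assuming a hypothetical `validateSignup` handler and hypothetical field names. The server re-checks everything and never assumes client-side validation ran. It returns messages keyed by field, so the page can show each one next to its input. The email test is a deliberately loose placeholder, not a standards-complete check.

```javascript
// Server-side check returning field-keyed messages. It trusts nothing
// from the client, including whether client-side validation ran.
function validateSignup(body) {
  const errors = {};
  const email = typeof body.email === "string" ? body.email.trim() : "";
  const password = typeof body.password === "string" ? body.password : "";

  if (email === "") errors.email = "Email is required";
  else if (!/^[^\s@]+@[^\s@]+$/.test(email)) errors.email = "Email looks invalid";

  if (password.length < 8) errors.password = "Password must be at least 8 characters";

  return { ok: Object.keys(errors).length === 0, errors };
}

validateSignup({ email: "nope", password: "12345678" });
// { ok: false, errors: { email: "Email looks invalid" } }
```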

-

-

stackoverflow.com

-

github.com

-

Responders don't use valid? to check for errors in models to figure out whether the request was successful; they rely on your controllers to call save or create to trigger the validations.

-

-

psyarxiv.com

-

Speranza, T., Abrevaya, S., & Ramenzoni, V. C. (2021). Body Image During Quarantine; Generational Effects of Social Media Pressure on Body Appearance Perception. PsyArXiv. https://doi.org/10.31234/osf.io/y826u

-

-

twitter.com

-

ReconfigBehSci. (2021, February 26). RT @PsyArXivBot: Body Image During Quarantine; Generational Effects of Social Media Pressure on Body Appearance Perception https://t.co/Y6… [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1366708215900176385

-

- Feb 2021

-

github.com

-

It is based on the idea that each validation is encapsulated by a simple, stateless predicate that receives some input and returns either true or false.
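Here is a small sketch of that idea in JavaScript. Each rule is a pure function from input to `true`/`false`, and larger rules are built by composing smaller ones. The predicate and combinator names are hypothetical and not the library's API.

```javascript
// Each validation is a stateless predicate: input -> boolean.
const isString = (x) => typeof x === "string";
const isFilled = (x) => isString(x) && x.trim().length > 0;
const minLength = (n) => (x) => isString(x) && x.length >= n;

// Combinators build larger predicates from smaller ones.
const and = (...preds) => (x) => preds.every((p) => p(x));
const or = (...preds) => (x) => preds.some((p) => p(x));

const validUsername = and(isFilled, minLength(3));
const optionalUsername = or((x) => x === null, validUsername);

validUsername("ada"); // true
validUsername("  "); // false
```

Because no predicate keeps state, the same rule can be reused anywhere and tested in isolation.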

-

URI::MailTo::EMAIL_REGEXP

First time I've seen someone create a validator by simply matching against URI::MailTo::EMAIL_REGEXP from the std lib. More often you see people copying and pasting some really long regex that they don't understand and that is probably not loose enough. It's much better, though, to simply reuse a standard one from a library, by reference rather than by copying and pasting!

-

-

github.com

-

ActiveInteraction type checks your inputs. Often you'll want more than that. For instance, you may want an input to be a string with at least one non-whitespace character. Instead of writing your own validation for that, you can use validations from ActiveModel. These validations aren't provided by ActiveInteraction. They're from ActiveModel. You can also use any custom validations you wrote yourself in your interactions.

-

Note that it's perfectly fine to add errors during execution. Not all errors have to come from type checking or validation.

-

-

-

with ActiveForm-Rails, validations are the responsibility of the form and not of the models. There is no need to synchronize errors from the form to the models and vice versa.

But if you intend to save to a model after the form validates, then you can't escape the models' validations:

either you check that the models pass their own validations ahead of time (like I want to do, and I think @mattheworiordan was wanting to do), or you have to accept that one of the following outcomes is possible/inevitable if the models' own validations fail:

- if you use object.save, then it may silently fail to save

- if you use object.save!, then it will fail to save and raise an error

Is either of those outcomes acceptable to you? To me, they seem not to be. Hence we must also check for / handle the models' validations. Hence we need a way to aggregate errors from both the form object (context-specific validations) and from the models (unconditional/invariant validations that should always be checked by the model), and present them to the user.

What do you guys find to be the best way to accomplish that?

I am interested to know what best practices you use / still use today after all these years. I keep finding myself running into this same problem/need, which is how I ended up looking for what the current options are for form objects today...

-

I agree with your concern. I really prefer to do this:

form.assign_attributes(hash)
if form.valid?
  my_service.update(form)
  # render something
else
  # render something else
end

It looks more like a normal controller.

-

My only concern with this approach is that if someone calls #valid? on the form object afterwards, it would, under the hood, currently delete the existing errors on the form object and revalidate. This could have unexpected side effects where the errors added by the models passed in or the service called will be lost.

-

My concern with this approach is still that it's somewhat brittle with the current implementation of valid? because whilst valid? appears to be a predicate and should have no side effects, this is not the case and could remove the errors applied by one of the steps above.
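The hazard can be reproduced outside Rails. This JavaScript sketch uses a hypothetical `FormObject`, not ActiveModel. Its `valid()` clears and recomputes errors as described above, so an error added later by a model or service disappears on the next call. A side-effect-free `checkErrors()` leaves aggregated errors alone.

```javascript
// Hypothetical form object mimicking the behavior described above.
class FormObject {
  constructor(attrs) {
    this.attrs = attrs;
    this.errors = [];
  }

  // Pure: computes the form's own errors without touching this.errors.
  checkErrors() {
    return this.attrs.name ? [] : ["name can't be blank"];
  }

  // Looks like a predicate, but replaces this.errors as a side effect.
  valid() {
    this.errors = this.checkErrors();
    return this.errors.length === 0;
  }

  // Aggregate errors from another source (e.g. a model save) explicitly.
  addErrors(more) {
    this.errors.push(...more);
  }
}

const form = new FormObject({ name: "Ada" });
form.valid();
form.addErrors(["email has already been taken"]); // e.g. reported by a model
form.valid(); // returns true, and the model's error has been wiped

const safe = new FormObject({ name: "Ada" });
safe.valid();
safe.addErrors(["email has already been taken"]);
safe.checkErrors(); // [] for the form's own rules; safe.errors keeps the model error
```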

Tags

- rails: validation: valid? has side effects

- evolved into unfortunate state and too late to fix now

- I have a differing opinion

- feels natural

- missing the point

- surprising behavior

- nice API

- unfortunate

- whose responsibility is it?

- should have no side effects

- rails: validation

- overlooking/missing something


-

-

github.com

-

Some developers found workarounds by using virtual attributes to skip validators

-

-

-

Any attribute in the list will be allowed, and any defined as attr_{accessor,reader,writer} will not be populated when passed in as params. This means we no longer need to use strong_params in the controllers because the form has a clear definition of what it expects and protects us by design.

strong params not needed since form object handles that responsibility.

That's the same opinion Nick took in Reform...

-

-

github.com

-

Seems similar to Reform, but simpler, plays nicely with Rails

-

-

coderwall.com

-

If you include ActiveModel::Validations you can write the same validators as you would with ActiveRecord. However, in this case, our form is just a collection of Contact objects, which are ActiveRecord and have their own validations. When I save the ContactListForm, it attempts to save all the contacts. In doing so, each contact has its error_messages available.

-

- Jan 2021

-

-

Finally, through its reference to “the accumulated evidence,” the definition in the Standards emphasizes that obtaining validity evidence is a process rather than a single study from which a dichotomous “valid/not valid” decision is made

-

-

psyarxiv.com

-

Collins, F. E. (2020, July 6). The Contagion Fear and Threat Scale: Measuring COVID-19 Fear in Australian, Indian, and Nepali University Students. https://doi.org/10.31234/osf.io/4s65q

-

-

discourse.computational-humanities-research.org

-

some interesting readings mentioned here on the topic of statistical validation/evaluation in CompHum research.

-

- Oct 2020

-

stackoverflow.com

-

we update the validation schema on the fly (we had a similar case with a validation that needs to be included whenever some fetch operation was completed)

-

Final Form makes the assumption that your validation functions are "pure" or "idempotent", i.e. will always return the same result when given the same values. This is why it doesn't run the synchronous validation again (just to double check) before allowing the submission: because it's already stored the results of the last time it ran it.
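That assumption is what makes caching safe. Below is a sketch of the idea, using a hypothetical `memoizeLast` helper rather than Final Form's internals. It reuses the last result when called again with the same values object.

```javascript
// Cache the last result of a validator that is assumed to be pure.
// A repeat call with the same values object (by reference) is not re-run.
function memoizeLast(validateFn) {
  let lastValues;
  let lastResult;
  let hasResult = false;
  return (values) => {
    if (hasResult && values === lastValues) return lastResult;
    lastValues = values;
    lastResult = validateFn(values);
    hasResult = true;
    return lastResult;
  };
}

let calls = 0;
const validate = memoizeLast((values) => {
  calls += 1;
  return values.email ? {} : { email: "Required" };
});

const values = { email: "" };
validate(values);
validate(values); // served from the cache; `calls` is still 1
```

If the rules themselves change, as with the schema updated after a fetch above, a cache like this keeps returning the old result until the values change. That is exactly the case an impure validator breaks.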

-

-

codesandbox.io

-

export const validationSchema = { field: { account: [Validators.required.validator, iban.validator, ibanBlackList], name: [Validators.required.validator], integerAmount: [

Able to update this schema on the fly, with:

React.useEffect(() => {
  getDisabledCountryIBANCollection().then(countries => {
    const newValidationSchema = {
      ...validationSchema,
      field: {
        ...validationSchema.field,
        account: [
          ...validationSchema.field.account,
          {
            validator: countryBlackList,
            customArgs: {
              countries,
            },
          },
        ],
      },
    };
    formValidation.updateValidationSchema(newValidationSchema);
  });
}, []);

-

Meat:

validate={values => formValidation.validateForm(values)}

-

-

www.basefactor.com

-

Form Validation

-

return { type: "COUNTRY_BLACK_LIST", succeeded, message: succeeded ? "" : "This country is not available" }

-

Validation Schema: A Form Validation Schema allows you to synthesize all the form validations (a list of validators per form field) into a single object definition. Using this approach you can easily check which validations apply to a given form without having to dig into the UI code.
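Here is a sketch of the schema idea. It assumes validators return `{ type, succeeded, message }` objects like the `COUNTRY_BLACK_LIST` result above. `validateForm`, the field names, and the blocked code `"XX"` are hypothetical, and this is not Fonk's API. It only shows that all rules live in one object and that extending the schema means building a new object.

```javascript
// Each validator returns { type, succeeded, message }.
const required = (value) => {
  const succeeded = value !== undefined && value !== null && value !== "";
  return { type: "REQUIRED", succeeded, message: succeeded ? "" : "Required" };
};

// Hypothetical rule: reject values starting with a blocked country code.
const countryBlackList = (countries) => (value) => {
  const succeeded = !countries.includes(String(value).slice(0, 2));
  return {
    type: "COUNTRY_BLACK_LIST",
    succeeded,
    message: succeeded ? "" : "This country is not available",
  };
};

// All rules per field in one object, away from the UI code.
const validationSchema = {
  field: {
    account: [required],
    name: [required],
  },
};

// Run each field's validators in order; keep the first failure per field.
function validateForm(schema, values) {
  const errors = {};
  for (const [field, validators] of Object.entries(schema.field)) {
    for (const validator of validators) {
      const result = validator(values[field]);
      if (!result.succeeded) {
        errors[field] = result.message;
        break;
      }
    }
  }
  return errors;
}

// Extending the schema builds a new object; the original is untouched.
const extendedSchema = {
  ...validationSchema,
  field: {
    ...validationSchema.field,
    account: [...validationSchema.field.account, countryBlackList(["XX"])],
  },
};
```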

-

It is easily extensible (already implemented Final Form and Formik plugin extensions).

-

Form validation can get complex (synchronous validations, asynchronous validations, record validations, field validations, internationalization, schemas definitions...). To cope with these challenges we will leverage this into Fonk and Fonk Final Form adaptor for a React Final Form seamless integration.

-

Just let the user fill in some fields, submit it to the server and if there are any errors notify them and let the user start over again. Is that a good approach? The answer is no, you don't want users to get frustrated waiting for a server round trip to get some form validation result.

Tags

- integration

- form validation

- react-final-form

- fonk (form validation library)

- extensibility

- final-form

- Formik

- complexity

- form validation library

- user experience

- nice API

- validation schema

- interoperability

- too hard/difficult/much work to expect end-developers to write from scratch (need library to do it for them)

- bad user experience

- adapter


-

-

lemoncode.github.io

-

Fonk is framework extension, and can be easily plugged into many libraries / frameworks, in this documentation you will find integrations with:

-

-

-

In other words, I do not want an error to appear/disappear as a user types the text that swaps it from invalid to valid.

-

-

codesandbox.io

-

codesandbox.io

-

codesandbox.io

-

formvalidation.io

-

Add new plugin Recaptcha3Token that sends the reCaptcha v3 token to the back-end when the form is valid

-

All validators can be used independently. Inspired by the functional programming paradigm, all built-in validators are just functions.

I'm glad you can use it independently, like:

FormValidation.validators.creditCard().validate({

because sometimes you don't have a formElement available, like in their "main" (?) API examples:

FormValidation.formValidation(formElement

-

-

github.com

-

stackoverflow.com

-

that a better and cleaner approach would be to use computed properties and a validation library that is decoupled from the UI (like hapi/joi).

-

-

sveltesociety.dev

-

Doesn't handle:

- blur/touched

-

-

github.com

-

These all come from the HTML Standard.

-

-

www.bmj.com

-

Knight, S. R., Ho, A., Pius, R., Buchan, I., Carson, G., Drake, T. M., Dunning, J., Fairfield, C. J., Gamble, C., Green, C. A., Gupta, R., Halpin, S., Hardwick, H. E., Holden, K. A., Horby, P. W., Jackson, C., Mclean, K. A., Merson, L., Nguyen-Van-Tam, J. S., … Harrison, E. M. (2020). Risk stratification of patients admitted to hospital with covid-19 using the ISARIC WHO Clinical Characterisation Protocol: Development and validation of the 4C Mortality Score. BMJ, 370. https://doi.org/10.1136/bmj.m3339

-

- Sep 2020

-

www.nielsvandermolen.com

-

setCustomValidity

-

Note that we added HTML validation with specifying the types of the field (email and password). This allows the browser to validate the input.

-

-

github.com

-

codedaily.io

-

We must always return at least some validation rule. So first, if value !== undefined, we return our previous validation schema. If it is undefined, we use yup.mixed().notRequired(), which just informs yup that nothing is required at the optionalObject level.

optionalObject: yup.lazy(value => {
  if (value !== undefined) {
    return yup.object().shape({
      otherData: yup.string().required(),
    });
  }
  return yup.mixed().notRequired();
}),
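The trick in the yup snippet is that lazy() picks a schema per value at validation time. A minimal Python sketch of the same control flow, assuming None stands in for JavaScript's undefined and that a "schema" is just a function returning a list of error messages; required_string, object_shape, not_required and lazy are hypothetical helpers, not yup's API:

```python
from typing import Any, Callable, Dict, List

Schema = Callable[[Any], List[str]]  # a schema maps a value to a list of error messages


def required_string(path: str) -> Schema:
    def check(value: Any) -> List[str]:
        return [] if isinstance(value, str) and value != "" else [f"{path} is required"]
    return check


def object_shape(path: str, fields: Dict[str, Schema]) -> Schema:
    def check(value: Any) -> List[str]:
        if not isinstance(value, dict):
            return [f"{path} must be an object"]
        errors: List[str] = []
        for key, schema in fields.items():
            errors.extend(schema(value.get(key)))  # a missing key is validated as None
        return errors
    return check


def not_required() -> Schema:
    # Only chosen for None below, so it simply reports no errors
    return lambda value: []


def lazy(choose: Callable[[Any], Schema]) -> Schema:
    # Like yup.lazy: choose the schema from the value, then apply it
    return lambda value: choose(value)(value)


optional_object = lazy(
    lambda value: object_shape(
        "optionalObject", {"otherData": required_string("optionalObject.otherData")}
    )
    if value is not None  # None plays the role of undefined
    else not_required()
)
```

An absent optionalObject passes, while a present one must carry a non-empty otherData.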

-

-

github.com

-

Mark the schema as required. All field values apart from undefined and null meet this requirement.

-

The same as the mixed() schema required, except that empty strings are also considered 'missing' values.
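The two quoted rules differ only in how an empty string is treated. A small Python sketch of the semantics as the quoted docs describe them, assuming a sentinel object for JavaScript's undefined and None for null; mixed_required and string_required are hypothetical names:

```python
UNDEFINED = object()  # stands in for JavaScript's undefined; None stands in for null


def mixed_required(value) -> bool:
    # mixed().required(): every value apart from undefined and null passes
    return value is not UNDEFINED and value is not None


def string_required(value) -> bool:
    # string().required(): same, but an empty string also counts as missing
    return mixed_required(value) and value != ""
```

Note that falsy values such as 0 or "" still pass the mixed rule; only the string rule rejects "".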

-

-

jamanetwork.com

-

Kouzy, R., Jaoude, J. A., Garcia, C. J. G., Alam, M. B. E., Taniguchi, C. M., & Ludmir, E. B. (2020). Characteristics of the Multiplicity of Randomized Clinical Trials for Coronavirus Disease 2019 Launched During the Pandemic. JAMA Network Open, 3(7), e2015100–e2015100. https://doi.org/10.1001/jamanetworkopen.2020.15100

-

-

www.youtube.com

-

JNOLive | Multiplicity of Randomized Clinical Trials for Coronavirus Disease 2019. (2020, July 14). https://www.youtube.com/watch?v=H-MWPqgLUvA&feature=youtu.be

-

-

codechips.me

-

Form validation is hard. That's why there are so many different form handling libraries for the popular web frameworks. It's usually not something that is built in, because everyone has different needs and there is no one-size-fits-all solution.

-

- Aug 2020

-

github.com

-

Triggers error messages to render after a field is touched and blurred (focused out of). This is useful for text fields which might start out erroneous but end up valid (e.g. email or ZIP code). In these cases you don't want to rush to show the user a validation error message when they haven't had a chance to finish their entry.

-

Triggers error messages to show up as soon as the value of a field changes. Useful when the user needs instant feedback from the form validation (e.g. password creation rules, or non-text-based inputs like selects, switches, etc.).
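The two trigger modes differ only in when an existing error becomes visible; validation itself runs the same way. A minimal Python sketch, assuming a hypothetical Field class with "onBlur" and "onChange" mode names and a deliberately naive email rule (neither comes from the library quoted above):

```python
from dataclasses import dataclass
from typing import Callable, Optional

Validator = Callable[[str], Optional[str]]  # returns an error message or None


def email_error(value: str) -> Optional[str]:
    # Deliberately naive rule, for illustration only
    return None if "@" in value and "." in value.split("@")[-1] else "Enter a valid email"


@dataclass
class Field:
    validate: Validator
    mode: str = "onBlur"  # hypothetical names: "onBlur" or "onChange"
    value: str = ""
    touched: bool = False  # becomes True once the user has left the field

    def change(self, value: str) -> None:
        self.value = value

    def blur(self) -> None:
        self.touched = True

    def visible_error(self) -> Optional[str]:
        error = self.validate(self.value)
        if self.mode == "onChange":
            return error  # instant feedback, e.g. password rules or selects
        # onBlur: hold the message back until the field has been touched and blurred
        return error if self.touched else None


email = Field(email_error)
email.change("al")  # half-typed value: invalid, but no message shown yet
```

With mode="onChange" the same half-typed value would surface the error immediately, which is what the second mode is meant for.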

-

-

html.spec.whatwg.org

-

Constraints

-

-

github.com

-

The bindings are two-way because any HTML5 constraint validation errors will be added to the Final Form state, and any field-level validation errors from Final Form will be set into the HTML5 validity.customError state.

-

-

Local file

-

So when we ask users to answer questions that deal with the future, we have to keep in mind the context in which they’re answering. They can tell us about a feature they think will make their lives better, but user validation will always be necessary to make sure that the past user’s beliefs about the future user are accurate.

-

-

stackoverflow.com

-

It's worth pointing out that filenames can contain a newline character on many *nix systems. You're unlikely to ever run into this in the wild, but if you're running shell commands on untrusted input, this could be a concern.
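Splitting a file listing on newlines silently breaks such names in two, whereas NUL is the one byte a POSIX filename can never contain (the idea behind find -print0 and xargs -0). A minimal Python sketch; names_by_newline and names_by_nul are hypothetical helpers and the listings are built in memory rather than read from a real directory:

```python
from typing import List


def names_by_newline(listing: str) -> List[str]:
    # Fragile: a newline inside a filename splits it into two "files"
    return [line for line in listing.split("\n") if line]


def names_by_nul(listing: bytes) -> List[bytes]:
    # Robust: NUL cannot appear in a filename, so it is a safe separator
    return [name for name in listing.split(b"\0") if name]


tricky = ["report.txt", "evil\nname.txt"]
as_lines = "\n".join(tricky) + "\n"
as_nul = b"\0".join(name.encode() for name in tricky) + b"\0"
```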

-

- Jul 2020

-

amp.dev

-

To verify that your structured data is correct, many platforms provide validation tools. In this tutorial, we'll validate our structured data with the Google Structured Data Validation Tool.

-

-

github.com

-

Currently, if we have an invalid mouse association that was previously saved at some point in the past (eg. with mouse.save! validate: false)

-

-

-

Pulido, Edgar Guillermo. ‘Validation to Spanish Version of the COVID-19 Stress Scale’. Preprint. PsyArXiv, 17 July 2020. https://doi.org/10.31234/osf.io/rcqx3.

-

-

www.clinicalmicrobiologyandinfection.com

-

Meyer, B., Torriani, G., Yerly, S., Mazza, L., Calame, A., Arm-Vernez, I., Zimmer, G., Agoritsas, T., Stirnemann, J., Spechbach, H., Guessous, I., Stringhini, S., Pugin, J., Roux-Lombard, P., Fontao, L., Siegrist, C.-A., Eckerle, I., Vuilleumier, N., & Kaiser, L. (2020). Validation of a commercially available SARS-CoV-2 serological immunoassay. Clinical Microbiology and Infection, 0(0). https://doi.org/10.1016/j.cmi.2020.06.024

-

-

stackoverflow.com

-

There's not a way to do this. What you could do instead is use Cloud Functions HTTP triggers as an API for writing data. It could check the conditions you want, then return a response that indicates what's wrong with the data the client is trying to write. I understand this is far from ideal, but it might be the best option you have right now

it's definitely far from ideal :(

-

- May 2020

-

psyarxiv.com

-

Prem, R., Kubicek, B., Uhlig, L., Baumgartner, V. C., & Korunka, C. (2020). Development and Validation of a Scale to Measure Cognitive Demands of Flexible Work [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/mxh75

-

-

nvlpubs.nist.gov

-

(Thus, for these curves, the cofactor is always h = 1.)

This means there is no need to check if the point is in the correct subgroup.

-

-

psyarxiv.com

-

McElroy, E., Patalay, P., Moltrecht, B., Shevlin, M., Shum, A., Creswell, C., & Waite, P. (2020, May 8). Demographic and health factors associated with pandemic anxiety in the context of COVID-19. https://doi.org/10.31234/osf.io/2eksd

-

- Apr 2020

-

www.rubydoc.info

-

As mentioned in StateMachines::Machine#state, you can define behaviors, like validations, that only execute for certain states. One important caveat here is that, due to a constraint in ActiveRecord's validation framework, custom validators will not work as expected when defined to run in multiple states.

-

-

psyarxiv.com

-

Fucci, E., Baquedano, C., Abdoun, O., Deroche, J., & Lutz, A. (2020, April 21). Validation of a set of stimuli to investigate the effect of attributional processes on social motivation in within-subject experiments. https://doi.org/10.31234/osf.io/nbdj4

-

-

wpvip.com

-

1- Validation: you “validate”, i.e. deem valid or invalid, data at input time. For instance, if asked for a zipcode the user enters “zzz43”, that’s invalid. At this point, you can reject or… sanitize.
2- Sanitization: you make data “sane” before storing it. For instance, if you want a zipcode, you can remove any character that’s not [0-9].
3- Escaping: at output time, you ensure that printed data will never corrupt the display and/or be used in an evil way (escaping HTML etc…).
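The three steps map onto three separate functions applied at different times. A minimal Python sketch, assuming a five-digit US ZIP code as the input rule (our assumption) and using the standard library's html.escape for the output step; validate_zip, sanitize_zip and escape_for_html are hypothetical names:

```python
import html
import re

ZIP_RE = re.compile(r"[0-9]{5}")  # assumption: plain 5-digit US ZIP codes only


def validate_zip(raw: str) -> bool:
    # 1- Validation at input time: accept or reject, never modify
    return ZIP_RE.fullmatch(raw) is not None


def sanitize_zip(raw: str) -> str:
    # 2- Sanitization before storing: drop every character that is not 0-9
    return re.sub(r"[^0-9]", "", raw)


def escape_for_html(text: str) -> str:
    # 3- Escaping at output time: neutralise <, >, &, and quotes for HTML
    return html.escape(text)
```

Sanitizing "zzz43" yields "43", which still fails validation, so sanitizing complements rejecting input rather than replacing it.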

-

-

This style of validation most closely follows WordPress’ whitelist philosophy: only allow the user to input what you’re expecting.

-

-

download.oracle.com

-

What Is Input Validation and Sanitization? Validation checks if the input meets a set of criteria (such as a string contains no standalone single quotation marks). Sanitization modifies the input to ensure that it is valid (such as doubling single quotes).
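Using the quoted example, validation only answers yes or no, while sanitization rewrites the input so that it passes. A minimal Python sketch with hypothetical function names; in real SQL code, bind variables are generally the safer fix than quote doubling:

```python
def has_standalone_quote(s: str) -> bool:
    # Remove every doubled quote; any single quote left over was standalone
    return "'" in s.replace("''", "")


def validate_no_standalone_quotes(s: str) -> bool:
    # Validation: check the criterion, leave the input untouched
    return not has_standalone_quote(s)


def sanitize_double_quotes(s: str) -> str:
    # Sanitization: modify the input so that it meets the criterion
    return s.replace("'", "''")
```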

-

-

stackoverflow.com

-

stackoverflow.com

-

psyarxiv.com

-

Wolf, M. G. (2020, April 26). Survey Uses May Influence Survey Responses. https://doi.org/10.31234/osf.io/c4hd6

-

-

sciencebusiness.net

-

Imperial researchers develop lab-free COVID-19 test with results in less than an hour. (n.d.). Science|Business. Retrieved April 20, 2020, from https://sciencebusiness.net/network-updates/imperial-researchers-develop-lab-free-covid-19-test-results-less-hour

-

-

github.com

-

github.com

-

github.com

-

Prefer over this: https://github.com/michaelbanfield/devise-pwned_password

-

-

www.troyhunt.com

-

Having visibility to the prevalence means, for example, you might outright block every password that's appeared 100 times or more and force the user to choose another one (there are 1,858,690 of those in the data set), strongly recommend they choose a different password where it's appeared between 20 and 99 times (there's a further 9,985,150 of those), and merely flag the record if it's in the source data less than 20 times.
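The tiered policy above can be sketched together with the k-anonymity lookup that Pwned Passwords uses: only the first five hex characters of the password's SHA-1 hash go to the range endpoint, which answers with SUFFIX:COUNT lines. A minimal Python sketch that does not make the HTTP call; policy_for, range_query_parts, count_from_range_response and the policy labels are hypothetical names, and the thresholds are the ones quoted:

```python
import hashlib
from typing import Tuple


def range_query_parts(password: str) -> Tuple[str, str]:
    # Only the 5-character prefix would be sent; the suffix is matched locally
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:5], digest[5:]


def count_from_range_response(body: str, suffix: str) -> int:
    # Each response line looks like "SUFFIX:COUNT"
    for line in body.splitlines():
        candidate, _, count = line.partition(":")
        if candidate.strip().upper() == suffix:
            return int(count)
    return 0


def policy_for(count: int) -> str:
    if count >= 100:
        return "block"  # force the user to choose another password
    if count >= 20:
        return "strongly_recommend_change"  # seen 20 to 99 times
    if count >= 1:
        return "flag"  # seen fewer than 20 times: just flag the record
    return "allow"


prefix, suffix = range_query_parts("password")
```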

-

-

www.troyhunt.com

-

docs.sqlalchemy.org

-

Validators, like all attribute extensions, are only called by normal userland code; they are not issued when the ORM is populating the object.

-

-

stackoverflow.com

-

validates works only during an append operation, not during assignment.

-

- Mar 2020

-

tools.ietf.org

-

Designers using these curves should be aware that for each public key, there are several publicly computable public keys that are equivalent to it, i.e., they produce the same shared secrets. Thus using a public key as an identifier and knowledge of a shared secret as proof of ownership (without including the public keys in the key derivation) might lead to subtle vulnerabilities.

-

Protocol designers using Diffie-Hellman over the curves defined in this document must not assume "contributory behaviour". Specifically, contributory behaviour means that both parties' private keys contribute to the resulting shared key. Since curve25519 and curve448 have cofactors of 8 and 4 (respectively), an input point of small order will eliminate any contribution from the other party's private key. This situation can be detected by checking for the all-zero output, which implementations MAY do, as specified in Section 6. However, a large number of existing implementations do not do this.

-

The check for the all-zero value results from the fact that the X25519 function produces that value if it operates on an input corresponding to a point with small order, where the order divides the cofactor of the curve (see Section 7).

-

Both MAY check, without leaking extra information about the value of K, whether K is the all-zero value and abort if so (see below).
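The all-zero check can be sketched on top of the X25519 Montgomery ladder from RFC 7748. This Python version is a teaching sketch only: Python big-integer arithmetic and the branching swap are not constant time, so it would leak timing in production. shared_secret_or_abort is a hypothetical wrapper name; hmac.compare_digest is used for the zero comparison in the spirit of "without leaking extra information about the value of K":

```python
import hmac
import secrets

P = 2**255 - 19
A24 = 121665  # (486662 - 2) / 4
BASE = (9).to_bytes(32, "little")  # u-coordinate of the Curve25519 base point


def _decode_scalar(k: bytes) -> int:
    b = bytearray(k)
    b[0] &= 248  # clamp: multiple of the cofactor 8
    b[31] &= 127
    b[31] |= 64  # fixed top bit
    return int.from_bytes(b, "little")


def _decode_u(u: bytes) -> int:
    b = bytearray(u)
    b[31] &= 127  # the high bit is ignored
    return int.from_bytes(b, "little") % P


def x25519(k: bytes, u: bytes) -> bytes:
    scalar, x1 = _decode_scalar(k), _decode_u(u)
    x2, z2, x3, z3, swap = 1, 0, x1, 1, 0
    for t in reversed(range(255)):  # Montgomery ladder, bit 254 down to 0
        k_t = (scalar >> t) & 1
        swap ^= k_t
        if swap:  # NOT constant time; real code uses a masked cswap
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = k_t
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def shared_secret_or_abort(private_key: bytes, peer_public: bytes) -> bytes:
    k = x25519(private_key, peer_public)
    # Optional check: an all-zero K means the peer sent a small-order point
    if hmac.compare_digest(k, bytes(32)):
        raise ValueError("all-zero shared secret: small-order public key")
    return k


alice_priv, bob_priv = secrets.token_bytes(32), secrets.token_bytes(32)
alice_pub, bob_pub = x25519(alice_priv, BASE), x25519(bob_priv, BASE)
```

Feeding the all-zero public key (u = 0, a point of order 2) makes the output zero whatever the private key is, which is exactly the loss of contributory behaviour described above.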


-

-

nvlpubs.nist.gov

-

n

n is the order of the subgroup and n is prime

-

an ECC key-establishment scheme requires the use of public keys that are affine elliptic-curve points chosen from a specific cyclic subgroup with prime order n

n is the order of the subgroup and n is prime

-

5.6.2.3.3 ECC Full Public-Key Validation Routine

-

The recipient performs a successful full public-key validation of the received public key (see Section 5.6.2.3.1 for FFC domain parameters and Section 5.6.2.3.3 for ECC domain parameters).

-

Assurance of public-key validity – assurance that the public key of the other party (i.e., the claimed owner of the public key) has the (unique) correct representation for a non-identity element of the correct cryptographic subgroup, as determined by the

-

-

prosecco.gforge.inria.fr

-

outside the subgroup

-

-

nvlpubs.nist.gov

-

5.6.2.3.2 ECC Full Public-Key Validation Routine

-

The recipient performs a successful full public-key validation of the received public key (see Sections 5.6.2.3.1 and 5.6.2.3.2).

-

Assurance of public-key validity – assurance that the public key of the other party (i.e., the claimed owner of the public key) has the (unique) correct representation for a non-identity element of the correct cryptographic subgroup, as determined by the domain parameters (see Sections 5.6.2.2.1 and 5.6.2.2.2). This assurance is required for both static and ephemeral public keys.
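The four checks of ECC full public-key validation (Q is not the identity, its coordinates lie in [0, p-1], Q is on the curve, and n·Q is the identity) can be sketched in Python on the standard P-256 parameters. This is a non-constant-time teaching sketch (Python 3.8+ for pow(x, -1, p)), not a vetted implementation; the check_order flag is our own addition, showing that the last step is redundant when the cofactor is h = 1, as the NIST annotation on these curves notes:

```python
from typing import Optional, Tuple

Point = Optional[Tuple[int, int]]  # None represents the point at infinity (identity)

# NIST P-256: y^2 = x^3 - 3x + b over GF(p), prime subgroup order n, cofactor 1
P = 2**256 - 2**224 + 2**192 + 2**96 - 1
A = P - 3
B = 0x5AC635D8AA3A93E7B3EBBD55769886BC651D06B0CC53B0F63BCE3C3E27D2604B
N = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551
G = (0x6B17D1F2E12C4247F8BCE6E563A440F277037D812DEB33A0F4A13945D898C296,
     0x4FE342E2FE1A7F9B8EE7EB4A7C0F9E162BCE33576B315ECECBB6406837BF51F5)


def add(p1: Point, p2: Point) -> Point:
    """Affine point addition; handles the identity and doubling."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # Q + (-Q) = identity
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1 % P, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)


def mul(k: int, pt: Point) -> Point:
    """Double-and-add scalar multiplication (not constant time)."""
    result: Point = None
    while k:
        if k & 1:
            result = add(result, pt)
        pt = add(pt, pt)
        k >>= 1
    return result


def full_public_key_validation(q: Point, check_order: bool = True) -> bool:
    if q is None:  # 1. not the identity element
        return False
    x, y = q
    if not (0 <= x < P and 0 <= y < P):  # 2. coordinates properly reduced
        return False
    if (y * y - (x * x * x + A * x + B)) % P != 0:  # 3. point is on the curve
        return False
    # 4. n*Q is the identity; redundant for cofactor-1 curves such as P-256
    return not check_order or mul(N, q) is None
```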

-

-

noiseprotocol.org

-

Misusing public keys as secrets: It might be tempting to use a pattern with a pre-message public key and assume that a successful handshake implies the other party's knowledge of the public key. Unfortunately, this is not the case, since setting public keys to invalid values might cause predictable DH output. For example, a Noise_NK_25519 initiator might send an invalid ephemeral public key to cause a known DH output of all zeros, despite not knowing the responder's static public key. If the parties want to authenticate with a shared secret, it should be used as a PSK.

-

Channel binding: Depending on the DH functions, it might be possible for a malicious party to engage in multiple sessions that derive the same shared secret key by setting public keys to invalid values that cause predictable DH output (as in the previous bullet). It might also be possible to set public keys to equivalent values that cause the same DH output for different inputs. This is why a higher-level protocol should use the handshake hash (h) for a unique channel binding, instead of ck, as explained in Section 11.2.

-

The public_key either encodes some value which is a generator in a large prime-order group (which value may have multiple equivalent encodings), or is an invalid value. Implementations must handle invalid public keys either by returning some output which is purely a function of the public key and does not depend on the private key, or by signaling an error to the caller. The DH function may define more specific rules for handling invalid values.


-

-

hal.inria.fr

-

WireGuard excludes zero Diffie-Hellman shared secrets to avoid points of small order, while Noise recommends not to perform this check.


-

-

moderncrypto.org

-

This check strikes a delicate balance: It checks Y sufficiently to prevent forgery of a (Y, Y^x) pair without knowledge of X, but the rejected values for X are unlikely to be hit by an attacker flipping ciphertext bits in the least-significant portion of X. Stricter checking could easily *WEAKEN* security, e.g. the NIST-mandated subgroup check would provide an oracle on whether a tampered X was square or nonsquare.

-

X25519 is very close to this ideal, with the exception that public keys have easily-computed equivalent values. (Preventing equivalent values would require a different and more costly check. Instead, protocols should "bind" the exact public keys by MAC'ing them or hashing them into the session key.)

-

Curve25519 key generation uses scalar multiplication with a private key "clamped" so that it will always produce a valid public key, regardless of RNG behavior.
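Clamping is what makes key generation robust to RNG output: whatever 32 bytes come in, the scalar ends up as a multiple of the cofactor 8 with bit 254 set and bit 255 clear. A minimal Python sketch of the clamping rule from RFC 7748 (decodeScalar25519); the function name clamp is ours:

```python
import secrets


def clamp(k: bytes) -> int:
    b = bytearray(k)
    b[0] &= 248   # clear the low 3 bits: the scalar becomes a multiple of 8
    b[31] &= 127  # clear bit 255
    b[31] |= 64   # set bit 254, giving a fixed-length ladder
    return int.from_bytes(b, "little")


example = clamp(secrets.token_bytes(32))
```

Even a broken RNG that returns all zeros yields the usable scalar 2**254 rather than 0.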

-

* Valid points have equivalent "invalid" representations, due to the cofactor, masking of the high bit, and (in a few cases) unreduced coordinates.
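Two of the listed causes, masking of the high bit and unreduced coordinates, can be shown directly: different 32-byte strings decode to the same u-coordinate, so they give the same DH output. Hashing the exact public-key bytes into the session key, as the essay recommends, separates them again. A minimal Python sketch; session_key is a hypothetical KDF, not Noise's or any real protocol's:

```python
import hashlib

P = 2**255 - 19


def decode_u(u: bytes) -> int:
    b = bytearray(u)
    b[31] &= 127  # X25519 ignores the high bit...
    return int.from_bytes(b, "little") % P  # ...and reduces unreduced values mod p


def session_key(dh_output: bytes, pk_initiator: bytes, pk_responder: bytes) -> bytes:
    # Hypothetical KDF: bind the exact public-key encodings, not just the DH output
    return hashlib.sha256(dh_output + pk_initiator + pk_responder).digest()


pk = (9).to_bytes(32, "little")
pk_high_bit = pk[:31] + bytes([pk[31] | 0x80])  # same u, high bit set
pk_unreduced = (9 + P).to_bytes(32, "little")  # same u, not reduced mod p
```

All three encodings decode to u = 9, yet each yields a distinct session key once the raw bytes are hashed in.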

-

With all the talk of "validation", the reader of JP's essay is likely to think this check is equivalent to "full validation" (e.g. [SP80056A]), where only valid public keys are accepted (i.e. public keys which uniquely encode a generator of the correct subgroup).

-

(1) The proposed check has the goal of blacklisting a few input values. It's nowhere near full validation, does not match existing standards for ECDH input validation, and is not even applied to the input.

-

-

research.kudelskisecurity.com

-

If Alice generates all-zero prekeys and identity key, and pushes them to the Signal’s servers, then all the peers who initiate a new session with Alice will encrypt their first message with the same key, derived from all-zero shared secrets—essentially, the first message will be in the clear for an eavesdropper.

-

arguing that a zero check “adds complexity (const-time code, error-handling, and implementation variance), and is not needed in good protocols.”

-

- Dec 2019

-

github.com

-

Responders don't use valid? to check for errors in models to figure out whether the request was successful; instead they rely on your controllers to call save or create to trigger the validations.


-

-

stackoverflow.com

-

Arguably, the Rails team's choice of raising ArgumentError instead of a validation error is correct in the sense that we have full control over which options a user can select from a radio button group or a select field. If a programmer adds a new radio button with a typo in its value, it is good to raise an error, because that is an application error and not a user error. However, this will not work for APIs, because we no longer have any control over what values get sent to the server.
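The distinction can be sketched outside Rails: keep the strict, raising conversion for values the application controls, and turn untrusted API input into a validation error instead. A minimal Python sketch where Python's Enum plays the role of the Rails enum (Status("bad") raises ValueError, much as the quoted ArgumentError); Record and assign_status are hypothetical, not Rails APIs:

```python
from enum import Enum
from typing import Dict, List


class Status(Enum):
    ACTIVE = "active"
    ARCHIVED = "archived"


class Record:
    """Hypothetical model that collects errors instead of raising on API input."""

    def __init__(self) -> None:
        self.status = Status.ACTIVE
        self.errors: Dict[str, List[str]] = {}

    def assign_status(self, raw: str) -> None:
        try:
            self.status = Status(raw)  # strict conversion raises ValueError
        except ValueError:
            # Untrusted input: report it as a user-facing validation error
            self.errors.setdefault("status", []).append(f"{raw!r} is not a valid status")

    def valid(self) -> bool:
        return not self.errors
```

Calling Status(raw) directly stays appropriate for values that only come from the application's own forms, where a typo really is a programmer error.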

-

-

stackoverflow.com

-

When the controller creates the user, instead of adding a validation error to the record, it raises an exception. How to avoid this?

-

In case anyone wants a hack, here is what I came up with.

-

- Sep 2019

-

github.com

-

codesandbox.io

- Aug 2019

-

codesandbox.io

-

codesandbox.io

-

codesandbox.io

-

www.smashingmagazine.com

-

Validate Forms

-

-

www.smashingmagazine.com

-

This rule has a few exceptions: It’s helpful to validate inline as the user is typing when creating a password (to check whether the password meets complexity requirements), when creating a user name (to check whether a name is available) and when typing a message with a character limit.

-