Acton's $850M moral stand and the $122M fine for deliberately lying to the EU Competition Commission

Under pressure from Mark Zuckerberg and Sheryl Sandberg to monetize WhatsApp, Acton pushed back as Facebook questioned the encryption he'd helped build and laid the groundwork to show targeted ads and facilitate commercial messaging. He also walked away from Facebook a year before his final tranche of stock grants vested. “It was like, okay, well, you want to do these things I don’t want to do,” Acton says. “It’s better if I get out of your way. And I did.” It was perhaps the most expensive moral stand in history. Acton took a screenshot of the stock price on his way out the door: the decision cost him $850 million.

Despite a transfer of several billion dollars, Acton says he never developed a rapport with Zuckerberg. “I couldn’t tell you much about the guy,” he says. In one of their dozen or so meetings, Zuck told Acton unromantically that WhatsApp, which had a stipulated degree of autonomy within the Facebook universe and continued to operate for a while out of its original offices, was “a product group to him, like Instagram.”

For his part, Acton had proposed monetizing WhatsApp through a metered-user model, charging, say, a tenth of a penny after a certain large number of free messages were used up. “You build it once, it runs everywhere in every country,” Acton says. “You don’t need a sophisticated sales force. It’s a very simple business.”

Acton’s plan was shot down by Sandberg. “Her words were ‘It won’t scale.’ ”

“I called her out one time,” says Acton, who sensed there might be greed at play. “I was like, ‘No, you don’t mean that it won’t scale. You mean it won’t make as much money as . . . ,’ and she kind of hemmed and hawed a little. And we moved on. I think I made my point. . . . They are businesspeople, they are good businesspeople. They just represent a set of business practices, principles and ethics, and policies that I don’t necessarily agree with.”

Questioning Zuckerberg’s true intentions wasn’t easy when he was offering what became $22 billion. “He came with a large sum of money and made us an offer we couldn’t refuse,” Acton says. The Facebook founder also promised Koum a board seat, showered the founders with admiration and, according to a source who took part in discussions, told them that they would have “zero pressure” on monetization for the next five years... Internally, Facebook had targeted a $10 billion revenue run rate within five years of monetization, but such numbers sounded too high to Acton—and reliant on advertising.

The warning signs emerged before the deal even closed that November. The deal needed to get past Europe’s famously strict antitrust officials, and Facebook prepared Acton to meet with around a dozen representatives of the European Competition Commission in a teleconference. “I was coached to explain that it would be really difficult to merge or blend data between the two systems,” Acton says. He told the regulators as much, adding that he and Koum had no desire to do so.

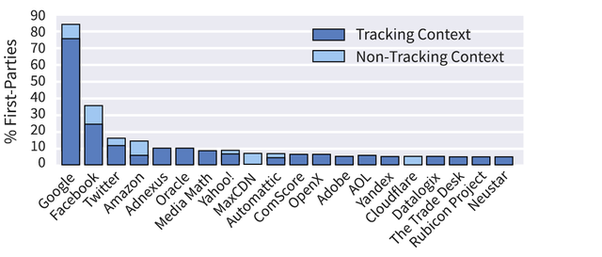

Later he learned that elsewhere in Facebook, there were “plans and technologies to blend data.” Specifically, Facebook could use the 128-bit string of numbers assigned to each phone as a kind of bridge between accounts. The other method was phone-number matching, or pinpointing Facebook accounts with phone numbers and matching them to WhatsApp accounts with the same phone number.

Within 18 months, a new WhatsApp terms of service linked the accounts and made Acton look like a liar. “I think everyone was gambling because they thought that the EU might have forgotten because enough time had passed.” No such luck: Facebook wound up paying a $122 million fine for giving “incorrect or misleading information” to the EU. It was a cost of doing business, as the deal got done and such linking continues today (though not yet in Europe). “The errors we made in our 2014 filings were not intentional,” says a Facebook spokesman.

Acton had left a management position in Yahoo’s ad division over a decade earlier, frustrated by the Web portal’s so-called “Nascar approach” of putting ad banners all over a Web page. The drive for revenue at the expense of a good product experience “gave me a bad taste in my mouth,” Acton remembers. He was now seeing history repeat. “This is what I hated about Facebook and what I also hated about Yahoo,” Acton says. “If it made us a buck, we’d do it.” In other words, it was time to go.