Horn, S. R., Weston, S. J., & Fisher, P. (2020). Identifying causal role of COVID-19 in immunopsychiatry models [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/w4d5u

- Jun 2020

psyarxiv.com

Del Giudice, M. (2020). All About AIC [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/7hmgz

arxiv.org

de Arruda, G. F., Méndez-Bermúdez, J. A., Rodrigues, F. A., & Moreno, Y. (2020). Universality of eigenvector delocalization and the nature of the SIS phase transition in multiplex networks. ArXiv:2005.08074 [Cond-Mat, Physics:Physics]. http://arxiv.org/abs/2005.08074

www.researchgate.net

McLachlan, S., Lucas, P., Dube, K., Hitman, G. A., Osman, M., Kyrimi, E., Neil, M., & Fenton, N. E. (2020). The fundamental limitations of COVID-19 contact tracing methods and how to resolve them with a Bayesian network approach. https://doi.org/10.13140/RG.2.2.27042.66243

Tags

- network model

- prediction

- contact tracing

- likelihood

- digital solution

- Bayesian

- lang:en

- is:preprint

- app

- COVID-19

- containment

- limitation

arxiv.org

Cai, L., Chen, Z., Luo, C., Gui, J., Ni, J., Li, D., & Chen, H. (2020). Structural Temporal Graph Neural Networks for Anomaly Detection in Dynamic Graphs. ArXiv:2005.07427 [Cs, Stat]. http://arxiv.org/abs/2005.07427

lifehacker.com

Facebook already harvests some data from WhatsApp. Without Koum at the helm, it’s possible that could increase—a move that wouldn’t be out of character for the social network, considering that the company’s entire business model hinges on targeted advertising around personal data.

www.metascience2019.org

Yang Yang: The Replicability of Scientific Findings Using Human and Machine Intelligence (Video). Metascience 2019 Symposium. https://www.metascience2019.org/presentations/yang-yang/

www.pnas.org

Yang, Y., Youyou, W., & Uzzi, B. (2020). Estimating the deep replicability of scientific findings using human and artificial intelligence. Proceedings of the National Academy of Sciences, 117(20), 10762–10768. https://doi.org/10.1073/pnas.1909046117

-

Frederick, J. K., Raabe, G. R., Rogers, V., & Pizzica, J. (2020, May 30). A Model of Distance Special Education Support Services Amidst COVID-19. Retrieved from psyarxiv.com/q362v

www.machinelearningmastery.com

epsilon: a very small number that prevents any division by zero in the implementation (e.g., 1e-8). Learning rate decay can also be used with Adam; the paper uses the decay rate alpha = alpha/sqrt(t), updated each epoch t, for the logistic regression demonstration. The Adam paper suggests: "Good default settings for the tested machine learning problems are alpha=0.001, beta1=0.9, beta2=0.999 and epsilon=10^-8." The TensorFlow documentation suggests some tuning of epsilon: "The default value of 1e-8 for epsilon might not be a good default in general. For example, when training an Inception network on ImageNet a current good choice is 1.0 or 0.1." The popular deep learning libraries generally use the default parameters recommended by the paper:

- TensorFlow: learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08

- Keras: lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0

- Blocks: learning_rate=0.002, beta1=0.9, beta2=0.999, epsilon=1e-08, decay_factor=1

- Lasagne: learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08

- Caffe: learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08

- MxNet: learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-8

- Torch: learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-8

Should we expose EPS as one of the experiment parameters? I think that we shouldn't since it is a rather technical parameter.
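The following is a minimal sketch of one Adam update in plain Python. It shows where epsilon enters, using the update rule and default hyperparameters quoted above from the Adam paper. The function name `adam_step` and the list-of-floats representation are illustrative assumptions, not any library's API. Epsilon appears only in the denominator, as a guard against dividing by a near-zero second-moment estimate. That fits with treating it as a technical rather than an experimental parameter.

```python
import math


def adam_step(theta, grad, m, v, t, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of parameters.

    theta, grad, m, v are equal-length lists of floats; t is the 1-based step count.
    Returns the updated (theta, m, v).
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g       # biased first-moment (mean) estimate
        v_i = beta2 * v_i + (1 - beta2) * g * g   # biased second-moment estimate
        m_hat = m_i / (1 - beta1 ** t)            # bias-corrected first moment
        v_hat = v_i / (1 - beta2 ** t)            # bias-corrected second moment
        # eps keeps the denominator away from zero when v_hat is (close to) 0.
        new_theta.append(p - alpha * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


if __name__ == "__main__":
    # Minimize f(x) = x^2 (gradient 2x) starting from x = 1.0.
    theta, m, v = [1.0], [0.0], [0.0]
    for t in range(1, 2001):
        grad = [2 * x for x in theta]
        theta, m, v = adam_step(theta, grad, m, v, t, alpha=0.01)
    print(theta[0])
```

While the gradient keeps a consistent sign, m_hat / sqrt(v_hat) stays close to 1 in magnitude. Each early step therefore moves a parameter by roughly alpha, largely independent of the gradient's scale.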

- May 2020

psyarxiv.com

Freeston, M. H., Tiplady, A., Mawn, L., Bottesi, G., & Thwaites, S. (2020, April 14). Towards a model of uncertainty distress in the context of Coronavirus (Covid-19). https://doi.org/10.31234/osf.io/v8q6m

www.nber.org

Eichenbaum, M., Rebelo, S., & Trabandt, M. (2020). The Macroeconomics of Epidemics (Working Paper No. w26882). National Bureau of Economic Research. https://doi.org/10.3386/w26882

psyarxiv.com

Golino, H., Christensen, A. P., Moulder, R. G., Kim, S., & Boker, S. M. (2020, April 14). Modeling latent topics in social media using Dynamic Exploratory Graph Analysis: The case of the right-wing and left-wing trolls in the 2016 US elections. https://doi.org/10.31234/osf.io/tfs7c

github.com

Deepset-ai/haystack. (2020). [Python]. deepset. https://github.com/deepset-ai/haystack (Original work published 2019)

www.repository.cam.ac.uk

Toxvaerd, F. M. O. (2020). Equilibrium Social Distancing [Working Paper]. Faculty of Economics, University of Cambridge. https://doi.org/10.17863/CAM.52489

wellcomeopenresearch.org

Endo, A., Centre for the Mathematical Modelling of Infectious Diseases COVID-19 Working Group, Abbott, S., Kucharski, A. J., & Funk, S. (2020). Estimating the overdispersion in COVID-19 transmission using outbreak sizes outside China. Wellcome Open Research, 5, 67. https://doi.org/10.12688/wellcomeopenres.15842.1

github.com

The diagram was generated with rails-erd

github.com

Domain Model

www.nyteknik.se

Forskare: ”Se upp med komplexa coronamodeller – de kan överträffa verkligheten” [Researchers: "Beware of complex coronavirus models – they can surpass reality"]. (2020, April 24). Ny Teknik. https://www.nyteknik.se/opinion/forskare-se-upp-med-komplexa-coronamodeller-de-kan-overtraffa-verkligheten-6994339

arxiv.org

Given the disjoint vocabularies (Section 2) and the magnitude of improvement over BERT-Base (Section 4), we suspect that while an in-domain vocabulary is helpful, SCIBERT benefits most from the scientific corpus pretraining.

The domain-specific vocabulary only slightly increases model accuracy; most of the benefit comes from pre-training on a domain-specific corpus.

-

We construct SCIVOCAB, a new WordPiece vocabulary on our scientific corpus using the SentencePiece library. We produce both cased and uncased vocabularies and set the vocabulary size to 30K to match the size of BASEVOCAB. The resulting token overlap between BASEVOCAB and SCIVOCAB is 42%, illustrating a substantial difference in frequently used words between scientific and general domain texts.

For SciBERT, they created a new vocabulary of the same size as BERT's. The overlap was 42%. We could check what the overlap is in our case.

academic.oup.com

Although we could have constructed new WordPiece vocabulary based on biomedical corpora, we used the original vocabulary of BERTBASE for the following reasons: (i) compatibility of BioBERT with BERT, which allows BERT pre-trained on general domain corpora to be re-used, and makes it easier to interchangeably use existing models based on BERT and BioBERT and (ii) any new words may still be represented and fine-tuned for the biomedical domain using the original WordPiece vocabulary of BERT.

BioBERT does not change the BERT vocabulary.

-

    def _tokenize(self, text):
        split_tokens = []
        if self.do_basic_tokenize:
            # First split into basic tokens, never splitting special tokens such as [CLS]...
            for token in self.basic_tokenizer.tokenize(text, never_split=self.all_special_tokens):
                # ...then break each basic token into WordPiece sub-tokens.
                for sub_token in self.wordpiece_tokenizer.tokenize(token):
                    split_tokens.append(sub_token)
        else:
            split_tokens = self.wordpiece_tokenizer.tokenize(text)
        return split_tokens

How BERT tokenization works
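The `wordpiece_tokenizer` step in the code above splits each word by greedy longest-match-first lookup against the vocabulary. The following is a simplified sketch of that idea, not the library code. The toy vocabulary is made up. The `tokenize` helper only lowercases and splits on whitespace, whereas BERT's BasicTokenizer also splits punctuation and normalizes accents.

```python
VOCAB = {"un", "##aff", "##able", "aff", "able"}  # toy vocabulary for illustration


def wordpiece(word, vocab, unk="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece split of a single word."""
    if len(word) > max_chars:
        return [unk]
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is found in the vocabulary.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # continuation pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matched: the whole word becomes [UNK]
        pieces.append(match)
        start = end
    return pieces


def tokenize(text, vocab):
    # Simplified stand-in for the basic tokenizer: lowercase + whitespace split only.
    return [p for word in text.lower().split() for p in wordpiece(word, vocab)]


if __name__ == "__main__":
    print(tokenize("Unaffable xyz", VOCAB))  # ['un', '##aff', '##able', '[UNK]']
```

Because matching is greedy from the left, an unknown domain word is usually still covered by several shorter pieces rather than becoming `[UNK]`.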

github.com

My initial experiments indicated that adding custom words to the vocab-file had some effects. However, at least on my corpus that can be described as "medical tweets", this effect just disappears after running the domain specific pretraining for a while. After spending quite some time on this, I have ended up dropping the custom vocab-files totally. Bert seems to be able to learn these specialised words by tokenizing them.

sbs experience from extending the vocabulary for medical data

-

Since BERT does an excellent job of tokenizing and learning these combinations, do not expect dramatic improvements from adding words to the vocab. In my experience, adding very specific terms, like common long medical Latin words, has some effect. Adding words like "footballs" will likely just have negative effects, since the current vector is already pretty good.

Expected improvement of extending the BERT vocabulary

jalammar.github.io

As is the case in NLP applications in general, we begin by turning each input word into a vector using an embedding algorithm.

What is the embedding algorithm for BERT?

blog.usejournal.com

It is fairly expensive (four days on 4 to 16 Cloud TPUs), but is a one-time procedure for each language.

Estimated cost of pre-training the model from scratch

en.wikipedia.org

www.nature.com

West, R., Michie, S., Rubin, G. J., & Amlôt, R. (2020). Applying principles of behaviour change to reduce SARS-CoV-2 transmission. Nature Human Behaviour, 1–9. https://doi.org/10.1038/s41562-020-0887-9

www.bmj.com

Response to “Modelling the pandemic”: Reconsidering the quality of evidence from epidemiological models. (2020). https://www.bmj.com/content/369/bmj.m1567/rr-0

-

Fan, R., Xu, K., & Zhao, J. (2020). Weak ties strengthen anger contagion in social media. ArXiv:2005.01924 [Cs]. http://arxiv.org/abs/2005.01924

-

Pham, T. M., Kondor, I., Hanel, R., & Thurner, S. (2020). The effect of social balance on social fragmentation. ArXiv:2005.01815 [Nlin, Physics:Physics]. http://arxiv.org/abs/2005.01815

psyarxiv.com

Britwum, K., Catrone, R., Smith, G. D., & Koch, D. S. (2020, May 5). A University Based Social Services Parent Training Model: A Telehealth Adaptation During the COVID-19 Pandemic. https://doi.org/10.31234/osf.io/gw3cd

-

I do not understand the threat model behind not allowing the root user to configure Firefox, since malware could simply replace the entire Firefox binary.

psyarxiv.com

Rotella, A. M., & Mishra, S. (2020, April 24). Personal relative deprivation negatively predicts engagement in group decision-making. https://doi.org/10.31234/osf.io/6d35w

link.aps.org

Krönke, J., Wunderling, N., Winkelmann, R., Staal, A., Stumpf, B., Tuinenburg, O. A., & Donges, J. F. (2020). Dynamics of tipping cascades on complex networks. Physical Review E, 101(4), 042311. https://doi.org/10.1103/PhysRevE.101.042311

- Apr 2020

psyarxiv.com

Edelsbrunner, P. A., & Thurn, C. (2020, April 22). Improving the Utility of Non-Significant Results for Educational Research. https://doi.org/10.31234/osf.io/j93a2

-

Moyers, S. A., & Hagger, M. S. (2020, April 20). Physical activity and sense of coherence: A meta-analysis. https://doi.org/10.31234/osf.io/d9e3k

psyarxiv.com

Dai, B., Fu, D., Meng, G., Qi, L., & Liu, X. (2020, April 25). The effects of governmental and individual predictors on COVID-19 protective behaviors in China: a path analysis model. https://doi.org/10.31234/osf.io/hgzj9

-

Etilé, F., Johnston, D., Frijters, P., & Shields, M. (2020, April 16). Psychological Resilience to Major Socioeconomic Life Events. https://doi.org/10.31234/osf.io/vp48c

psyarxiv.com

Moya, M., Willis, G. B., Paez, D., Pérez, J. A., Gómez, Á., Sabucedo, J. M., … Salanova, M. (2020, April 23). La Psicología Social ante el COVID19: Monográfico del International Journal of Social Psychology (Revista de Psicología Social) [Social psychology in the face of COVID-19: Special issue of the International Journal of Social Psychology]. https://doi.org/10.31234/osf.io/fdn32

-

Lades, L., Laffan, K., Daly, M., & Delaney, L. (2020, April 22). Daily emotional well-being during the COVID-19 pandemic. https://doi.org/10.31234/osf.io/pg6bw

stackoverflow.com

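    from django.db import models

    class MyReadOnlyModel(models.Model):
        class Meta:
            managed = False  # migrations never create, alter, or drop this table

        # No-op overrides: instance-level save() and delete() are silently ignored
        # (bulk QuerySet.update()/delete() bypass these methods).
        def save(self, *args, **kwargs):
            return

        def delete(self, *args, **kwargs):
            return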

psyarxiv.com

Colombo, R., Wallace, M., & Taylor, R. S. (2020, April 11). An Essential Service Decision Model for Applied Behavior Analytic Providers During Crisis. https://doi.org/10.31234/osf.io/te8ha

psyarxiv.com

Rafiei, F., & Rahnev, D. (2020, April 9). Does the diffusion model account for the effects of speed-accuracy tradeoff on response times?. https://doi.org/10.31234/osf.io/bhj85

journal.sjdm.org

Dhami, M., & Olsson, H. (2008). Evolution of the interpersonal conflict paradigm. Judgment and Decision Making, 3, 547–569. http://journal.sjdm.org/8510/jdm8510.pdf

www.w3.org

- Mar 2020

matomo.org

Did you know accurate data reporting is often capped? That is, once your website traffic reaches a certain limit, the data becomes a guess rather than a fact. This is where tools like Google Analytics become extremely limited and cash in with their GA360 Premium suite. At Matomo, we believe all data should be reported 100% accurately, or else what’s the point?

www.kqed.org

But she's also addicted to her phone."

What we sow is what we reap!

techcrunch.com

So it’s not surprising that Facebook is so coy about explaining why a certain user on its platform is seeing a specific advert. Because if the huge surveillance operation underpinning the algorithmic decision to serve a particular ad was made clear, the person seeing it might feel manipulated. And then they would probably be less inclined to look favorably upon the brand they were being urged to buy. Or the political opinion they were being pushed to form. And Facebook’s ad tech business stands to suffer.

news.humanpresence.io

Rojas-Lozano claimed that the second part of Google’s two-part CAPTCHA feature, which requires users to transcribe and type into a box a distorted image of words, letters or numbers before entering its site, is also used to transcribe words that a computer cannot read to assist with Google’s book digitization service. By not disclosing that, she argued, Google was getting free labor from its users.

www.digital-democracy.org

threat model

- Feb 2020

github.com

www.umich.edu

* Information-Processing Analysis: about the mental operations used by a person who has learned a complex skill

This sounds a lot more involved unless you are working off a basic set of assumptions about mental operations and complex skills. Further understanding of psychological research and learning theories would be needed.

-

Dick and Carey Model

what the heck is this website, lmao.

etec.ctlt.ubc.ca

The Kemp Model of Instructional Design

- Jan 2020

www.w3.org

The Web Annotation Data Model specification describes a structured model and format to enable annotations to be shared and reused across different hardware and software platforms.

The publication of this web standard changed everything. I look forward to true testing of interoperable open annotation. The publication of the standard nearly three years ago was a game changer, but the game is still in progress. The future potential is unlimited!
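The sketch below builds a minimal annotation as a Python dict serialized to JSON-LD, to show what the Web Annotation Data Model looks like in practice. It records a highlighted quote with a note. The property names (`@context`, `type`, `body`, `target`, `TextualBody`, `TextQuoteSelector`, `exact`, `prefix`, `suffix`) come from the W3C model. The function name and example URL are hypothetical. The model expects a published annotation to carry an `id`. Here it is optional, on the assumption that a server assigns it when the annotation is created.

```python
import json


def make_annotation(note, source_url, exact, prefix="", suffix="", annotation_id=None):
    """Build a minimal W3C Web Annotation: a plain-text note on a quoted passage."""
    selector = {"type": "TextQuoteSelector", "exact": exact}
    if prefix:
        selector["prefix"] = prefix  # text just before the quote, for disambiguation
    if suffix:
        selector["suffix"] = suffix  # text just after the quote
    annotation = {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "type": "Annotation",
        "body": {"type": "TextualBody", "value": note, "format": "text/plain"},
        # source + selector: the specific part of the document being annotated
        "target": {"source": source_url, "selector": selector},
    }
    if annotation_id is not None:
        annotation["id"] = annotation_id
    return annotation


if __name__ == "__main__":
    anno = make_annotation(
        note="The publication of this web standard changed everything.",
        source_url="https://example.org/article",  # hypothetical page
        exact="Web Annotation Data Model",
        prefix="The ",
    )
    print(json.dumps(anno, indent=2))
```

Because the target is described by a quote plus surrounding context rather than by platform-specific offsets, any client that implements the model can re-anchor it. That is the kind of interoperability the note above looks forward to.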

www.forbes.com

Gujarat often produced growth faster than the national average, fewer regulations, better infrastructure and less corruption

- Dec 2019

-

Choose Your Plan

en.wikipedia.org

- Nov 2019

ddi-cds.org

Click to view the NSAID value sets

Drugs involved

-

Click to view the warfarin value sets

Drugs involved

-

Concurrent use of both medications puts patients at a significant risk of bleeding that warrants appropriate management strategies

Clinical consequences and seriousness

-

Algorithm

Patient Context and Operational classification

-

Warfarin is a vitamin K antagonist, which competitively inhibits a series of coagulation factors, as well as proteins C and S. These factors are biologically activated by the addition of carboxyl groups depending on vitamin K. Warfarin competitively inhibits this chemical reaction, thus depleting functional vitamin K reserves and hence reducing the synthesis of active coagulation factors.

Mechanism

-

Non-selective NSAIDs inhibit the cyclooxygenase (COX) enzymes COX-1 and COX-2 to different extents, leading to varying effects on bleeding.(11, 12) COX-1 catalyzes the initial step in the formation of thromboxane (TxA2) and prostaglandins.(11, 12) TxA2 stimulates platelet aggregation.(13) Prostaglandins protect the gastrointestinal tract by increasing mucosal blood flow and the thickness of the mucus layer, stimulating bicarbonate secretion, and reducing gastric acid secretion.

Mechanism

-

Misoprostol also has been demonstrated to help prevent gastric ulcer in those who receive NSAIDs.

Recommended action

-

Therefore, alternate management strategies such as utilizing proton pump inhibitors or misoprostol may help reduce bleeding events.

Recommended action

-

Thus, avoiding and/or limiting the use of NSAIDs is an ideal strategy to prevent serious complications from these medications.

Recommended action

-

Both corticosteroids and aldosterone antagonists have been shown to substantially increase the risk of upper gastrointestinal bleeding in patients on NSAIDs, with relative risks of 12.8 and 11 respectively compared to a risk of 4.3 with NSAIDs alone

Risk modifying factor

-

Several risk factors for NSAID-related gastroduodenal bleeding are old age, a history of peptic ulcer disease, high dosages of NSAIDs, concomitant use of different NSAIDs.

Risk modifying factor

-

The VKORC1 and CYP2C9 genotypes are the most important known genetic determinants of warfarin dosing. Warfarin targets VKORC1, an enzyme involved in vitamin K recycling. Carriers of the CYP2C9*2 and *3 variants require a lower dose of warfarin. The FDA-approved drug label for warfarin states that CYP2C9 and VKORC1 genotype information, when available, can assist in the selection of the initial dose of warfarin.

Risk modifying factor

-

Concomitant NSAID use occurs in 24.3% of warfarin courses of therapy

Frequency of exposure

www.srdc.org

This article is a great example of a research model in measuring outcomes of adult learning.

watermark.silverchair.com

In this article, Davis uses his Technology Acceptance Model (TAM) to determine how and why online learning is accepted in higher education. TAM indicates that, in the survey conducted, "ease of use" equates to usefulness. TAM also explains that there is a correlation between perceived usefulness, evaluations of functions, current system use, and behavioral intention to use, all of which are positive factors in maintaining continued system use. Ultimately, just like in life, where there is gratification there is TAM. 10/10

www.engadget.com

In order for Google to be Google, it has to do evil. This is true for every major technology company. Apple, Facebook, Amazon, Tesla, Microsoft, Sony, Twitter, Samsung, Nintendo, Dell, HP, Toshiba -- every one of these organizations can't compete in the market without engaging in unethical, inhumane and invasive practices. It's a sliding scale: The larger the company, the more integrated it is in our everyday lives, the more evil it can be.

-

Take Facebook, for example. CEO Mark Zuckerberg will stand onstage at F8 and wax poetic about the beauty of connecting billions of people across the globe, while at the same time patenting technologies to determine users' social classes and enable discrimination in the lending process, and allowing housing advertisers to exclude racial and ethnic groups or families with women and children from their listings.

adnauseam.io

We can certainly understand why Google would prefer users not to install AdNauseam, as it directly opposes their core business model, but the Web Store’s Terms of Service do not (at least thus far) require extensions to endorse Google’s business model. Moreover, this is not the justification cited for the software’s removal.

www.bleepingcomputer.com

We can certainly understand why Google would prefer users not to install AdNauseam, as it directly opposes their core business model, but the Web Store’s Terms of Service do not (at least thus far) require extensions to endorse Google’s business model.

journals.uair.arizona.edu

Educators are feeling more and more pressure to provide educational content and teaching methods that keep pace with ongoing scientific and technical progress.

The topic of this article is technology and adult students. The author writes about the challenges adult learners face with technology, what adult learners have in common, andragogy and its challenges, technology in the classroom and its challenging integration issues, and how technology and andragogy are used. The article is a great way to see how adult students use technology, whether positively or negatively. Rating: 4/5

- Oct 2019

numinous.productions

But in the notebook format it's much easier for the reader to experiment. Their exploration is scaffolded, they can make small modifications and see the results, even the answers to questions Norvig did not anticipate

github.com

- Sep 2019

www.halle36.org

www.w3.org

On the other hand, a resource may be generic in that as a concept it is well specified but not so specifically specified that it can only be represented by a single bit stream. In this case, other URIs may exist which identify a resource more specifically. These other URIs identify resources too, and there is a relationship of genericity between the generic and the relatively specific resource.

I was not aware of this page when the Web Annotation WG was working through its specifications. The term "Specific Resource" used in the Web Annotation Data Model specification always seemed adequate, but now I see that it was actually quite a good fit.

- Jul 2019

archimede.mat.ulaval.ca

The effect of the covariates on survival is to act multiplicatively on some unknown baseline hazard rate, which makes it difficult to model covariate effects that change over time. Secondly, if covariates are deleted from a model or measured with a different level of precision, the proportional hazards assumption is no longer valid. These weaknesses in the Cox model have generated interest in alternative models. One such alternative model is Aalen’s (1989) additive model. This model assumes that covariates act in an additive manner on an unknown baseline hazard rate. The unknown risk coefficients are allowed to be functions of time, so that the effect of a covariate may vary over time.
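The two models can be contrasted in standard notation. The symbols below are ours, not the quoted paper's. In the Cox model, covariates x multiply a baseline hazard h_0(t) through fixed coefficients β. In Aalen's additive model, they add to a baseline β_0(t) through coefficients β_j(t) that may change with time. Comparing two individuals shows why only the second accommodates time-varying effects directly. The Cox hazard ratio does not depend on t, while the Aalen hazard difference can.

```latex
\text{Cox:}\quad h(t \mid x) = h_0(t)\,\exp\!\big(\beta^\top x\big),
\qquad
\frac{h(t \mid x_1)}{h(t \mid x_2)} = \exp\!\big(\beta^\top (x_1 - x_2)\big)

\text{Aalen:}\quad h(t \mid x) = \beta_0(t) + \sum_{j=1}^{p} \beta_j(t)\, x_j,
\qquad
h(t \mid x_1) - h(t \mid x_2) = \sum_{j=1}^{p} \beta_j(t)\,\big(x_{1j} - x_{2j}\big)
```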

lifelines.readthedocs.io

Non-proportional hazards is a case of model misspecification.

-

The idea behind the model is that the log-hazard of an individual is a linear function of their static covariates and a population-level baseline hazard that changes over time.
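The quoted description corresponds to log h(t | x) = log h0(t) + beta · x. The following minimal pure-Python sketch shows a consequence of that form: the hazard ratio between two individuals is the same at every time point. That is the proportional hazards assumption whose violation the documentation calls misspecification. The sketch assumes a made-up Weibull-shaped baseline hazard and made-up coefficients. It is not the lifelines API.

```python
import math


def weibull_baseline(t, shape=1.5, scale=10.0):
    """Hypothetical baseline hazard h0(t); any positive function of time would do."""
    return (shape / scale) * (t / scale) ** (shape - 1)


def cox_hazard(t, x, beta, baseline=weibull_baseline):
    """Cox hazard: h(t | x) = h0(t) * exp(beta . x), with static covariates x."""
    linear_predictor = sum(b * x_i for b, x_i in zip(beta, x))
    return baseline(t) * math.exp(linear_predictor)


if __name__ == "__main__":
    beta = [0.5, -0.2]  # made-up coefficients
    treated, control = [1.0, 0.0], [0.0, 0.0]
    for t in (1.0, 5.0, 20.0):
        ratio = cox_hazard(t, treated, beta) / cox_hazard(t, control, beta)
        # The baseline cancels, so the ratio equals exp(0.5) at every t.
        print(f"t={t:>4}: hazard ratio = {ratio:.4f}")
```

If the true ratio drifted over time, no single beta could reproduce it, which is why non-proportional hazards show up as a misspecified Cox model.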

- Apr 2019

-

In this article, Bradley Kuhn of SFC tries to establish what should best be understood by "sustainable" in the recent discussions about FLOSS sustainability.

The felt need to ensure that FLOSS projects have the resources to progress, paying those who work on them, is correct. However, attaching to this intention the rapid-growth model typical of venture capital fits poorly with a concept of sustainability that could apply across the whole free software world.

He therefore essentially proposes a focus on pay levels that give project members an adequate standard of living, and spreading the awareness that pursuing excessive profit margins, whether for individuals or for the entity that runs the project, conflicts with the sustainability of the project itself.

smethur.st

modelling UK parliament

- Mar 2019

www.w3.org

separately. 1.1 Aims of the Model The

some text

debwagner.info

Behavior Engineering Model: This page has a design that is not especially attractive or user-friendly, but it does provide an overview of Gilbert's Behavior Engineering Model, which can be used to analyze the issues that underlie performance. A six-cell model is presented. Rating 5/5

deepblue.lib.umich.edu

Human Performance Technology Model: This page is an eight-page PDF that gives an overview of the human performance technology model. It is a black-and-white PDF that is simply written and accessible to the layperson. The authors are prominent writers in the field of performance technology. Rating 5/5

- Feb 2019

gitee.com

There are 22 response models in the system, including retrieval-based neural networks, generation-based neural networks, knowledge base question answering systems and template-based systems. Examples of candidate model responses are shown in Tabl

A hybrid response model combining retrieval-based, generation-based, knowledge-base question answering, and template-based approaches

static1.squarespace.com

Without this, men fill one another's heads with noise and sounds;

Alluding to the Transactional Model of Communication, noise can be external (e.g., words, sounds) or internal (e.g., anxiety, distraction). Noise is a barrier to clear communication, which, in this sense, inhibits the progression of knowledge.

educationaltechnologyjournal.springeropen.com

So much of faculty development is one-size-fits-all and arranged according to preset schedules and locations - and by doing so, will consistently fail to meet the needs of those whose interests are marginal or different from the majority. Moreover, the understanding of “network” in the institutional sense fails to account for the individual level of the Personal Learning Network (PLN) where educators can build connections and relationships that advance their ongoing learning outside of institutional structures and boundaries

One-size-fits-all is the perennial challenge of PD (professional development, faculty development)—the demand that faculty as learners must conform to the instruction, rather than bringing their full selves. There have been days, weeks, and even semesters when I felt marginalized (even as campus entities insisted that I wasn’t). The only way through this was the PLN (“my” PLN) that welcomed my whole self into another type of PD.

- Jan 2019

static1.squarespace.com

n sender/receiver, channel, code, messag

Berlo's SMCR Model of communication

hal.inria.fr

Definition of the evolution model by the SPL maintainer.

dev01.inside-out-project.com

An HTML element is an individual component of an HTML document or web page, once this has been parsed into the Document Object Model.

Know the Document Object Model.
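Here is a toy tree builder that illustrates how HTML elements become nodes in a tree once a document is parsed. It sits on top of Python's event-based `html.parser.HTMLParser`. It is not a real DOM. Browsers follow the HTML standard's tree-construction algorithm, with error recovery and implied tags. This sketch instead assumes well-formed input and handles only a small, hand-picked set of void elements. The `Element`, `TreeBuilder`, and `tags` names are hypothetical.

```python
from html.parser import HTMLParser

VOID_ELEMENTS = {"br", "hr", "img", "input", "link", "meta"}  # partial list, for illustration


class Element:
    """A bare-bones element node: tag name, attributes, parent, and children."""

    def __init__(self, tag, attrs=(), parent=None):
        self.tag = tag
        self.attrs = dict(attrs)
        self.parent = parent
        self.children = []  # Element nodes or text strings


class TreeBuilder(HTMLParser):
    """Builds an Element tree from the parser's start-tag, end-tag, and text events."""

    def __init__(self):
        super().__init__()
        self.root = Element("#document")
        self.current = self.root

    def handle_starttag(self, tag, attrs):
        node = Element(tag, attrs, self.current)
        self.current.children.append(node)
        if tag not in VOID_ELEMENTS:  # void elements never have children
            self.current = node

    def handle_endtag(self, tag):
        # Walk up to the nearest open element with this tag; ignore stray end tags.
        node = self.current
        while node is not self.root and node.tag != tag:
            node = node.parent
        if node is not self.root:
            self.current = node.parent

    def handle_data(self, data):
        if data.strip():
            self.current.children.append(data)


def tags(node):
    """Element tag names in document (pre-order) order."""
    names = []
    for child in node.children:
        if isinstance(child, Element):
            names.append(child.tag)
            names.extend(tags(child))
    return names


if __name__ == "__main__":
    builder = TreeBuilder()
    builder.feed("<html><body><p class='intro'>Hi</p><br><p>There</p></body></html>")
    builder.close()
    print(tags(builder.root))  # ['html', 'body', 'p', 'br', 'p']
```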

- Nov 2018

www.ncbi.nlm.nih.gov

Canada is the first case of the expansion of hospital medicine beyond the United States, and as of 2008, Canada had more than 100 hospital medicine programs.7 Currently, the estimated number of hospitalists in Canada has increased to ~3,000 (Colleen Savage, Administrator, Canadian SHM, personal communication, December 6, 2017). Yousef and Maslowski describe several drivers for the development of the hospitalist model, which are related to physicians, patients, and systems. Work–life balance and the desire for non-hospital work among primary care providers (PCPs) were leading physician factors. Two major patient-related factors were the increasing age and complexity of patients and the increasing number of “unattached” patients. Unattached patients are those who either do not have a PCP or their PCP does not have admitting hospital privileges. System drivers included PCP shortages, reduction in resident duty hours, higher need for health system efficiency, and cost reduction. Further, increasing health system complexity led to PCPs withdrawing from hospital care.7

-

-

www.the-hospitalist.org www.the-hospitalist.org

-

“It doesn’t just help make hospitalists work better. It makes nursing better. It makes surgeons better. It makes pharmacy better.”

-

In the last 20 years, HM and technology have drastically changed the hospital landscape. But was HM pushed along by generational advances in computing power, smart devices in the shape of phones and tablets, and the software that powered those machines? Or was technology spurred on by having people it could serve directly in the hospital, as opposed to the traditionally fragmented system that preceded HM? “Bob [Wachter] and others used to joke that the only people that actually understand the computer system are the hospitalists,” Dr. Goldman notes. “Chicken or the egg, right?” adds Dr. Merlino of Press Ganey. “Technology is an enabler that helps providers deliver better care. I think healthcare quality in general has been helped by both.”

Chicken or the egg? Technological advances were tailored to specific needs as the hospitalist model grew.

-

“This has all been an economic move,” she says. “People sort of forget that, I think. It was discovered by some of the HMOs on the West Coast, and it was really not the HMOs, it was the medical groups that were taking risks—economic risks for their group of patients—that figured out if they sent … primary-care people to the hospital and they assigned them on a rotation of a week at a time, that they can bring down the LOS in the hospital. “That meant more money in their own pockets because the medical group was taking the risk.” Once hospitalists set up practice in a hospital, C-suite administrators quickly saw them gaining patient share and began realizing that they could be partners. “They woke up one day, and just like that, they pay attention to how many cases the orthopedist does,” she says. “[They said], ‘Oh, Dr. Smith did 10 cases last week, he did 10 cases this week, then he did no cases or he did two cases. … They started to come to the hospitalists and say, ‘Look, you’re controlling X% of my patients a day. We’re having a length of stay problem; we’re having an early-discharge problem.’ Whatever it was, they were looking for partners to try to solve these issues.” And when hospitalists grew in number again as the model continued to take hold and blossom as an effective care-delivery method, hospitalists again were turned to as partners. “Once you get to that point, that you’re seeing enough patients and you’re enough of a movement,” Dr. Gorman says, “you get asked to be on the pharmacy committee and this committee, and chairman of the medical staff, and all those sort of things, and those evolve over time.”

-

Hospitalists were seen as people to lead the charge for safety because they were already taking care of patients, already focused on reducing LOS and improving care delivery—and never to be underestimated, they were omnipresent, Dr. Gandhi says of her experience with hospitalists around 2000 at Brigham and Women’s Hospital in Boston. “At least where I was, hospitalists truly were leaders in the quality and safety space, and it was just a really good fit for the kind of mindset and personality of a hospitalist because they’re very much … integrators of care across hospitals,” she says. “They interface with so many different areas of the hospital and then try to make all of that work better.”

role of hospitalists in safety and quality

-

“When the IOM report came out, it gave us a focus and a language that we didn’t have before,” says Dr. Wachter, who served as president of SHM’s Board of Directors and to this day lectures at SHM annual meetings. “But I think the general sensibility that hospitalists are about improving quality and safety and patients’ experience and efficiency—I think that was baked in from the start.”

-

“My feeling at the time was this was a good idea,” Dr. Wachter says. “The trend toward our system being pushed to deliver better, more efficient care was going to be enduring, and the old model of the primary-care doc being your hospital doc … couldn’t possibly achieve the goal of producing the highest value.”

How can care be made more efficient? E.g., integration, cost-sharing, payment-sharing, parent partners, nurse partners.

-

That type of optimism permeated nascent hospitalist groups. But it was time to start proving the anecdotal stories. Nearly two years to the day after the Wachter/Goldman paper published, a team led by Herbert Diamond, MD, published “The Effect of Full-Time Faculty Hospitalists on the Efficiency of Care at a Community Teaching Hospital” in the Annals of Internal Medicine.1 It was among the first reports to show evidence that hospitalists improved care.

-

“The role of the hospitalist often is to take recommendations from a lot of different specialties and come up with the best plan for the patient,” says Tejal Gandhi, MD, MPH, CPPS, president and CEO of the National Patient Safety Foundation. “They’re the true patient advocate who is getting the cardiologist’s opinion, the rheumatologist’s opinion, and the surgeon’s opinion, and they come up with the best plan for the patient.”

-

Dr. Merlino says he’s proud of the specialists who rotated through the hospital rooms of AIDS patients. But so many disparate doctors with no “quarterback” to manage the process holistically meant consistency in treatment was generally lacking.

Tags

- perfect timing

- value

- p1

- To Err is Human

- background

- benefits of hospital medicine model

- benefits

- tech effect

- coordination of care

- consistency in treatment

- history

- quality

- efficiency

- integration

- hospitalist model

- IOM

- growth spurt

- interprofessional teamwork

- interprofessional

- background economic benefits hospitalist model

- revenue rules the day

- hospital medicine

- evidence

- safety

Annotators

URL

-

-

iphysresearch.github.io iphysresearch.github.io

-

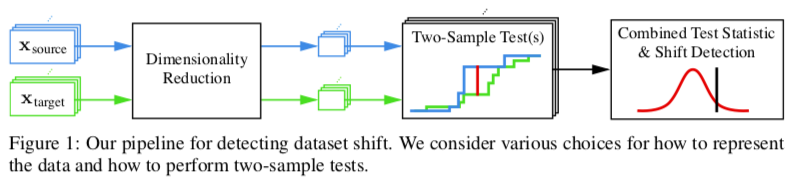

Failing Loudly: An Empirical Study of Methods for Detecting Dataset Shift

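The paper's experiments explore model performance after applying shifts (controlled perturbations of some kind) to a dataset, and it proposes a classifier-based method/pipeline to observe and evaluate them.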

For my gravitational-wave data research, I can borrow its data-shift methods and its evaluation mechanism (two-sample tests).
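One common choice of two-sample test for detecting a shift is a per-feature Kolmogorov-Smirnov (KS) test, with the per-feature results combined by a Bonferroni correction. The sketch below is a minimal standard-library version, assuming tabular data given as lists of equal-length feature vectors; it is not the paper's code, it uses the asymptotic KS p-value (with the small-sample correction popularized by Numerical Recipes), and the classifier-based pipeline mentioned in the note is not reproduced. The data are synthetic and hypothetical.

```python
import math
import random


def ks_statistic(a, b):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance both samples past every value <= x, so ties are handled together.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_pvalue(d, n, m):
    """Asymptotic p-value from the Kolmogorov distribution."""
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    if lam < 0.3:  # the series converges slowly here, and the true p-value is essentially 1
        return 1.0
    p = 2 * sum((-1) ** (k - 1) * math.exp(-2 * (k * lam) ** 2) for k in range(1, 101))
    return min(1.0, max(0.0, p))


def detect_shift(source, target, alpha=0.05):
    """One KS test per feature, aggregated with a Bonferroni correction (alpha / n_features)."""
    n_features = len(source[0])
    pvalues = []
    for f in range(n_features):
        a = [row[f] for row in source]
        b = [row[f] for row in target]
        pvalues.append(ks_pvalue(ks_statistic(a, b), len(a), len(b)))
    return min(pvalues) < alpha / n_features, pvalues


rng = random.Random(0)
source = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(500)]
# Hypothetical shifted target: feature 1 has its mean moved by one standard deviation.
shifted = [[rng.gauss(0, 1), rng.gauss(1, 1), rng.gauss(0, 1)] for _ in range(500)]

print(detect_shift(source, source)[0])   # False: identical samples
print(detect_shift(source, shifted)[0])  # True: the mean shift in feature 1 is detected
```

The Bonferroni step is conservative, since it treats the features as if every test were independent; it is chosen here only because it is the simplest way to turn several per-feature p-values into one decision.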

-

-

educationaltechnology.net educationaltechnology.net

-

“The ADDIE model consists of five steps: analysis, design, development, implementation, and evaluation. It is a strategic plan for course design and may serve as a blueprint to design IL assignments and various other instructional activities.”

This article provides a well-diagrammed, complete explanation of the ADDIE model and its application to technology.

Also included on the site is a link to an online course delivered via diversityedu.com

RATING: 4/5 (on a scale of 1 to 5, where 1 = lowest and 5 = highest, judged on content, veracity, ease of use, etc.)

-

- Oct 2018

-

en.wikipedia.org en.wikipedia.org

-

It has been demonstrated that this formulation is almost equivalent to a SLIM model,[9] which is an item-item model based recommender

So a pre-trained item model can be used to make such recommendations.
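A minimal sketch of what "using a pre-trained item model" means: once item-to-item weights have been computed, recommending for any user is a weighted sum over the items that user has rated. As an assumption, the weights here are plain cosine similarities between item rating vectors, which gives a classic item-based neighborhood recommender. SLIM itself instead learns a sparse item-item weight matrix by regularized regression, which this sketch does not do. The ratings and function names are hypothetical.

```python
import math


def item_similarities(ratings):
    """ratings: {user: {item: rating}} -> {item: {other_item: cosine similarity}}."""
    # Re-index the ratings by item: {item: {user: rating}}.
    by_item = {}
    for user, items in ratings.items():
        for item, r in items.items():
            by_item.setdefault(item, {})[user] = r
    norms = {i: math.sqrt(sum(r * r for r in v.values())) for i, v in by_item.items()}
    sims = {i: {} for i in by_item}
    for i, vi in by_item.items():
        for j, vj in by_item.items():
            if i == j:
                continue
            dot = sum(vi[u] * vj[u] for u in vi.keys() & vj.keys())
            if dot:  # keep only item pairs that share at least one rater
                sims[i][j] = dot / (norms[i] * norms[j])
    return sims


def recommend(user_ratings, sims, k=2):
    """Score each unseen item as sum(rating * similarity) over the user's rated items."""
    scores = {}
    for i, r in user_ratings.items():
        for j, s in sims.get(i, {}).items():
            if j not in user_ratings:
                scores[j] = scores.get(j, 0.0) + r * s
    return sorted(scores, key=lambda j: (-scores[j], j))[:k]


ratings = {
    "ann": {"a": 5, "b": 4},
    "bob": {"a": 4, "b": 5, "c": 1},
    "cat": {"b": 2, "c": 5, "d": 4},
    "dan": {"c": 4, "d": 5},
}
sims = item_similarities(ratings)          # the "pre-trained" item model
print(recommend({"a": 5}, sims, k=3))      # ['b', 'c']
```

The expensive step (`item_similarities`) runs once; a brand-new user who has rated a single item gets recommendations from a lookup, without retraining.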

-

-

iphysresearch.github.io iphysresearch.github.io

-

Approximate Fisher Information Matrix to Characterise the Training of Deep Neural Networks

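Characterizing deep neural network training (convergence/generalization performance) with an approximate Fisher information matrix, which can be used to optimize the mini-batch size/learning rate automatically.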

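A pretty interesting paper: it derives new quantities from the Fisher matrix to measure model behavior during training, and uses them to optimize mini-batch sizes and learning rates | Also, the figures in the paper are very nicely drawn | The authors argue that the conventional understanding of gradually increasing batch sizes is only partially true, and that gradually decreasing the batch size may improve model convergence and generalization.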
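For readers new to the term, the sketch below computes an empirical Fisher information matrix, F = (1/N) sum_n g_n g_n^T, where g_n is the per-example gradient of the log-likelihood, for a tiny logistic-regression model, plus its trace as one scalar summary. This illustrates the general object only; it is not the specific approximation or the quantities proposed in the paper. The model, data and function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def per_example_grads(w, X, y):
    """Gradient of log p(y_n | x_n, w) for logistic regression: (y_n - p_n) * x_n."""
    grads = []
    for x, t in zip(X, y):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        grads.append([(t - p) * xi for xi in x])
    return grads


def empirical_fisher(w, X, y):
    """F = (1/N) * sum_n g_n g_n^T, returned as a d x d nested list."""
    g = per_example_grads(w, X, y)
    n, d = len(g), len(w)
    return [[sum(gn[a] * gn[b] for gn in g) / n for b in range(d)] for a in range(d)]


def trace(matrix):
    """Sum of the diagonal: here, the mean squared norm of the per-example gradients."""
    return sum(matrix[i][i] for i in range(len(matrix)))


X = [[1.0, 0.0], [0.0, 1.0]]
y = [1, 0]
F = empirical_fisher([0.0, 0.0], X, y)
print(F, trace(F))  # [[0.125, 0.0], [0.0, 0.125]] 0.25
```

In a training loop one could log such a summary every epoch to watch how the gradient statistics evolve; how the paper turns its Fisher-based quantities into batch-size and learning-rate schedules is not reproduced here.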

-

-

www.poetryfoundation.org www.poetryfoundation.org

-

But we by a love so much refined,

-

sublunary

Poets often put their own spin on the Ptolemaic model of the universe (the geocentric model), and Donne is referencing it here. In his version of the model, the Earth sits in the innermost concentric circle, then the moon, and then the heavenly bodies. By contrasting "sublunary lovers" (constantly changing lovers) with himself and his lover, Donne is saying that his love with More resides in the outermost, perfect, and unchanging circle.

-

- Mar 2018

-

inside.tru.ca inside.tru.ca

-

Apps increase access to justice

-

- Jan 2018

-

www.ncbi.nlm.nih.gov www.ncbi.nlm.nih.gov

- Nov 2017

-

nvie.com nvie.com

-

exists as long as the feature is in development

When the development of a feature takes a long time, it may be useful to continuously merge from develop into the feature branch. This has the following advantages:

- We can use the new features introduced in develop in the feature branch.

- We simplify the integration merge of the feature branch into develop that will happen at a later point.

-

- Sep 2017

-

www.salon.com www.salon.com

-

Here’s a thought exercise. Barack Obama never once considered repealing George W. Bush’s Medicare Part D law

Nice opening.

-

-

watermark.silverchair.com watermark.silverchair.com

untitled1

- Jul 2017

-

www.gettingsmart.com www.gettingsmart.com

-

The SAMR model is a useful tool for helping teachers think about their own tech use as they begin to make small shifts in the design and implementation of technology driven learning experiences to achieve the next level.

-

- May 2017

-

nfnh2017.scholar.bucknell.edu nfnh2017.scholar.bucknell.edu

-

ditching machine

A ditching machine is used for digging ditches or trenches of a specified depth and width. These ditches are often used for irrigation, drainage, or pipe-laying. They could also be used to build fences or fortifications. These machines can also be used to excavate for any other purpose (Edwards, 1888). Within the Berger Inquiry, the Banister Model 710 and Model 812 wheel ditchers are discussed. This machine was designed and built by Banister Pipelines of Edmonton, Alberta. Banister Pipelines built their first ditcher, the Model 508, in 1965. The Model 508 was designed to “cut through frozen ground.” Banister Pipelines was later able to “develop the technology in the 1970s that led to some of the largest ditchers ever built.” They designed a prototype of the Model 710 in 1972 which was tested to cut through frozen ground. This machine weighs 115 tons and can dig a ditch 7 feet wide and 10 feet deep. It is powered by two Caterpillar diesel engines which produce 1,120 horsepower. This machine is so powerful that in thawed ground it can reach a production rate of up to 20 feet of trench per minute. A few years later, in 1978, Banister Pipelines built a larger ditching machine, the Model 812, which is almost twice the size of the Model 710. This machine can dig 12 feet deep. The Model 710 and Model 812 by Banister Pipelines are still in use today (Haddock, 1998).

References

Edwards, C. C. (1888, December 18). Ditching-Machine. Retrieved from The Portal to Texas History: https://texashistory.unt.edu/ark:/67531/metapth171924/

Haddock, K. (1998). Giant Earthmovers: An Illustrated History. Osceola: MBI.

-

- Apr 2017

-

www.wired.com www.wired.com

-

So how does that explain what happened in Disneyland? If you have a group of 1,000 people concentrated in a small space—like oh, say, hypothetically, an amusement park—about 90 percent of them will be vaccinated (hopefully). One person, maybe someone who contracted measles on a recent trip to the Philippines, moves around, spreading the virus. Measles is crazy contagious, so of the 100 people who aren’t vaccinated, about 90 will get infected. Then, of the 900 people who are vaccinated, 3 percent—27 people—get infected because they don’t have full immunity.

Good illustrative example
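The article's arithmetic is easy to reproduce. The sketch below uses only the numbers quoted above (1,000 people, 90 percent vaccinated, about 90 percent of the unvaccinated and 3 percent of the vaccinated infected); the function name is hypothetical, and counts are rounded down to whole people. It also makes explicit one consequence of those numbers: roughly 23 percent of all cases occur in vaccinated people, even though only 3 percent of vaccinated people are infected.

```python
def outbreak(population, vaccinated_pct, attack_pct_unvaccinated, attack_pct_vaccinated):
    """Expected infections, using integer percentages and rounding down to whole people."""
    vaccinated = population * vaccinated_pct // 100
    unvaccinated = population - vaccinated
    infected_unvaccinated = unvaccinated * attack_pct_unvaccinated // 100
    infected_vaccinated = vaccinated * attack_pct_vaccinated // 100
    return infected_unvaccinated, infected_vaccinated, infected_unvaccinated + infected_vaccinated


unvax, vax, total = outbreak(1000, 90, 90, 3)
print(unvax, vax, total)      # 90 27 117
print(round(vax / total, 3))  # 0.231
```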

-

- Feb 2017

-

www.dabapps.com www.dabapps.com

-

Never write to a model field or call save() directly. Always use model methods and manager methods for state changing operations.
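A framework-free sketch of the advice, assuming a hypothetical `Ticket` class that stands in for a Django model; its `save()` merely records what would be persisted, so the example runs without Django. The point is that callers invoke one intention-revealing method, while the model keeps the field updates, validation and save together in one place.

```python
class Ticket:
    """Hypothetical stand-in for a Django model; save() just records the persisted state."""

    def __init__(self):
        self.status = "open"
        self.closed_by = None
        self.saved = []  # history of (status, closed_by) snapshots passed to save()

    def save(self):
        self.saved.append((self.status, self.closed_by))

    def close(self, user):
        """State-changing operation: validate, update related fields together, then save once."""
        if self.status != "open":
            raise ValueError("only open tickets can be closed")
        self.status = "closed"
        self.closed_by = user
        self.save()


ticket = Ticket()
ticket.close("alice")  # instead of: ticket.status = "closed"; ticket.save()
print(ticket.saved)    # [('closed', 'alice')]
```

Because the rule "only open tickets can be closed" lives in `close()`, no caller can skip it by writing to `status` directly.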

-

- Jan 2017

-

psgt.earth.lsa.umich.edu psgt.earth.lsa.umich.edu

-

Pressure and Temperature increase with depth into the earth
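As a rough worked example, under stated assumptions (constant rock density ρ ≈ 2700 kg/m³, typical of continental crust; g ≈ 9.81 m/s²; and a linear geothermal gradient of about 25 °C/km, although real gradients vary widely by setting), pressure grows with the weight of the overlying rock and temperature grows with depth z:

```latex
\begin{aligned}
P(z) &\approx P_0 + \rho g z, &\quad \rho g z &\approx 2700 \times 9.81 \times 10^{4}\ \text{Pa} \approx 2.65 \times 10^{8}\ \text{Pa} \approx 265\ \text{MPa} \quad (z = 10\ \text{km}),\\
T(z) &\approx T_0 + \frac{dT}{dz}\, z, &\quad T(10\ \text{km}) &\approx T_0 + 25\ {}^{\circ}\text{C/km} \times 10\ \text{km} = T_0 + 250\ {}^{\circ}\text{C}.
\end{aligned}
```

These are shallow-crust approximations only; deeper in the Earth both the density and the temperature gradient change.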

-

- Oct 2016

-

lti.hypothesislabs.com lti.hypothesislabs.com

-

“Why prolong the inevitable? We are all one stitch from here [the shelf] to there [the yard sale].”

Note how the brackets are used for editorial clarification, to make the quote make sense.

-

(sitting on a bench to watch the sunset, riding a gondola, capturing her beauty through art)

Again, note the use of parentheticals here.

-

Alongside this sheer pleasure in materiality and movement, Pixar operates with a nostalgia that is both regressive (in its reliance on traditional notions of gender, class, and morality) and liberating (in its embrace of an ironic, detached view of the present).

Note the use of the parentheticals here to further clarify the author's point.

-

“making strange,” as Brecht would have it

Note how Scott uses/introduces another thinker's (in this case Brecht's) terminology.

-

That is, Pixar’s films encourage adult audiences to both encounter and deny each film’s veiled dark content and its implications for them.

Note how Scott restates her point here; clarification is always important.

-

- Sep 2016

-

campustechnology.com campustechnology.com

-

Imagine if our university system was structured around helping people accomplish the things that they're trying to do, Downes said — "that would be a real transformation."

-

-

-

The importance of models may need to be underscored in this age of “big data” and “data mining”. Data, no matter how big, can only tell you what happened in the past. Unless you’re a historian, you actually care about the future — what will happen, what could happen, what would happen if you did this or that. Exploring these questions will always require models. Let’s get over “big data” — it’s time for “big modeling”.

Tags

Annotators

URL

-

- Jun 2016

-

digitalhumanities.org:3030 digitalhumanities.org:3030

-

play either role

Or we could say that it sets up a two-way dialogue between the modeled and the modeler.

Tags

Annotators

URL

-

-

scientistseessquirrel.wordpress.com scientistseessquirrel.wordpress.com

-

***By which I mean, it’s even in Wikipedia

Doesn't give a reference for where these physics detector authorship policies appear in Wikipedia.

-

Actually, I didn’t need Holmesian deductions to conclude that Aad et al. aren’t using a conventional definition of authorship. It’s widely known*** that at least two groups in experimental particle physics operate under the policy that every scientist or engineer working on a particular detector is an author on every paper arising from that detector’s data. (Two such detectors at the Large Hadron Collider were used in the Aad et al paper, so the author list is the union of the “ATLAS collaboration” and the “CMS collaboration”.) The result of this authorship policy, of course, is lots of “authorships” for everyone: for the easily searchable George Aad, for instance, over 400 since 2008.

Physicists authorship models

-

-

Local file Local file

-

The standard model of scholarly publishing assumes a work written by an author. There is typically a single author who receives full credit for the opus in question. By the same token, the named author is held accountable for all claims made in the text, excluding those attributed to others via citations. The appropriation of credit and allocation of responsibility thus go hand-in-hand, which makes for fairly straightforward social accounting. The ethically informed, lone scholar has long been a popular figure, in both fact and scholarly mythology. Historically, authorship has been viewed as a solitary profession, such that “when we picture writing we see a solitary writer” (Brodkey, 1987, p. 55). But that model, as Price (1963) recognized almost three decades ago, is anachronistic as far as the great majority of contemporary scientific, and much social scientific and humanistic, publishing is concerned.

On "standard model" of authorship: lone authority and responsibility; how this is anachronistic.

-

In the biomedical research community, multiple authorship has increased to such an extent that the trustworthiness of the scientific communication system has been called into question. Documented abuses, such as honorific authorship, have serious implications in terms of the acknowledgment of authority, allocation of credit, and assigning of accountability. Within the biomedical world it has been proposed that authors be replaced by lists of contributors (the radical model), whose specific inputs to a given study would be recorded unambiguously. The wider implications of the ‘hyperauthorship’ phenomenon for scholarly publication are considered.

Discussion of how this is a problem in biomedicine. As King notes (King, Christopher. 2012. “Multiauthor Papers: Onward and Upward - ScienceWatch Newsletter.” Science Watch Newsletter, July. http://archive.sciencewatch.com/newsletter/2012/201207/multiauthor_papers/.), this later shifted to physics within the same decade.

Discusses "contributor" model.

Tags

Annotators

-

- Mar 2016

-

www.ncbi.nlm.nih.gov www.ncbi.nlm.nih.gov

-

Significant diagnostic differences were seen in the left and right cerebral volumes in interaction with sex (right: F3,93 = 2.9, P = .04; left: F3,93 = 3.1, P = .04). Post hoc comparisons showed that both bipolar groups (with and without psychosis) had significantly smaller left and right cerebral volumes than HCs; this difference was even more marked in the female BPD groups. The SZ group did not differ significantly from the other groups.

ID: Model2 Variable: Diagnosis Variable: Sex Variable: Diagnosis+Sex Variable: Age Type: Linear model, ANCOVA, Tukey HSD

-

2-way (diagnosis, sex) univariate analyses covarying for TCV and age

ID: Model1 Variable: Diagnosis Variable: Sex Variable: Diagnosis+Sex Variable: TCV Variable: Age

-

-

www.ncbi.nlm.nih.gov www.ncbi.nlm.nih.gov

-

A series of Analyses of Variance (ANOVA) were performed on CC1 through CC7 and total CC as dependent variables with sex, age, and TCV as covariates to compare CC volumes and CC midsagittal areas between youths with BPD and HC to determine if there were group differences.

ID: ANOVAvol Variable: CC1vol Variable: CC2vol Variable: CC3vol Variable: CC4vol Variable: CC5vol Variable: CC6vol Variable: CC7vol Variable: CCvol Variable: age Variable:sex Variable:TCV

ID: ANOVAarea Variable: CC1area Variable: CC2area Variable: CC3area Variable: CC4area Variable: CC5area Variable: CC6area Variable: CC7area Variable: CCarea Variable: age Variable:sex Variable:TCV

-

Equality of groups on demographic and clinical variables was evaluated by t-tests for continuous variables and chi-square tests for categorical variables

ID: Ttest Variable:

ID: chi-square Variable:

Tags

Annotators

URL

-

-

medium.com medium.com

-

What is a business model?

-

- Jan 2016

-

onewheeljoe.blogspot.com onewheeljoe.blogspot.com

-

These models

I use a model for analysis that goes like this:

- What is the "text"?

- What is the context?

- What is the subtext?

I initially used this model to teach 8th graders about how to analyze political cartoons. Later I used it as a tool for analyzing all manner of media. I have added a fourth text to this.

- What are the pretexts (assumptions)?

-

- Oct 2015

-

courses.edx.org courses.edx.org

-

The five-part model presented here is as follows:

1) Data. This includes text, images, sound files, specifications of story grammars, declarative information about fictional worlds, tables of statistics about word frequencies, and so on. It also includes instructions to the reader (who may also be an interactor), including those that specify processes to be carried out by the reader.

2) Processes. These are processes actually carried out by the work, and are central to many efforts in the field (especially those proceeding from a computer science perspective). As Chris Crawford puts it: “processing data is the very essence of what a computer does.” Nevertheless, processes are optional for digital literature (e.g., many email narratives carry out no processes within the work) as well as for ergodic literature and cybertext (in which all the effort and calculation may be on the reader's part).

3) Interaction. This is change to the state of the work, for which the work was designed, that comes from outside the work. For example, when a reader reconfigures a combinatory text (rather than this being performed by the work's processes), this is interaction. Similarly, when the work's processes accept input from outside the work, whether from the audience or other sources, this is interaction. This is a feature of many popular genres of digital literature, but it is again optional for digital literature and cybertext (e.g., Tale-Spin falls into both categories even when not run interactively) and for ergodic literature as well (given that the page exploration involved in reading Apollinaire's poems qualifies them as ergodic). However, it's important to note that cybertext requires calculation somewhere in the production of scriptons, either via processes or interaction.

4) Surface. The surface of a work of digital literature is what the audience experiences: the output of the processes operating on the data, in the context of the physical hardware and setting, through which any audience interaction takes place. No work that reaches an audience can do so without a surface, but some works are more tied to particular surfaces than others (e.g., installation works), and some (e.g., email narratives) make audience selections (e.g., one's chosen email reader) a determining part of their context.

5) Context. Once there is a work and an audience, there is always context, so this isn't optional. Context is important for interpreting any work, but digital literature calls us to consider types of context (e.g., intra-audience communication and relationships in an MMO fiction) that print-based literature has had to confront less often.

-

-

desencadenado.com desencadenado.com

-

I remain convinced that Lean Startup and the ideas, tools and methodologies around it, such as Customer Development, Business Model Design, Lean UX or Effectuation, are the best way to create a company. But my interactions with my readers and with the students in my courses have made me see that there are problems in putting these ideas into practice.

-

- Sep 2015

-

onlinelibrary.wiley.com onlinelibrary.wiley.com

-

gravitoinertial ambiguity

I had to find other references for this phenomenon. The Wikipedia page on Einstein's equivalence principle was helpful, as was this PDF by Merfeld and Zupan. Until there is information from other sensory systems, fluid movement in the otolith organs cannot disambiguate between a head tilt and a head acceleration.
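A short worked version of the ambiguity, assuming a simplified single-axis picture (sign conventions differ between authors): the otolith organs respond to the gravitoinertial force per unit mass f = g − a, not to g and a separately. With the head upright and accelerating horizontally at magnitude a, f is tipped away from the head's vertical axis by an angle φ with tan φ = a/g. With the head statically tilted by θ, f is just g, tipped by θ relative to the head. The two directions coincide when

```latex
\mathbf{f} = \mathbf{g} - \mathbf{a}, \qquad
\tan\varphi_{\text{translation}} = \frac{a}{g}, \qquad
\varphi_{\text{tilt}} = \theta
\;\Longrightarrow\;
\varphi_{\text{translation}} = \varphi_{\text{tilt}} \iff a = g \tan\theta .
```

For example, a 10° tilt gives the same direction of f as a horizontal acceleration of about 9.81 × tan 10° ≈ 1.73 m/s²; only the magnitude differs, by a factor 1/cos θ ≈ 1.015. Because the otoliths see only f, this combination stays ambiguous without other cues such as the semicircular canals or vision.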

-

- Aug 2015

-

www.investopedia.com www.investopedia.com

-

The Nordic Model: Pros and Cons

- Social benefits like free education, healthcare and pensions.

- Redistributive taxation.

- Relaxed employment laws.

- History in family-driven agriculture. Culture of small entrepreneurial enterprises.

- High rates of taxation.

-

-

-

total of five variables in the frontier estimate: three input types in dollars, output in number of patient-days, and quality in estimated HSMR values.

So this is the model translated as:

number of patient-days (by hospital*) = HSMR value (ratio) + capital prices ($) + labor ($) + materials ($)

- *All the covariates are also accounted for at the hospital level.

-

deterministic

Is the use of a deterministic model appropriate here? It seems to me that it will be less accurate than a probabilistic one, and that this could affect the comparison of the different aggregation levels, biasing the conclusions.

-

quality-adjusted frontier analysis are available in Eckermann and Coelli (2013).

*Interesting reference for reviewing!

-

Details of the estimation process can be found in operations research textbooks (e.g., Banker, Charnes, and Cooper 1984; Ramanathran and Ramanathan 2003)

*Interesting reference for reviewing!

-

- Apr 2015

-

-

Coursera and Udacity have the opportunity to develop successful business models through various means, such as charging MOOC provider institutions for use of their platform, by collecting fees for badges or certificates, through the sale of participant data, through corporate sponsorship, or through direct advertising

-

- Feb 2015

-

wiki.chn.io wiki.chn.io

-

Num / Num summarizes a graph with nodes / arcs.

The underlying graph model is not explicitly mentioned here or in the README of the plugin.

-

- Jan 2015

-

cs231n.github.io cs231n.github.io

-

k-Nearest Neighbor Classifier

Is there a probabilistic interpretation of k-NN? Say, something like "k-NN is equivalent to [a probabilistic model] under the following conditions on the data and the k."
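On the margin question: one standard probabilistic reading (found, e.g., in Bishop's Pattern Recognition and Machine Learning) treats k-NN as a nonparametric density estimate, which yields the class-posterior estimate p(C | x) ≈ K_C / K, the fraction of the k nearest neighbours that belong to class C. Majority voting then simply picks the class with the largest estimated posterior. A minimal sketch with hypothetical data and function names (requires Python 3.8+ for `math.dist`):

```python
import math
from collections import Counter


def knn_posterior(train, query, k):
    """train: list of (point, label). Returns {label: K_label / k} over the k nearest points."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    counts = Counter(label for _, label in nearest)
    return {label: count / k for label, count in counts.items()}


def knn_predict(train, query, k):
    """Majority vote = the label with the largest estimated posterior."""
    posterior = knn_posterior(train, query, k)
    return max(posterior, key=posterior.get)


train = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
         ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
print(knn_posterior(train, (0.5, 0.5), k=4))  # {'a': 0.75, 'b': 0.25}
print(knn_predict(train, (0.5, 0.5), k=4))    # a
```

Under this reading, k controls the smoothness of the density estimate: small k gives noisy, local posteriors, and large k averages over wider regions.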

Tags

Annotators

URL

-

- Feb 2014

-

www.dougengelbart.org www.dougengelbart.org

-

Concepts seem to be structurable, in that a new concept can be composed of an organization of established concepts. For present purposes, we can view a concept structure as something which we might try to develop on paper for ourselves or work with by conscious thought processes, or as something which we try to communicate to one another in serious discussion. We assume that, for a given unit of comprehension to be imparted, there is a concept structure (which can be consciously developed and displayed) that can be presented to an individual in such a way that it is mapped into a corresponding mental structure which provides the basis for that individual's "comprehending" behavior. Our working assumption also considers that some concept structures would be better for this purpose than others, in that they would be more easily mapped by the individual into workable mental structures, or in that the resulting mental structures enable a higher degree of comprehension and better solutions to problems, or both.

-

- Jan 2014

-

blogs.msdn.com blogs.msdn.com

-

I blogged a while back about how “references” are often described as “addresses” when describing the semantics of the C# memory model. Though that’s arguably correct, it’s also arguably an implementation detail rather than an important eternal truth. Another memory-model implementation detail I often see presented as a fact is “value types are allocated on the stack”. I often see it because of course, that’s what our documentation says.

-

- Nov 2013

-

caseyboyle.net caseyboyle.net

-

This awakens the idea that, in addition to the leaves, there exists in nature the "leaf": the original model according to which all the leaves were perhaps woven,

When in actuality "leaf" is merely the distinction of singularity, meaning not "leaves". It is not based on an "original" model at all, but is a distinction from what it is related to, and not equal to. Concepts and words only create "context": the water that all distinctions, all rhetoric, and all convention swim in.

-