- Nov 2020

-

www.facebook.com www.facebook.com

-

As I think about the future of the internet, I believe a privacy-focused communications platform will become even more important than today's open platforms. Privacy gives people the freedom to be themselves and connect more naturally, which is why we build social networks.

Mark Zuckerberg claims to believe that privacy-focused communications will become even more important than today's open platforms (like Facebook).

-

-

opensource.com opensource.com

-

Now let me get back to your question. The FBI presents its conflict with Apple over locked phones as a case of privacy versus security. Yes, smartphones carry a lot of personal data—photos, texts, email, and the like. But they also carry business and account information; keeping that secure is really important. The problem is that if you make it easier for law enforcement to access a locked device, you also make it easier for a bad actor—a criminal, a hacker, a determined nation-state—to do so as well. And that's why this is a security vs. security issue.

The debate should not be framed as privacy-vs-security because when you make it easier for law enforcement to access a locked device, you also make it easier for bad actors to do so as well. Thus it is a security-vs-security issue.

-

-

boingboing.net boingboing.net

-

The FBI — along with many other law enforcement and surveillance agents — insists that it is possible to make crypto that will protect our devices, transactions, data, communications and lives, but which will fail catastrophically whenever a cop needs it to. When technologists explain that this isn't a thing, the FBI insists that they just aren't nerding hard enough.

The infosec community has a label for the government's insistence that there must be a way to keep consumer tech secure while still granting access to officials: "nerd harder."

-

-

www.schneier.com www.schneier.com

-

Barr makes the point that this is about “consumer cybersecurity” and not “nuclear launch codes.” This is true, but it ignores the huge amount of national security-related communications between those two poles. The same consumer communications and computing devices are used by our lawmakers, CEOs, legislators, law enforcement officers, nuclear power plant operators, election officials and so on. There’s no longer a difference between consumer tech and government tech—it’s all the same tech.

The US government's defence for wanting to introduce backdoors into consumer encryption is that in doing so they would not be weakening the encryption for, say, nuclear launch codes.

Schneier holds that this distinction between government and consumer tech no longer exists. Weakening consumer tech amounts to weakening government tech. Therefore it's not worth doing.

-

-

www.schneier.com www.schneier.com

-

The answers for law enforcement, social networks, and medical data won’t be the same. As we move toward greater surveillance, we need to figure out how to get the best of both: how to design systems that make use of our data collectively to benefit society as a whole, while at the same time protecting people individually.

Each challenge needs to be treated on its own. The trade-off to be made will differ for law enforcement vs. social media vs. health officials. We need to figure out "how to design systems that make use of our data collectively to benefit society as a whole, while at the same time protecting people individually."

-

-

www.schneier.com www.schneier.com

-

A future in which privacy would face constant assault was so alien to the framers of the Constitution that it never occurred to them to call out privacy as an explicit right. Privacy was inherent to the nobility of their being and their cause.

When our constitutions were written, privacy was a given. It was inconceivable that we would face the threat of being surveilled everywhere we go.

-

We do nothing wrong when we make love or go to the bathroom. We are not deliberately hiding anything when we seek out private places for reflection or conversation. We keep private journals, sing in the privacy of the shower, and write letters to secret lovers and then burn them. Privacy is a basic human need.

Privacy is a basic human, psychological need.

-

Privacy protects us from abuses by those in power, even if we’re doing nothing wrong at the time of surveillance.

Privacy is what protects us from abuse at the hands of the powerful, even if we're doing nothing wrong at the time we're being surveilled.

-

My problem with quips like these — as right as they are — is that they accept the premise that privacy is about hiding a wrong. It’s not. Privacy is an inherent human right, and a requirement for maintaining the human condition with dignity and respect.

Common retorts to "If you aren't doing anything wrong, what do you have to hide?" accept the premise that privacy is about having a wrong to hide.

Bruce Schneier posits that privacy is an inherent human right, and "a requirement for maintaining the human condition with dignity and respect".

-

- Oct 2020

-

-

“They can see you, but you can’t see them, which I didn’t feel good about,”

-

-

pairagraph.com pairagraph.com

-

Similarly, technology can help us control the climate, make AI safe, and improve privacy.

Regulation needs to accompany the technologies that will help with these things.

-

-

www.eff.org www.eff.org

-

Legislation to stem the tide of Big Tech companies' abuses, and laws—such as a national consumer privacy bill, an interoperability bill, or a bill making firms liable for data-breaches—would go a long way toward improving the lives of the Internet users held hostage inside the companies' walled gardens. But far more important than fixing Big Tech is fixing the Internet: restoring the kind of dynamism that made tech firms responsive to their users for fear of losing them, restoring the dynamic that let tinkerers, co-ops, and nonprofits give every person the power of technological self-determination.

-

-

ruben.verborgh.org ruben.verborgh.org

-

In fact, these platforms have become inseparable from their data: we use “Facebook” to refer to both the application and the data that drives that application. The result is that nearly every Web app today tries to ask you for more and more data again and again, leading to dangling data on duplicate and inconsistent profiles we can no longer manage. And of course, this comes with significant privacy concerns.

-

-

robinderosa.net robinderosa.net

-

working in public, and asking students to work in public, is fraught with dangers and challenges.

-

-

www.theatlantic.com www.theatlantic.com

-

I find it somewhat interesting that, of the 246 public annotations on this page made with Hypothes.is, only one appears to be a simple highlight as of 4/2/2019. All the rest are highlights with an annotation or response of some sort.

It makes me curious about the relative proportions of these two types on the platform. Is it the case that in classroom settings, where many of these annotations appear to have been made, use of the platform leads to more annotations (versus simple highlights) because of the performative nature of the process?

Is it possible that there are a significant number of highlights which are simply hidden because the platform automatically defaults these to private? Is the friction of making highlights so high that people don't bother?

I know that Amazon will indicate heavily highlighted passages in e-books as a feature to draw attention to the interest relating to those passages. Perhaps it would be useful/nice if Hypothes.is would do something similar, but make the authors of the highlights anonymous? (From a privacy perspective, this may not work well on articles with a small number of annotators, as the presumption could be that the "private" highlights were most likely made by those who also made public annotations.)

Perhaps the better solution is to default highlights to public and provide friction-free UI to make them private?

A heavily highlighted section by a broad community can be a valuable thing, but surfacing it can be a difficult thing to do.

-

-

ceouimet.com ceouimet.com

-

recording it all in a Twitter thread that went viral and garnered the hashtag #PlaneBae.

I find it interesting that The Atlantic files this story with a URL that includes "/entertainment/" in its path. Culture, certainly, but how are three seemingly random people's lives meant to be classified by such a journalistic source as "entertainment?"

-

-

asciinema.org asciinema.org

-

molecules

Turns out that because it's text-based, you can also annotate it with Hypothes.is!

-

-

elladawson.com elladawson.com

-

A friend of mine asked if I’d thought through the contradiction of criticizing Blair publicly like this, when she’s another not-quite public figure too.

Did this really happen? Or is the author inventing it to defuse potential criticism, as she's writing about the same story herself and only helping to propagate it?

There's definitely a need to write about this issue, so kudos for that. Ella also deftly leaves out the name of the mystery woman, I'm sure on purpose. But she does include enough breadcrumbs to make the rest of the story discoverable, so that one could jump from here to participate in the piling on. I do appreciate that it doesn't appear that she's given Blair any links in the process, which for a story like this is some subtle internet shade.

-

Even when the attention is positive, it is overwhelming and frightening. Your mind reels at the possibility of what they could find: your address, if your voting records are logged online; your cellphone number, if you accidentally included it on a form somewhere; your unflattering selfies at the beginning of your Facebook photo archive. There are hundreds of Facebook friend requests, press requests from journalists in your Instagram inbox, even people contacting your employer when they can’t reach you directly. This story you didn’t choose becomes the main story of your life. It replaces who you really are as the narrative someone else has written is tattooed onto your skin.

-

the woman on the plane has deleted her own Instagram account after receiving violent abuse from the army Blair created.

Feature request: the ability to make one's social media account "disappear" temporarily while a public "attack" like this is happening.

We need a great name for this. Publicity ghosting? Fame cloaking?

-

We actively create our public selves, every day, one social media post at a time.

-

-

-

in the not-so-distant future, it will show targeted ads to users on WhatsApp


-

- Sep 2020

-

getpocket.com getpocket.com

-

To defeat facial recognition software, “you would have to wear a mask or disguises,” Tien says. “That doesn’t really scale up for people.”

Yeah, that sentence was written in 2017 and was especially pertinent to Americans. 2020 has changed things a fair bit.

-

-

psyarxiv.com psyarxiv.com

-

Garrett, P. M., White, J. P., Lewandowsky, S., Kashima, Y., Perfors, A., Little, D. R., Geard, N., Mitchell, L., Tomko, M., & Dennis, S. (2020). The acceptability and uptake of smartphone tracking for COVID-19 in Australia [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/7tme6

-

-

www.youtube.com www.youtube.com

-

Computational Social Science to Address the (Post) COVID-19 Reality. (2020, June 27). https://www.youtube.com/watch?v=7d-Dq0e1JJ0&list=PL9UNgBC7ODr6eZkwB6W0QSzpDs46E8WPN&index=4

-

-

-

Romanini, Daniele, Sune Lehmann, and Mikko Kivelä. ‘Privacy and Uniqueness of Neighborhoods in Social Networks’. ArXiv:2009.09973 [Physics], 21 September 2020. http://arxiv.org/abs/2009.09973.

-

-

oneheglobal.org oneheglobal.org

-

reminding your students that you value and respect their privacy and their culture.

This constant reminder will make students feel included and reduce the chances of unintended harm.

-

-

sens-public.org sens-public.org

-

The enslaved man is not merely constrained; he consents to his constraint.

…but does the "enslaved" person really consent to it knowingly?

One study shows that the more aware people are of what is at work, the more reluctant they are to use services that exploit their private data.


-

-

onezero.medium.com onezero.medium.com

-

and as a result, the requirement to use this tracking permission will go into effect early next year.

Looking forward to the feature

-

There are clever ways around trackers

I also recommend switching to Firefox and getting the Facebook Container and Privacy Badger extensions!

-

These creeping changes help us forget how important our privacy is and miss that it’s being eroded.

This is important: we are normalizing the fact that our privacy is being taken away slowly, update after update.

-

-

www.nature.com www.nature.com

-

Hu, Y., & Wang, R.-Q. (2020). Understanding the removal of precise geotagging in tweets. Nature Human Behaviour, 1–3. https://doi.org/10.1038/s41562-020-00949-x

-

-

psyarxiv.com psyarxiv.com

-

Lewandowsky, Stephan, Simon Dennis, Amy Perfors, Yoshihisa Kashima, Joshua White, Paul Michael Garrett, Daniel R. Little, and Muhsin Yesilada. ‘Public Acceptance of Privacy-Encroaching Policies to Address the COVID-19 Pandemic in the United Kingdom’. Preprint. PsyArXiv, 4 September 2020. https://doi.org/10.31234/osf.io/njwmp.

Tags

- is:preprint

- tracking technology

- opt-out clause

- immunity passport

- infected

- social distancing

- contact

- co-location tracking

- UK

- privacy-encroaching policy

- willingness

- United Kingdom

- COVID-19

- antibodies

- public

- widespread acceptance

- lang:en

- public acceptance

- time limited

- health agencies


-

-

www.reddit.com www.reddit.com

-

r/BehSciResearch - From social licencing of contact tracing to political accountability: Input sought on next wave of representative surveys in Germany, Spain, and U.K. (n.d.). Reddit. Retrieved June 18, 2020, from https://www.reddit.com/r/BehSciResearch/comments/hbaj58/from_social_licencing_of_contact_tracing_to/

-

-

medium.com medium.com

-

Turns out, there’s a dedicated “Individual Account Appeal Form” where they ask you a list of privacy-touching mandatory questions, progressively shifting the Overton window

-

-

www.visualcapitalist.com www.visualcapitalist.com

-

Ali, A. (2020, August 28). Visualizing the Social Media Universe in 2020. Visual Capitalist. https://www.visualcapitalist.com/visualizing-the-social-media-universe-in-2020/

-

- Aug 2020

-

www.youtube.com www.youtube.com

-

Online Harms & Disinformation Post-COVID. (n.d.). Retrieved 20 August 2020, from https://www.youtube.com/watch?v=N2BmRuXbNhk

-

-

-

Horstmann, K. T., Buecker, S., Krasko, J., Kritzler, S., & Terwiel, S. (2020). Who does or does not use the “Corona-Warn-App” and why? [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/e9fu3

-

-

www.nber.org www.nber.org

-

Argente, D. O., Hsieh, C.-T., & Lee, M. (2020). The Cost of Privacy: Welfare Effects of the Disclosure of COVID-19 Cases (Working Paper No. 27220; Working Paper Series). National Bureau of Economic Research. https://doi.org/10.3386/w27220

-

- Jul 2020

-

arxiv.org arxiv.org

-

Kaptchuk, G., Goldstein, D. G., Hargittai, E., Hofman, J., & Redmiles, E. M. (2020). How good is good enough for COVID19 apps? The influence of benefits, accuracy, and privacy on willingness to adopt. ArXiv:2005.04343 [Cs]. http://arxiv.org/abs/2005.04343

-

-

-

Perrott, D. (2020, May 26). Is Applied Behavioural Science reaching a Local Maximum? Medium. https://medium.com/@DavePerrott/is-applied-behavioural-science-reaching-a-local-maximum-538b536f7e7d

-

-

bugs.ruby-lang.org bugs.ruby-lang.org

-

I have pixelated the faces of the recognizable people wearing a red cord (as they don't want to appear in pictures). I hope that is fine.

-

-

sens-public.org sens-public.org

-

a new kind of power

This is what Shoshana Zuboff maintains in The Age of Surveillance Capitalism: a new kind of power which can, at first, be apprehended through Marx’s lenses; but as a new form of capitalism, it “cannot be reduced to known harms—monopoly, privacy—and therefore do not easily yield to known forms of combat.”

It is “a new form of capitalism on its own terms and in its own words” which therefore requires new conceptual frameworks to be understood and negotiated.

-

-

-

Frimpong, J. A., & Helleringer, S. (2020). Financial Incentives for Downloading COVID–19 Digital Contact Tracing Apps [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/9vp7x

-

-

www.cs.cornell.edu www.cs.cornell.edu

-

Our membership inference attack exploits the observation that machine learning models often behave differently on the data that they were trained on versus the data that they “see” for the first time.

How well would this work on some of the more recent zero-shot models?
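The observation in the highlighted passage can be illustrated with a toy sketch. This is not the attack from the quoted paper: the "model" below is a hypothetical stand-in that simply memorizes its training points, and the function names and the 0.9 threshold are assumptions made for illustration only.

```python
import math
import random


def train_memorizing_model(train_points):
    """Return a toy 'model' whose confidence peaks on memorized training points."""
    def confidence(x):
        nearest = min(abs(x - t) for t in train_points)
        return math.exp(-nearest)  # exactly 1.0 on a training point
    return confidence


def infer_membership(confidence, x, threshold=0.9):
    """Guess that x was in the training set when the model is unusually confident."""
    return confidence(x) >= threshold


random.seed(0)
train = [random.uniform(0, 100) for _ in range(50)]   # records the model saw
unseen = [random.uniform(0, 100) for _ in range(50)]  # records it never saw
model = train_memorizing_model(train)

member_hits = sum(infer_membership(model, x) for x in train)
nonmember_hits = sum(infer_membership(model, x) for x in unseen)
print(f"flagged as members: {member_hits}/50 seen, {nonmember_hits}/50 unseen")
```

The gap between the two counts is what an attacker exploits. A model that generalizes well would narrow that gap, which is why the question about zero-shot models is an interesting one.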


-

-

arxiv.org arxiv.org

-

Lovato, J., Allard, A., Harp, R., & Hébert-Dufresne, L. (2020). Distributed consent and its impact on privacy and observability in social networks. ArXiv:2006.16140 [Physics]. http://arxiv.org/abs/2006.16140

-

-

www.cbinsights.com www.cbinsights.com

-

It is the natural trajectory of business to seek out new ways to drive revenue from products like microwaves, televisions, refrigerators, and speakers. And now that microwaves and TVs can effectively operate as mini-computers, it feels inevitable that manufacturers would look to collect potentially valuable data — whether for resale, for product optimization, or to bring down the sticker price of the device.

-

- Jun 2020

-

-

Privacy Preserving Data Analysis of Personal Data (May 27, 2020). (n.d.). Retrieved June 25, 2020, from https://www.youtube.com/watch?time_continue=11&v=wRI84xP0cVw&feature=emb_logo

-

-

www.unforgettable.me www.unforgettable.me

-

Register here: (n.d.). Google Docs. Retrieved May 5, 2020, from https://docs.google.com/forms/d/e/1FAIpQLSdqXWlf0sbRR9wSH_42shm4vU4tHcCe0bQZuC-6ngHaI4I32w/viewform??embedded=true&usp=embed_facebook

-

-

www.nassiben.com www.nassiben.com (Lamphone)

-

-

AP NEWS. ‘Colombia’s Medellin Emerges as Surprise COVID-19 Pioneer’, 13 June 2020. https://apnews.com/b3f8860343323d0daeef72191b669baf.

-

-

featuredcontent.psychonomic.org featuredcontent.psychonomic.org

-

Okan, Y. (2020, May 22). From a tweet to Reddit and beyond: The road to a global behavioral science SWAT team. Psychonomic Society Featured Content. https://featuredcontent.psychonomic.org/from-a-tweet-to-reddit-and-beyond-the-road-to-a-global-behavioral-science-swat-team/

-

-

www.theguardian.com www.theguardian.com

-

Hern, A. (2020, June 8). People who think they have had Covid-19 ‘less likely to download contact-tracing app.’ The Guardian. https://www.theguardian.com/world/2020/jun/08/people-who-think-they-have-had-covid-19-less-likely-to-download-contact-tracing-app

-

-

spreadprivacy.com spreadprivacy.com

-

This advertising system is designed to enable hyper-targeting, which has many unintended consequences that have dominated the headlines in recent years, such as the ability for bad actors to use the system to influence elections, to exclude groups in a way that facilitates discrimination, and to expose your personal data to companies you’ve never even heard of.

Where your Google data goes

-

if you search for something on Google, you may start seeing ads for it everywhere.

Unlike DuckDuckGo, Google shows you ads everywhere, not just in the search results.

-

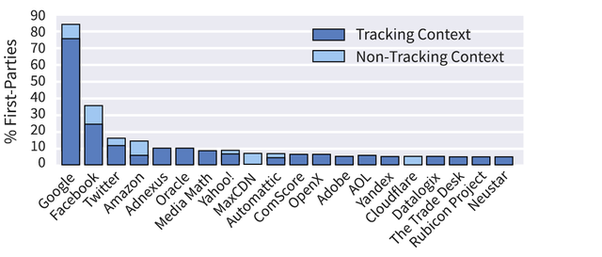

Alarmingly, Google now deploys hidden trackers on 76% of websites across the web to monitor your behavior and Facebook has hidden trackers on about 25% of websites, according to the Princeton Web Transparency & Accountability Project. It is likely that Google and/or Facebook are watching you on most sites you visit, in addition to tracking you when using their products.

-

-

twitter.com twitter.com

-

Michael Veale on Twitter

-

-

www.nature.com www.nature.com

-

Zastrow, M. (2020). Coronavirus contact-tracing apps: Can they slow the spread of COVID-19? Nature. https://doi.org/10.1038/d41586-020-01514-2

-

-

blogs.sap.com blogs.sap.com

-

Mueller, J. (2020, May 19). COVID-19: What the Technical Foundation of the Corona-Warn-App in Germany looks like | SAP Blogs. https://blogs.sap.com/2020/05/19/covid-19-how-the-technical-foundation-of-the-corona-warn-app-in-germany-looks-like/

-

-

github.com github.com (DP^3T)

-

"You wanted open source privacy-preserving Bluetooth contact tracing code? #DP3T software development kits/calibration apps for iOS and Android, and backend server, now on GitHub. iOS/Android apps with nice interface to follow." Michael Veale on Twitter (see context)

-

-

blog.gds-gov.tech blog.gds-gov.tech

-

Bay, J. (2020, April 11). Automated contact tracing is not a coronavirus panacea. Medium. https://blog.gds-gov.tech/automated-contact-tracing-is-not-a-coronavirus-panacea-57fb3ce61d98

-

-

www.engadget.com www.engadget.com

-

There were also underlying security issues. Most of the messaging apps Tor Messenger supported are based on client-server architectures, and those can leak metadata (such as who's involved in a conversation and when) that might reveal who your friends are. There was no real way for the Tor crew to mitigate these issues.

-

Tor suggests CoyIM, but it's prone to the same metadata issues as Messenger. You may have to accept that a small amount of chat data could find its way into the wrong hands, even if the actual conversations are locked down tight.

-

-

-

Of course, with Facebook being Facebook, there is another, more commercial outlet for this type of metadata analysis. If the platform knows who you are, and knows what you do based on its multi-faceted internet tracking tools, then knowing who you talk to and when could be a commercial goldmine. Person A just purchased Object 1 and then chatted to Person B. Try to sell Object 1 to Person B. All of which can be done without any messaging content being accessed.
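The "Person A / Object 1 / Person B" scenario in the passage above can be sketched in a few lines. Everything here is hypothetical: the record types, the one-hour window, and the `ad_targets` helper are illustrative assumptions, not a description of any real platform's system. The point is only that the join needs who talked to whom and when, never the message content.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Purchase:
    buyer: str
    item: str
    time: int  # seconds since some epoch


@dataclass(frozen=True)
class ChatEvent:
    sender: str
    recipient: str
    time: int  # metadata only: no message body is stored


def ad_targets(purchases, chats, window=3600):
    """Pair each purchased item with people the buyer messaged soon afterwards."""
    targets = set()
    for p in purchases:
        for c in chats:
            if c.sender == p.buyer and 0 <= c.time - p.time <= window:
                targets.add((c.recipient, p.item))
    return targets


purchases = [Purchase("Person A", "Object 1", time=100)]
chats = [
    ChatEvent("Person A", "Person B", time=200),     # shortly after the purchase
    ChatEvent("Person A", "Person C", time=10_000),  # outside the window
    ChatEvent("Person D", "Person B", time=150),     # different sender
]
print(ad_targets(purchases, chats))
```

For simplicity the sketch only considers messages sent by the buyer; a real analysis could just as easily look at either direction of the conversation.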

-

users will not want to see data mining expanding across their WhatsApp metadata. But if that’s the price to maintain encryption, one can assume it will be a relatively easy sell for most users.

-

-

www.forbes.com www.forbes.com

-

As uber-secure messaging platform Signal has warned, “Signal is recommended by the United States military. It is routinely used by senators and their staff. American allies in the EU Commission are Signal users too. End-to-end encryption is fundamental to the safety, security, and privacy of conversations worldwide.”

-

EFF describes this as “a major threat,” warning that “the privacy and security of all users will suffer if U.S. law enforcement achieves its dream of breaking encryption.”

-

-

www.forbes.com www.forbes.com

-

As EFF has warned, “undermining free speech and privacy is not the way to protect children.”

-

-

www.thelancet.com www.thelancet.com

-

Bauch, C. T., & Anand, M. (2020). COVID-19: When should quarantine be enforced? The Lancet Infectious Diseases, S147330992030428X. https://doi.org/10.1016/S1473-3099(20)30428-X

-

- May 2020

-

twitter.com twitter.com

-

ReconfigBehSci on Twitter: “a google doc tracking all app development: https://t.co/vzmyWWF0qj” / Twitter. (n.d.). Twitter. Retrieved April 16, 2020, from https://twitter.com/SciBeh/status/1243171088256860160

-

-

cpg.doc.ic.ac.uk cpg.doc.ic.ac.uk

-

de Montjoye, Y. et al. (2020, April 2). Evaluating COVID-19 contact tracing apps? Here are 8 privacy questions we think you should ask. Computational Privacy Group. https://cpg.doc.ic.ac.uk/blog/evaluating-contact-tracing-apps-here-are-8-privacy-questions-we-think-you-should-ask/

-

-

secrecyresearch.com secrecyresearch.com

-

Beyer-Hunt, S., Carter, J., Goh, A., Li, N., & Natamanya, S. M. (2020, May 14). COVID-19 and the Politics of Knowledge: An Issue and Media Source Primer. SPIN. https://secrecyresearch.com/2020/05/14/covid19-spin-primer/

-

-

www.bleepingcomputer.com www.bleepingcomputer.com

-

www.theatlantic.com www.theatlantic.com

-

Someone had taken control of my iPad, blasting through Apple’s security restrictions and acquiring the power to rewrite anything that the operating system could touch. I dropped the tablet on the seat next to me as if it were contagious. I had an impulse to toss it out the window. I must have been mumbling exclamations out loud, because the driver asked me what was wrong. I ignored him and mashed the power button. Watching my iPad turn against me was remarkably unsettling. This sleek little slab of glass and aluminum featured a microphone, cameras on the front and back, and a whole array of internal sensors. An exemplary spy device.

-

-

www.scirp.org www.scirp.org

-

Makulilo, A. B. (2016). “A Person Is a Person through Other Persons”—A Critical Analysis of Privacy and Culture in Africa. Beijing Law Review, 7(3), 720–726. https://doi.org/10.4236/blr.2016.73020

-

-

www.sciencedirect.com www.sciencedirect.com

-

Chen, Z. (2020). COVID-19: A revelation – A reply to Ian Mitroff. Technological Forecasting and Social Change, 156, 120072. https://doi.org/10.1016/j.techfore.2020.120072

-

-

www.iubenda.com www.iubenda.com

-

In other words, it’s the procedure to prevent Google from “cross-referencing” information from Analytics with other data in its possession.

-

-

www.osano.com www.osano.com

-

Companies that show their customers that they take privacy seriously will earn their trust and loyalty.

-

-

www.iubenda.com www.iubenda.com

-

Consent means offering individuals real choice and control. Genuine consent should put individuals in charge, build trust and engagement, and enhance your reputation.

-

-

www.technologyreview.com www.technologyreview.com

-

A flood of coronavirus apps are tracking us. Now it’s time to keep track of them. (n.d.). MIT Technology Review. Retrieved May 12, 2020, from https://www.technologyreview.com/2020/05/07/1000961/launching-mittr-covid-tracing-tracker/

-

-

citizenlab.ca citizenlab.ca

-

We present results from technical experiments which reveal that WeChat communications conducted entirely among non-China-registered accounts are subject to pervasive content surveillance that was previously thought to be exclusively reserved for China-registered accounts.

WeChat surveils not only China-registered accounts but also accounts registered elsewhere.


-

-

complianz.io complianz.io

-

A complete snapshot of the user’s browser window at that moment in time will be captured, pixel by pixel (!)

-

The mix of a fingerprint and first-party cookies is pervasive as Google can give a very high level of entropy when it comes to distinguishing an individual person.
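To make the entropy claim above concrete, here is a minimal sketch of how identifying information is usually counted in bits. The attribute names and the population shares are made-up figures for illustration, and the attributes are assumed to be statistically independent, which real browser attributes generally are not.

```python
import math


def surprisal_bits(share_of_users):
    """Bits of identifying information revealed by a value seen in this share of users."""
    return -math.log2(share_of_users)


# Hypothetical shares: e.g. 1 in 64 visitors has this exact user-agent string.
attributes = {
    "user_agent": 1 / 64,
    "screen_resolution": 1 / 16,
    "timezone": 1 / 8,
    "installed_fonts": 1 / 1024,
}

# Under the independence assumption, bits simply add up.
total_bits = sum(surprisal_bits(share) for share in attributes.values())
distinguishable_users = 2 ** total_bits

print(f"{total_bits:.0f} bits, enough to tell apart about {distinguishable_users:,.0f} users")
```

Each additional attribute multiplies the number of visitors that can be told apart, which is why combining a fingerprint with a persistent first-party cookie is so effective at singling out one person.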

-

-

www.fastcompany.com www.fastcompany.com

-

-

Google encouraging site admins to put reCaptcha all over their sites, and then sharing the resulting risk scores with those admins is great for security, Perona thinks, because he says it “gives site owners more control and visibility over what’s going on” with potential scammer and bot attacks, and the system will give admins more accurate scores than if reCaptcha is only using data from a single webpage to analyze user behavior. But there’s the trade-off. “It makes sense and makes it more user-friendly, but it also gives Google more data,”

-

This kind of cookie-based data collection happens elsewhere on the internet. Giant companies use it as a way to assess where their users go as they surf the web, which can then be tied into providing better targeted advertising.

-

For instance, Google’s reCaptcha cookie follows the same logic of the Facebook “like” button when it’s embedded in other websites—it gives that site some social media functionality, but it also lets Facebook know that you’re there.

-

one of the ways that Google determines whether you’re a malicious user or not is whether you already have a Google cookie installed on your browser.

-

But this new, risk-score based system comes with a serious trade-off: users’ privacy.

-

-

github.com github.com

-

-

they sought to eliminate data controllers and processors acting without appropriate permission, leaving citizens with no control as their personal data was transferred to third parties and beyond

-

-

kantarainitiative.org kantarainitiative.org

-

“Until CR 1.0 there was no effective privacy standard or requirement for recording consent in a common format and providing people with a receipt they can reuse for data rights. Individuals could not track their consents or monitor how their information was processed or know who to hold accountable in the event of a breach of their privacy,” said Colin Wallis, executive director, Kantara Initiative. “CR 1.0 changes the game. A consent receipt promises to put the power back into the hands of the individual and, together with its supporting API — the consent receipt generator — is an innovative mechanism for businesses to comply with upcoming GDPR requirements. For the first time individuals and organizations will be able to maintain and manage permissions for personal data.”

-

Its purpose is to decrease the reliance on privacy policies and enhance the ability for people to share and control personal information.

-

-

arxiv.org arxiv.org

-

Nguyen, C. T., Saputra, Y. M., Van Huynh, N., Nguyen, N.-T., Khoa, T. V., Tuan, B. M., Nguyen, D. N., Hoang, D. T., Vu, T. X., Dutkiewicz, E., Chatzinotas, S., & Ottersten, B. (2020). Enabling and Emerging Technologies for Social Distancing: A Comprehensive Survey. ArXiv:2005.02816 [Physics]. http://arxiv.org/abs/2005.02816

-

-

threadreaderapp.com threadreaderapp.com

-

Thread by @STWorg: “Live” update of analysis of 2K UK respondents and their views on privacy-encroaching tracking policies: stephanlewandowsky.github.io/UKsoci…. (n.d.). Retrieved April 17, 2020, from https://threadreaderapp.com/thread/1245060279047794688.html

-

-

featuredcontent.psychonomic.org featuredcontent.psychonomic.org

-

Hill, H. (2020, April 9). COVID-19: Does the British public condone cell phone data being used to monitor social distancing? Psychonomic Society. https://featuredcontent.psychonomic.org/covid-19-does-the-british-public-condone-cell-phone-data-being-used-to-monitor-social-distancing/

-

-

www.iubenda.com www.iubenda.com

-

-

I will need to find a workaround for one of my private extensions that controls devices in my home network, and its source code cannot be uploaded to Mozilla because of my and my family's privacy.


-

-

-

Kojaku, S., Hébert-Dufresne, L., & Ahn, Y.-Y. (2020). The effectiveness of contact tracing in heterogeneous networks. ArXiv:2005.02362 [Physics, q-Bio]. http://arxiv.org/abs/2005.02362

-

-

github.com github.com

-

I'm really tired of Mozilla; everything good about them is going away. I don't think they are security- or privacy-focused anymore, and Waterfox is a nice alternative to Firefox.

-

-

www.iubenda.com www.iubenda.com

- Apr 2020

-

safetyholic.com safetyholic.com

-

Don’t share any private, identifiable information on social media. It may be fun to talk about your pets with your friends on Instagram or Twitter, but if Fluffy is the answer to your security question, then you shouldn’t share that with the world. This may seem quite obvious, but sometimes you get wrapped up in an online conversation, and it is quite easy to let things slip out. You may also want to keep quiet about your past or current home locations and avoid sharing anything that is very unique and identifiable. It could help someone fake your identity.

-

Don’t share vacation plans on social media Sharing a status of your big trip to the park on Saturday may be a good idea if you are looking to have a big turnout of friends to join you, but not when it comes to home and personal safety. For starters, you have just broadcasted where you are going to be at a certain time, which can be pretty dangerous if you have a stalker or a crazy ex. Secondly, you are telling the time when you won’t be home, which can make you vulnerable to being robbed. This is also true if you are sharing selfies of yourself on the beach with a caption that states “The next 2 weeks are going to be awesome!” You have just basically told anyone who has the option to view your photo and even their friends that you are far away from home and for how long.

-

-

www.cnbc.com www.cnbc.com

-

www.cnbc.com www.cnbc.com

-

But now, I think there’s still some lack of clarity from consumers on exactly what they need to do

-

The notices were meant as a jumping-off point where people could begin the journey of understanding how each of their applications and the websites they visit use their data. But, they have probably had the opposite effect

-

-

iapp.org iapp.org

-

Finally, from a practical point of view, we suggest the adoption of "privacy label," food-like notices, that provide the required information in an easily understandable manner, making the privacy policies easier to read. Through standard symbols, colors and feedbacks — including yes/no statements, where applicable — critical and specific scenarios are identified. For example, whether or not the organization actually shares the information, under what specific circumstances this occurs, and whether individuals can oppose the share of their personal data. This would allow some kind of standardized information. Some of the key points could include the information collected and the purposes of its collection, such as marketing, international transfers or profiling, contact details of the data controller, and distinct differences between organizations’ privacy practices, and to identify privacy-invasive practices.

-

Finally, from a practical point of view, we suggest the adoption of "privacy label," food-like notices, that provide the required information in an easily understandable manner, making the privacy policies easier to read.

-

-

www.iubenda.com www.iubenda.com

-

be sure to read the complete iubenda privacy policy, part of these terms of service.

-

-

web.hypothes.is web.hypothes.is

-

people encountering public Hypothesis annotations anywhere don’t have to worry about their privacy.

In the Privacy Policy document there is an annotation that says:

I decided against using hypothes.is as the commenting system for my blog, since I don't want my readers to be traceable by a third party I choose on their behalf

Although this annotation is a bit old (from 2016), I understand that the Hypothes.is server would in fact get information from these readers through HTTP requests, correct? Such as the IP address, the browser's user agent, etc. I wonder whether this is the traceability the annotator was referring to.

Anyway, I think this wouldn't be much different to how an embedded image hosted elsewhere would be displayed on one such site. And Hypothes.is' Privacy Policy states that

This information is collected in a log file and retained for a limited time

-

at any time,

It would be nice if it said here that Hypothes.is will notify its users when the Privacy Policy is changed.

-

-

www.brucebnews.com www.brucebnews.com

-

Before we get to passwords, surely you already have in mind that Google knows everything about you. It knows what websites you’ve visited, it knows where you’ve been in the real world thanks to Android and Google Maps, it knows who your friends are thanks to Google Photos. All of that information is readily available if you log in to your Google account. You already have good reason to treat the password for your Google account as if it’s a state secret.

-

-

www.cnet.com www.cnet.com

-

Alas, you'll have to manually visit each site in turn and figure out how to actually delete your account. For help, turn to JustDelete.me, which provides direct links to the cancellation pages of hundreds of services.

-

-

panopticlick.eff.org panopticlick.eff.org

-

When you visit a website, you are allowing that site to access a lot of information about your computer's configuration. Combined, this information can create a kind of fingerprint — a signature that could be used to identify you and your computer. Some companies use this technology to try to identify individual computers.
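As a rough illustration of how separate configuration details combine into one identifier, here is a minimal Python sketch. The attribute names and values are hypothetical, and real fingerprinting scripts gather many more signals; this only shows the "combine, then hash" idea.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Combine configuration attributes into one short, stable identifier."""
    # Sorting the keys makes the result independent of the order
    # in which the attributes were collected.
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical attributes a site could read from a visitor's browser.
visitor = {
    "user_agent": "ExampleBrowser/1.0",
    "screen": "1920x1080",
    "timezone": "Europe/London",
    "fonts": ["Arial", "Helvetica", "Times New Roman"],
}
print(fingerprint(visitor))
```

No single attribute identifies anyone, but a change in just one of them (an extra installed font, say) yields a different identifier, which is why the combination can be distinctive.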

-

-

security.googleblog.com security.googleblog.com

-

Our approach strikes a balance between privacy, computation overhead, and network latency. While single-party private information retrieval (PIR) and 1-out-of-N oblivious transfer solve some of our requirements, the communication overhead involved for a database of over 4 billion records is presently intractable. Alternatively, k-party PIR and hardware enclaves present efficient alternatives, but they require user trust in schemes that are not widely deployed yet in practice. For k-party PIR, there is a risk of collusion; for enclaves, there is a risk of hardware vulnerabilities and side-channels.

-

At the same time, we need to ensure that no information about other unsafe usernames or passwords leaks in the process, and that brute force guessing is not an option. Password Checkup addresses all of these requirements by using multiple rounds of hashing, k-anonymity, and private set intersection with blinding.
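The k-anonymity part of this can be sketched in a few lines of Python. This is not Google's implementation: the single SHA-256 round, the 4-character prefix, and the function names are assumptions made for illustration, and the blinding step is left out entirely. The point is only that the client reveals a short hash prefix shared by many credentials, then does the final comparison locally.

```python
import hashlib


def sha256_hex(value: str) -> str:
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def build_buckets(breached: list[str], prefix_len: int = 4) -> dict[str, set[str]]:
    """Server side: group breached-credential hashes by their short prefix."""
    buckets: dict[str, set[str]] = {}
    for credential in breached:
        digest = sha256_hex(credential)
        buckets.setdefault(digest[:prefix_len], set()).add(digest)
    return buckets


def client_check(credential: str, buckets: dict[str, set[str]], prefix_len: int = 4) -> bool:
    """Client side: send only the prefix, compare the full hash locally."""
    digest = sha256_hex(credential)
    prefix = digest[:prefix_len]             # the only value that leaves the client
    candidates = buckets.get(prefix, set())  # stands in for the server's response
    return digest in candidates


buckets = build_buckets(["alice:hunter2", "bob:123456"])
print(client_check("alice:hunter2", buckets))
print(client_check("alice:a-much-better-password", buckets))
```

A shorter prefix hides the queried credential among more candidates but makes each response larger, which is the privacy versus overhead trade-off the post describes.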

-

Privacy is at the heart of our design: Your usernames and passwords are incredibly sensitive. We designed Password Checkup with privacy-preserving technologies to never reveal this personal information to Google. We also designed Password Checkup to prevent an attacker from abusing Password Checkup to reveal unsafe usernames and passwords. Finally, all statistics reported by the extension are anonymous. These metrics include the number of lookups that surface an unsafe credential, whether an alert leads to a password change, and the web domain involved for improving site compatibility.

-

-

stephanlewandowsky.github.io stephanlewandowsky.github.io

-

lsts.research.vub.be lsts.research.vub.be

-

twitter.com twitter.com

-

Michael Veale on Twitter.

-

-

arxiv.org arxiv.org

-

Nanni, M., Andrienko, G., Boldrini, C., Bonchi, F., Cattuto, C., Chiaromonte, F., Comandé, G., Conti, M., Coté, M., Dignum, F., Dignum, V., Domingo-Ferrer, J., Giannotti, F., Guidotti, R., Helbing, D., Kertesz, J., Lehmann, S., Lepri, B., Lukowicz, P., … Vespignani, A. (2020). Give more data, awareness and control to individual citizens, and they will help COVID-19 containment. ArXiv:2004.05222 [Cs]. http://arxiv.org/abs/2004.05222

-

-

Local file Local file

-

DP-3T/documents. (n.d.). GitHub. Retrieved April 8, 2020, from https://github.com/DP-3T/documents

-

-

Local file Local file

-

DP-3T/documents. (n.d.). GitHub. Retrieved April 17, 2020, from https://github.com/DP-3T/documents

Tags

- decentralized

- preserving

- security

- lang:en

- protection

- proximity

- data

- tracing

- tracking

- is:article

- privacy


-

-

drive.google.com drive.google.com

-

Joint Statement.pdf. (n.d.). Google Docs. Retrieved April 21, 2020, from https://drive.google.com/file/d/1OQg2dxPu-x-RZzETlpV3lFa259Nrpk1J/view?usp=drive_open&usp=embed_facebook

-

-

-

Blog: Combatting COVID-19 through data: some considerations for privacy. (2020, April 17). ICO. https://ico.org.uk/about-the-ico/news-and-events/blog-combatting-covid-19-through-data-some-considerations-for-privacy/

-

-

-

Google says this technique, called "private set intersection," means you don't get to see Google's list of bad credentials, and Google doesn't get to learn your credentials, but the two can be compared for matches.
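One common way to get this property is commutative blinding: each side raises hashed values to its own secret exponent, and because the order of exponentiation does not matter, doubly blinded values can be compared without either side seeing the other's raw data. The Python sketch below is a toy under stated assumptions: the modulus, the helper names, and the sample credentials are illustrative, the parameters are not secure, and it is not a description of Google's actual protocol.

```python
import hashlib
import math
import secrets

P = 2**127 - 1  # a Mersenne prime used as a toy modulus; too small for real use


def hash_to_group(value: str) -> int:
    """Map a string to an integer in [1, P - 1]."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1


def random_key() -> int:
    """Pick a secret exponent coprime to P - 1 so that blinding is a bijection."""
    while True:
        key = secrets.randbelow(P - 3) + 2
        if math.gcd(key, P - 1) == 1:
            return key


def blind(element: int, key: int) -> int:
    return pow(element, key, P)


client_key = random_key()
server_key = random_key()

# Server side: blind the list of bad credentials once with the server key.
server_list = ["alice:hunter2", "bob:123456"]
server_blinded = {blind(hash_to_group(c), server_key) for c in server_list}


def is_breached(credential: str) -> bool:
    # Client blinds its credential and sends it to the server.
    client_blinded = blind(hash_to_group(credential), client_key)
    # Server adds its own blinding and sends the result back.
    double_blinded = blind(client_blinded, server_key)
    # Client applies its key to the server's blinded list and compares.
    return double_blinded in {blind(item, client_key) for item in server_blinded}


print(is_breached("alice:hunter2"))
print(is_breached("carol:unique-passphrase"))
```

The client only ever sees the server's list in blinded form, and the server only ever sees the client's credential in blinded form, yet a match still shows up after both keys have been applied.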

-

-

www.csoonline.com www.csoonline.com

-

"If someone knows your old passwords, they can catch onto your system. If you're in the habit of inventing passwords with the name of a place you've lived and the zip code, for example, they could find out where I have lived in the past by mining my Facebook posts or something." Indeed, browsing through third-party password breaches offers glimpses into the things people hold dear — names of spouses and children, prayers, and favorite places or football teams. The passwords may no longer be valid, but that window into people's secret thoughts remains open.

-

-

www.bloomberg.com www.bloomberg.com

-

“A phone number is worth more on the dark web than a Social Security number. Your phone is so much more rich with data,” says J.D. Mumford, who runs Anonyome Labs Inc. in Salt Lake City.

“Facial recognition technology is now cheap enough where you can put it in every Starbucks and have your coffee ready when you’re in the front of the line,” says Lorrie Cranor, a computer science professor at Carnegie Mellon University who runs its CyLab Usable Privacy and Security Laboratory in Pittsburgh. In March, the New York Times put three cameras on a rooftop in Manhattan, spent $60 on Amazon’s Rekognition system, and identified several people. I took an Air France flight that had passengers board using our faceprints, taken from our passports without our permission.

Private companies such as Vigilant Solutions Inc., headquartered in the Valley, have cameras that have captured billions of geotagged photos of cars on streets and in parking lots that they sell on the open market, mostly to police and debt collectors.

Project Kovr runs a similar workshop at schools, in which it assigns some kids to stalk another child from a distance so they can create a data profile and tailor an ad campaign for the stalkee. Baauw has also been planning a project in which he chisels a statue of Facebook Chief Executive Officer Mark Zuckerberg as a Roman god. “He’s the Zeus of our time,” he says.

Until people demand a law that makes privacy the default, I’m going to try to remember, each time I click on something, that free things aren’t free. That when I send an email or a text outside of Signal or MySudo, I should expect those messages to one day be seen.

-

-

www.troyhunt.com www.troyhunt.com

-

Someone, somewhere has screwed up to the extent that data got hacked and is now in the hands of people it was never intended to be. No way, no how does this give me license to then treat that data with any less respect than if it had remained securely stored and I reject outright any assertion to the contrary. That's a fundamental value I operate under

-

-

www.goldyarora.com www.goldyarora.com

-

Can G Suite Admin Read My Email?

tl;dr: YES (check the article for detailed steps)

-

-

spreadprivacy.com spreadprivacy.com

-

Unlike Zoom, Apple’s FaceTime video conference service is truly end-to-end encrypted. Group FaceTime calls offer a privacy-conscious alternative for up to 32 participants. The main caveat is that this option only works if everyone on the call has an Apple device that currently supports this feature.


-

-

www.buzzfeednews.com www.buzzfeednews.com

-

-

Covid-19 is an emergency on such a huge scale that, if anonymity is managed appropriately, internet giants and social media platforms could play a responsible part in helping to build collective crowd intelligence for social good, rather than profit

-

Google's move to release location data highlights concerns around privacy. According to Mark Skilton, director of the Artificial Intelligence Innovation Network at Warwick Business School in the UK, Google's decision to use public data "raises a key conflict between the need for mass surveillance to effectively combat the spread of coronavirus and the issues of confidentiality, privacy, and consent concerning any data obtained."

-

-

-

-

-

blog.zoom.us blog.zoom.us

-

Thousands of enterprises around the world have done exhaustive security reviews of our user, network, and data center layers and confidently selected Zoom for complete deployment.

This doesn't really account for the fact that Zoom has committed some atrociously heinous acts, including (but not limited to):

- Abusing how installation works on macOS

- Claiming to support end-to-end encryption while not doing so, and then shadily inventing a nomenclature that steers away from standards

- Sending your data to Facebook even if you don't have a Facebook account and hiding this practice from their privacy policy

- Installing a web server on your Mac that Apple had to build a tool to erase

-

- Mar 2020

-

www.cmswire.com www.cmswire.com

-

To join the Privacy Shield Framework, a U.S.-based organization is required to self-certify to the Department of Commerce and publicly commit to comply with the Framework’s requirements. While joining the Privacy Shield is voluntary, the GDPR goes far beyond it.

-

-

www.cmswire.com www.cmswire.com

-

"users are not able to fully understand the extent of the processing operations carried out by Google and that ‘the information on processing operations for the ads personalization is diluted in several documents and does not enable the user to be aware of their extent."

-

None Of Your Business

-

-

www.iubenda.com www.iubenda.com

-

-

What data is being collected? How is that data being collected?

-

For which specific purposes are the data collected? Analytics? Email Marketing?

-

What is the Legal basis for the collection?

-

-

theintercept.com theintercept.com

-

This is known as transport encryption, which is different from end-to-end encryption because the Zoom service itself can access the unencrypted video and audio content of Zoom meetings. So when you have a Zoom meeting, the video and audio content will stay private from anyone spying on your Wi-Fi, but it won’t stay private from the company.

-

But despite this misleading marketing, the service actually does not support end-to-end encryption for video and audio content, at least as the term is commonly understood. Instead it offers what is usually called transport encryption, explained further below

-

-

www.iubenda.com www.iubenda.com

-

The cookie policy is a section of the privacy policy dedicated to cookies

-

If a website/app collects personal data, the Data Owner must inform users of this fact by way of a privacy policy. All that is required to trigger this obligation is the presence of a simple contact form, Google Analytics, a cookie or even a social widget; if you’re processing any kind of personal data, you definitely need one.

-

-

matomo.org matomo.org

-

By choosing Matomo, you are joining an ever growing movement. You’re standing up for something that respects user-privacy, you’re fighting for a safer web and you believe your personal data should remain in your own hands, no one else’s.

-

-

matomo.org matomo.org

-

Data privacy now a global movement

We’re pleased to say we’re not the only ones who share this philosophy, web browsing companies like Brave have made it possible so you can browse the internet more privately; and you can use a search engine like DuckDuckGo to search with the freedom you deserve.

-

our values remain the same – advocating for 100% data ownership, respecting user-privacy, being reliable and encouraging people to stay secure. Complete analytics, that’s 100% yours.

-

the privacy of your users is respected

-

-

www.graphitedocs.com www.graphitedocs.com

-

Own Your Encryption Keys

You would never trust a company to keep a record of your password for use anytime they want. Why would you do that with your encryption keys? With Graphite, you don't have to. You own and manage your keys so only YOU can decrypt your content.

-

-

www.beyondorganicbaby.com www.beyondorganicbaby.com

-

www.iubenda.com www.iubenda.com

-

When you think about data law and privacy legislations, cookies easily come to mind as they’re directly related to both. This often leads to the common misconception that the Cookie Law (ePrivacy directive) has been repealed by the General Data Protection Regulation (GDPR), which, in fact, it has not. Instead, you can think of the ePrivacy Directive and GDPR as working together and complementing each other, where, in the case of cookies, the ePrivacy Directive generally takes precedence.

-

-

www.iubenda.com www.iubenda.com

-

In accordance with the general principles of privacy law, which do not permit the processing of data prior to consent, the cookie law does not allow the installation of cookies before obtaining the user’s consent, except for exempt categories.

-

-

techcrunch.com techcrunch.com

-

the deceptive practices it has been used to shield and enable are on borrowed time. The direction of travel — and the direction of innovation — is pro-privacy, pro-user control and therefore anti-deceptive-design.

-

Earlier this year it began asking Europeans for consent to processing their selfies for facial recognition purposes — a highly controversial technology that regulatory intervention in the region had previously blocked. Yet now, as a consequence of Facebook’s confidence in crafting manipulative consent flows, it’s essentially figured out a way to circumvent EU citizens’ fundamental rights — by socially engineering Europeans to override their own best interests.

-

But people clearly do care about privacy. Just look at the lengths to which ad tech entities go to obfuscate and deceive consumers about how their data is being collected and used. If people don’t mind companies spying on them, why not just tell them plainly it’s happening?

-

The deceitful obfuscation of commercial intention certainly runs all the way through the data brokering and ad tech industries that sit behind much of the ‘free’ consumer Internet. Here consumers have plainly been kept in the dark so they cannot see and object to how their personal information is being handed around, sliced and diced, and used to try to manipulate them.

-

design choices are being selected to be intentionally deceptive. To nudge the user to give up more than they realize. Or to agree to things they probably wouldn’t if they genuinely understood the decisions they were being pushed to make.

Tags

- data privacy

- deceptive

- sacrificing personal data/privacy in order to gain some benefit

- manipulation

- making it easy to do the wrong thing

- trickery

- pro-privacy

- obfuscation

- innovation

- ads: bad/malicious/unethical

- hidden costs

- dark pattern

- positive trend

- voluntarily giving up one's rights

- erosion of rights

- privacy


-

-

www.privacypolicies.com www.privacypolicies.com

-

provide users with information regarding how to update their browser settings. Many sites provide detailed information for most browsers. You could either link to one of these sites, or create a similar guide of your own. Your guide can either appear in a pop up after a user declines consent, or it can be part of your Privacy Policy, Cookie Information page, or its own separate page.

-

-

www.blastanalytics.com www.blastanalytics.com

-

What information is being collected? Who is collecting it? How is it collected? Why is it being collected? How will it be used? Who will it be shared with? What will be the effect of this on the individuals concerned? Is the intended use likely to cause individuals to object or complain?

-

-

www.google.com www.google.com

-

If your agreement with Google incorporates this policy, or you otherwise use a Google product that incorporates this policy, you must ensure that certain disclosures are given to, and consents obtained from, end users in the European Economic Area along with the UK. If you fail to comply with this policy, we may limit or suspend your use of the Google product and/or terminate your agreement.

-

-

github.com github.com

-

When joining a Zoom meeting, the "join from your browser" link is intentionally hidden. This browser extension solves this problem by transparently redirecting any meeting links to use Zoom's browser based web client.

Using this extension means one won't be affected by the tracking that occurs via Zoom's apps for desktop and mobile devices.


-

-

-

The host of a Zoom call has the capacity to monitor the activities of attendees while screen-sharing. This functionality is available in Zoom version 4.0 and higher.

This is true if one uses the Zoom apps for desktop or mobile devices.

There is a Chrome extension that redirects Zoom meetings via a web browser.

-

-

www.davideocompany.com www.davideocompany.com

-

Many people see tracking cookies as an invasion of privacy since they allow a site to build up profiles on users without their consent.

-

-

blog.focal-point.com blog.focal-point.com

-

Legitimate Interest may be used for marketing purposes as long as it has a minimal impact on a data subject’s privacy and it is likely the data subject will not object to the processing or be surprised by it.

-

-

-

-

Google Analytics created an option to remove the last octet (the last group of 3 numbers) from your visitor’s IP-address. This is called ‘IP Anonymization‘. Although this isn’t complete anonymization, the GDPR demands you use this option if you want to use Analytics without prior consent from your visitors. Some countries (e.g. Germany) demand this setting to be enabled at all times.
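To make "removing the last octet" concrete, here is a small Python sketch using the standard `ipaddress` module. The function name is hypothetical and this is not Google Analytics code; it only shows the effect of zeroing the final octet of an IPv4 address.

```python
import ipaddress


def anonymize_ipv4(address: str) -> str:
    """Zero the last octet of an IPv4 address, keeping only its /24 network."""
    network = ipaddress.ip_network(f"{address}/24", strict=False)
    return str(network.network_address)


print(anonymize_ipv4("203.0.113.77"))  # prints 203.0.113.0
```

Up to 256 addresses collapse into the same value, which is why this counts as partial rather than complete anonymization.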

-

-

gdpr-info.eu gdpr-info.eu

-

-

A data subject should have the right of access to personal data which have been collected concerning him or her, and to exercise that right easily and at reasonable intervals, in order to be aware of, and verify, the lawfulness of the processing

-

Every data subject should therefore have the right to know and obtain communication in particular with regard to the purposes for which the personal data are processed, where possible the period for which the personal data are processed, the recipients of the personal data, the logic involved in any automatic personal data processing

-

Where possible, the controller should be able to provide remote access to a secure system which would provide the data subject with direct access to his or her personal data.


-

-

support.google.com support.google.com

-

www.civicuk.com www.civicuk.com

-

-

As a condition of use of this site, all users must give permission for CIVIC to use its access logs to attempt to track users who are reasonably suspected of gaining, or attempting to gain, unauthorised access. All log file information collected by CIVIC is kept secure and no access to raw log files is given to any third party.

-

CIVIC will make no attempt to identify individual users. You should be aware, however, that access to web pages will generally create log entries in the systems of your ISP or network service provider. These entities may be in a position to identify the client computer equipment used to access a page.

-

-

techcrunch.com techcrunch.com

-

ads that reward viewers for watching them — the next step in its ambitious push towards a consent-based, pro-privacy overhaul of online advertising

-

-

-

For mainly two reasons: I pay for things that bring value to my life, and when something's "free", you're usually really just giving away your privacy without being aware.

-

It's also a good way to make sure my personal thoughts don't get exposed to a wider audience.

-

-

complianz.io complianz.io

-

Google Recaptcha and personal data

But we all know: there’s no such thing as a free lunch right? So what is the price we pay for this great feature? Right: it’s personal data.

-

-

www.oracle.com www.oracle.com

-

A 1% sample of AddThis Data (“Sample Dataset”) is retained for a maximum of 24 months for business continuity purposes.

-

-

artificialintelligence-news.com artificialintelligence-news.com

-

The system has been criticised due to its method of scraping the internet to gather images and storing them in a database. Privacy activists say the people in those images never gave consent. “Common law has never recognised a right to privacy for your face,” Clearview AI lawyer Tor Ekeland said in a recent interview with CoinDesk. “It’s kind of a bizarre argument to make because [your face is the] most public thing out there.”

-

-

-

According to the Swedish Police Authority's guidelines, an impact assessment must be carried out before new policing tools are introduced if they involve sensitive processing of personal data. No such assessment has been made for the tool in question.

Swedish police have used Clearview AI without any impact assessment having been performed.

In other words, Swedish police have used a facial-recognition system without being allowed to do so.

This is a clear breach of human rights.

Swedish police have lied about this, as reported by Dagens Nyheter.

-

-

www.marketwatch.com www.marketwatch.com

-

The payment provider told MarketWatch that everyone has a unique walk, and it is investigating innovative behavioral biometrics such as gait, face, heartbeat and veins for cutting edge payment systems of the future.

This is a true invasion into people's lives.

Remember: this is a credit-card company. We use them to pay for stuff. They shouldn't know what we look like, how we walk, how our hearts beat, nor how our 'vein technology' works.

-

-

www.vox.com www.vox.com

-

Right now, if you want to know what data Facebook has about you, you don’t have the right to ask them to give you all of the data they have on you, and the right to know what they’ve done with it. You should have that right. You should have the right to know and have access to your data.

-

-

www.osano.com www.osano.com

-

Good data privacy practices by companies are good for the world. We wake up every day excited to change the world and keep the internet that we all know and love safe and transparent.


-

- Feb 2020

-

thenextweb.com thenextweb.com

-

Last year, Facebook said it would stop listening to voice notes in messenger to improve its speech recognition technology. Now, the company is starting a new program where it will explicitly ask you to submit your recordings, and earn money in return.

Given Facebook's history of breaking laws and ending up paying billions of USD in damages (even though it's a joke), selling ads to people who explicitly want to target people who hate Jews, and spending millions of USD every year solely on lobbying, don't sell your personal experiences and behaviours to them.

Facebook is nefarious and psychopathic.

-

-

latacora.micro.blog latacora.micro.blog

-

The most popular modern secure messaging tool is Signal, which won the Levchin Prize at Real World Cryptography for its cryptographic privacy design. Signal currently requires phone numbers for all its users. It does this not because Signal wants to collect contact information for its users, but rather because Signal is allergic to it: using phone numbers means Signal can piggyback on the contact lists users already have, rather than storing those lists on its servers. A core design goal of the most important secure messenger is to avoid keeping a record of who’s talking to whom. Not every modern secure messenger is as conscientious as Signal. But they’re all better than Internet email, which doesn’t just collect metadata, but actively broadcasts it. Email on the Internet is a collaboration between many different providers; and each hop on its store-and-forward is another point at which metadata is logged.

-

-

journals.sagepub.com journals.sagepub.com

-

Research ethics concerns issues, such as privacy, anonymity, informed consent and the sensitivity of data. Given that social media is part of society’s tendency to liquefy and blur the boundaries between the private and the public, labour/leisure, production/consumption (Fuchs, 2015a: Chapter 8), research ethics in social media research is particularly complex.

-

-

robertheaton.com robertheaton.com

-

I suspect that Wacom doesn’t really think that it’s acceptable to record the name of every application I open on my personal laptop. I suspect that this is why their privacy policy doesn’t really admit that this is what they do.

-