The uptake of mis- and disinformation is intertwined with the way our minds work. The large body of research on the psychological aspects of information manipulation explains why.

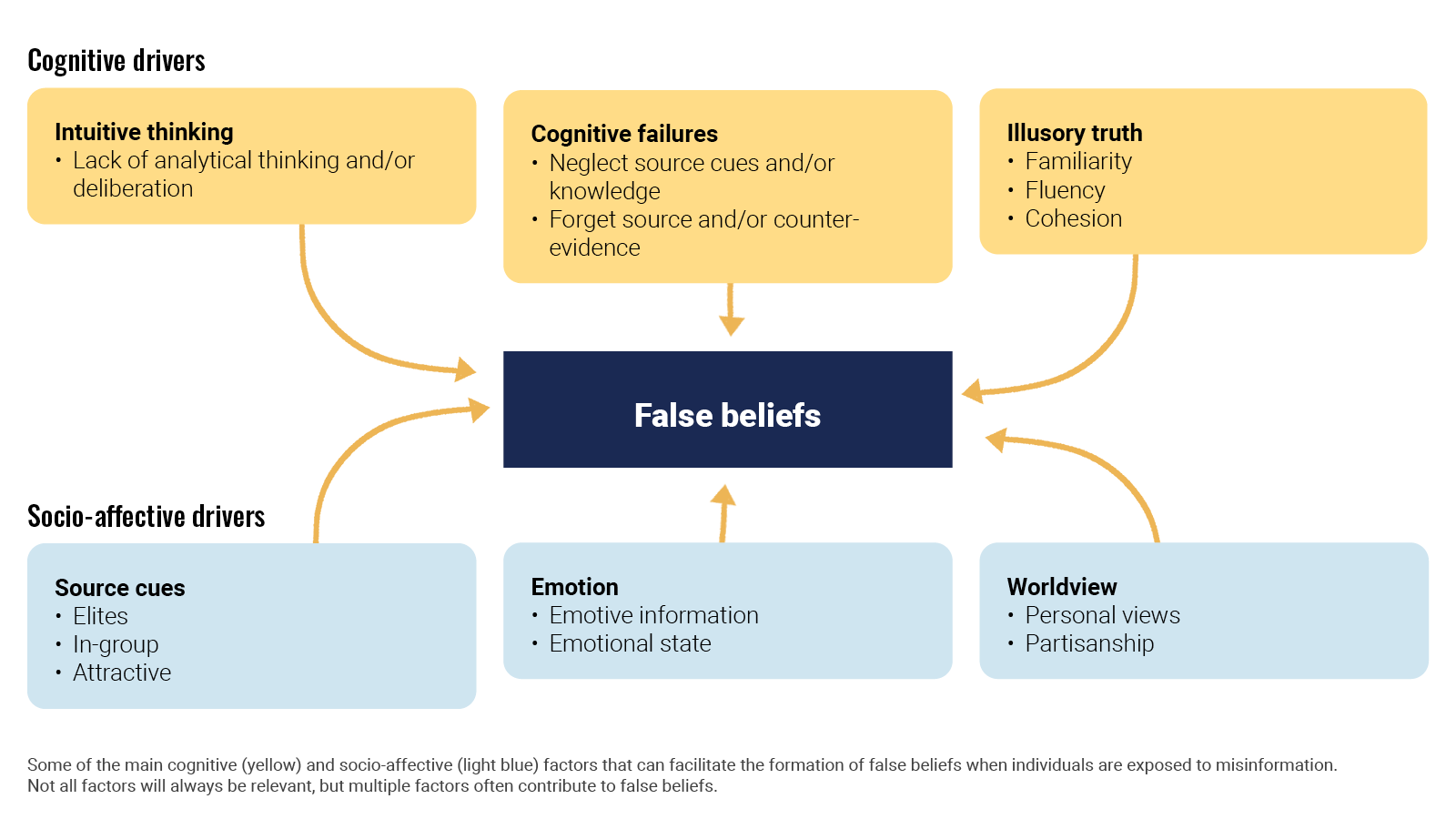

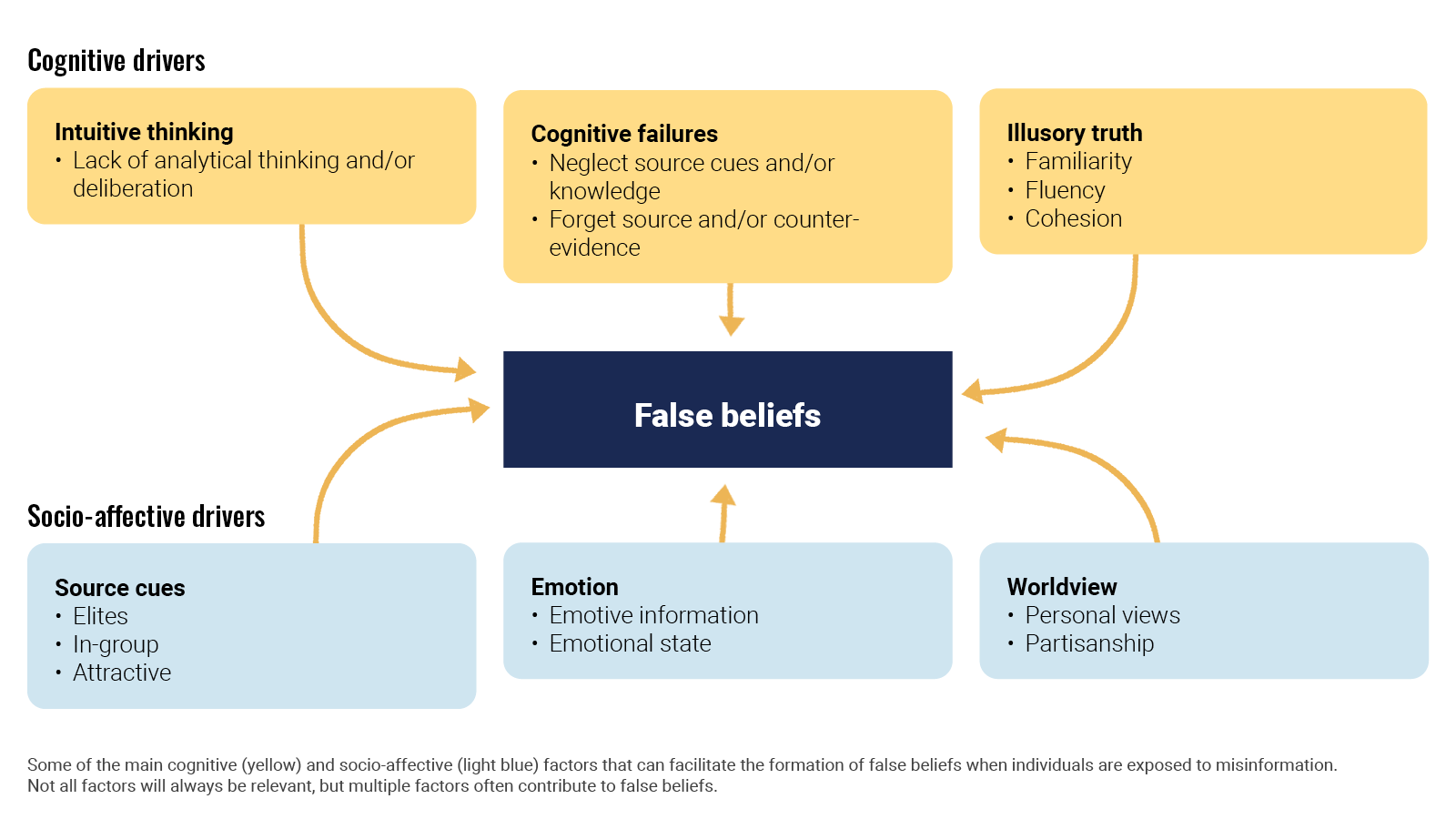

In an article for Nature Reviews Psychology, Ullrich K. H. Ecker et al. looked at the cognitive, social, and affective factors that lead people to form or even endorse misinformed views. Ironically enough, false beliefs generally arise through the same mechanisms that establish accurate beliefs: a mix of cognitive drivers, such as intuitive thinking, and socio-affective drivers. When deciding what is true, people are often biased to believe in the validity of information and to trust their intuition instead of deliberating. Repetition also increases belief in both misleading information and facts.

Ecker, U.K.H., Lewandowsky, S., Cook, J. et al. (2022). The psychological drivers of misinformation belief and its resistance to correction.

Going a step further, Álex Escolà-Gascón et al. investigated the psychopathological profiles that characterise people prone to consuming misleading information. After running a number of tests on more than 1,400 volunteers, they concluded that people with high scores in schizotypy (a condition not too dissimilar from schizophrenia), paranoia, and histrionism (more commonly known as dramatic personality disorder) are more vulnerable to the negative effects of misleading information. People who fail to detect misleading information also tend to be more anxious, suggestible, and vulnerable to strong emotions.