The US-based Heartland Institute now also lobbies the EU in Brussels, with the FPÖ acting as intermediary. In an article in Falter, Benedikt Narodoslawsky shows that the institute works unreliably even when presenting its own alleged successes. Heartland representatives claim to have stopped an EU climate law and cite a date on which, in fact, only a Hungarian minister argued against the EU Nature Restoration Regulation, which was nevertheless eventually adopted. https://www.derstandard.at/story/3000000254766/wie-us-klimawandelleugner-fake-news-ueber-die-eu-verbreiten-und-die-fpoe-ihnen-dabei-hilft

- Dec 2025

-

www.derstandard.at

Tags

- 2025-01-30

- Austria

- USA

- FPÖ

- Ungarn

- disinformation

- EU

- Heartland Institute

- by: Benedikt Narodoslawsky

- to_zotero

-

-

www.derstandard.de

-

Klaus Taschwer in Der Standard on the work of the Heartland Institute. The institute has now opened a branch in London, headed by a former leader of the pro-Brexit party UKIP. In close cooperation with the FPÖ, it is trying to weaken or block the European Green Deal. The institute is among the sponsors of the so-called Project 2025, the playbook for the Trump administration's actions against existing government institutions, above all in areas such as science and diversity. https://www.derstandard.at/story/3000000254264/us-lobby-der-klimawandelleugner-dank-fpoe-weiter-auf-dem-vormarsch-in-europa

-

- Jul 2025

- May 2025

-

www.liberation.fr

- Feb 2025

-

www.derstandard.at

-

www.derstandard.de

-

www.derstandard.de

- Jan 2025

-

www.theguardian.com

-

www.theguardian.com

-

Since December, the Heartland Institute has operated a branch in London, with support from Nigel Farage among others. The institute works closely with radical right-wing parties and regards the current rollback in climate policy as partly its own success. Its trailblazers in Europe included the FPÖ politicians Harald Vilimsky and Roman Haider. Detailed report in the Guardian: https://www.theguardian.com/environment/2025/jan/22/us-thinktank-climate-science-deniers-working-with-rightwingers-in-eu-parliament-heartland-institute

DeSmog report: https://www.desmog.com/2025/01/22/usa-climate-denial-group-heartland-institute-using-far-right-to-attack-eu-green-policies/

Tags

- nature restoration law

- by: Helena Horton

- Austria

- Kenneth Haar

- Hungary

- Harald Vilimsky

- FPÖ

- disinformation

- Roman Haider

- Corporate Europe Observatory

- DeSmog

- Poland

- Heartland’s International Conference on Climate Change February 2022

- by: Clare Carlile

- EU

- Heartland Institute

- ExxonMobil

- 2024-01-22

- by: Sam Bright

-

-

www.theguardian.com

-

www.theguardian.com

-

A paper signed by 1,000 scientists that advocates the consumption of meat is the result of a PR and lobbying effort by the meat industry. It served to influence the EU Commission, and the EU agriculture commissioner adopted its line of argument. Apparently the so-called Dublin Declaration, which experts judge to be of low quality, succeeded in dissuading the EU Commission from its original intention of advocating restrictions on meat and dairy production. https://www.theguardian.com/environment/2023/oct/27/revealed-industry-figures-declaration-scientists-backing-meat-eating

-

- Dec 2024

-

media.dltj.org

-

Every narrative in Putin's regime is a product of propaganda. From the school books, which show a very different, distorted view on World War II, to every daily news episode on Russian State TV, the purpose of every piece of information is to forge a distorted view on reality in which Putin is the only solution. This is why the truth covered by Western media and shared every day through social media is a threat to Vladimir Putin. And that is why he has launched an international war on truth.

The "Mind Bomb" defined

The firehose-of-bullshit problem...it can't all be combatted. Later:

But remember Olga Skabeyeva's words: There is no truth only interpretation. Her job and the job of every one of her colleagues is to replace truth with whatever narrative Putin desires.

-

Information is anything we know and can communicate. So now you know what information is, because I just communicated it. Misinformation is the subset of information which is false. So if by accident I tell you something which is wrong, then it's misinformation. But if I tell you something wrong because I want to deceive you, trick you into doing something for me, then that's disinformation; that's the deliberate part.

Information versus Misinformation versus Disinformation

See also: Misinformation, Disinformation, and Malinformation from Leaked Documents Outline DHS’s Plans to Police Disinformation (The Intercept), 31-Oct-2022

-

- Nov 2024

-

www.desmog.com

- Sep 2024

-

www.theguardian.com

-

For decades the fossil fuel industry has funded universities and thereby promoted publications that serve its interests, for example on false solutions such as #CCS. Background report on the occasion of a new study: https://www.theguardian.com/business/article/2024/sep/05/universities-fossil-fuel-funding-green-energy

Study: https://doi.org/10.1002/wcc.904

Tags

- Jake Lowe

- Fossilindustrie

- MIT Energy Initiative

- American Petroleum Institute

- disinformation

- Emily Eaton

- Campus Climate Network

- Princeton University’s Carbon Mitigation Initiative

- Jennie Stephens

- Fossil fuel industry influence in higher education: A review and a research agenda

- Geoffrey Supran

- BP

- Favourability towards natural gas relates to funding source of university energy centres

- climate obstructionism in.higher education

- negative emission technologies

- Data for Progress

- Accountable Allies: The Undue Influence of Fossil Fuel Money in Academia

- Exxon

- by: Dharma Noor

-

- Jun 2024

- May 2024

-

www.liberation.fr

-

For a new study, lawsuits against climate washing, that is, against false statements by companies and organizations about the emissions they cause, were catalogued. Globally, these cases have increased enormously in recent years, with the number of cases growing fastest around the end of Donald Trump's term in office in the USA. The convictions handed down so far are leading to changes at companies because of the costs involved.

-

-

www.liberation.fr

-

Before a French Senate committee of inquiry, TotalEnergies CEO Pouyanné used Big Oil's standard arguments to defend himself against the accusation of driving global heating: the company merely satisfies demand for fossil energy, is cutting CO2 emissions from extraction, also invests in renewable energy, and is indispensable for carrying out the energy transition. https://www.liberation.fr/environnement/climat/totalenergies-interroge-au-senat-patrick-pouyanne-defend-ses-investissements-dans-le-fossile-20240429_Z5PVIU62UZDR3N2IKVRVW3UXJA/

-

-

www.carbonbrief.org

-

Below, Carbon Brief walks through why all of these claims are, at best, highly misleading and, at worst, completely false – and examines how sections of the media have reported criticism of Labour’s promise to end new oil and gas licences

Close analysis of a disinformation campaign: conservative media accompany Sunak's announcement that he will maximize the exploitation of fossil fuels in the North Sea with a journalistic broadside against alleged Labour plans to stop issuing oil and gas licences for the North Sea, and against the supposedly disastrous economic consequences of those plans. Labour is linked to Just Stop Oil in order to discredit the party. https://www.carbonbrief.org/factcheck-why-banning-new-north-sea-oil-and-gas-is-not-a-just-stop-oil-plan/

-

-

www.desmog.com

-

In the USA there has long been a network in which PR firms work together with fossil fuel companies. Among other things, faked campaigns and the founding of environmental groups are used to conceal the actual stakeholders behind the disinformation. A hearing of a House of Representatives subcommittee exposed the practices of PR firms. https://www.desmog.com/2022/09/14/house-committee-pr-climate-disinformation/

Tags

- Story Partners

- 2022-09-14

- Robert Brulle

- by: Nick Cunningham

- disinformation

- Chevron

- Melissa Aronczyk

- FTI Consulting

- USA

- PAC\/West

- Clean Creatives

- Events/2022-09/Hearing des Unterausschusses Oversight & Investigations des Ausschusses für Natural Resources des US-Repräsentantenhauses

- Carter Werthman

- Christine Arena

- Anadarko Petroleum

- Noble Energy

- Duncan Meisel

-

-

www.nytimes.com

-

Executives of the major US fossil fuel companies privately played down their firms' public statements on decarbonization and, in their public relations work, acted against the policies they officially endorsed. This emerges from documents whose release was compelled by the House Committee on Oversight and Reform. https://www.nytimes.com/2022/09/14/climate/oil-industry-documents-disinformation.html

-

-

www.theguardian.com

-

In Chile, a progressive draft constitution was rejected, partly because of a massive disinformation campaign financed by the super-rich. https://www.theguardian.com/commentisfree/2022/sep/06/chile-new-constitution-reject-pinochet

-

-

www.washingtonpost.com

-

A study by Avaaz shows that Facebook contributes substantially to the spread of false information on climate issues. The fact that Facebook does not fact-check politicians' posts has a particularly damaging effect. https://www.washingtonpost.com/politics/2021/04/23/technology-202-researchers-warn-misinformation-facebook-threatens-undermine-biden-climate-agenda/

-

-

www.liberation.fr

-

www.nytimes.com

-

Maui County sued the fossil fuel companies ExxonMobil and Chevron in 2020, holding them responsible for environmental changes that, among other things, favor wildfires. ExxonMobil was also accused of decades of disinforming the public. The lawsuit could make it easier to claim damages after the catastrophic fires. https://www.nytimes.com/2023/08/18/climate/maui-fires-lawsuit.html

-

-

www.nytimes.com

-

www.theguardian.com

-

www.spiegel.de

-

www.liberation.fr

-

www.theguardian.com

-

George Monbiot on Sunak's decision to maximize oil and gas production in the North Sea. He speaks of the pollution paradox: the companies that harm the planet most have the strongest reasons to invest in politics and disinformation. He sees the recourse to CCS as an indicator of disinformation. The Conservatives receive large donations from the fossil fuel industry. Sunak is to blame for the CO<sub>2</sub> price in the UK now being only half as high as in the EU. https://www.theguardian.com/commentisfree/2023/aug/01/rishi-sunak-north-sea-planet-climate-crisis-plutocrats

-

-

www.theguardian.com

-

In Myanmar, a journalist was sentenced to 20 years in prison for reporting on the aftermath of Cyclone Mocha in May. The storm claimed at least 148 lives. The damage hit the Rohingya minority hardest. https://www.theguardian.com/world/2023/sep/07/myanmar-journalist-jailed-for-20-years-for-cyclone-coverage

-

-

www.theguardian.com

-

The Guardian has obtained a detailed document on the PR and disinformation strategy of the United Arab Emirates and COP28 president Sultan Al Jaber. The so-called sensitive points include the expansion of the Emirates' oil and gas production. At the center of the strategy is evidently the effort to address the burning of fossil fuels as little as possible. https://www.theguardian.com/environment/2023/aug/01/leak-uae-presidency-un-climate-summit-oil-gas-emissions-yemen

-

-

www.liberation.fr

-

Current information from meteorologists and climate scientists about heatwaves is increasingly and systematically met online with insults and threats. In Libération, meteorologists from the French weather service and from a private website report on insults and the growing aggressiveness of hate posters.

Post by Alexandre Slowik: https://meteo-express.com/article/quand-parler-de-canicule-declenche-lhysterie-des-sceptiques

-

-

www.politico.com

-

www.theguardian.com

-

States dependent on livestock farming have for years put heavy pressure on the FAO to withhold research findings on methane emissions from livestock. Important reports were not published. The full extent of greenhouse gas emissions from livestock farming was probably also deliberately not presented. https://www.theguardian.com/environment/2023/oct/20/ex-officials-at-un-farming-fao-say-work-on-methane-emissions-was-censored

Livestock's Long Shadow: https://www.fao.org/3/a0701e/a0701e.pdf

Tags

- actor: meat industry

- 2023-10-20

- actor: FAO

- expert: Henning Steinfeld

- by: Arthur Neslen

- expert: Gert Jan Nabuurs.

- disinformation

- topic: lobbying

- expert: Jennifer Jacquet

- event: FAO Global Conference on Sustainable Livestock Transformation

- report: Livestock’s Long Shadow (LLS)

- expert: Matthew Hayek

- actor: UN

-

-

-

In the taz, Sara Schurmann sets out 7 steps toward adequate climate reporting:

- Provide context

- Consider climate always and everywhere

- Acknowledge structural problems

- Enable newsrooms to build more expertise

- Put delay narratives in context

- Avoid "false balance"

- Report critically on solutions as well

Her article is part of a "Climate and Media" focus series. https://taz.de/7-Schritte-fuer-Redaktionen/!5956911/

-

-

milano.repubblica.it

-

The editor-in-chief of la Repubblica, Maurizio Molinari, is betting on solutions-oriented climate journalism. Articles on climate topics that describe solutions, he says, are also read by younger people. Report on a discussion about climate journalism in Milan. https://milano.repubblica.it/cronaca/2023/11/18/news/rapporto_tra_scienza_e_giornalismo_molinari_le_soluzioni_sono_il_futuro_dell_informazione_sul_cambiamento_climatico-420686220/

-

-

www.theguardian.com

-

The US Senate Budget Committee debated a report on climate disinformation by the oil and gas industry. The report details how the public was manipulated over decades. By now, it says, "deception, disinformation and doublespeak" have replaced climate denial. In the debate, Republican senators used the traditional rhetoric of climate denial. https://www.theguardian.com/us-news/2024/may/01/big-oil-danger-disinformation-fossil-fuels

Report: https://www.budget.senate.gov/imo/media/doc/fossil_fuel_report1.pdf

Tags

- expert: Geoffrey Supran

- institution: Center for Climate Integrity

- disinformation

- 2024-05-01

- actor: Republican Party

- country: USA

- event: Debate about climate disinformation in the US senate April 2024

- report: Denial, Disinformation and Doublespeak

- expert: Kert Davies

- actor: American Petroleum Institute

- NGO: Climate Defiance

-

-

www.theguardian.com

-

Amy Westervelt and Kyle Pope concisely and aptly summarize the fossil fuel industry's most important disinformation tactics: the fossil fuel industry as guarantor of energy security; the opposition of economy and environment; consumers need fossil energy for their standard of living; fossil fuel industries are part of the solution, not the problem; fossil fuel industries as benefactors and sponsors.

https://www.theguardian.com/us-news/2024/apr/14/climate-disinformation-explainer

-

-

www.theguardian.com

-

Executives of the major American oil companies privately admitted, even after 2015, that they had downplayed the dangers of fossil fuels. They publicly supported international plans against global heating while communicating internally that their corporate policy contradicted those plans. And they lobbied against political measures that they officially backed. All this emerges from the new report by the US Congress on Big Oil's disinformation policy. Detailed report with information on possible legal consequences. https://www.theguardian.com/us-news/2024/apr/30/big-oil-climate-crisis-us-senate-report

-

- Mar 2024

-

www.theguardian.com

-

In an interview with the Guardian, John Kerry, still serving as US climate envoy, speaks out sharply against disinformation and demagoguery. In a phase in which politics is about a fight for progress or a fight for delay, he says, they are being deployed deliberately. The Biden administration's climate policy, he says, is guided exclusively by science. If the backlash in climate policy that many are currently pushing were to happen, the result would be a catastrophe for billions and global insecurity. https://www.theguardian.com/environment/2024/feb/28/populism-imperilling-global-fight-against-climate-breakdown-says-john-kerry

-

-

www.theguardian.com

- Feb 2024

-

www.liberation.fr

-

Climate researcher Michael Mann has won a defamation suit against two climate deniers. He was awarded more than one million dollars in damages. In its report, Libération briefly addresses the markedly growing number of campaigns against researchers in the USA. The New York Times report shows that global heating is still not treated as fact in large parts of the US public. https://www.liberation.fr/environnement/climat/etats-unis-deux-climatosceptiques-condamnes-a-un-million-de-dollars-pour-avoir-diffame-un-chercheur-20240212_FSHPNQRKV5DORO6CAX54GZLGUU/

-

- Jan 2024

-

bylinetimes.com

-

“Information has become a battlespace, like naval or aerial”, Carl Miller, Research Director of the Centre for the Analysis of Social Media, once explained to me. Information warfare impacts how information is used, shared and amplified. What matters for information combatants is not the truth, reliability, relevance, contextuality or accuracy of information, but its strategic impact on the battlespace; that is, how well it manipulates citizens into adopting desired actions and beliefs.

Information battlespace

Sharing “information” not to spread truth, but to influence behavior

-

-

www.repubblica.it

-

Disinformation about global heating has shifted from climate denial toward sowing doubt about possible solutions. According to a new study, key strategies on YouTube are downplaying the negative consequences, generating distrust of climate research and, above all, claiming that existing technical solutions are not practicable. Conspiracy theories such as the Great Reset are also invoked. https://www.repubblica.it/green-and-blue/2024/01/17/news/negazionismo_climatico_youtube-421894897/

Study: https://counterhate.com/wp-content/uploads/2024/01/CCDH-The-New-Climate-Denial_FINAL.pdf

-

-

www.theguardian.com

-

Edelman worked intensively with the Charles Koch Foundation in 2022. Edelman, the world's largest PR firm, had repeatedly pledged not to support climate denial. The Koch brothers, an important part of the American fossil fuel industry, have promoted climate denial for decades and continue to do so. There are indications of further links between Edelman and Koch Industries. https://www.theguardian.com/us-news/2024/jan/14/edelman-charles-koch-foundation-climate

-

-

www.theguardian.com

-

The American fossil fuel industry is spending an eight-figure sum on a campaign aimed at further expanding production, also exploiting the Gaza crisis. The campaign relies mainly on disinformation strategies of the so-called climate delayers.

https://www.theguardian.com/us-news/2024/jan/10/oil-ads-lights-on-energy

-

- Dec 2023

-

www.desmog.com

-

Prominent influencers contributed to the PR for the United Arab Emirates during COP28, and almost none of them named their clients. Some of them later stated that they had been misused for greenwashing the Emirates. https://www.desmog.com/2023/12/14/instagram-influencers-paid-to-boost-uaes-climate-credential-over-cop28/

-

-

www.desmog.com

-

Documentation of the PR firms that were tasked, in the run-up to COP28, with portraying Sultan Al Jaber, the United Arab Emirates and Adnoc as champions of an ecological transition. The article's sources include the disclosures that the US Department of Justice requires of foreign agents. https://www.desmog.com/2023/12/09/inoculate-from-criticism-a-closer-look-at-the-public-relations-companies-active-at-cop28/

-

-

www.derstandard.at

- Nov 2023

-

www.semanticscholar.org

-

Resilience to Online Disinformation: A Framework for Cross-National Comparative Research

This study is a secondary source, as it compares data from existing research. The authors compare several studies on disinformation to develop a theoretical framework that discusses the prevalence of disinformation and the measurable conditions responsible for its spread. Confirmation bias and motivated reasoning are two major factors in its consumption and propagation. Other factors include social bots, which spread false political information and produce misrepresented viewpoints, false information from strategic actors, and the phenomenon of naive realism, which rejects views opposed to one's own. The accumulation of massive amounts of manipulated information, combined with techniques and platforms, contributes to information pollution. Differences in resilience to disinformation between countries are explained through the number and values of the framework indices. Results fall into three clusters: one country group with high resilience to disinformation, marked by consensus political systems, strong welfare states, and pronounced democratic corporatism, and two groups with low resilience, identified by high levels of polarization, populist communication, and social media news use, low trust levels, low shared media consumption, and a politicized, fragmented environment. Frameworks, principal components factor analysis, and graph indices were used in the study to support and interpret the results.

-

- Sep 2023

-

docdrop.org

-

that's that is the Dirty Little Secret 00:12:08 of where we're at right now with Americans at each other's throats politically it's being created caused on purpose by the Chinese and the Russians who are manipulating people 00:12:22 through um use of phony websites and other disinformation campaigns being run which is a type of warfare that's being run 00:12:34 against the American people and they're falling for it

- for: example, example - internet flaws, polarization, disinformation,, example - polarization, political interference - Russia, political interference - China

- example: polarization, internet flaws

-

- Jul 2023

-

www.stockholmresilience.org

-

Climate misinformation in a climate of misinformation

- Title

-

- May 2023

-

docs.google.com

-

Dave Troy is a US investigative journalist, looking at the US infosphere. Places resistance against disinformation not as a matter of factchecking and technology but one of reshaping social capital and cultural network topologies.

Early work by Valdis Krebs comes to mind vgl [[Netwerkviz en people nav 20091112072001]] and how the Finnish 'method' seemed to be a mix of [[Crap detection is civic duty 2018010073052]] and social capital aspects. Also re taking an algogen text as is / stand alone artefact vs seeing its provenance and entanglement with real world events, people and things.

-

-

-

A report by environmental organizations and the Center for Countering Digital Hate finds that Google still earns a lot of money from ads placed alongside content by climate deniers. In 2021 Google had promised to forgo such advertising. https://www.nytimes.com/2023/05/02/technology/google-youtube-disinformation-climate-change.html

-

- Mar 2023

-

www.ndss-symposium.org

- Feb 2023

-

www.science.org

-

a peer-reviewed article

This peer-reviewed article, titled "The Safety of COVID-19 Vaccinations—We Should Rethink the Policy", uses the mishandling of data provided by scientists to spread disinformation claiming that the Covid-19 vaccine is killing people. This is an example of disinformation because the study is peer reviewed, so the people involved in it are well educated and versed in the development and usage of the vaccine.

-

- Jan 2023

-

pubmed.ncbi.nlm.nih.gov

-

Zika virus as a cause of birth defects: Were the teratogenic effects of Zika virus missed for decades?

Although it is not possible to prove definitively that ZIKV had teratogenic properties before 2013, several pieces of evidence support the hypothesis that its teratogenicity had been missed in the past. These findings emphasize the need for further investments in global surveillance for emerging infections and for birth defects so that infectious teratogens can be identified more expeditiously in the future.

-

-

archive.org

-

The REVELATIONS about the possible complicity of the Bulgarian secret police in the shooting of the Pope have produced a grudging admission, even in previously skeptical quarters, that the Soviet Union may be involved in international terrorism. Some patterns have emerged in the past few years that tell us something about the extent to which the Kremlin may use terrorism as an instrument of policy. A great deal of information has lately come to light, some of it accurate, some of it not. One of the most interesting developments appears to be the emergence of a close working relationship between organized crime (especially drug smugglers and dealers) and some of the principal groups in the terrorist network.

-

-

www.sciencedirect.com

-

Highlights

- We exploit language differences to study the causal effect of fake news on voting.

- Language affects exposure to fake news.

- German-speaking voters from South Tyrol (Italy) are less likely to be exposed to misinformation.

- Exposure to fake news favours populist parties regardless of prior support for populist parties.

- However, fake news alone cannot explain most of the growth in populism.

-

-

www.science.org

-

Results for the YouTube field experiment (study 7), showing the average percent increase in manipulation techniques recognized in the experimental (as compared to control) condition. Results are shown separately for items (headlines) 1 to 3 for the emotional language and false dichotomies videos, as well as the average scores for each video and the overall average across all six items. See Materials and Methods for the exact wording of each item (headline). Error bars show 95% confidence intervals.

-

-

www.sciencedirect.com

-

Who falls for fake news? Psychological and clinical profiling evidence of fake news consumers

Participants with a schizotypal, paranoid, and histrionic personality were ineffective at detecting fake news. They were also more vulnerable to suffer its negative effects. Specifically, they displayed higher levels of anxiety and committed more cognitive biases based on suggestibility and the Barnum Effect. No significant effects on psychotic symptomatology or affective mood states were observed. Corresponding to these outcomes, two clinical and therapeutic recommendations related to the reduction of the Barnum Effect and the reinterpretation of digital media sensationalism were made. The impact of fake news and possible ways of prevention are discussed.

Fake news and personality disorders

The observed relationship between fake news and levels of schizotypy was consistent with previous scientific evidence on pseudoscientific beliefs and magical ideation (see Bronstein et al., 2019; Escolà-Gascón, Marín, et al., 2021). Following the dual process theory model (e.g., Pennycook & Rand, 2019), when a person does not correctly distinguish between information with scientific arguments and information without scientific grounds it is because they predominantly use cognitive reasoning characterized by intuition (e.g., Dagnall, Drinkwater, et al., 2010; Swami et al., 2014; Dagnall et al., 2017b; Williams et al., 2021).

Concomitantly, intuitive thinking correlates positively with magical beliefs (see Šrol, 2021). Psychopathological classifications include magical beliefs as a dimension of schizotypal personality (e.g., Escolà-Gascón, 2020a). Therefore, it is possible that the high schizotypy scores in this study can be explained from the perspective of dual process theory (Denovan et al., 2018; Denovan et al., 2020; Drinkwater, Dagnall, Denovan, & Williams, 2021). Intuitive thinking could be the moderating variable that explains why participants who scored higher in schizotypy did not effectively detect fake news.

Something similar happened with the subclinical trait of paranoia. This variable scored the highest in both group 1 and group 2 (see Fig. 1). Intuition is also positively related to conspiratorial ideation (see Drinkwater et al., 2020; Gligorić et al., 2021). Similarly, psychopathology tends to classify conspiracy ideation as a frequent belief system in paranoid personality (see Escolà-Gascón, 2022). This is because conspiracy beliefs are based on systematic distrust of the systems that structure society (political system), knowledge (science) and economy (capitalism) (Dagnall et al., 2015; Swami et al., 2014). Likewise, it is known that distrust is the transversal characteristic of paranoid personality (So et al., 2022). Then, in this case the use of intuitive thinking and dual process theory could also justify the obtained paranoia scores. The same is not true for the histrionic personality.

The Barnum Effect

The Barnum Effect consists of accepting as exclusive a verbal description of an individual's personality when the description employs contents applicable or generalizable to any profile or personality that one wishes to describe (see Boyce & Geller, 2002; O’Keeffe & Wiseman, 2005). The error of this bias is to assume as exclusive or unique information that is not. This error can occur in other contexts not limited to personality descriptions. Originally, this bias was studied in the field of horoscopes and pseudosciences (see Matute et al., 2011). Research results suggest that people who do not effectively detect fake news regularly commit the Barnum Effect. So, one way to prevent fake news may be to educate about what the Barnum Effect is and how to avoid it.

Conclusions

The conclusions of this research can be summarized as follows: (1) The evidence obtained suggests that profiles with high scores in schizotypy, paranoia and histrionism are more vulnerable to the negative effects of fake news. In clinical practice, special caution is recommended for patients who meet the symptomatic characteristics of these personality traits.

(2) In psychiatry and clinical psychology, it is proposed to combat fake news by reducing or recoding the Barnum effect, reinterpreting sensationalism in the media and promoting critical thinking in social network users. These suggestions can be applied from intervention programs but can also be implemented as psychoeducational programs for massive users of social networks.

(3) Individuals who do not effectively detect fake news tend to have higher levels of anxiety, both state and trait anxiety. These individuals are also highly suggestible and tend to seek strong emotions. Profiles of this type may inappropriately employ intuitive thinking, which could be the psychological mechanism that.

(4) Positive psychotic symptomatology, affective mood states and substance use (addiction risks) were not affected by fake news. In the field of psychosis, it should be analyzed whether fake news influences negative psychotic symptomatology.

-

-

euvsdisinfo.eu euvsdisinfo.eu

-

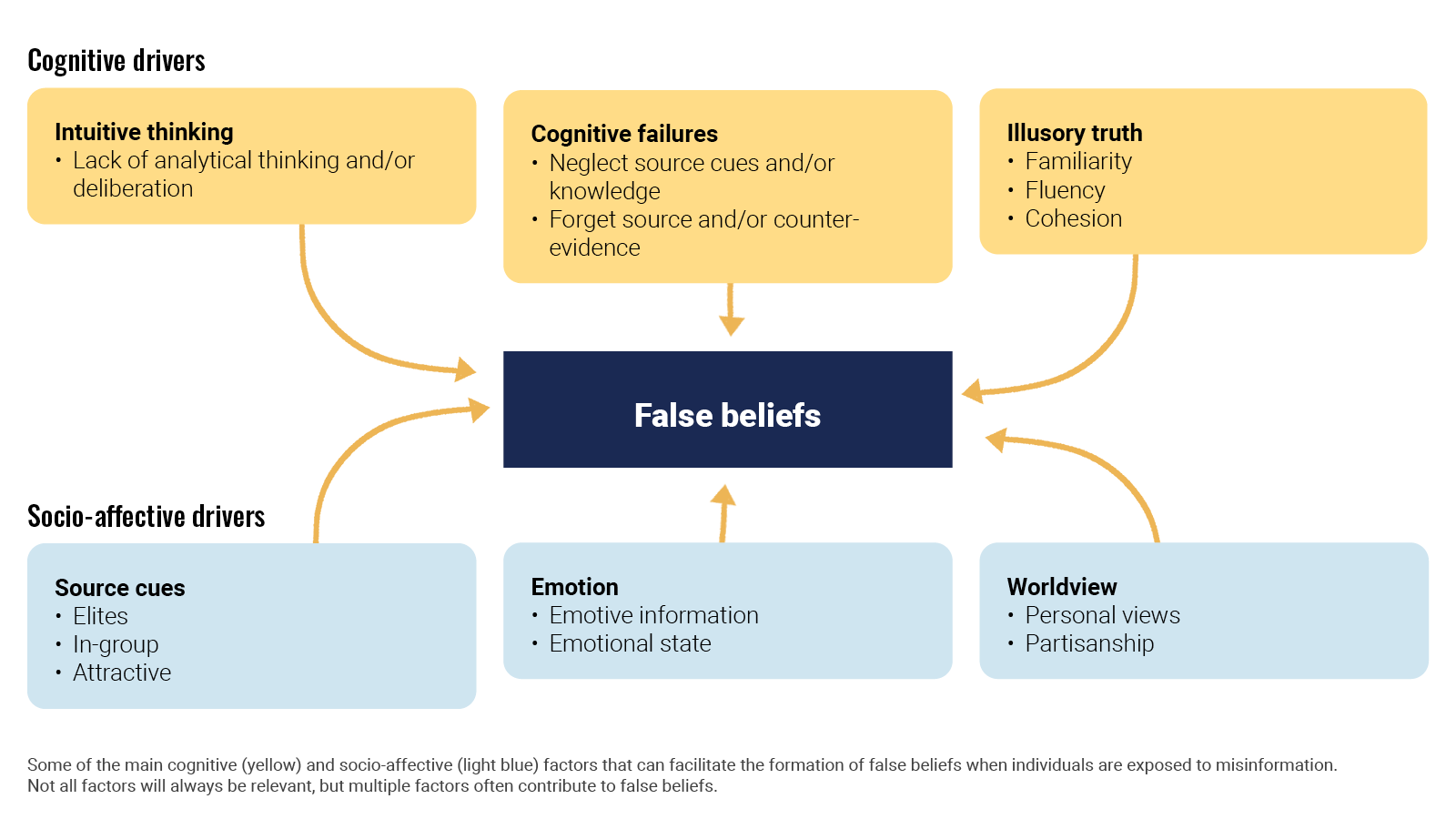

The uptake of mis- and disinformation is intertwined with the way our minds work. The large body of research on the psychological aspects of information manipulation explains why.

In an article for Nature Reviews Psychology, Ullrich K. H. Ecker et al. looked at the cognitive, social, and affective factors that lead people to form or even endorse misinformed views. Ironically enough, false beliefs generally arise through the same mechanisms that establish accurate beliefs. It is a mix of cognitive drivers like intuitive thinking and socio-affective drivers. When deciding what is true, people are often biased to believe in the validity of information and to trust their intuition instead of deliberating. Also, repetition increases belief in both misleading information and facts.

Ecker, U.K.H., Lewandowsky, S., Cook, J. et al. (2022). The psychological drivers of misinformation belief and its resistance to correction.

Going a step further, Álex Escolà-Gascón et al investigated the psychopathological profiles that characterise people prone to consuming misleading information. After running a number of tests on more than 1,400 volunteers, they concluded that people with high scores in schizotypy (a condition not too dissimilar from schizophrenia), paranoia, and histrionism (more commonly known as dramatic personality disorder) are more vulnerable to the negative effects of misleading information. People who do not detect misleading information also tend to be more anxious, suggestible, and vulnerable to strong emotions.

-

-

www.nytimes.com www.nytimes.com

-

The military has long engaged in information warfare against hostile nations -- for instance, by dropping leaflets and broadcasting messages into Afghanistan when it was still under Taliban rule. But it recently created the Office of Strategic Influence, which is proposing to broaden that mission into allied nations in the Middle East, Asia and even Western Europe. The office would assume a role traditionally led by civilian agencies, mainly the State Department.

US IO capacity was poor and the campaigns were ineffective.

-

-

www.ids.ac.uk www.ids.ac.uk

-

Indeed ‘anti-vaccination rumours’ have been defined as a major threat to achieving vaccine coverage goals. This is demonstrated in this paper through a case study of responses to the Global Polio Eradication Campaign (GPEI) in northern Nigeria where Muslim leaders ordered the boycott of the Oral Polio Vaccine (OPV). A 16-month controversy resulted from their allegations that the vaccines were contaminated with anti-fertility substances and the HIV virus was a plot by Western governments to reduce Muslim populations worldwide.

-

- Dec 2022

-

historymatters.gmu.edu historymatters.gmu.edu

-

Protestant Paranoia: The American Protective Association Oath

In 1887, Henry F. Bowers founded the nativist American Protective Association (APA) in Clinton, Iowa. Bowers was a Mason, and he drew from its fraternal ritual—elaborate regalia, initiation ceremonies, and a secret oath—in organizing the APA. He also drew many Masons, an organization that barred Catholics. The organization quickly acquired an anti-union cast. Among other things, the APA claimed that the Catholic leader of the Knights, Terence V. Powderly, was part of a larger conspiracy against American institutions. Even so, the APA successfully recruited significant numbers of disaffected trade unionists in an era of economic hard times and the collapse of the Knights of Labor. This secret oath taken by members of the American Protective Association in the 1890s revealed the depth of Protestant distrust and fear of Catholics holding public office.

I do most solemnly promise and swear that I will always, to the utmost of my ability, labor, plead and wage a continuous warfare against ignorance and fanaticism; that I will use my utmost power to strike the shackles and chains of blind obedience to the Roman Catholic church from the hampered and bound consciences of a priest-ridden and church-oppressed people; that I will never allow any one, a member of the Roman Catholic church, to become a member of this order, I knowing him to be such; that I will use my influence to promote the interest of all Protestants everywhere in the world that I may be; that I will not employ a Roman Catholic in any capacity if I can procure the services of a Protestant.

I furthermore promise and swear that I will not aid in building or maintaining, by my resources, any Roman Catholic church or institution of their sect or creed whatsoever, but will do all in my power to retard and break down the power of the Pope, in this country or any other; that I will not enter into any controversy with a Roman Catholic upon the subject of this order, nor will I enter into any agreement with a Roman Catholic to strike or create a disturbance whereby the Catholic employes may undermine and substitute their Protestant co-workers; that in all grievances I will seek only Protestants and counsel with them to the exclusion of all Roman Catholics, and will not make known to them anything of any nature matured at such conferences.

I furthermore promise and swear that I will not countenance the nomination, in any caucus or convention, of a Roman Catholic for any office in the gift of the American people, and that I will not vote for, or counsel others to vote for, any Roman Catholic, but will vote only for a Protestant, so far as may lie in my power. Should there be two Roman Catholics on opposite tickets, I will erase the name on the ticket I vote; that I will at all times endeavor to place the political positions of this government in the hands of Protestants, to the entire exclusion of the Roman Catholic church, of the members thereof, and the mandate of the Pope.

To all of which I do most solemnly promise and swear, so help me God. Amen.

Source: "The Secret Oath of the American Protective Association, October 31, 1893," in Michael Williams, The Shadow of the Pope (New York: McGraw-Hill Book Co., Inc., 1932), 103–104. Reprinted in John Tracy Ellis, ed., Documents of American Catholic History (Milwaukee: The Bruce Publishing Company, 1956), 500–501.

-

-

www.nytimes.com www.nytimes.com

-

In 1988, when polio was endemic in 125 countries, the annual assembly of national health ministers, meeting in Geneva, declared their intent to eradicate polio by 2000. That target was missed, but a $3 billion campaign had it contained in six countries by early 2003.

-

-

www.danielpipes.org www.danielpipes.org

-

The polio-vaccine conspiracy theory has had direct consequences: Sixteen countries where polio had been eradicated have in recent months reported outbreaks of the disease – twelve in Africa (Benin, Botswana, Burkina Faso, Cameroon, Central African Republic, Chad, Ethiopia, Ghana, Guinea, Mali, Sudan, and Togo) and four in Asia (India, Indonesia, Saudi Arabia, and Yemen). Yemen has had the largest polio outbreak, with more than 83 cases since April. The WHO calls this "a major epidemic."

-

-

arxiv.org arxiv.org

-

analyze the content of 69,907 headlines produced by four major global media corporations during a minimum of eight consecutive months in 2014. In order to discover strategies that could be used to attract clicks, we extracted features from the text of the news headlines related to the sentiment polarity of the headline. We discovered that the sentiment of the headline is strongly related to the popularity of the news and also with the dynamics of the posted comments on that particular news

-

-

www.sciencedirect.com www.sciencedirect.com

-

On the one hand, conspiracy theorists seem to disregard accuracy; they tend to endorse mutually incompatible conspiracies, think intuitively, use heuristics, and hold other irrational beliefs. But by definition, conspiracy theorists reject the mainstream explanation for an event, often in favor of a more complex account. They exhibit a general distrust of others and expend considerable effort to find ‘evidence’ supporting their beliefs. In searching for answers, conspiracy theorists likely expose themselves to misleading information online and overestimate their own knowledge. Understanding when elaboration and cognitive effort might backfire is crucial, as conspiracy beliefs lead to political disengagement, environmental inaction, prejudice, and support for violence.

-

-

www.nature.com www.nature.com

-

. Furthermore, our results add to the growing body of literature documenting—at least at this historical moment—the link between extreme right-wing ideology and misinformation8,14,24 (although, of course, factors other than ideology are also associated with misinformation sharing, such as polarization25 and inattention17,37).

Misinformation exposure and extreme right-wing ideology appear associated in this report. Others find that it is partisanship that predicts susceptibility.

-

And finally, at the individual level, we found that estimated ideological extremity was more strongly associated with following elites who made more false or inaccurate statements among users estimated to be conservatives compared to users estimated to be liberals. These results on political asymmetries are aligned with prior work on news-based misinformation sharing

This suggests the misinformation sharing elites may influence whether followers become more extreme. There is little incentive not to stoke outrage as it improves engagement.

-

In the co-share network, a cluster of websites shared more by conservatives is also shared more by users with higher misinformation exposure scores.

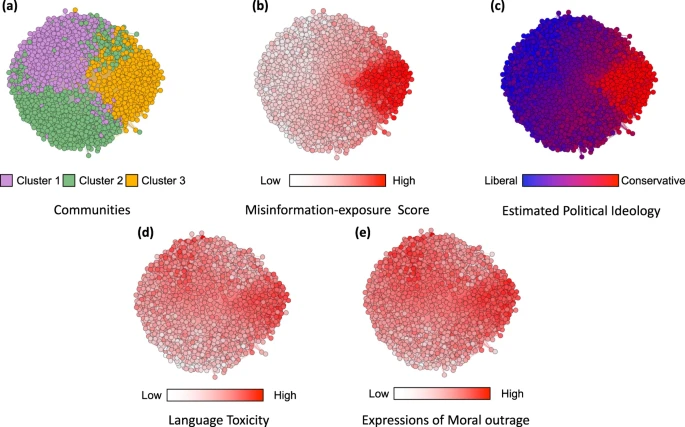

Nodes represent website domains shared by at least 20 users in our dataset and edges are weighted based on common users who shared them. a Separate colors represent different clusters of websites determined using community-detection algorithms29. b The intensity of the color of each node shows the average misinformation-exposure score of users who shared the website domain (darker = higher PolitiFact score). c Nodes’ color represents the average estimated ideology of the users who shared the website domain (red: conservative, blue: liberal). d The intensity of the color of each node shows the average use of language toxicity by users who shared the website domain (darker = higher use of toxic language). e The intensity of the color of each node shows the average expression of moral outrage by users who shared the website domain (darker = higher expression of moral outrage). Nodes are positioned using directed-force layout on the weighted network.
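The network construction described in the caption (nodes are domains shared by at least 20 users, edges weighted by the users two domains have in common) can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the input format `shares`, the function name `build_coshare_network`, and the parameter `min_users` are assumptions made for the example, and the community detection and force-directed layout steps are not covered.

```python
from collections import defaultdict
from itertools import combinations


def build_coshare_network(shares, min_users=20):
    """Build a weighted co-share network from (user, domain) pairs.

    shares: iterable of (user_id, domain) tuples (hypothetical input format).
    min_users: keep only domains shared by at least this many distinct users.
    Returns (nodes, edges); edges maps a sorted domain pair to the number of
    users who shared both domains.
    """
    users_by_domain = defaultdict(set)
    for user, domain in shares:
        users_by_domain[domain].add(user)

    # Nodes: domains shared by enough distinct users.
    nodes = {d for d, users in users_by_domain.items() if len(users) >= min_users}

    # Edges: weight = number of users the two domains have in common.
    edges = {}
    for a, b in combinations(sorted(nodes), 2):
        common = len(users_by_domain[a] & users_by_domain[b])
        if common > 0:
            edges[(a, b)] = common
    return nodes, edges
```

With real data, `min_users=20` would match the threshold stated in the caption; a smaller value is convenient for toy inputs.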

-

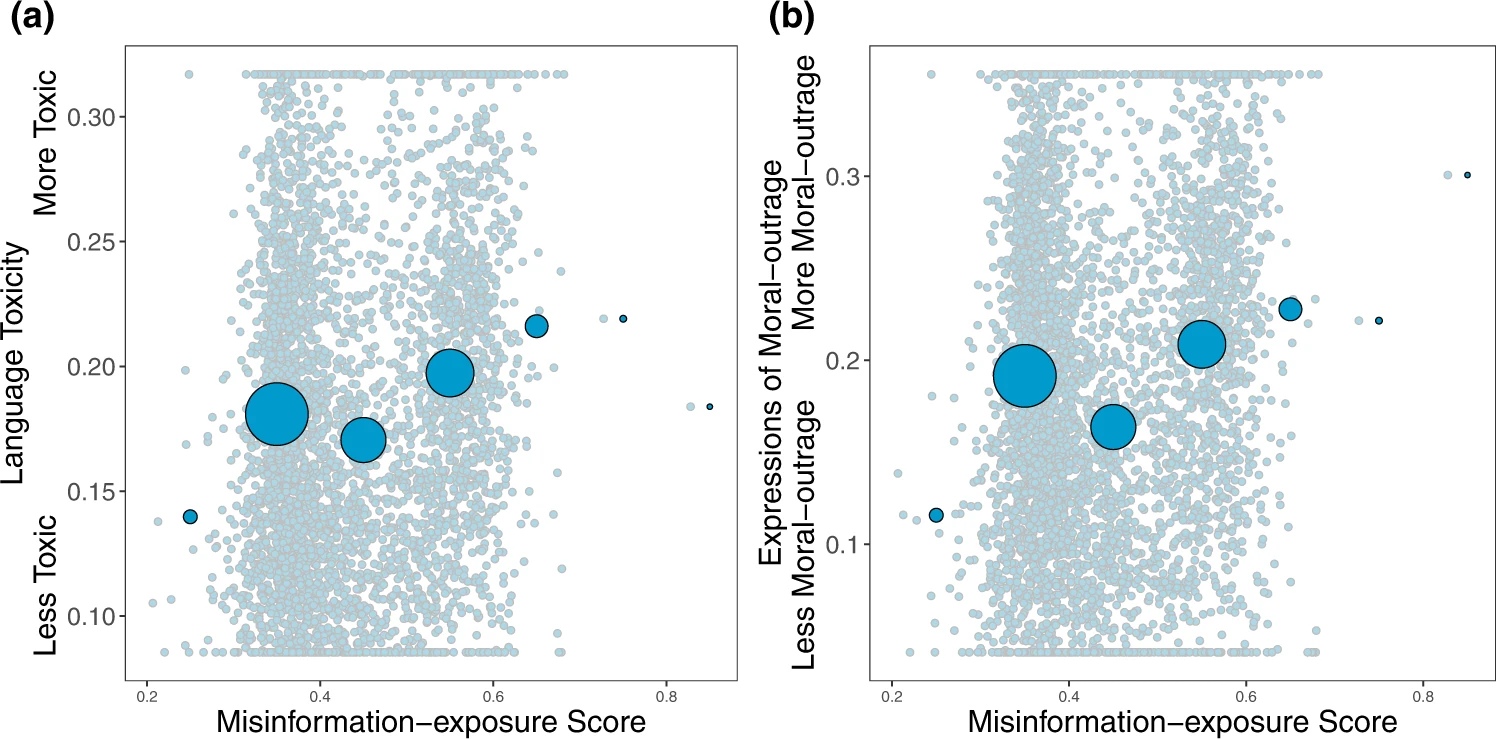

Exposure to elite misinformation is associated with the use of toxic language and moral outrage.

Shown is the relationship between users’ misinformation-exposure scores and (a) the toxicity of the language used in their tweets, measured using the Google Jigsaw Perspective API27, and (b) the extent to which their tweets involved expressions of moral outrage, measured using the algorithm from ref. 28. Extreme values are winsorized by 95% quantile for visualization purposes. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin. Source data are provided as a Source Data file.
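The two plotting steps mentioned in this caption, winsorizing extreme values at the 95% quantile and averaging within bins of width 0.1, could look something like the sketch below. It assumes that only the upper tail is capped and uses a nearest-rank quantile; the caption does not specify either detail, and the function names `winsorize_upper` and `binned_means` are invented for illustration.

```python
import math
from collections import defaultdict


def winsorize_upper(values, q=0.95):
    """Cap values above the q-th quantile (nearest-rank definition)."""
    ordered = sorted(values)
    # Nearest-rank quantile: smallest value with at least a share q of the data at or below it.
    rank = max(1, math.ceil(q * len(ordered)))
    cap = ordered[rank - 1]
    return [min(v, cap) for v in values]


def binned_means(xs, ys, bin_width=0.1):
    """Average y within bins of x; returns {bin_index: (mean_y, count)}."""
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        # Rounding before flooring guards against floating-point noise at bin edges.
        groups[math.floor(round(x / bin_width, 9))].append(y)
    return {k: (sum(v) / len(v), len(v)) for k, v in sorted(groups.items())}
```

The count returned for each bin corresponds to what the caption encodes as dot size.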

-

We found that misinformation-exposure scores are significantly positively related to language toxicity (Fig. 3a; b = 0.129, 95% CI = [0.098, 0.159], SE = 0.015, t (4121) = 8.323, p < 0.001; b = 0.319, 95% CI = [0.274, 0.365], SE = 0.023, t (4106) = 13.747, p < 0.001 when controlling for estimated ideology) and expressions of moral outrage (Fig. 3b; b = 0.107, 95% CI = [0.076, 0.137], SE = 0.015, t (4143) = 14.243, p < 0.001; b = 0.329, 95% CI = [0.283,0.374], SE = 0.023, t (4128) = 14.243, p < 0.001 when controlling for estimated ideology). See Supplementary Tables 1, 2 for full regression tables and Supplementary Tables 3–6 for the robustness of our results.

-

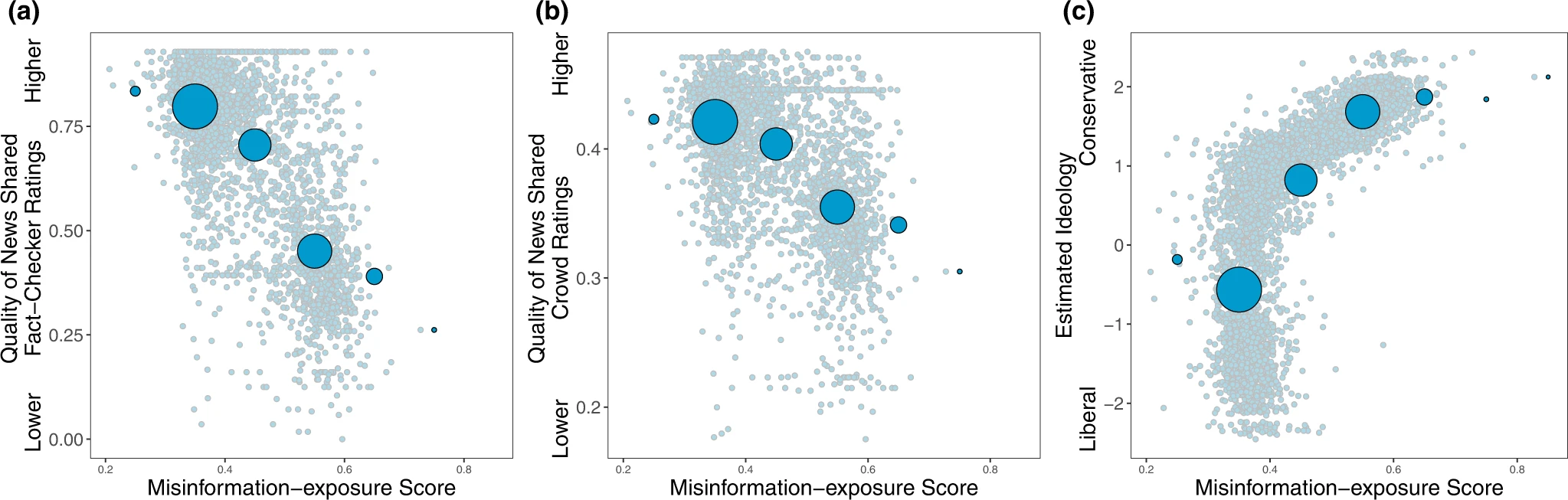

Aligned with prior work finding that people who identify as conservative consume15, believe24, and share more misinformation8,14,25, we also found a positive correlation between users’ misinformation-exposure scores and the extent to which they are estimated to be conservative ideologically (Fig. 2c; b = 0.747, 95% CI = [0.727,0.767] SE = 0.010, t (4332) = 73.855, p < 0.001), such that users estimated to be more conservative are more likely to follow the Twitter accounts of elites with higher fact-checking falsity scores. Critically, the relationship between misinformation-exposure score and quality of content shared is robust controlling for estimated ideology

-

-

www.nature.com www.nature.com

-

Exposure to elite misinformation is associated with sharing news from lower-quality outlets and with conservative estimated ideology.

Shown is the relationship between users’ misinformation-exposure scores and (a) the quality of the news outlets they shared content from, as rated by professional fact-checkers21, (b) the quality of the news outlets they shared content from, as rated by layperson crowds21, and (c) estimated political ideology, based on the ideology of the accounts they follow10. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin.

-

-

arxiv.org arxiv.org

-

Notice that Twitter’s account purge significantly impacted misinformation spread worldwide: the proportion of low-credible domains in URLs retweeted from U.S. dropped from 14% to 7%. Finally, despite not having a list of low-credible domains in Russian, Russia is central in exporting potential misinformation in the vax rollout period, especially to Latin American countries. In these countries, the proportion of low-credible URLs coming from Russia increased from 1% in vax development to 18% in vax rollout periods (see Figure 8 (b), Appendix).

-

Considering the behavior of users in no-vax communities, we find that they are more likely to retweet (Figure 3(a)), share URLs (Figure 3(b)), and especially URLs to YouTube (Figure 3(c)) than other users. Furthermore, the URLs they post are much more likely to be from low-credible domains (Figure 3(d)), compared to those posted in the rest of the networks. The difference is remarkable: 26.0% of domains shared in no-vax communities come from lists of known low-credible domains, versus only 2.4% of those cited by other users (p < 0.001). The most common low-credible websites among the no-vax communities are zerohedge.com, lifesitenews.com, dailymail.co.uk (considered right-biased and questionably sourced) and childrenshealthdefense.com (conspiracy/pseudoscience)

-

We find that, during the pandemic, no-vax communities became more central in the country-specific debates and their cross-border connections strengthened, revealing a global Twitter anti-vaccination network. U.S. users are central in this network, while Russian users also become net exporters of misinformation during vaccination roll-out. Interestingly, we find that Twitter’s content moderation efforts, and in particular the suspension of users following the January 6th U.S. Capitol attack, had a worldwide impact in reducing misinformation spread about vaccines. These findings may help public health institutions and social media platforms to mitigate the spread of health-related, low-credible information by revealing vulnerable online communities

-

-

arxiv.org arxiv.org

-

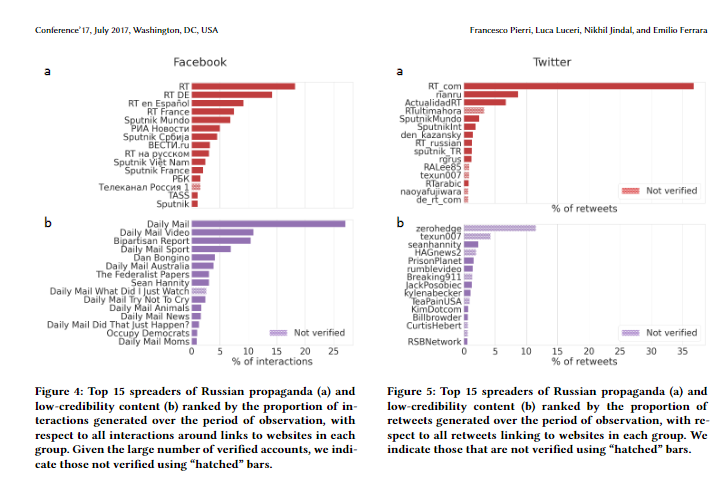

On Facebook, we identified 51,269 posts (0.25% of all posts) sharing links to Russian propaganda outlets, generating 5,065,983 interactions (0.17% of all interactions); 80,066 posts (0.4% of all posts) sharing links to low-credibility news websites, generating 28,334,900 interactions (0.95% of all interactions); and 147,841 posts sharing links to high-credibility news websites (0.73% of all posts), generating 63,837,701 interactions (2.13% of all interactions). As shown in Figure 2, we notice that the number of posts sharing Russian propaganda and low-credibility news exhibits an increasing trend (Mann-Kendall P < .001), whereas after the invasion of Ukraine both time series yield a significant decreasing trend (more prominent in the case of Russian propaganda); high-credibility content also exhibits an increasing trend in the Pre-invasion period (Mann-Kendall P < .001), which becomes stable (no trend) in the period afterward.
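For readers unfamiliar with the Mann-Kendall test cited in this passage, the following is a minimal sketch of the textbook two-sided version, using the normal approximation with a continuity correction and no correction for ties. It is not the authors' implementation, and the function name `mann_kendall` is chosen here for illustration only.

```python
import math


def mann_kendall(series):
    """Two-sided Mann-Kendall trend test (normal approximation, no tie correction).

    Returns (S, z, p). S > 0 suggests an increasing trend, S < 0 a decreasing one.
    """
    n = len(series)
    if n < 3:
        raise ValueError("need at least 3 observations")
    # S counts later-minus-earlier increases minus decreases over all pairs i < j.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    # Continuity correction: shrink |S| by one before standardizing.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p
```

Applied to a daily count of posts, a positive `S` with a small `p` would correspond to the "increasing trend" wording in the quoted text.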

-

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreaders accounts that are not verified by the platform.

-

On Twitter, the picture is very similar in the case of Russian propaganda, where all accounts are verified (with a few exceptions) and mostly associated with news outlets, and generate over 68% of all retweets linking to these websites (see panel a of Figure 4). For what concerns low-credibility news, there are both verified (we can notice the presence of seanhannity) and not verified users, and only a few of them are directly associated with websites (e.g. zerohedge or Breaking911). Here the top 15 accounts generate roughly 30% of all retweets linking to low-credibility websites.

-

Figure 5: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of retweets generated over the period of observation, with respect to all retweets linking to websites in each group. We indicate those that are not verified using “hatched” bars

-

-

ieeexplore.ieee.org ieeexplore.ieee.org

-

We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.

-

-

www.mdpi.com www.mdpi.com

-

we found that social bots played a bridge role in diffusion in the apparent directional topic like “Wuhan Lab”. Previous research also found that social bots play some intermediary roles between elites and everyday users regarding information flow [43]. In addition, verified Twitter accounts continue to be very influential and receive more retweets, whereas social bots retweet more tweets from other users. Studies have found that verified media accounts remain more central to disseminating information during controversial political events [75]. However, occasionally, even the verified accounts—including those of well-known public figures and elected officials—sent misleading tweets. This inspired us to investigate the validity of tweets from verified accounts in subsequent research. It is also essential to rely solely on science and evidence-based conclusions and avoid opinion-based narratives in a time of geopolitical conflict marked by hidden agendas, disinformation, and manipulation [76].

-

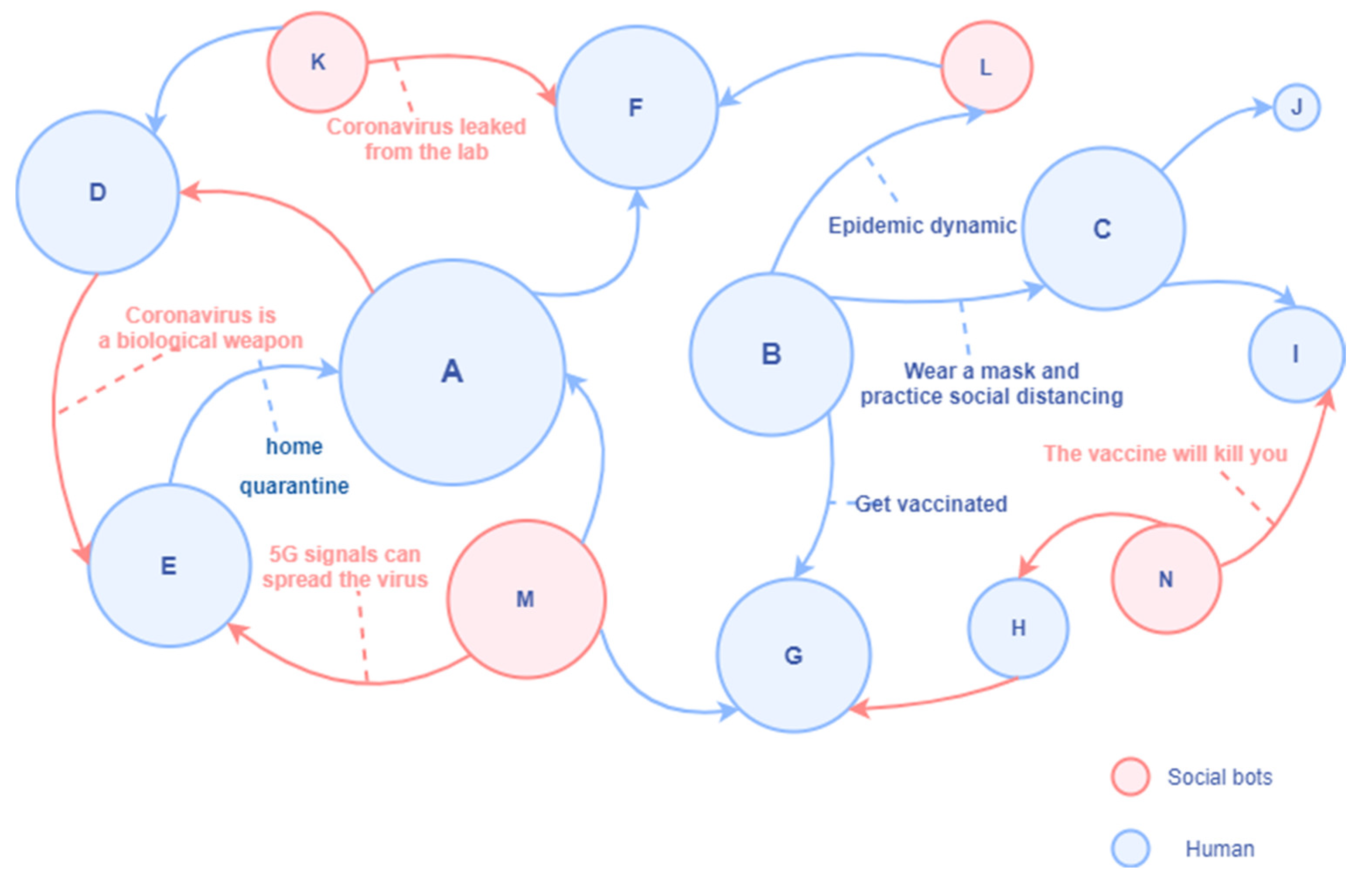

In Figure 6, the node represented by human A is a high-degree centrality account with poor discrimination ability for disinformation and rumors; it is easily affected by misinformation retweeted by social bots. At the same time, it will also refer to the opinions of other persuasive folk opinion leaders in the retweeting process. Human B represents the official institutional account, which has a high in-degree and often pushes the latest news, preventive measures, and suggestions related to COVID-19. Human C represents a human account with high media literacy, which mainly retweets information from information sources with high credibility. It has a solid ability to identify information quality and is not susceptible to the proliferation of social bots. Human D actively creates and spreads rumors and conspiracy theories and only retweets unverified messages that support his views in an attempt to expand the influence. Social bots K, M, and N also spread unverified information (rumors, conspiracy theories, and disinformation) in the communication network without fact-checking. Social bot L may be a social bot of an official agency.

-

-

www.universiteitleiden.nl www.universiteitleiden.nl

-

Our study of QAnon messages found a high prevalence of linguistic identity fusion indicators along with external threat narratives, violence-condoning group norms as well as demonizing, dehumanizing, and derogatory vocabulary applied to the out-group, especially when compared to the non-violent control group. The aim of this piece of research is twofold: (i.) It seeks to evaluate the national security threat posed by the QAnon movement, and (ii.) it aims to provide a test of a novel linguistic toolkit aimed at helping to assess the risk of violence in online communication channels.

-

-

www.nature.com www.nature.com

-

The style is one that is now widely recognized as a tool of sowing doubt: the author just asked ‘reasonable’ questions, without making any evidence-based conclusions. Who is the audience of this story and who could potentially be targeted by such content? As Bratich argued, 9/11 represents a prototypical case of ‘national dissensus’ among American individuals, and an apparently legitimate case for raising concerns about the transparency of the US authorities13. It is indicative that whoever designed the launch of RT US knew how polarizing it would be to ask questions about the most painful part of the recent past.

-

Conspiracy theories that provide names of the beneficiaries of political, social and economic disasters help people to navigate the complexities of the globalized world, and give simple answers as to who is right and who is wrong. If you add to this global communication technologies that help to rapidly develop and spread all sorts of conspiracy theories, these theories turn into a powerful tool to target subnational, national and international communities and to spread chaos and doubt. The smog of subjectivity created by user-generated content and the crisis of expertise have become a true gift to the Kremlin’s propaganda.

-

To begin with, the US output of RT tapped into the rich American culture of conspiracy theories by running a story entitled ‘911 questions to the US government about 9/11’

-

Instead, to counter US hegemonic narratives, the Kremlin took to systematically presenting alternative narratives and dissenting voices. Russia’s public diplomacy tool — the international television channel Russia Today — was rebranded as RT in 2009, probably to hide its clear links to the Russian government11. After an aggressive campaign to expand in English-, Spanish-, German- and French-speaking countries throughout the 2010s, the channel became the most visible source of Russia’s disinformation campaigns abroad. Analysis of its broadcasts shows the adoption of KGB approaches, as well as the use of novel tools provided by the global online environment

-

-

www.nature.com www.nature.com

-

Our results show that Fox News is reducing COVID-19 vaccination uptake in the United States, with no evidence of the other major networks having any effect. We first show that there is an association between areas with higher Fox News viewership and lower vaccinations, then provide an instrumental variable analysis to account for endogeneity, and help pin down the magnitude of the local average treatment effect.
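The reason an instrumental variable helps with endogeneity can be shown with a toy calculation. The sketch below compares a naive regression slope with the single-instrument IV (Wald) estimator, cov(z, y) / cov(z, x), on made-up numbers in which an unobserved confounder `u` drives both the exposure `x` and the outcome `y`. All names and data are hypothetical; this is not the study's specification, instrument, or data.

```python
def _cov(a, b):
    """Sample covariance of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (n - 1)


def ols_slope(x, y):
    """Naive regression slope of y on x; biased if x is confounded."""
    return _cov(x, y) / _cov(x, x)


def iv_slope(z, x, y):
    """Single-instrument IV (Wald) estimator: cov(z, y) / cov(z, x)."""
    return _cov(z, y) / _cov(z, x)


# Toy data: z is the instrument, u an unobserved confounder uncorrelated with z.
z = [0, 1, 0, 1]
u = [0, 0, 1, 1]
x = [zi + ui for zi, ui in zip(z, u)]               # exposure depends on z and u
y = [-0.5 * xi + 2.0 * ui for xi, ui in zip(x, u)]  # true effect of x on y is -0.5
```

In this constructed example the naive slope has the wrong sign, while the IV estimate recovers the effect built into the data, because the instrument moves `x` without being related to `u`.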

-

Overall, an additional weekly hour of Fox News viewership for the average household accounts for a reduction of 0.35–0.76 weekly full vaccinations per 100 people during May and June 2021. This result is not only driven by Fox News’ anti-science messaging, but also by the network’s skeptic coverage of COVID-19 vaccinations.

-

-

www.nature.com www.nature.com

-

highlights the need for public health officials to disseminate information via multiple media channels to increase the chances of accessing vaccine resistant or hesitant individuals.

-

Interestingly, while vaccine hesitant and resistant individuals in Ireland and the UK varied in relation to their social, economic, cultural, political, and geographical characteristics, both populations shared similar psychological profiles. Specifically, COVID-19 vaccine hesitant or resistant persons were distinguished from their vaccine accepting counterparts by being more self-interested, more distrusting of experts and authority figures (i.e. scientists, health care professionals, the state), more likely to hold strong religious beliefs (possibly because these kinds of beliefs are associated with distrust of the scientific worldview) and also conspiratorial and paranoid beliefs (which reflect lack of trust in the intentions of others).

-

Across the Irish and UK samples, similarities and differences emerged regarding those in the population who were more likely to be hesitant about, or resistant to, a vaccine for COVID-19. Three demographic factors were significantly associated with vaccine hesitance or resistance in both countries: sex, age, and income level. Compared to respondents accepting of a COVID-19 vaccine, women were more likely to be vaccine hesitant, a finding consistent with a number of studies identifying sex and gender-related differences in vaccine uptake and acceptance37,38. Younger age was also related to vaccine hesitance and resistance.

-

Similar rates of vaccine hesitance (26% and 25%) and resistance (9% and 6%) were evident in the Irish and UK samples, with only 65% of the Irish population and 69% of the UK population fully willing to accept a COVID-19 vaccine. These findings align with other estimates across seven European nations where 26% of adults indicated hesitance or resistance to a COVID-19 vaccine7 and in the United States where 33% of the population indicated hesitance or resistance34. Rates of resistance to a COVID-19 vaccine also parallel those found for other types of vaccines. For example, in the United States 9% regarded the MMR vaccine as unsafe in a survey of over 1000 adults35, while 7% of respondents across the world said they “strongly disagree” or “somewhat disagree” with the statement ‘Vaccines are safe’36. Thus, upwards of approximately 10% of study populations appear to be opposed to vaccinations in whatever form they take. Importantly, however, the findings from the current study and those from around Europe and the United States may not be consistent with or reflective of vaccine acceptance, hesitancy, or resistance in non-Western countries or regions.

-

There were no significant differences in levels of consumption and trust between the vaccine accepting and vaccine hesitant groups in the Irish sample. Compared to vaccine hesitant responders, vaccine resistant individuals consumed significantly less information about the pandemic from television and radio, and had significantly less trust in information disseminated from newspapers, television broadcasts, radio broadcasts, their doctor, other health care professionals, and government agencies.

-

In the Irish sample, the combined vaccine hesitant and resistant group differed most pronouncedly from the vaccine acceptance group on the following psychological variables: lower levels of trust in scientists (d = 0.51), health care professionals (d = 0.45), and the state (d = 0.31); more negative attitudes toward migrants (d’s ranged from 0.27 to 0.29); lower cognitive reflection (d = 0.25); lower levels of altruism (d’s ranged from 0.17 to 0.24); higher levels of social dominance (d = 0.22) and authoritarianism (d = 0.14); higher levels of conspiratorial (d = 0.21) and religious (d = 0.20) beliefs; lower levels of the personality trait agreeableness (d = 0.15); and higher levels of internal locus of control (d = 0.14).
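The effect sizes quoted above are Cohen's d values, i.e. mean differences expressed in pooled standard deviation units. A minimal sketch of the standard pooled-variance formula follows; the function name `cohens_d` and the sample numbers are illustrative and are not taken from the study.

```python
import math


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    mean_a, mean_b = sum(group_a) / na, sum(group_b) / nb
    # Unbiased sample variances of each group.
    var_a = sum((v - mean_a) ** 2 for v in group_a) / (na - 1)
    var_b = sum((v - mean_b) ** 2 for v in group_b) / (nb - 1)
    pooled_sd = math.sqrt(((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2))
    return (mean_a - mean_b) / pooled_sd
```

By the usual rule of thumb, values around 0.2 are read as small and around 0.5 as medium, which places the trust-in-scientists difference (d = 0.51) among the larger effects listed.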

-

-

tobaccotactics.org tobaccotactics.org

-

Undermining the Concept of Environmental Risk

By the mid-1990s, the Institute of Economic Affairs had extended its work on Risk Assessment (RA). More specifically, the head of IEA’s Environment Unit Roger Bate was interested in undermining the concept of “environmental risk”, especially in relation to key themes, such as climate change and pesticides, and second hand smoke.

-

- Nov 2022

-

theintercept.com theintercept.com

-

Under President Joe Biden, the shifting focus on disinformation has continued. In January 2021, CISA replaced the Countering Foreign Influence Task force with the “Misinformation, Disinformation and Malinformation” team, which was created “to promote more flexibility to focus on general MDM.” By now, the scope of the effort had expanded beyond disinformation produced by foreign governments to include domestic versions. The MDM team, according to one CISA official quoted in the IG report, “counters all types of disinformation, to be responsive to current events.” Jen Easterly, Biden’s appointed director of CISA, swiftly made it clear that she would continue to shift resources in the agency to combat the spread of dangerous forms of information on social media.

MDM == Misinformation, Disinformation, and Malinformation.

These definitions from earlier in the article:

* misinformation (false information spread unintentionally)
* disinformation (false information spread intentionally)
* malinformation (factual information shared, typically out of context, with harmful intent)

-

- Oct 2022

-

-

getting people to find their own information,

do your own research. Relate to Tripodi https://www.google.com/books/edition/The_Propagandists_Playbook/rWZ4EAAAQBAJ?hl=en&gbpv=1&printsec=frontcover

-

plant the seed of doubt

disinfo strategy

-

-

www.nytimes.com www.nytimes.com

-

“I think we were so happy to develop all this critique because we were so sure of the authority of science,” Latour reflected this spring. “And that the authority of science would be shared because there was a common world.”

This is crucial. Latour was constructing science based on a belief in its authority, not deconstructing science. And the point about the common world, as inherently connected to the authority of science, is great.

-

-

www.aljazeera.com www.aljazeera.com

-

What Labiste described as a “well-oiled operation” has been years in the making. The Marcos Jr campaign has utilised Facebook pages and groups, YouTube channels and TikTok videos to reach out to Filipino voters, most of whom use the internet to get their political news. A whistleblower at the British data analytics firm, Cambridge Analytica, which assisted with the presidential campaign of former US President Donald Trump, also said Marcos Jr sought help to rebrand the family’s image in 2016, a claim he denied.

One of the mistakes made by the campaigns that opposed the Marcos presidential candidacy was perhaps their failure to grasp the impact of disinformation materials generated on social media. There was an awareness that these had to be constantly called out and corrected. However, the efforts to do so failed to understand the population at which the disinformation campaign was directed. Corrections of false claims and presentations of the truth were understood only by those who issued them. Moreover, by the time the campaign against false information started in earnest, the channels for disinformation had already been well established and had taken root among those who readily believed it. This explains why many fact-checking efforts have fallen on deaf ears and, in some cases, have even pushed those who believe disinformation to hold onto it more tightly.

-

-

interaksyon.philstar.com interaksyon.philstar.com

-

Edgerly noted that disinformation spreads through two ways: The use of technology and human nature. Click-based advertising, news aggregation, the process of viral spreading and the ease of creating and altering websites are factors considered under technology. “Facebook and Google prioritize giving people what they ‘want’ to see; advertising revenue (are) based on clicks, not quality,” Edgerly said. She noted that people have the tendency to share news and website links without even reading its content, only its headline. According to her, this perpetuates a phenomenon of viral spreading or easy sharing. There is also the case of human nature involved, where people are “most likely to believe” information that supports their identities and viewpoints, Edgerly cited. “Vivid, emotional information grabs attention (and) leads to more responses (such as) likes, comments, shares. Negative information grabs more attention than (the) positive and is better remembered,” she said. Edgerly added that people tend to believe in information that they see on a regular basis and those shared by their immediate families and friends.

Spreading misinformation and disinformation is very easy today because information is so accessible and so abundant on the web, as Edgerly explains. As this part of the article notes, there is a business in spreading disinformation, particularly in our country. Some people pay what we call online trolls to spread disinformation and capitalize on how “chronically online” Filipinos are, among other factors (e.g., many Filipinos’ lack of information literacy due to poverty and limited educational attainment, and how easy it is to interact with content we see online regardless of its authenticity). Disinformation also leads to misinformation through word of mouth. As Edgerly states in this article, “people tend to believe in information… shared by their immediate families and friends”; because people naturally trust information shared by their loved ones, those who are not information literate will not question what they have just received. Lastly, it certainly does not help that social media algorithms rely on what users interact with: the more a user interacts with a given kind of information, the more of it the platform will feed them. This is a problem because not all social media sites have fact-checkers, and users can freely spread disinformation if they choose to.

-

-

-

"In 2013, we spread fake news in one of the provinces I was handling," he says, describing how he set up his client's opponent. "We got the top politician's cell phone number and photo-shopped it, then sent out a text message pretending to be him, saying he was looking for a mistress. Eventually, my client won."

This statement, from a man who claims to work for politicians as an internet troll and propagator of fake news, was really striking because it shows how a pure fabrication can have a profound impact on elections, which are supposed to be a democratic process. Now more than ever, mudslinging in popular information spaces like social media can easily sway public opinion (or confirm it). We saw this during the election season, when Leni Robredo bore the brunt of outrageous rumors; one rumor I remember well was that Leni had supposedly once married an NPA member and had a child with him. It is tragic that misinformation and disinformation are no longer a mere phenomenon but a full-blown industry. It has a tight grip on the decisions people make for the country, while also deeply affecting their values and beliefs.

-

-

www.cits.ucsb.edu www.cits.ucsb.edu

-

Trolls, in this context, are humans who hold accounts on social media platforms, more or less for one purpose: To generate comments that argue with people, insult and name-call other users and public figures, try to undermine the credibility of ideas they don’t like, and to intimidate individuals who post those ideas. And they support and advocate for fake news stories that they’re ideologically aligned with. They’re often pretty nasty in their comments. And that gets other, normal users, to be nasty, too.

Not only are programmed accounts created, but also troll accounts that propagate disinformation and spread fake news with the intent to cause havoc. In short, once they post a malicious comment, some people engage with it, which leads to more angry comments and disagreements among users. That is what trolls do: they provoke people into engaging with their comments so that the comments spread further and generate more fake news. These troll accounts are usually prominent during elections; in the Philippines, for example, some speculate that certain candidates have set up troll farms just to spread fake news across social media, which some people then engage with.

-

So, bots are computer algorithms (set of logic steps to complete a specific task) that work in online social network sites to execute tasks autonomously and repetitively. They simulate the behavior of human beings in a social network, interacting with other users, and sharing information and messages [1]–[3]. Because of the algorithms behind bots’ logic, bots can learn from reaction patterns how to respond to certain situations. That is, they possess artificial intelligence (AI).

In all honesty, since I don't usually dwell on technology, coding, and the like, I thought a "bot" was controlled by another user, an actual person. I never knew that bots are programmed to learn people's usual posting patterns, whether on Twitter, Facebook, or other social media platforms. I think it is important to properly understand how bots work in order to avoid misinformation and disinformation, especially at a time when social media use is so prominent.

-

- Sep 2022

-

www.theatlantic.com www.theatlantic.com

-

the court upheld a preposterous Texas law stating that online platforms with more than 50 million monthly active users in the United States no longer have First Amendment rights regarding their editorial decisions. Put another way, the law tells big social-media companies that they can’t moderate the content on their platforms.

-

-

meta.wikimedia.org meta.wikimedia.org

-

Many of you are already aware of recent changes that the Foundation has made to its NDA policy. These changes have been discussed on Meta, and I won’t reiterate all of our disclosures there,[2] but I will briefly summarize that due to credible information of threat, the Foundation has modified its approach to accepting “non-disclosure agreements” from individuals. The security risk relates to information about infiltration of Wikimedia systems, including positions with access to personally identifiable information and elected bodies of influence. We could not pre-announce this action, even to our most trusted community partner groups (like the stewards), without fear of triggering the risk to which we’d been alerted. We restricted access to these tools immediately in the jurisdictions of concern, while working with impacted users to determine if the risk applied to them.

-

- Aug 2022

-

twitter.com twitter.com

-

ReconfigBehSci. (2022, January 3). masking is not an “unevidenced intervention” and, at this point, it is outright disinformation to claim so. Sad coming from an academic at a respectable institution [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1478003733518819334

-

-

-

Meet the media startups making big money on vaccine conspiracies. (n.d.). Fortune. Retrieved December 23, 2021, from https://fortune.com/2021/05/14/disinformation-media-vaccine-covid19/

-

-

Local file Local file

-

Kahne and Bowyer (2017) exposed thousands of young people in California to some true messages and some false ones, similar to the memes they may see on social media

-

Many U.S. educators believe that increasing political polarization combine with the hazards of misinformation and disinformation in ways that underscore the need for learners to acquire the knowledge and skills required to navigate a changing media landscape (Hamilton et al. 2020a)

-

- Jul 2022

-

www.persuasion.community www.persuasion.community

-

An

Find common ground. Clear away the kindling. Provide context...don't de-platform.

-

You have three options:
1. Continue fighting fires with hordes of firefighters (in this analogy, fact-checkers).
2. Focus on the arsonists (the people spreading the misinformation) by alerting the town they're the ones starting the fire (banning or labeling them).
3. Clear the kindling and dry brush (teach people to spot lies, think critically, and ask questions).
Right now, we do a lot of #1. We do a little bit of #2. We do almost none of #3, which is probably the most important and the most difficult. I’d propose three strategies for addressing misinformation by teaching people to ask questions and spot lies.

-

Simply put, the threat of "misinformation" being spread at scale is not novel or unique to our generation—and trying to slow the advances of information sharing is futile and counter-productive.

-

It’s worth reiterating precisely why: The very premise of science is to create a hypothesis, put that hypothesis up to scrutiny, pit good ideas against bad ones, and continue to test what you think you know over and over and over. That’s how we discovered tectonic plates and germs and key features of the universe. And oftentimes, it’s how we learn from great public experiments, like realizing that maybe paper or reusable bags are preferable to plastic.

develop a hypothesis, and pit different ideas against one another

-

All of these approaches tend to be built on an assumption that misinformation is something that can and should be censored. On the contrary, misinformation is a troubling but necessary part of our political discourse. Attempts to eliminate it carry far greater risks than attempts to navigate it, and trying to weed out what some committee or authority considers "misinformation" would almost certainly restrict our continued understanding of the world around us.

-