- Jul 2020

-

medrxiv.org

-

Fontanet, A., Grant, R., Tondeur, L., Madec, Y., Grzelak, L., Cailleau, I., Ungeheuer, M.-N., Renaudat, C., Pellerin, S. F., Kuhmel, L., Staropoli, I., Anna, F., Charneau, P., Demeret, C., Bruel, T., Schwartz, O., & Hoen, B. (2020). SARS-CoV-2 infection in primary schools in northern France: A retrospective cohort study in an area of high transmission. MedRxiv, 2020.06.25.20140178. https://doi.org/10.1101/2020.06.25.20140178

-

-

www.medrxiv.org

-

Fontanet, A., Tondeur, L., Madec, Y., Grant, R., Besombes, C., Jolly, N., Pellerin, S. F., Ungeheuer, M.-N., Cailleau, I., Kuhmel, L., Temmam, S., Huon, C., Chen, K.-Y., Crescenzo, B., Munier, S., Demeret, C., Grzelak, L., Staropoli, I., Bruel, T., … Hoen, B. (2020). Cluster of COVID-19 in northern France: A retrospective closed cohort study. MedRxiv, 2020.04.18.20071134. https://doi.org/10.1101/2020.04.18.20071134

-

-

-

Sapoval, N., Mahmoud, M., Jochum, M. D., Liu, Y., Elworth, R. A. L., Wang, Q., Albin, D., Ogilvie, H., Lee, M. D., Villapol, S., Hernandez, K., Berry, I. M., Foox, J., Beheshti, A., Ternus, K., Aagaard, K. M., Posada, D., Mason, C., Sedlazeck, F. J., & Treangen, T. J. (2020). Hidden genomic diversity of SARS-CoV-2: Implications for qRT-PCR diagnostics and transmission. BioRxiv, 2020.07.02.184481. https://doi.org/10.1101/2020.07.02.184481

-

-

twitter.com

-

Natalie E. Dean, PhD on Twitter: “THINK LIKE AN EPIDEMIOLOGIST: There are more new confirmed cases each day in the US than at any time during the earlier April peak. But is it really meaningful to compare those numbers? How do epidemiologists decide when to sound the alarm? A thread. 1/11 https://t.co/rPelzIvcxs” / Twitter. (n.d.). Twitter. Retrieved July 3, 2020, from https://twitter.com/nataliexdean/status/1278868210385915904

-

-

www1.nyc.gov

-

COVID-19: Data Summary—NYC Health. (n.d.). Retrieved July 3, 2020, from https://www1.nyc.gov/site/doh/covid/covid-19-data.page

-

-

blogs.bmj.com

-

Devi Sridhar and Adriel Chen: Scotland’s slow and steady approach to covid-19 may lead to a more sustainable future. (2020, June 30). The BMJ. https://blogs.bmj.com/bmj/2020/06/30/devi-sridhar-and-adriel-chen-scotlands-slow-and-steady-approach-to-covid-19-may-lead-to-a-more-sustainable-future/

-

- Jun 2020

-

twitter.com

-

Firass Abiad on Twitter: “1.8 Is our #Covid19 situation in 🇱🇧 getting out of control? Several recent observations can help answer this question:” / Twitter. (n.d.). Twitter. Retrieved June 29, 2020, from https://twitter.com/firassabiad/status/1276784177178869762

-

-

www.bmj.com

-

Godlee, F. (2020). Covid 19: Where’s the strategy for testing? BMJ, 369. https://doi.org/10.1136/bmj.m2518

-

-

www.medrxiv.org

-

Liu, T., Wu, S., Tao, H., Zeng, G., Zhou, F., Guo, F., & Wang, X. (2020). Prevalence of IgG antibodies to SARS-CoV-2 in Wuhan—Implications for the ability to produce long-lasting protective antibodies against SARS-CoV-2. MedRxiv, 2020.06.13.20130252. https://doi.org/10.1101/2020.06.13.20130252

-

-

www.keranews.org

-

Younger Adults Are Increasingly Testing Positive For The Coronavirus. (n.d.). Retrieved June 28, 2020, from https://www.keranews.org/post/younger-adults-are-increasingly-testing-positive-coronavirus

-

-

www.nbcnews.com

-

CDC says COVID-19 cases in U.S. may be 10 times higher than reported. (n.d.). NBC News. Retrieved June 26, 2020, from https://www.nbcnews.com/health/health-news/cdc-says-covid-19-cases-u-s-may-be-10-n1232134

-

-

www.theguardian.com

-

Reicher, S. (2020, June 24). The way Boris Johnson has eased lockdown sends all the wrong messages | Stephen Reicher. The Guardian. https://www.theguardian.com/commentisfree/2020/jun/24/boris-johnson-ease-lockdown-england

-

-

covidtracking.com

-

Blog | It’s Not Just Testing. (n.d.). The COVID Tracking Project. Retrieved June 25, 2020, from https://covidtracking.com/blog/its-not-just-testing

-

-

www.newscientist.com

-

Le Page, M. (2020, June 24). Town in UK takes steps to test entire population for coronavirus. New Scientist. https://www.newscientist.com/article/2246880-town-in-uk-takes-steps-to-test-entire-population-for-coronavirus/

-

-

www.newscientist.com

-

Quilty-Harper, C., & Liverpool, L. (n.d.). Covid-19 news: WHO says poor global leadership making pandemic worse. New Scientist. Retrieved June 23, 2020, from https://www.newscientist.com/article/2237475-covid-19-news-who-says-poor-global-leadership-making-pandemic-worse/

-

-

ourworldindata.org

-

Per capita: COVID-19 tests vs. Confirmed deaths. (n.d.). Our World in Data. Retrieved June 23, 2020, from https://ourworldindata.org/grapher/covid-19-tests-deaths-scatter-with-comparisons

-

-

blogs.bmj.com

-

Test and trace: It didn’t have to be this way. (2020, June 19). The BMJ. https://blogs.bmj.com/bmj/2020/06/19/test-and-trace-it-didnt-have-to-be-this-way/

-

-

-

Coronavirus: R number jumps to 1.79 in Germany after abattoir outbreak. (n.d.). Sky News. Retrieved June 22, 2020, from https://news.sky.com/story/coronavirus-r-number-jumps-to-1-79-in-germany-after-abattoir-outbreak-12011468

-

-

www.theguardian.com

-

Anderson, C. (2020, June 18). New Zealand reports fresh coronavirus case as more quarantine breaches emerge. The Guardian. https://www.theguardian.com/world/2020/jun/18/new-zealand-coronavirus-defences-under-scrutiny-as-more-breaches-emerge

-

-

www.newscientist.com

-

Hamzelou, J. (2020, June 17). How many of us are likely to have caught the coronavirus so far? New Scientist. https://www.newscientist.com/article/mg24632873-000-how-many-of-us-are-likely-to-have-caught-the-coronavirus-so-far/

-

-

www.thelancet.com

-

Kucharski, A. J., Klepac, P., Conlan, A. J. K., Kissler, S. M., Tang, M. L., Fry, H., Gog, J. R., Edmunds, W. J., Emery, J. C., Medley, G., Munday, J. D., Russell, T. W., Leclerc, Q. J., Diamond, C., Procter, S. R., Gimma, A., Sun, F. Y., Gibbs, H. P., Rosello, A., … Simons, D. (2020). Effectiveness of isolation, testing, contact tracing, and physical distancing on reducing transmission of SARS-CoV-2 in different settings: A mathematical modelling study. The Lancet Infectious Diseases, 0(0). https://doi.org/10.1016/S1473-3099(20)30457-6

-

-

www.medscape.com

-

Dean, N. E. (2020, June 15). Asymptomatic Transmission? We Just Don’t Know. Medscape. Retrieved June 16, 2020, from http://www.medscape.com/viewarticle/932246

-

-

ncs-tf.ch

-

Swiss National COVID-19 Science Task Force. Policy Briefs. (n.d.). Retrieved June 16, 2020, from https://ncs-tf.ch/de/policy-briefs

-

-

ncs-tf.ch

-

Swiss National COVID-19 Science Task Force. Policy Briefs. (n.d.). Retrieved June 16, 2020, from https://ncs-tf.ch/fr/policy-briefs

-

-

github.com

-

a technique that enables a test suite to perform as well as using fixtures (or better if you're running just a few tests from the suite) and read as well as you are used to when using factories

-

-

-

twitter.com

-

Nivi Mani on Twitter. "I cannot stop smiling! Here is a first peek at the data from our online browser-based intermodal preferential looking set-up! We replicate the prediction effect (boy eats big cake, Mani & Huettig, 2012) using our online webcam testing software @julien__mayor @Kindskoepfe_Lab" / Twitter. (n.d.). Twitter. Retrieved June 15, 2020, from https://twitter.com/nivedita_mani/status/1265556217486815232

-

-

www.newyorker.com

-

Wallace-Wells, B. (2020, June 12). Can Coronavirus Contact Tracing Survive Reopening? The New Yorker. https://www.newyorker.com/news/us-journal/can-coronavirus-contact-tracing-survive-reopening

-

-

twitter.com

-

Dean, N. E. PhD. (2020, June 14) "Partners in Health @PIH is used to working in the poorest regions of the poorest countries. Now they are leading Massachusetts' contact tracing. Their experiences remind us of the importance of "support" in test, trace, isolate, support. (A thread 1/8)" Twitter. https://twitter.com/nataliexdean/status/1272219726169718785

-

-

www.newscientist.com

-

Bender, M. (2020, June 12). Coronavirus second waves emerge in several US states as they reopen. New Scientist. https://www.newscientist.com/article/2246057-coronavirus-second-waves-emerge-in-several-us-states-as-they-reopen/

-

-

www.newscientist.com

-

Le Page, M. (2020, June 13). How can international travel resume during the coronavirus pandemic? New Scientist. https://www.newscientist.com/article/2246024-how-can-international-travel-resume-during-the-coronavirus-pandemic/

-

-

www.acpjournals.org

-

Oran, D. P., & Topol, E. J. (2020). Prevalence of Asymptomatic SARS-CoV-2 Infection: A Narrative Review. Annals of Internal Medicine, M20-3012. https://doi.org/10.7326/M20-3012

-

-

email.primer.ai

-

Primer Weekly Briefing 01/05/20

-

-

psyarxiv.com

-

Katz, B., & Yovel, I. (2020). Mood Symptoms Predict COVID-19 Pandemic Distress but not Vice Versa: An 18-Month Longitudinal Study. https://doi.org/10.31234/osf.io/6qske

-

-

twitter.com

-

(((Howard Forman))) on Twitter: “#Italy remains one of the worst outbreaks & one of the best & most consistent responses to lockdown/NPI measures. 0.6% positive rate; STILL testing at rate of greater than 1/1000 each day. The US is NOT currently on this path. (some regions are). 33K fatalities. https://t.co/5lWdXMdlEf” / Twitter. (n.d.). Twitter. Retrieved June 2, 2020, from https://twitter.com/thehowie/status/1266873463681298433

-

-

www.theguardian.com

-

Hern, A. (2020, June 8). People who think they have had Covid-19 ‘less likely to download contact-tracing app.’ The Guardian. https://www.theguardian.com/world/2020/jun/08/people-who-think-they-have-had-covid-19-less-likely-to-download-contact-tracing-app

-

-

ourworldindata.org

-

Is the world making progress against the pandemic? We built the chart to answer this question. (n.d.). Our World in Data. Retrieved June 11, 2020, from https://ourworldindata.org/epi-curve-covid-19

-

-

-

Sturniolo, S., Waites, W., Colbourn, T., Manheim, D., & Panovska-Griffiths, J. (2020). Testing, tracing and isolation in compartmental models. MedRxiv, 2020.05.14.20101808. https://doi.org/10.1101/2020.05.14.20101808

-

-

edgeguides.rubyonrails.org

-

It is not customary in Rails to run the full test suite before pushing changes. The railties test suite in particular takes a long time, and takes an especially long time if the source code is mounted in /vagrant as happens in the recommended workflow with the rails-dev-box. As a compromise, test what your code obviously affects, and if the change is not in railties, run the whole test suite of the affected component. If all tests are passing, that's enough to propose your contribution.

-

-

www.washingtonpost.com

-

Pell, S., Buckner, C., & Dupree, J. (n.d.). Coronavirus hospitalizations rise sharply in several states following Memorial Day. Washington Post. Retrieved June 10, 2020, from https://www.washingtonpost.com/health/2020/06/09/coronavirus-hospitalizations-rising/

-

-

www.propublica.org

-

Chen, C. (2020, April 28). What Antibody Studies Can Tell You—And More Importantly, What They Can’t. ProPublica. https://www.propublica.org/article/what-antibody-studies-can-tell-you-and-more-importantly-what-they-cant

-

-

www.nytimes.com

-

Qin, A. (2020, February 2). Coronavirus Pummels Wuhan, a City Short of Supplies and Overwhelmed. The New York Times. https://www.nytimes.com/2020/02/02/world/asia/china-coronavirus-wuhan.html

-

-

www.buzzfeednews.com

-

Lee, S. M. (2020, April 22). Two Antibody Studies Say Coronavirus Infections Are More Common Than We Think. Scientists Are Mad. BuzzFeed News. https://www.buzzfeednews.com/article/stephaniemlee/coronavirus-antibody-test-santa-clara-los-angeles-stanford

-

-

fivethirtyeight.com

-

Koerth, M. (2020, March 31). Why It’s So Freaking Hard To Make A Good COVID-19 Model. FiveThirtyEight. https://fivethirtyeight.com/features/why-its-so-freaking-hard-to-make-a-good-covid-19-model/

-

-

www.theatlantic.com

-

Gutman, R. (2020, May 8). You’ll Probably Never Know If You Had the Coronavirus in January. The Atlantic. https://www.theatlantic.com/health/archive/2020/05/us-coronavirus-cases-january/611305/

-

-

slate.com

-

Craven, J. (2020, May 21). It’s Not Too Late to Save Black Lives. Slate Magazine. https://slate.com/news-and-politics/2020/05/covid-19-black-communities-health-disparity.html

-

-

twitter.com

-

BBC Radio 5 Live. (2020, May 19) ""The government should've brought in [universities and researchers] from the outset" @GregClarkMP, chair of the @CommonsSTC, talks to #5LiveBreakfast about some of the lessons to learn about how the UK handled #COVID19." Twitter. https://twitter.com/bbc5live/status/1262642752032059392

-

-

-

Wise, J. (2020). Covid-19: Surveys indicate low infection level in community. BMJ, m1992. https://doi.org/10.1136/bmj.m1992

-

-

www.theguardian.com

-

Sridhar, D. (2020, March 15). Britain goes it alone over coronavirus. We can only hope the gamble pays off | Devi Sridhar. The Guardian. https://www.theguardian.com/commentisfree/2020/mar/15/britain-goes-it-alone-over-coronavirus-we-can-only-hope-the-gamble-pays-off

-

-

www.who.int

-

WHO. (2020, April 27). “Immunity passports” in the context of COVID-19. https://www.who.int/news-room/commentaries/detail/immunity-passports-in-the-context-of-covid-19

-

-

jamanetwork.com

-

Perencevich, E. N., Diekema, D. J., & Edmond, M. B. (2020). Moving Personal Protective Equipment Into the Community: Face Shields and Containment of COVID-19. JAMA. https://doi.org/10.1001/jama.2020.7477

Tags

- loosening restrictions

- community

- closure

- protection

- Singapore

- lang:en

- South Korea

- diagnostics

- COVID-19

- healthcare worker

- California

- physical distancing

- contact tracing

- containment

- ban

- equipment

- public health

- face mask

- is:article

- testing

- non-pharmaceutical intervention

- flatten the curve

- USA

-

-

www.thelancet.com

-

The Lancet Infectious Diseases. (2020). COVID-19: Endgames. The Lancet Infectious Diseases, 0(0). https://doi.org/10.1016/S1473-3099(20)30298-X

-

-

www.thelancet.com

-

Tian, H., & Bjornstad, O. N. (2020). Population serology for SARS-CoV-2 is essential to regional and global preparedness. The Lancet Microbe, 0(0). https://doi.org/10.1016/S2666-5247(20)30055-0

-

-

psyarxiv.com

-

Han, H., & Dawson, K. J. (2020). JASP (Software) [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/67dcb

-

-

www.theatlantic.com

-

Madrigal, A. C., & Meyer, R. (2020, May 21). ‘How Could the CDC Make That Mistake?’ The Atlantic. https://www.theatlantic.com/health/archive/2020/05/cdc-and-states-are-misreporting-covid-19-test-data-pennsylvania-georgia-texas/611935/

Tags

- conflation

- reopening

- lang:en

- bad science

- confusion

- infection rate

- testing

- is:news

- COVID-19

- outbreak detection

- CDC

- exposure

- antibody

- concern

- viral

- USA

-

-

www.vox.com

-

Matthews, D. (2020, May 20). How exposing healthy volunteers to Covid-19 for vaccine testing would work. Vox. https://www.vox.com/future-perfect/2020/5/20/21258725/covid-19-human-challenge-trials-vaccine-update-sars-cov-2

-

-

stm.sciencemag.org

-

Weissleder, R., Lee, H., Ko, J., & Pittet, M. J. (2020). COVID-19 diagnostics in context. Science Translational Medicine, 12(546). https://doi.org/10.1126/scitranslmed.abc1931

-

-

www.theguardian.com

-

Sample, I. (2020, May 17). Doctors raise hopes of blood test for children with coronavirus-linked syndrome. The Guardian. https://www.theguardian.com/science/2020/may/17/possible-breakthrough-in-coronavirus-related-syndrome-in-children

-

-

www.ons.gov.uk

-

Coronavirus (COVID-19) Infection Survey pilot—Office for National Statistics. (n.d.). Retrieved June 2, 2020, from https://www.ons.gov.uk/peoplepopulationandcommunity/healthandsocialcare/conditionsanddiseases/bulletins/coronaviruscovid19infectionsurveypilot/england14may2020

Tags

- England

- age

- UK

- community

- lang:en

- survey

- likelihood

- risk of infection

- infection rate

- COVID-19

- bulletin

- testing

- is:report

- household

-

-

www.theguardian.com

-

Adams, R., Stewart, H., & Brooks, L. (2020, May 15). BMA backs teaching unions’ opposition to schools reopening. The Guardian. https://www.theguardian.com/world/2020/may/15/bma-backs-teaching-unions-in-opposing-reopening-of-schools

-

-

www.bbc.com

-

Schools “safe” to reopen, Michael Gove insists. (2020, May 17). BBC News. https://www.bbc.com/news/education-52697488

-

-

www.thelancet.com

-

Guarneri, C., Rullo, E. V., Pavone, P., Berretta, M., Ceccarelli, M., Natale, A., & Nunnari, G. (2020). Silent COVID-19: What your skin can reveal. The Lancet Infectious Diseases, S1473309920304023. https://doi.org/10.1016/S1473-3099(20)30402-3

-

-

twitter.com

-

Devi Sridhar on Twitter: “My suggestion: bring down daily new cases to a low level, get test/trace/isolate in place and core infrastructure build up, get regular testing going for essential workers/teachers/students, monitor borders for imported cases, & move to mandatory masks in shops/public transport.” / Twitter. (n.d.). Twitter. Retrieved June 2, 2020, from https://twitter.com/devisridhar/status/1266103290816839682

-

-

www.thelancet.com

-

Khalil, A., Hill, R., Ladhani, S., Pattisson, K., & O’Brien, P. (2020). COVID-19 screening of health-care workers in a London maternity hospital. The Lancet Infectious Diseases, S1473309920304035. https://doi.org/10.1016/S1473-3099(20)30403-5

-

-

-

Abbasi, K. (2020). Covid-19: Questions of conscience and duty for scientific advisers. BMJ, 369. https://doi.org/10.1136/bmj.m2102

-

- May 2020

-

twitter.com

-

Devi Sridhar on Twitter: “Early serology results based on antibody testing: 5% for UK, 17% for London. From Matt Hancock, Health Secretary in today’s briefing. In line with what other countries have reported on their seroprevalence.” / Twitter. (n.d.). Twitter. Retrieved May 31, 2020, from https://twitter.com/devisridhar/status/1263507332224475136

-

-

twitter.com

-

Devi Sridhar on Twitter: “Then ease measures while testing widely & w/ good data systems that alert public whether it is red/amber/green in their area. Need clusters of cases identified rapidly & broken up before tips over into sustained community transmission. If it tips, hard to avoid another lockdown.” / Twitter. (n.d.). Twitter. Retrieved May 30, 2020, from https://twitter.com/devisridhar/status/1266103292138065926

-

-

rs-delve.github.io

-

Test, Trace, Isolate. (2020, May 27). Royal Society DELVE Initiative. http://rs-delve.github.io/reports/2020/05/27/test-trace-isolate.html

-

-

www.sciencedirect.com

-

Döhla, M., Boesecke, C., Schulte, B., Diegmann, C., Sib, E., Richter, E., Eschbach-Bludau, M., Aldabbagh, S., Marx, B., Eis-Hübinger, A.-M., Schmithausen, R. M., & Streeck, H. (2020). Rapid point-of-care testing for SARS-CoV-2 in a community screening setting shows low sensitivity. Public Health. https://doi.org/10.1016/j.puhe.2020.04.009

-

-

sciencebusiness.net

-

What’s the COVID-19 re-entry plan? Experts debate Europe’s tricky road ahead. (n.d.). Science|Business. Retrieved April 20, 2020, from https://sciencebusiness.net/news/whats-covid-19-re-entry-plan-experts-debate-europes-tricky-road-ahead

-

-

www.ncbi.nlm.nih.gov

-

Scherer, L. D., Valentine, K. D., Patel, N., Baker, S. G., & Fagerlin, A. (2019). A bias for action in cancer screening? Journal of Experimental Psychology. Applied, 25(2), 149–161. https://doi.org/10.1037/xap0000177

-

-

-

Mei, X., Lee, H.-C., Diao, K., Huang, M., Lin, B., Liu, C., Xie, Z., Ma, Y., Robson, P. M., Chung, M., Bernheim, A., Mani, V., Calcagno, C., Li, K., Li, S., Shan, H., Lv, J., Zhao, T., Xia, J., … Yang, Y. (2020). Artificial intelligence for rapid identification of the coronavirus disease 2019 (COVID-19). MedRxiv, 2020.04.12.20062661. https://doi.org/10.1101/2020.04.12.20062661

-

-

www.theguardian.com

-

Davis, N. (2020, May 4). Rival Sage group says Covid-19 policy must be clarified. The Guardian. https://www.theguardian.com/world/2020/may/04/rival-sage-group-covid-19-policy-clarified-david-king

-

-

www.thelancet.com

-

Kirby, T. (2020). South America prepares for the impact of COVID-19. The Lancet Respiratory Medicine, S2213260020302186. https://doi.org/10.1016/S2213-2600(20)30218-6

-

-

softwareengineering.stackexchange.com

-

kellysutton.com

-

-

The test is being marked as skipped because it has randomly failed. How much confidence do we have in that test and feature in the first place?

-

“Make it work” means shipping something that doesn’t break. The code might be ugly and difficult to understand, but we’re delivering value to the customer and we have tests that give us confidence. Without tests, it’s hard to answer “Does this work?”

-

-

www.theguardian.com

-

McCurry, J., Harding, L., & agencies. (2020, May 28). South Korea re-imposes some coronavirus restrictions after spike in new cases. The Guardian. https://www.theguardian.com/world/2020/may/28/south-korea-faces-return-to-coronavirus-restrictions-after-spike-in-new-cases

-

-

www.who.int

-

WHO. (n.d.). Coronavirus disease (COVID-19) technical guidance: Laboratory testing for 2019-nCoV in humans. https://www.who.int/emergencies/diseases/novel-coronavirus-2019/technical-guidance/laboratory-guidance

-

-

science.sciencemag.org

-

Cohen, J., & Kupferschmidt, K. (2020). Countries test tactics in ‘war’ against COVID-19. Science, 367(6484), 1287–1288. https://doi.org/10.1126/science.367.6484.1287

-

-

twitter.com

-

Howard Forman on Twitter

-

-

-

Vaidyanathan, G. (2020). People power: How India is attempting to slow the coronavirus. Nature, 580(7804), 442–442. https://doi.org/10.1038/d41586-020-01058-5

-

-

www.gov.uk

-

Sero-surveillance of COVID-19. (n.d.). GOV.UK. Retrieved May 27, 2020, from https://www.gov.uk/government/publications/national-covid-19-surveillance-reports/sero-surveillance-of-covid-19

-

-

twitter.com

-

Natalie E. Dean, PhD on Twitter

-

-

insights.som.yale.edu

-

Is It Time to Reopen? (2020, May 20). Yale Insights. https://insights.som.yale.edu/insights/is-it-time-to-reopen

-

-

www.thelancet.com

-

Jordan, R. E., & Adab, P. (2020). Who is most likely to be infected with SARS-CoV-2? The Lancet Infectious Diseases, S1473309920303959. https://doi.org/10.1016/S1473-3099(20)30395-9

-

-

docs.gitlab.com

-

It involves continuously building, testing, and deploying code changes at every small iteration, reducing the chance of developing new code based on bugged or failed previous versions.

-

-

-

Goodman, J. D., & Rothfeld, M. (2020, April 23). 1 in 5 New Yorkers May Have Had Covid-19, Antibody Tests Suggest. The New York Times. https://www.nytimes.com/2020/04/23/nyregion/coronavirus-antibodies-test-ny.html

-

-

github.com

-

We are not testing styles specifically at this time

-

Integration specs are relied upon to ensure the application functions, but do not ensure pixel-level stylistic perfection.

-

-

percy.io

-

-

Hodgson, C. (2020, May 13). WHO’s chief scientist offers bleak assessment of challenges ahead. Financial Times. https://www.ft.com/content/69c75de6-9c6b-4bca-b110-2a55296b0875

-

-

www.thenakedscientists.com

-

Smith, C. (2020, May 12). Hospital staff carrying COVID-19. The Naked Scientists. https://www.thenakedscientists.com/articles/science-news/hospital-staff-carrying-covid-19

-

-

covidtracking.com

-

The COVID Tracking Project. https://covidtracking.com/

-

-

twitter.com

-

The COVID Tracking Project - Twitter

-

-

www.sciencedirect.com

-

Lourenco, S. F., & Tasimi, A. (2020). No Participant Left Behind: Conducting Science During COVID-19. Trends in Cognitive Sciences, S1364661320301157. https://doi.org/10.1016/j.tics.2020.05.003

-

-

www.theguardian.com

-

Savage, M. (2020, May 10). A return to work is on the cards. What are the fears and legal pitfalls? The Guardian | The Observer. https://www.theguardian.com/world/2020/may/09/coronavirus-return-to-work-employment-law-logistical-nightmare

-

-

www.imperial.ac.uk

-

New report models Italy’s potential exit strategy from COVID-19 lockdown. (2020, May 5). Imperial News | Imperial College London. https://www.imperial.ac.uk/news/189351/new-report-models-italys-potential-exit/

-

-

www.theatlantic.com

-

Varmus, H. (2020, May 9). The World Doesn’t Yet Know Enough to Beat the Coronavirus. The Atlantic. https://www.theatlantic.com/ideas/archive/2020/05/lack-testing-holding-science-back/611422/

-

-

www.medrxiv.org

-

Buitrago-Garcia, D. C., Egli-Gany, D., Counotte, M. J., Hossmann, S., Imeri, H., Salanti, G., & Low, N. (2020). The role of asymptomatic SARS-CoV-2 infections: Rapid living systematic review and meta-analysis [Preprint]. Epidemiology. https://doi.org/10.1101/2020.04.25.20079103

-

-

www.nejm.org

-

Arons, M. M., Hatfield, K. M., Reddy, S. C., Kimball, A., James, A., Jacobs, J. R., Taylor, J., Spicer, K., Bardossy, A. C., Oakley, L. P., Tanwar, S., Dyal, J. W., Harney, J., Chisty, Z., Bell, J. M., Methner, M., Paul, P., Carlson, C. M., McLaughlin, H. P., … Jernigan, J. A. (2020). Presymptomatic SARS-CoV-2 Infections and Transmission in a Skilled Nursing Facility. New England Journal of Medicine, NEJMoa2008457. https://doi.org/10.1056/NEJMoa2008457

-

-

jamanetwork.com

-

Baggett, T. P., Keyes, H., Sporn, N., & Gaeta, J. M. (2020). Prevalence of SARS-CoV-2 Infection in Residents of a Large Homeless Shelter in Boston. JAMA. https://doi.org/10.1001/jama.2020.6887

-

-

-

Gudbjartsson, D. F., Helgason, A., Jonsson, H., Magnusson, O. T., Melsted, P., Norddahl, G. L., Saemundsdottir, J., Sigurdsson, A., Sulem, P., Agustsdottir, A. B., Eiriksdottir, B., Fridriksdottir, R., Gardarsdottir, E. E., Georgsson, G., Gretarsdottir, O. S., Gudmundsson, K. R., Gunnarsdottir, T. R., Gylfason, A., Holm, H., … Stefansson, K. (2020). Spread of SARS-CoV-2 in the Icelandic Population. New England Journal of Medicine, NEJMoa2006100. https://doi.org/10.1056/NEJMoa2006100

-

-

www.ecdc.europa.eu

-

Contact tracing for COVID-19: Current evidence, options for scale-up and an assessment of resources needed. (2020, May 5). European Centre for Disease Prevention and Control. https://www.ecdc.europa.eu/en/publications-data/contact-tracing-covid-19-evidence-scale-up-assessment-resources

-

-

www.health.govt.nz

-

PDF - Ministry of Health, New Zealand - Approach for testing

-

-

theconversation.com

-

Chakravorti, B. (2020, May 6). Exit from coronavirus lockdowns – lessons from 6 countries. The Conversation. http://theconversation.com/exit-from-coronavirus-lockdowns-lessons-from-6-countries-136223

-

-

www.nature.com

-

Vespignani, A., Tian, H., Dye, C., et al. (2020). Modelling COVID-19. Nature Reviews Physics. https://doi.org/10.1038/s42254-020-0178-4

Tags

- containment measures

- infection

- lang:en

- transmission dynamics

- intervention

- pharmaceutical

- policy

- COVID-19

- challenge

- mathematics

- isolation

- war time

- China

- superspreading

- quarentine

- prediction

- open data

- public health

- complex network

- computational modeling

- epidemiology

- emergency

- is:article

- modeling

- antibody testing

- contact tracing

- forecast

-

-

dash.harvard.edu

-

Larremore, D. B., Bubar, K. M., & Grad, Y. H. (2020, May). Implications of test characteristics and population seroprevalence on ‘immune passport’ strategies. https://dash.harvard.edu/handle/1/42664007

-

-

onlinelibrary.wiley.com

-

Zahnd, W. E. (2020). The COVID‐19 Pandemic Illuminates Persistent and Emerging Disparities among Rural Black Populations. The Journal of Rural Health, jrh.12460. https://doi.org/10.1111/jrh.12460

Tags

- telehealth

- lang:en

- infection rate

- screening

- rural health

- demographics

- racial disparity

- COVID-19

- social determinants of health

- death rate

- access to care

- hospital

- black people

- inequality

- health equity

- is:article

- African American

- healthcare

- outbreak

- testing

- internet

- USA

- inadequately prepared

-

-

notes.andymatuschak.org

-

In a classroom or professional setting, an expert might perform some of these tasks for a learner (Metacognitive supports as cognitive scaffolding), but when a learner’s on their own, these metacognitive activities may be taxing or beyond reach.

In a classroom setting a teacher may perform many of the metacognitive tasks that are necessary for the student to learn. E.g. they may take over monitoring for confusion as well as testing the students to evaluate their understanding.

-

-

onlinelibrary.wiley.com

-

Roland, L. T., Gurrola, J. G., Loftus, P. A., Cheung, S. W., & Chang, J. L. (2020). Smell and taste symptom‐based predictive model for COVID‐19 diagnosis. International Forum of Allergy & Rhinology, alr.22602. https://doi.org/10.1002/alr.22602

-

-

testandtrace.com

-

What U.S. States Are Ready To Test & Trace? (n.d.). #TestAndTrace: The Best Contact Tracing Resource. Retrieved May 12, 2020, from https://testandtrace.com/state-data/

-

-

jamanetwork.com

-

Steinbrook, R. (2020). Contact Tracing, Testing, and Control of COVID-19—Learning From Taiwan. JAMA Internal Medicine. https://doi.org/10.1001/jamainternmed.2020.2072

-

-

-

Kaplan, E. H., & Forman, H. P. (2020). Logistics of Aggressive Community Screening for Coronavirus 2019. JAMA Health Forum, 1(5), e200565–e200565. https://doi.org/10.1001/jamahealthforum.2020.0565

-

-

catalyst.nejm.org

-

Guney, S., Daniels, C., & Childers, Z. (2020, April 30). Using AI to Understand the Patient Voice During the Covid-19 Pandemic. Catalyst Non-Issue Content, 1(2). https://doi.org/10.1056/CAT.20.0103

-

-

psyarxiv.com

-

Brown, M., & Sacco, D. F. (2020, May 6). Testing the Motivational Tradeoffs in Pathogen Avoidance and Status Acquisition. https://doi.org/10.31234/osf.io/y8ct6


-

-

www.thelancet.com www.thelancet.com

-

Lipsitch, M., Perlman, S., & Waldor, M. K. (2020). Testing COVID-19 therapies to prevent progression of mild disease. The Lancet Infectious Diseases, 0(0). https://doi.org/10.1016/S1473-3099(20)30372-8

-

-

www.apaservices.org www.apaservices.org

-

Banks, G.G. & Butcher, C. (2020 April 17). Telehealth testing with children: Important factors to consider. American Psychological Association. https://www.apaservices.org/practice/legal/technology/telehealth-testing-children-covid-19

-

-

www.medrxiv.org www.medrxiv.org

-

Shental, N., Levy, S., Skorniakov, S., Wuvshet, V., Shemer-Avni, Y., Porgador, A., & Hertz, T. (2020). Efficient high throughput SARS-CoV-2 testing to detect asymptomatic carriers. MedRxiv, 2020.04.14.20064618. https://doi.org/10.1101/2020.04.14.20064618

-

-

www.scientificamerican.com www.scientificamerican.com

-

Krzywinski, M. F., Martin. (n.d.). Virus Mutations Reveal How COVID-19 Really Spread. Scientific American. Retrieved May 6, 2020, from https://www.scientificamerican.com/article/virus-mutations-reveal-how-covid-19-really-spread1/

-

-

www.tandfonline.com www.tandfonline.com

-

Fenton, N. E., Neil, M., Osman, M., & McLachlan, S. (2020). COVID-19 infection and death rates: The need to incorporate causal explanations for the data and avoid bias in testing. Journal of Risk Research, 0(0), 1–4. https://doi.org/10.1080/13669877.2020.1756381

-

-

www.reddit.com www.reddit.com

-

r/medicine - Are “immunity certificates” actually feasible? Thoughts from an expert on viral antibodies. (n.d.). Reddit. Retrieved April 21, 2020, from https://www.reddit.com/r/medicine/comments/g1ty3g/are_immunity_certificates_actually_feasible/

-

-

threadreaderapp.com threadreaderapp.com

-

Thread by @taaltree: Antibody tests are coming online. Never before have humans needed to understand Bayes rule more. Let’s talk about why it’s critical NOT to a…. (n.d.). Retrieved April 21, 2020, from https://threadreaderapp.com/thread/1248467731545911296.html

-

-

psyarxiv.com psyarxiv.com

-

Fenton, N., Hitman, G. A., Neil, M., Osman, M., & McLachlan, S. (2020). Causal explanations, error rates, and human judgment biases missing from the COVID-19 narrative and statistics [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/p39a4

-

-

-

Shweta, F., Murugadoss, K., Awasthi, S., Venkatakrishnan, A., Puranik, A., Kang, M., Pickering, B. W., O’Horo, J. C., Bauer, P. R., Razonable, R. R., Vergidis, P., Temesgen, Z., Rizza, S., Mahmood, M., Wilson, W. R., Challener, D., Anand, P., Liebers, M., Doctor, Z., … Badley, A. D. (2020). Augmented Curation of Unstructured Clinical Notes from a Massive EHR System Reveals Specific Phenotypic Signature of Impending COVID-19 Diagnosis [Preprint]. Infectious Diseases (except HIV/AIDS). https://doi.org/10.1101/2020.04.19.20067660

-

-

www.medrxiv.org www.medrxiv.org

-

Wurtzer, S., Marechal, V., Mouchel, J.-M., & Moulin, L. (2020). Time course quantitative detection of SARS-CoV-2 in Parisian wastewaters correlates with COVID-19 confirmed cases. MedRxiv, 2020.04.12.20062679. https://doi.org/10.1101/2020.04.12.20062679

-

-

arxiv.org arxiv.org

-

Müller, M., Derlet, P. M., Mudry, C., & Aeppli, G. (2020). Using random testing to manage a safe exit from the COVID-19 lockdown. ArXiv:2004.04614 [Cond-Mat, Physics:Physics, q-Bio]. http://arxiv.org/abs/2004.04614

-

-

www.thelancet.com www.thelancet.com

-

Lohse, S., Pfuhl, T., Berkó-Göttel, B., Rissland, J., Geißler, T., Gärtner, B., Becker, S. L., Schneitler, S., & Smola, S. (2020). Pooling of samples for testing for SARS-CoV-2 in asymptomatic people. The Lancet Infectious Diseases, S1473309920303625. https://doi.org/10.1016/S1473-3099(20)30362-5

-

-

www.thelancet.com www.thelancet.com

-

Makoni, M. (2020). Keeping COVID-19 at bay in Africa. The Lancet Respiratory Medicine, S2213260020302198. https://doi.org/10.1016/S2213-2600(20)30219-8

-

-

muldoon.cloud muldoon.cloud

-

I try to write a unit test any time the expected value of a defect is non-trivial.

Write unit tests at least for the most important parts of code, but every chunk of code should have a trivial unit test around it – this verifies that the code is written in a testable way, which indeed is extremely important

-

I’m defining an integration test as a test where you’re calling code that you don’t own

When to write integration tests:

- importing code you don't own

- when you can't trust the code you don't own

-

-

-

A few takeaways

Summarising the article:

- Types and tests save you from stupid mistakes; they are gifts for your future self!

- Use ESLint and configure it to be your strict, but fair, friend.

- Think of tests as a permanent console.

- Types: It is not only about checks. It is also about code readability.

- Testing with each commit makes fewer surprises when merging Pull Requests.

-

- Apr 2020

-

psyarxiv.com psyarxiv.com

-

Edelsbrunner, P. A., & Thurn, C. (2020, April 22). Improving the Utility of Non-Significant Results for Educational Research. https://doi.org/10.31234/osf.io/j93a2

-

-

-

I would use ESLint in full strength, tests for some (especially end-to-end, to make sure a commit does not make project crash), and add continuous integration.

Advantage of tests

-

Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.

According to Kernighan's Law, debugging is harder than writing the code in the first place.

-

Creating meticulous tests before exploring the data is a big mistake, and will result in a well-crafted garbage-in, garbage-out pipeline. We need an environment flexible enough to encourage experiments, especially in the initial place.

The overzealous nature of TDD may discourage exploratory data science.

-

-

psyarxiv.com psyarxiv.com

-

Derks, K., de Swart, J., van Batenburg, P., Wagenmakers, E., & Wetzels, R. (2020, April 28). Priors in a Bayesian Audit: How Integration of Existing Information into the Prior Distribution Can Increase Transparency, Efficiency, and Quality. Retrieved from psyarxiv.com/8fhkp

-

-

doi.org doi.org

-

Gray, N., Calleja, D., Wimbush, A., Miralles-Dolz, E., Gray, A., De-Angelis, M., Derrer-Merk, E., Oparaji, B. U., Stepanov, V., Clearkin, L., & Ferson, S. (2020). “No test is better than a bad test”: Impact of diagnostic uncertainty in mass testing on the spread of Covid-19 [Preprint]. Epidemiology. https://doi.org/10.1101/2020.04.16.20067884

-

-

stackoverflow.com stackoverflow.com

-

science.sciencemag.org science.sciencemag.org

-

Braun, J. von, Zamagni, S., & Sorondo, M. S. (2020). The moment to see the poor. Science, 368(6488), 214–214. https://doi.org/10.1126/science.abc2255

-

-

jamanetwork.com jamanetwork.com

-

Park, S., Choi, G. J., & Ko, H. (2020). Information Technology–Based Tracing Strategy in Response to COVID-19 in South Korea—Privacy Controversies. JAMA. https://doi.org/10.1001/jama.2020.6602

-

-

-

Wölfel, R., Corman, V.M., Guggemos, W. et al. Virological assessment of hospitalized patients with COVID-2019. Nature (2020). https://doi.org/10.1038/s41586-020-2196-x

-

-

www.thelancet.com www.thelancet.com

-

Black, J. R. M., Bailey, C., Przewrocka, J., Dijkstra, K. K., & Swanton, C. (2020). COVID-19: The case for health-care worker screening to prevent hospital transmission. The Lancet, S014067362030917X. https://doi.org/10.1016/S0140-6736(20)30917-X

-

-

gateway2.itc.u-tokyo.ac.jp:11002 gateway2.itc.u-tokyo.ac.jp:11002

-

Dorn, A. van, Cooney, R. E., & Sabin, M. L. (2020). COVID-19 exacerbating inequalities in the US. The Lancet, 395(10232), 1243–1244. https://doi.org/10.1016/S0140-6736(20)30893-X

-

-

www.technologyreview.com www.technologyreview.com

-

Rotman, D. (2020 April 8). Stop covid or save the economy? We can do both. MIT Technology Review. https://www.technologyreview.com/2020/04/08/998785/stop-covid-or-save-the-economy-we-can-do-both/

-

-

-

r/BehSciAsk—Is uncertainty worse than either test result? (n.d.). Reddit. Retrieved April 9, 2020, from https://www.reddit.com/r/BehSciAsk/comments/ft16ii/is_uncertainty_worse_than_either_test_result/

-

-

www.reddit.com www.reddit.com

-

r/BehSciResearch—Behavioural science research for guiding societies out of lockdown. (n.d.). Reddit. Retrieved April 20, 2020, from https://www.reddit.com/r/BehSciResearch/comments/g2bm09/behavioural_science_research_for_guiding/

-

-

sciencebusiness.net sciencebusiness.net

-

Imperial researchers develop lab-free COVID-19 test with results in less than an hour. (n.d.). Science|Business. Retrieved April 20, 2020, from https://sciencebusiness.net/network-updates/imperial-researchers-develop-lab-free-covid-19-test-results-less-hour

-

-

www.thelancet.com www.thelancet.com

-

Peto, J., Alwan, N. A., Godfrey, K. M., Burgess, R. A., Hunter, D. J., Riboli, E., Romer, P., Buchan, I., Colbourn, T., Costelloe, C., Smith, G. D., Elliott, P., Ezzati, M., Gilbert, R., Gilthorpe, M. S., Foy, R., Houlston, R., Inskip, H., Lawlor, D. A., … Yao, G. L. (2020). Universal weekly testing as the UK COVID-19 lockdown exit strategy. The Lancet, 0(0). https://doi.org/10.1016/S0140-6736(20)30936-3

-

-

twitter.com twitter.com

-

Adam Kucharski on Twitter: “I’m seeing more and more suggestions that contact tracing and/or physical distancing isn’t needed and we could solve COVID-19 with widespread testing alone. E.g. just test everyone once a week/fortnight to get R<1. Sounds straightforward? Unfortunately not... 1/” / Twitter. (n.d.). Twitter. Retrieved April 22, 2020, from https://twitter.com/adamjkucharski/status/1252241817829019648

-

-

code.visualstudio.com code.visualstudio.com

-

To customize settings for debugging tests, you can specify "request":"test" in the launch.json file in the .vscode folder from your workspace.

Customising settings for debugging tests when running Python: Debug All Tests or Python: Debug Test Method

-

For example, the test_decrement functions given earlier are failing because the assertion itself is faulty.

Debugging tests themselves

- Set a breakpoint on the first line of the failing function (e.g. test_decrement)

- Click the "Debug Test" option above the function

- Open Debug Console and type inc_dec.decrement(3) to see the actual output when x=3

- Stop the debugger and correct the tests

- Save the test file and run the tests again to look for a positive result
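The correction described in these steps might look like the sketch below. The body of inc_dec is not shown in these notes, so the two functions are assumed stand-ins (plain +1 and -1), and run_suite is a hypothetical helper added only so the example runs on its own.

```python
import unittest


# Assumed stand-ins for the functions in inc_dec.py (not shown in these notes).
def increment(x):
    return x + 1


def decrement(x):
    return x - 1


class TestIncrementDecrement(unittest.TestCase):
    def test_increment(self):
        self.assertEqual(increment(3), 4)

    def test_decrement(self):
        # The faulty version expected 4; with the assumed decrement, 3 - 1 is 2.
        self.assertEqual(decrement(3), 2)


def run_suite():
    """Run the test case above and return the unittest result object."""
    suite = unittest.TestLoader().loadTestsFromTestCase(TestIncrementDecrement)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

The point of the Debug Console step is to learn the real return value first, then fix the expectation in the test rather than the code under test.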

-

Support for running tests in parallel with pytest is available through the pytest-xdist package.

pytest-xdist provides support for parallel testing.

- To enable it on Windows: py -3 -m pip install pytest-xdist

- Create a file pytest.ini in your project directory and specify in it the number of CPUs to be used (e.g. 4), with the lines [pytest] and addopts=-n4

- Run your tests
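As a rough sketch, the pytest.ini from the second step can be generated with the standard library. The helper name write_pytest_ini and its workers parameter are hypothetical; only the [pytest] section and the addopts=-n4 value come from the note above.

```python
import configparser
from pathlib import Path


def write_pytest_ini(project_dir, workers=4):
    """Write a pytest.ini whose addopts passes -n<workers> to pytest-xdist."""
    config = configparser.ConfigParser()
    config["pytest"] = {"addopts": f"-n{workers}"}
    ini_path = Path(project_dir) / "pytest.ini"
    with ini_path.open("w") as handle:
        config.write(handle)
    return ini_path
```

Calling write_pytest_ini(".") in a project folder produces a file with a [pytest] section and an addopts entry; pytest-xdist still has to be installed for the -n option to be recognised.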

-

With pytest, failed tests also appear in the Problems panel, where you can double-click on an issue to navigate directly to the test

pytest displays failed tests also in PROBLEMS

-

VS Code also shows test results in the Python Test Log output panel (use the View > Output menu command to show the Output panel, then select Python Test Log).

Another way to view the test results:

View > Output > Python Test Log

-

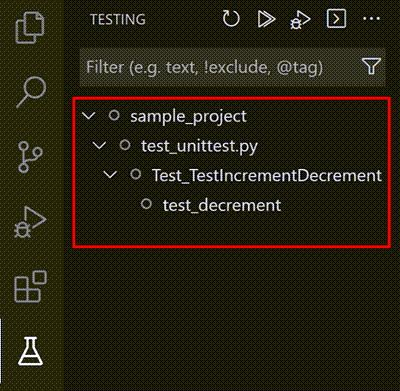

For Python, test discovery also activates the Test Explorer with an icon on the VS Code activity bar. The Test Explorer helps you visualize, navigate, and run tests

Test Explorer is activated after discovering tests in Python:

-

Once VS Code recognizes tests, it provides several ways to run those tests

After discovering tests, we can run them, for example, using CodeLens:

-

You can trigger test discovery at any time using the Python: Discover Tests command.

When python.testing.autoTestDiscoverOnSaveEnabled is set to true, tests are also discovered whenever a test file is saved.

If discovery succeeds, the status bar shows Run Tests instead:

-

Sometimes tests placed in subfolders aren't discovered because such test files cannot be imported. To make them importable, create an empty file named __init__.py in that folder.

Tip to use when tests aren't discoverable in subfolders (create an __init__.py file)

-

Testing in Python is disabled by default. To enable testing, use the Python: Configure Tests command on the Command Palette.

Start testing in VS Code by using Python: Configure Tests (it automatically chooses one testing framework and disables the rest).

Otherwise, you can configure tests manually by setting only one of the following to true:

- python.testing.unittestEnabled

- python.testing.pytestEnabled

- python.testing.nosetestsEnabled

-

python.testing.pytestArgs: Looks for any Python (.py) file whose name begins with "test_" or ends with "_test", located anywhere within the current folder and all subfolders.

Default behaviour of test discovery by pytest framework
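The naming rule quoted above can be approximated with two filename patterns. This is only an illustration of the rule, not pytest's own collection logic, and looks_like_pytest_file is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def looks_like_pytest_file(filename):
    """Return True for names matching test_*.py or *_test.py."""
    return fnmatchcase(filename, "test_*.py") or fnmatchcase(filename, "*_test.py")


candidates = ["test_inc_dec.py", "inc_dec_test.py", "inc_dec.py", "testing.txt"]
matches = [name for name in candidates if looks_like_pytest_file(name)]
print(matches)
```

Only the first two candidate names satisfy the rule; a module such as inc_dec.py is treated as code under test, not as a test file.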

-

python.testing.unittestArgs: Looks for any Python (.py) file with "test" in the name in the top-level project folder.

Default behaviour of test discovery by unittest framework
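A small sketch of the same idea using unittest's own loader. It assumes the pattern *test*.py as a stand-in for "test" anywhere in the file name; the temporary folder, the file names, and the helper discover_and_run are made up for the demonstration.

```python
import tempfile
import textwrap
import unittest
from pathlib import Path


def discover_and_run(start_dir, pattern="*test*.py"):
    """Discover test files under start_dir and run them, returning the result."""
    suite = unittest.TestLoader().discover(start_dir, pattern=pattern)
    result = unittest.TestResult()
    suite.run(result)
    return result


with tempfile.TemporaryDirectory() as tmp:
    # One file with "test" in its name, one without.
    Path(tmp, "demo_test_discovery.py").write_text(textwrap.dedent('''
        import unittest

        class DemoTest(unittest.TestCase):
            def test_truth(self):
                self.assertTrue(True)
    '''))
    Path(tmp, "helpers.py").write_text("x = 1\n")
    result = discover_and_run(tmp)

print(result.testsRun, result.wasSuccessful())
```

Only the file whose name contains "test" is imported and run; helpers.py is ignored by the loader.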

-

Create a file named test_unittest.py that contains a test class with two test methods

Sample test file using the unittest framework; inc_dec is the file that's being tested:

    import inc_dec    # The code to test
    import unittest   # The test framework

    class Test_TestIncrementDecrement(unittest.TestCase):
        def test_increment(self):
            self.assertEqual(inc_dec.increment(3), 4)  # checks if the result is 4 when x = 3

        def test_decrement(self):
            self.assertEqual(inc_dec.decrement(3), 4)

    if __name__ == '__main__':
        unittest.main()

-

Each test framework has its own conventions for naming test files and structuring the tests within, as described in the following sections. Each case includes two test methods, one of which is intentionally set to fail for the purposes of demonstration.

- each testing framework has its own naming conventions

- each case includes two test methods (one of which fails)

-

Nose2, the successor to Nose, is just unittest with plugins

Nose2 testing

-

Python tests are Python classes that reside in separate files from the code being tested.

-

general background on unit testing, see Unit Testing on Wikipedia. For a variety of useful unit test examples, see https://github.com/gwtw/py-sorting

-

Running the unit test early and often means that you quickly catch regressions, which are unexpected changes in the behavior of code that previously passed all its unit tests.

Regressions

-

Developers typically run unit tests even before committing code to a repository; gated check-in systems can also run unit tests before merging a commit.

When to run unit tests:

- before committing

- ideally before merging

- many CI systems run it after every build

-

in unit testing you avoid external dependencies and use mock data or otherwise simulated inputs

Unit tests are small, isolated pieces of code, which makes them quick and inexpensive to run
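A minimal sketch of replacing an external dependency with simulated input, using unittest.mock from the standard library. The function greeting_for and the client's get_user method are hypothetical names invented for this example.

```python
from unittest.mock import Mock


def greeting_for(user_id, client):
    """Build a greeting from a user record fetched through `client`."""
    user = client.get_user(user_id)
    return f"Hello, {user['name']}!"


def test_greeting_uses_client():
    # The mock stands in for a real network or database client.
    fake_client = Mock()
    fake_client.get_user.return_value = {"name": "Ada"}

    assert greeting_for(42, fake_client) == "Hello, Ada!"
    fake_client.get_user.assert_called_once_with(42)


test_greeting_uses_client()
```

Because the client is passed in as an argument, the test never touches a real service, which keeps it fast and repeatable.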

-

The practice of test-driven development is where you actually write the tests first, then write the code to pass more and more tests until all of them pass.

Essence of TDD

-

The combined results of all the tests is your test report

test report

-

each test is very simple: invoke the function with an argument and assert the expected return value.

e.g. test of an exact number entry:

    def test_validator_valid_string():
        # The exact assertion call depends on the framework as well
        assert validate_account_number_format("1234567890")

-

Unit tests are concerned only with the unit's interface—its arguments and return values—not with its implementation

-

unit is a specific piece of code to be tested, such as a function or a class. Unit tests are then other pieces of code that specifically exercise the code unit with a full range of different inputs, including boundary and edge cases.

Essence of unit testing
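To illustrate "a full range of different inputs, including boundary and edge cases", here is a sketch that exercises one unit with several inputs. The validation rule (exactly 10 ASCII digits) is an assumption made for the example; the notes only name the function validate_account_number_format, not its rules.

```python
import unittest


def validate_account_number_format(value):
    """Hypothetical rule: valid means a string of exactly 10 ASCII digits."""
    return (
        isinstance(value, str)
        and len(value) == 10
        and value.isascii()
        and value.isdigit()
    )


# (input, expected) pairs covering the normal case, boundaries, and edge cases.
CASES = [
    ("1234567890", True),    # typical valid value
    ("0000000000", True),    # leading zeros are still digits
    ("123456789", False),    # one character too short
    ("12345678901", False),  # one character too long
    ("12345abcde", False),   # letters
    ("12345 7890", False),   # embedded space
    ("", False),             # empty string
    (1234567890, False),     # wrong type
]


class TestAccountNumberFormat(unittest.TestCase):
    def test_inputs(self):
        for value, expected in CASES:
            with self.subTest(value=value):
                self.assertEqual(validate_account_number_format(value), expected)


def run_cases():
    """Run the table-driven test and return the unittest result object."""
    suite = unittest.TestLoader().loadTestsFromTestCase(TestAccountNumberFormat)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_cases()
```

The test only looks at arguments and return values, so the validator's implementation could change without touching the test table.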

-

-

www.guru99.com www.guru99.com

-

SMOKE TESTING is a type of software testing that determines whether the deployed build is stable or not.

stable or not

-

-

github.com github.com

-

the Mock Object or Test Stub types of Test Double


-

-

xunitpatterns.com xunitpatterns.com

-

github.com github.com

-

github.com github.com

-

github.com github.com


-

-

cucumber.io cucumber.io

-

Then the programmer(s) will go over the scenarios, refining the steps for clarification and increased testability. The result is then reviewed by the domain expert to ensure the intent has not been compromised by the programmers’ reworking.

-

-

cucumber.io cucumber.io

-

Enable Frictionless Collaboration CucumberStudio empowers the whole team to read and refine executable specifications without needing technical tools. Business and technology teams can collaborate on acceptance criteria and bridge their gap.

-

-

medium.com medium.com

-

We really only need to test that the button gets rendered at all (we don’t care about what the label says — it may say different things in different languages, depending on user locale settings). We do want to make sure that the correct number of clicks gets displayed

An example of how to think about tests. Also asserting against text that's variable isn't very useful.

-

- Mar 2020

-

github.com github.com

-

Methods must be tested both via a Lemon unit test and as a QED demo. The Lemon unit tests are for testing a method in detail whereas the QED demos are for demonstrating usage.

-

-

www.usatoday.com www.usatoday.com

-

Standardized test scores improved dramatically. In 2006, only 10% of Noyes' students scored "proficient" or "advanced" in math on the standardized tests required by the federal No Child Left Behind law. Two years later, 58% achieved that level. The school showed similar gains in reading. Because of the remarkable turnaround, the U.S. Department of Education named the school in northeast Washington a National Blue Ribbon School. Noyes was one of 264 public schools nationwide given that award in 2009. Michelle Rhee, then chancellor of D.C. schools, took a special interest in Noyes. She touted the school, which now serves preschoolers through eighth-graders, as an example of how the sweeping changes she championed could transform even the lowest-performing Washington schools. Twice in three years, she rewarded Noyes' staff for boosting scores: In 2008 and again in 2010, each teacher won an $8,000 bonus, and the principal won $10,000. A closer look at Noyes, however, raises questions about its test scores from 2006 to 2010. Its proficiency rates rose at a much faster rate than the average for D.C. schools. Then, in 2010, when scores dipped for most of the district's elementary schools, Noyes' proficiency rates fell further than average.

-

-

www.theguardian.com www.theguardian.com

-

Atlanta’s rampant test manipulation amplified calls for nationwide education reform. Seven years after the Atlanta Journal-Constitution first reported on testing problems, policymakers have failed to make significant progress toward changing the way students take standardized tests and how teachers interpret those scores. In fact, the problem has worsened, resulting in documented cheating in at least 40 states, since the APS cheating scandal first came to light. “Atlanta is the tip of the iceberg,” says Bob Schaeffer, public education director of FairTest, a nonprofit opposed to current testing standards. “Cheating is a predictable outcome of what happens when public policy puts too much pressure on test scores.” Some experts, including Schaeffer, point to the No Child Left Behind Act of 2001 as a source of today’s testing problems, though others say the woes predated the law. Then-president George W Bush, who signed the measure in January 2002, aimed to boost national academic performance and close the achievement gap between white and minority students. To make that happen, the law relied upon standardized tests designed to hold teachers accountable for classroom improvements. Federal funding hinged on school improvements, as did the future of the lowest-performing schools. But teachers in many urban school districts already faced enormous challenges that fell outside their control – including high poverty, insufficient food access, and unstable family situations. Though high-stakes testing increased student achievement in some schools, the federal mandate turned an already-difficult challenge into a feat some considered insurmountable. The pressure led to problems. Dr Beverly Hall, the former APS superintendent who was praised for turning around student performance, was later accused of orchestrating the cheating operation. During her tenure, Georgia investigators found 178 educators had inflated test scores at 44 elementary and middle schools.

-

-

www.nytimes.com www.nytimes.com

-

Atlanta public schools. The urban school district has already suffered one of the most devastating standardized-testing scandals of recent years. A state investigation in 2011 found that 178 principals and teachers in the city school district were involved in cheating on standardized tests. Dozens of former employees of the school district have either been fired or have resigned, and 21 educators have pleaded guilty to crimes like obstruction and making false statements.

They were convicted; see https://www.nytimes.com/2015/04/02/us/verdict-reached-in-atlanta-school-testing-trial.html

-

-

-

For automated testing, include the parameter is_test=1 in your tests. That will tell Akismet not to change its behaviour based on those API calls – they will have no training effect. That means your tests will be somewhat repeatable, in the sense that one test won’t influence subsequent calls.
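A hedged sketch of how a test suite might attach is_test=1 to its request body. Only the is_test parameter comes from the quote above; the other field names (blog, user_ip, user_agent, comment_content) are assumptions about the comment-check call and should be checked against the Akismet API documentation. The helper builds the form body only and sends nothing over the network.

```python
from urllib.parse import urlencode


def build_comment_check_payload(blog_url, user_ip, user_agent, comment, is_test=True):
    """Return a form-encoded body; field names other than is_test are assumed."""
    fields = {
        "blog": blog_url,
        "user_ip": user_ip,
        "user_agent": user_agent,
        "comment_content": comment,
    }
    if is_test:
        # Tells the service not to learn from this call, per the quote above.
        fields["is_test"] = "1"
    return urlencode(fields)


payload = build_comment_check_payload(
    "https://example.com", "127.0.0.1", "pytest-client", "hello world"
)
```

Defaulting is_test to True in a test helper makes it harder for an automated run to train the filter by accident.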

-

-

code.djangoproject.com code.djangoproject.com

-

I would like to make an appeal to core developers: all design decisions involving involuntary session creation MUST be made with a great caution. In case of a high-load project, avoiding to create a session for non-authenticated users is a vital strategy with a critical influence on application performance. It doesn't really make a big difference, whether you use a database backend, or Redis, or whatever else; eventually, your load would be high enough, and scaling further would not help anymore, so that either network access to the session backend or its “INSERT” performance would become a bottleneck. In my case, it's an application with 20-25 ms response time under a 20000-30000 RPM load. Having to create a session for an each session-less request would be critical enough to decide not to upgrade Django, or to fork and rewrite the corresponding components.

-

- Feb 2020

-

-

I had created a bunch of annotations on: https://loadimpact.com/our-beliefs/ https://hyp.is/bYpY5lKoEeqO_HdxChFU0Q/loadimpact.com/our-beliefs/

But when I click "Visit annotations in context"

Hypothesis shows an error:

Annotation unavailable The current URL links to an annotation, but that annotation cannot be found, or you do not have permission to view it.

How do I edit my existing annotations for the previous URL and update them to reference the new URL instead?


-

-

about.gitlab.com about.gitlab.com

-

development is hard because you have to preserve the ability to quickly improve the product in the future

-

-

work.stevegrossi.com work.stevegrossi.com

-

Performance Benchmarking What it is: Testing a system under certain reproducible conditions Why do it: To establish a baseline which can be tested against regularly to ensure a system’s performance remains constant, or validate improvements as a result of change Answers the question: “How is my app performing, and how does that compare with the past?”
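A minimal sketch of benchmarking against a baseline, using timeit from the standard library. The workload, the 10% tolerance, and the helper names measure and is_regression are illustrative assumptions, not part of the quoted article.

```python
import timeit


def measure(func, repeat=5, number=1000):
    """Return the best (lowest) per-call time in seconds over several runs."""
    timings = timeit.repeat(func, repeat=repeat, number=number)
    return min(timings) / number


def is_regression(current, baseline, tolerance=0.10):
    """True when `current` is more than `tolerance` slower than `baseline`."""
    return current > baseline * (1 + tolerance)


def workload():
    # Stand-in for the code path being benchmarked.
    return sum(i * i for i in range(100))


current = measure(workload)
```

In practice the baseline would be stored from an earlier run under the same conditions, and is_regression(current, baseline) would be checked on a schedule or in CI.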

-

-

github.com github.com