| Language Range | Matches | Does not match |
|---|---|---|
| de | de, de-CH, de-AT, de-DE, de-1901, de-AT-1901 | en, fr-CH |
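The language-range example reads like the "basic filtering" scheme from RFC 4647 (section 3.3.1). Under that scheme, a range matches a tag if, compared case-insensitively, it equals the tag or equals a prefix of it that is immediately followed by `-`. Below is a minimal Python sketch that assumes this is the scheme being illustrated; the function name `basic_filter_matches` is hypothetical.

```python
def basic_filter_matches(language_range: str, tag: str) -> bool:
    """Return True if `tag` falls under `language_range`.

    Sketch of RFC 4647 basic filtering (an assumption about which
    matching scheme the table illustrates): comparison is
    case-insensitive, "*" matches every tag, and otherwise the range
    must equal the tag or be a prefix of it ending at a "-" boundary.
    """
    r = language_range.lower()
    t = tag.lower()
    if r == "*":
        return True
    return t == r or t.startswith(r + "-")


tags = ["de", "de-CH", "de-AT", "de-DE", "de-1901", "de-AT-1901", "en", "fr-CH"]
print([t for t in tags if basic_filter_matches("de", t)])
# prints ['de', 'de-CH', 'de-AT', 'de-DE', 'de-1901', 'de-AT-1901']
```

The `-` boundary check is what stops a range like `de` from matching a tag that merely begins with the same letters.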

- Jun 2021

-

www.w3.org

-

-

Because ISO code lists were not always free and because they change over time, a key idea was to create a permanent, stable registry for all of the subtags valid in a language tag.

Why were the ISO code lists not free???

-

-

dba.stackexchange.com

-

FROM test x1 LEFT JOIN test x2 ON x1.id = (x2.data->>'parent')::INT;
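This query pairs each row `x1` with every row `x2` whose JSON `data` field names `x1` as its `parent`. Rows with no children are kept, with NULLs on the `x2` side. The sketch below reproduces those LEFT JOIN semantics in plain Python. It assumes rows shaped like `{"id": int, "data": json_text}`; both that row layout and the function name are hypothetical, not taken from the source.

```python
import json
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]  # hypothetical shape: {"id": int, "data": "<json text>"}


def left_join_children(rows: List[Row]) -> List[Tuple[int, Optional[int]]]:
    """Mimic: FROM test x1 LEFT JOIN test x2 ON x1.id = (x2.data->>'parent')::INT."""
    result: List[Tuple[int, Optional[int]]] = []
    for x1 in rows:
        matched = False
        for x2 in rows:
            # ->> yields text (or NULL when the key is missing); ::INT casts it.
            parent = json.loads(x2["data"]).get("parent")
            if parent is not None and int(parent) == x1["id"]:
                result.append((x1["id"], x2["id"]))
                matched = True
        if not matched:
            # A LEFT JOIN keeps x1 even without a match, padding x2 with NULL.
            result.append((x1["id"], None))
    return result


rows = [
    {"id": 1, "data": "{}"},
    {"id": 2, "data": '{"parent": "1"}'},
    {"id": 3, "data": '{"parent": 1}'},
]
print(left_join_children(rows))
# prints [(1, 2), (1, 3), (2, None), (3, None)]
```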

-

-

-

Wanted: Rules for pandemic data access that everyone can trust. (2021). Nature, 594(7861), 8–8. https://doi.org/10.1038/d41586-021-01460-7

-

-

www.bmj.com

-

Mahase, E. (2021). Covid-19: Government faces legal challenge over alleged suppression of school data. BMJ, 373, n1408. https://doi.org/10.1136/bmj.n1408

-

-

dplyr.tidyverse.org

-

variables or computations to group by

data-masking
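dplyr's `group_by()` accepts either existing columns or expressions computed from them. dplyr is an R package, so what follows is only a Python analogue of the idea, not dplyr's API. The `group_by` function and the row dictionaries are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Hashable, List

Row = Dict[str, Any]


def group_by(rows: List[Row], key: Callable[[Row], Hashable]) -> Dict[Hashable, List[Row]]:
    """Group rows by the value `key` computes for each row.

    `key` can simply read a column (like grouping by a variable) or
    derive a new value (like grouping by a computation).
    """
    groups: Dict[Hashable, List[Row]] = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row)
    return dict(groups)


rows = [{"year": 1994, "votes": 10}, {"year": 1998, "votes": 7}, {"year": 2003, "votes": 4}]
by_decade = group_by(rows, lambda r: r["year"] // 10 * 10)  # a computed grouping key
print({decade: len(members) for decade, members in by_decade.items()})
# prints {1990: 2, 2000: 1}
```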


-

- May 2021

-

www.sciencemag.org

-

Wadman, M. (2021). Antivaccine activists use a government database on side effects to scare the public. Science. https://doi.org/10.1126/science.abj6981

Tags

- VAERS

- vaccine-safety

- misinterpretation

- false

- vaccine

- data

- misinformation

- side-effects

- database

- COVID-19

- Centers for Disease Control and Prevention

- CDC

- Vaccine Adverse Events Reporting System

- public

- bad science

- USA

- anti-vaxxer

- blood clots

- Fox News

- lang:en

- antivaccine

- government

- misleading

- activism

- science

- is:article


-

-

-

On April 27, 2021, Portugal's data protection authority, the National Data Protection Commission, ordered Statistics Portugal, in carrying out the national census, to suspend processing of personal data in any third country that lacks adequate privacy protections, including the United States

Cloudflare

-

-

www.eventbrite.com

-

Data Collection and Integration to Enhance Public Health Registration, Thu, Jun 10, 2021 at 1:00 PM | Eventbrite. (n.d.). Retrieved May 28, 2021, from https://www.eventbrite.com/e/data-collection-and-integration-to-enhance-public-health-registration-156146370999

-

-

-

Wang, C. J. (2021). Contact-tracing app curbed the spread of COVID in England and Wales. Nature. https://doi.org/10.1038/d41586-021-01354-8

-

-

-

The O’Reilly report suggests that the second-most significant barrier to AI adoption is a lack of quality data, with 18% of respondents saying their organization is only beginning to realize the importance of high-quality data.

Data quality is secondary in the sense that data can be cleaned, but generating test data for training AI is a non-starter.

-

-

humanitas.ai

-

www.theguardian.com

-

No 10 ‘tried to block’ data on spread of new Covid variant in English schools | Coronavirus | The Guardian. (n.d.). Retrieved May 24, 2021, from https://www.theguardian.com/world/2021/may/22/no-10-tried-to-block-data-on-spread-of-new-covid-variant-in-english-schools

-

-

www.connectedpapers.com

-

-

The research found that working 55 hours or more a week was associated with a 35% higher risk of stroke and a 17% higher risk of dying from heart disease, compared with a working week of 35 to 40 hours.


-

-

moodle.southwestern.edu

-

To investigate these hypotheses, I created an election-year-country dataset covering the period from the early 1990s to the present for all post-communist democracies.[7] The dataset is structured as a quasi-time series of 93 parliamentary elections in 17 countries from 1991 to 2012, and the dependent variable is the natural log of the radical right party’s combined vote share in elections held at time t.

This is the data: her explanation of the dataset she created.

-

-

www.skyhunter.com

-

a hypermedia server might use sensors to alert users to the arrival of new material: if a sensor were attached to a document, running a new link to the document would set off the sensor

Linked data notifications?

(I like the "sensor" imagery.)
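The "sensor" idea is essentially an observer attached to a document that fires when something new links to it. Below is a minimal in-memory Python sketch under that reading. `LinkRegistry`, `attach_sensor`, and `add_link` are hypothetical names. This is not the W3C Linked Data Notifications protocol, in which senders POST notifications to an inbox that the target resource advertises.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Sensor = Callable[[str, str], None]  # called as sensor(source, target)


class LinkRegistry:
    """Store links between documents and fire sensors on new incoming links."""

    def __init__(self) -> None:
        self._links: List[Tuple[str, str]] = []
        self._sensors: DefaultDict[str, List[Sensor]] = defaultdict(list)

    def attach_sensor(self, document: str, sensor: Sensor) -> None:
        self._sensors[document].append(sensor)

    def add_link(self, source: str, target: str) -> None:
        self._links.append((source, target))
        # Making a new link to `target` sets off every sensor attached to it.
        for sensor in self._sensors[target]:
            sensor(source, target)


alerts: List[str] = []
registry = LinkRegistry()
registry.attach_sensor("doc-b", lambda src, dst: alerts.append(f"{src} -> {dst}"))
registry.add_link("doc-a", "doc-b")  # sensor fires
registry.add_link("doc-a", "doc-c")  # no sensor on doc-c
print(alerts)
# prints ['doc-a -> doc-b']
```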


-

-

graph.global

-

Surely RDF already has something like this...? I tried looking around briefly but couldn't find it.

-

-

howtomeasureghosts.substack.com

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Kevin Marks</span> in "@alexstamos You gave a pithy quote about 'strangers' which downplayed the sustained attempts by the social silos to gather and document our lives in their dossiers and cash in on it. @matlock explains more here https://t.co/lo4dG4CuqV" / Twitter (<time class='dt-published'>05/18/2021 19:32:39</time>)</cite></small>

-

-

www.global-solutions-initiative.org

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Maria Farrell</span> in What is Ours is Only Ours to Give — Crooked Timber (<time class='dt-published'>05/18/2021 11:28:17</time>)</cite></small>

-

-

www.dougengelbart.org

-

Draft notes, E-mail, plans, source code, to-do lists, what have you

The personal nature of this information means that users need control of their information. Tim Berners-Lee's Solid (Social Linked Data) project looks like it could do some of this stuff.

-

-

www.frontiersin.org

-

Di Sebastiano, K. M., Chulak-Bozzer, T., Vanderloo, L. M., & Faulkner, G. (2020). Don’t Walk So Close to Me: Physical Distancing and Adult Physical Activity in Canada. Frontiers in Psychology, 11. https://doi.org/10.3389/fpsyg.2020.01895

-

-

data-collective.org.uk


-

-

www.nytimes.com

-

Kramer, J. (2021, May 7). In Covid Vaccine Data, L.G.B.T.Q. People Fear Invisibility. The New York Times. https://www.nytimes.com/2021/05/07/health/coronavirus-lgbtq.html

-

-

www.ncbi.nlm.nih.gov

-

Jacobson Vann, J. C., Jacobson, R. M., Coyne‐Beasley, T., Asafu‐Adjei, J. K., & Szilagyi, P. G. (2018). Patient reminder and recall interventions to improve immunization rates. The Cochrane Database of Systematic Reviews, 2018(1). https://doi.org/10.1002/14651858.CD003941.pub3

-

-

wintoncentre.maths.cam.ac.uk

-

Winton Centre Cambridge. (n.d.). Retrieved May 12, 2021, from https://wintoncentre.maths.cam.ac.uk/news/latest-data-mhra-blood-clots-associated-astra-zeneca-covid-19-vaccine/

-

-

psyarxiv.com

-

Westgate, Erin, Nick Buttrick, Yijun Lin, and Gaelle Milad El Helou. ‘Pandemic Boredom: Predicting Boredom and Its Consequences during Self-Isolation and Quarantine’. PsyArXiv, 11 May 2021. https://doi.org/10.31234/osf.io/78kma.

-

-

communityfoundations.ca

-

twitter.com

-

Darren Dahly on Twitter. (n.d.). Twitter. Retrieved 1 May 2021, from https://twitter.com/statsepi/status/1385127211699691520

-

-

twitter.com

-

ReconfigBehSci on Twitter. (n.d.). Twitter. Retrieved 1 March 2021, from https://twitter.com/SciBeh/status/1351197475282022404

-

-

-

Ashokkumar, A., & Pennebaker, J. W. (2021). The Social and Psychological Changes of the First Months of COVID-19. PsyArXiv. https://doi.org/10.31234/osf.io/a34qp

-

-

opportunityinsights.org

-

The Economic Impacts of COVID-19: Evidence from a New Public Database Built Using Private Sector Data. (2020, May 7). Opportunity Insights. https://opportunityinsights.org/paper/tracker/

-

-

twitter.com

-

ReconfigBehSci. (2020, November 18). @danielmabuse yes, we all make mistakes, but a responsible actor also factors the kinds of mistakes she is prone to making into decisions on what actions to take: I’m not that great with my hands, so I never contemplated being a neuro-surgeon. Not everyone should be a public voice on COVID [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1329002783094296577

-

-

twitter.com

-

Robert Colvile. (2021, February 16). The vaccine passports debate is a perfect illustration of my new working theory: That the most important part of modern government, and its most important limitation, is database management. Please stick with me on this—It’s much more interesting than it sounds. (1/?) [Tweet]. @rcolvile. https://twitter.com/rcolvile/status/1361673425140543490

-

-

twitter.com

-

Stephan Lewandowsky. (2021, March 6). 25 March deadline for submissions to our ‘special track’ https://t.co/qwLxCCSjks at Data for Policy conference, 14-16 September at UCL. Please consider submitting @SciBeh @stefanmherzog @Sander_vdLinden https://t.co/A8KSC1Tkh9 [Tweet]. @STWorg. https://twitter.com/STWorg/status/1368280722709110789

-

-

twitter.com

-

Tommy Shane on Twitter. (n.d.). Twitter. Retrieved 14 February 2021, from https://twitter.com/tommyshane/status/1357385093514461184

-

-

twitter.com

-

ReconfigBehSci on Twitter: ‘the SciBeh initiative is about bringing knowledge to policy makers and the general public, but I have to say this advert I just came across worries me: Where are the preceding data integrity and data analysis classes? Https://t.co/5LwkC1SVyF’ / Twitter. (n.d.). Retrieved 18 February 2021, from https://twitter.com/SciBeh/status/1362344945697308674

-

-

twitter.com

-

Jens von Bergmann on Twitter. (n.d.). Twitter. Retrieved 3 March 2021, from https://twitter.com/vb_jens/status/1329450564019761156

-

-

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Maria Farrell</span> in What is Ours is Only Ours to Give — Crooked Timber (<time class='dt-published'>05/06/2021 13:32:31</time>)</cite></small>

-

-

www.ianbrown.tech

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Maria Farrell</span> in What is Ours is Only Ours to Give — Crooked Timber (<time class='dt-published'>05/06/2021 13:32:31</time>)</cite></small>

-

-

psyarxiv.com

-

Rohrer, J. M., Schmukle, S., & McElreath, R. (2021). The Only Thing That Can Stop Bad Causal Inference Is Good Causal Inference. PsyArXiv. https://doi.org/10.31234/osf.io/mz5jx

-

-

twitter.com

-

ReconfigBehSci. (2021, May 4). RT @CT_Bergstrom: In today’s much-discussed @nytimes story from @apoorva_nyc (https://t.co/WoyAuPyQNt) there is a graph that I find quite p… [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1389740184158183427

-

-

www.propublica.org

-

Martin, N. (n.d.). Nobody Accurately Tracks Health Care Workers Lost to COVID-19. So She Stays Up At Night Cataloging the Dead. ProPublica. Retrieved March 1, 2021, from https://www.propublica.org/article/nobody-accurately-tracks-health-care-workers-lost-to-covid-19-so-she-stays-up-at-night-cataloging-the-dead?token=k0iWSlfCBQl5DBRfyvJNIuVaKXSL22HN

-

-

www.quantamagazine.org

-

Cepelewicz, J. (n.d.). The Hard Lessons of Modeling the Coronavirus Pandemic. Quanta Magazine. Retrieved February 11, 2021, from https://www.quantamagazine.org/the-hard-lessons-of-modeling-the-coronavirus-pandemic-20210128/

-

-

-

NERVTAG paper on COVID-19 variant of concern B.1.1.7. (n.d.). GOV.UK. Retrieved March 1, 2021, from https://www.gov.uk/government/publications/nervtag-paper-on-covid-19-variant-of-concern-b117

-

-

-

Ross, J. S. (2021). Covid-19, open science, and the CVD-COVID-UK initiative. BMJ, 373, n898. https://doi.org/10.1136/bmj.n898

-

-

-

The Data Visualizations Behind COVID-19 Skepticism. (n.d.). The Data Visualizations Behind COVID-19 Skepticism. Retrieved March 27, 2021, from http://vis.csail.mit.edu/covid-story/

-

- Apr 2021

-

twitter.com

-

Benjy Renton on Twitter: “For those who are wondering: There is a slight association (r = 0.34) between the percentage a county voted for Trump in 2020 and estimated hesitancy levels. As @JReinerMD mentioned, GOP state, county and local levels need to do their part to promote vaccination. Https://t.co/ZY2lUqHgLd” / Twitter. (n.d.). Retrieved April 28, 2021, from https://twitter.com/bhrenton/status/1382330404586274817

-

-

twitter.com

-

Seth Trueger. (2021, April 16). *far lower than expectations that’s < 1 in 10k, which is way better than 95% https://t.co/mxQ84MFKc2 [Tweet]. @MDaware. https://twitter.com/MDaware/status/1383065988204220419

-

-

twitter.com

-

Mehdi Hasan. (2021, April 12). ‘Given you acknowledged...in March 2020 that Asian countries were masking up at the time, saying we shouldn’t mask up as well was a mistake, wasn’t it... At the time, not just in hindsight?’ My question to Dr Fauci. Listen to his very passionate response: Https://t.co/BAf4qp0m6G [Tweet]. @mehdirhasan. https://twitter.com/mehdirhasan/status/1381405233360814085

-

-

-

On the Heinrich-Böll Stiftung study from December, which is now being debated by the IPCC.

-

-

twitter.com

-

Carolyn Barber, MD on Twitter: ‘@VincentRK Thank you. Very helpful. Retweeting this which lines up with your UK data. Https://t.co/ECNaGuqiaB’ / Twitter. (n.d.). Retrieved 24 April 2021, from https://twitter.com/cbarbermd/status/1381407627884695556

-

-

-

Tollefson, J. (2021, April 16). The race to curb the spread of COVID vaccine disinformation. Nature. https://www.nature.com/articles/d41586-021-00997-x

-

-

investors.modernatx.com

-

Moderna Provides Clinical and Supply Updates on COVID-19 Vaccine Program Ahead of 2nd Annual Vaccines Day. (2021, April 13). Moderna, Inc. https://investors.modernatx.com/news-releases/news-release-details/moderna-provides-clinical-and-supply-updates-covid-19-vaccine

Tags

- dose

- is:webpage

- press release

- data

- variant

- antibody

- supply

- global

- COVID-19

- clinical

- moderna

- booster

- biotechnology

- vaccination

- efficacy

- lang:en


-

-

arxiv.org

-

Yang, K.-C., Pierri, F., Hui, P.-M., Axelrod, D., Torres-Lugo, C., Bryden, J., & Menczer, F. (2020). The COVID-19 Infodemic: Twitter versus Facebook. ArXiv:2012.09353 [Cs]. http://arxiv.org/abs/2012.09353

-

-

journals.sagepub.com

-

Scheel, Anne M., Mitchell R. M. J. Schijen, and Daniël Lakens. ‘An Excess of Positive Results: Comparing the Standard Psychology Literature With Registered Reports’. Advances in Methods and Practices in Psychological Science 4, no. 2 (1 April 2021): 25152459211007467. https://doi.org/10.1177/25152459211007467.

-

-

blogs.bmj.com

-

Nabavi, N., & Dobson, J. (2021, April 21). Covid-19 new variants—known unknowns. The BMJ Opinion. https://blogs.bmj.com/bmj/2021/04/21/covid-19-new-variants-known-unknowns/

-

-

www.nejm.org

-

Shimabukuro, Tom T., Shin Y. Kim, Tanya R. Myers, Pedro L. Moro, Titilope Oduyebo, Lakshmi Panagiotakopoulos, Paige L. Marquez, et al. ‘Preliminary Findings of mRNA Covid-19 Vaccine Safety in Pregnant Persons’. New England Journal of Medicine (21 April 2021). https://doi.org/10.1056/NEJMoa2104983.

Tags

- VAERS

- preterm

- Vaccine Adverse Event Reporting System

- injection

- data

- research

- medicine

- neonatal

- preliminary

- abortion

- myalgia

- COVID-19

- maternal

- headache

- women

- v-safe after vaccination health checker

- chills

- vaccination

- birth

- v-safe pregnancy registry

- lang:en

- fever

- safety

- mRNA

- pain

- infant

- is:article

- pregnant


-

-

www.geeksforgeeks.org

-

Drop missing values from the dataframe. In this method, we can see that by using the dropmissing() method, we are able to remove the rows that have missing values in the data frame. Dropping missing values suits datasets that are large enough that losing some rows will not affect the prediction. It does not suit small datasets, where it may lead to underfitting the models.

Listwise Deletion
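`dropmissing()` comes from Julia's DataFrames.jl. The technique it implements is listwise deletion: any row with at least one missing value is discarded entirely. Below is a plain-Python sketch that treats `None` and float NaN as "missing"; that definition and the function names are assumptions, not part of the quoted tutorial.

```python
import math
from typing import Any, Dict, List

Row = Dict[str, Any]


def is_missing(value: Any) -> bool:
    """Treat None and float NaN as missing values (an assumed convention)."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def listwise_delete(rows: List[Row]) -> List[Row]:
    """Keep only rows in which every field is present."""
    return [row for row in rows if not any(is_missing(v) for v in row.values())]


data = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": 61000.0},
    {"age": 29, "income": float("nan")},
    {"age": 41, "income": 48000.0},
]
complete = listwise_delete(data)
print(len(data), "->", len(complete))
# prints 4 -> 2
```

Half of these toy rows disappear, which illustrates the quote's caution: on a small dataset, listwise deletion can discard a large share of the observations.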

-

-

www.thelancet.com

-

Ten scientific reasons in support of airborne transmission of SARS-CoV-2—The Lancet. (n.d.). Retrieved April 19, 2021, from https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(21)00869-2/fulltext

-

-

Local file

-

Taquet, M. (2021, April 15). COVID-19 and cerebral venous thrombosis: a retrospective cohort study of 513,284 confirmed COVID-19 cases. https://doi.org/10.17605/OSF.IO/H2MT7

-

-

www.imperial.ac.uk

-

More transmissible and evasive SARS-CoV-2 variant growing rapidly in Brazil | Imperial News | Imperial College London. (n.d.). Imperial News. Retrieved 18 April 2021, from https://www.imperial.ac.uk/news/216053/more-transmissible-evasive-sarscov2-variant-growing/

-

-

ec.europa.eu

-

ec.europa.eu

-

GDP & main components in EU countries.

-

-

ec.europa.eu


-

-

twitter.com

-

Jeremy Faust MD MS (ER physician) on Twitter: “Let’s talk about the background risk of CVST (cerebral venous sinus thrombosis) versus in those who got J&J vaccine. We are going to focus in on women ages 20-50. We are going to compare the same time period and the same disease (CVST). DEEP DIVE🧵 KEY NUMBERS!” / Twitter. (n.d.). Retrieved April 15, 2021, from https://twitter.com/jeremyfaust/status/1382536833863651330

-

-

www.dataprotectionreport.com

-

www.pnas.org

-

West, J. D., & Bergstrom, C. T. (2021). Misinformation in and about science. Proceedings of the National Academy of Sciences, 118(15). https://doi.org/10.1073/pnas.1912444117

-

-

www.theguardian.com

-

UCL team’s claim that herd immunity set to be achieved in UK disputed | Coronavirus | The Guardian. (n.d.). Retrieved April 12, 2021, from https://www.theguardian.com/world/2021/apr/09/ucl-team-claim-covid-19-herd-immunity-achieved-uk-disputed-scientists

-

-

www.washingtonpost.com

-

Emails show Trump officials celebrate efforts to change CDC reports on coronavirus—The Washington Post. (n.d.). Retrieved April 12, 2021, from https://www.washingtonpost.com/health/2021/04/09/cdc-covid-political-interference/

Tags

- response

- data

- schools

- Trump

- scientific practice

- misinformation

- scientific advice

- economy

- politics

- COVID-19

- Centers for Disease Control and Prevention

- CDC

- political interference

- bad science

- scientific integrity

- USA

- children

- public health

- lang:en

- government

- science

- Donald Trump

- is:article


-

-

-

Covid: Red states vaccinating at lower rate than blue states—CNNPolitics. (n.d.). Retrieved April 12, 2021, from https://edition.cnn.com/2021/04/10/politics/vaccinations-state-analysis/

-

-

www.theguardian.com

-

UK’s Covid vaccine programme on track despite AstraZeneca problems | Vaccines and immunisation | The Guardian. (n.d.). Retrieved April 12, 2021, from https://www.theguardian.com/society/2021/apr/11/uks-covid-vaccine-programme-on-track-despite-astrazeneca-problems

-

-

www.carbonbrief.org

-

If temperatures rise by 4 degrees by 2100, around 40% of the Antarctic ice shelf area will very likely collapse.

Tags

- time:1980-2100

- concerned:ice shelves

- parameter: ice shelf runoff extent

- region:Antarctica

- intensity:depending on temperature rise (1.5-2-4)

- data-source:simulation

- mediatype:blogpost

- threshold:collapse

- researcher:Ella Gilbert

- distribution:depending on geographic situation

- process:ice shelf hydrofracture

- impact:glacier melting

- trigger:atmospheric heating


-

-

frankensteinvariorum.github.io

-

Interesting to see this actually hosted by GitHub pages.

-

-

reallifemag.com

-

The privacy policy — unlocking the door to your profile information, geodata, camera, and in some cases emails — is so disturbing that it has set off alarms even in the tech world.

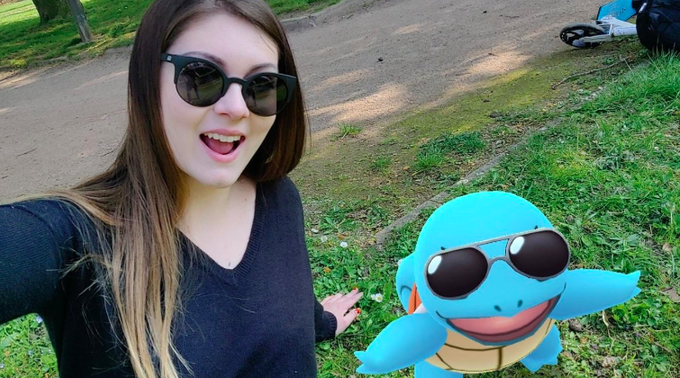

This Intercept article covers some of the specific privacy policy concerns Barron hints at here. The discussion of one of the core patents underlying the game, described as a “System and Method for Transporting Virtual Objects in a Parallel Reality Game”, is particularly interesting. Essentially, this system generates revenue for the company (in this case Niantic and Google) through the gamified collection of data on the real world. That selfie you took with Squirtle is starting to feel a little less innocent in retrospect...

-

Yelp, like Google, makes money by collecting consumer data and reselling it to advertisers.

This sentence reminded me of our "privacy checkup" activity from week 7 and has made me want to go and review the terms of service for some of the companies featured in this article. I don't use Yelp, but Venmo and Lyft are definitely keeping track of some of my data.

-

-

med.stanford.edu

-

The insertion of an algorithm’s predictions into the patient-physician relationship also introduces a third party, turning the relationship into one between the patient and the health care system. It also means significant changes in terms of a patient’s expectation of confidentiality. “Once machine-learning-based decision support is integrated into clinical care, withholding information from electronic records will become increasingly difficult, since patients whose data aren’t recorded can’t benefit from machine-learning analyses,” the authors wrote.

There is some work being done on federated learning, where the algorithm works on decentralised data that stays in place with the patient and the ML model is brought to the patient so that their data remains private.
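One common scheme for this is federated averaging (FedAvg). Each site trains on its own records, and only the model parameters travel to a coordinator, which averages them weighted by each site's sample count. The toy Python sketch below uses a one-parameter linear model `y ≈ w * x`. The data, learning rate, and round counts are illustrative assumptions, not taken from the article.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a site


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few steps of gradient descent on mean squared error, locally."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(sites: List[Dataset], rounds: int = 50, w: float = 0.0) -> float:
    """Broadcast w, let each site train locally, then average the returned weights."""
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        local_weights = [local_update(w, d) for d in sites]
        # Only the weights are shared; each average is weighted by site size.
        w = sum(lw * len(d) for lw, d in zip(local_weights, sites)) / total
    return w


# Three hypothetical sites whose private data all follow y = 3x.
sites = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)], [(0.5, 1.5), (1.5, 4.5)]]
print(round(federated_averaging(sites), 3))
# prints 3.0
```

The raw `(x, y)` pairs are only ever read inside `local_update`. This is the property the annotation points to, although real deployments typically add further protections such as secure aggregation.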

-

-

medium.com

-

To improve the usability of text fields and to determine which text field variables to alter, our researchers and designers conducted two studies between November 2016 and February 2017, with actual users.

-

You might not always notice, but Material Design is constantly evolving and iterating based on research.

-

-

www.peakgrantmaking.org

-

foundation.mozilla.org

- Mar 2021

-

www.theatlantic.com

-

The scholars Nick Couldry and Ulises Mejias have called it “data colonialism,” a term that reflects our inability to stop our data from being unwittingly extracted.

I've not run across data colonialism before.

-

-

www.bloomberg.com

-

Younger Brazilians Are Dying From Covid in an Alarming New Shift. (2021, March 26). Bloomberg.Com. https://www.bloomberg.com/news/articles/2021-03-26/younger-brazilians-are-dying-from-covid-in-an-alarming-new-shift

-

-

tatianamac.com

-

Visualise written content in a more dynamic way. Many people, some neurodivergent folks especially, benefit from information being distilled into diagrams, comics, or less word-dense formats. Visuals can also benefit people who might not read/understand the language you wrote it in. They can also be an effective lead-in to your long-form from visually-driven avenues like Pinterest or Instagram.

This is also a great exercise for readers and learners. If the book doesn't do this for you already, spend some time to annotate it or do it yourself.

-

-

science.sciencemag.org

-

Monod, Mélodie, Alexandra Blenkinsop, Xiaoyue Xi, Daniel Hebert, Sivan Bershan, Simon Tietze, Marc Baguelin, et al. ‘Age Groups That Sustain Resurging COVID-19 Epidemics in the United States’. Science 371, no. 6536 (26 March 2021). https://doi.org/10.1126/science.abe8372.

-

-

www.medrxiv.org

-

Ioannidis, John P. A. (2020) ‘The Infection Fatality Rate of COVID-19 Inferred from Seroprevalence Data’. MedRxiv. https://doi.org/10.1101/2020.05.13.20101253.

-

-

data.cdc.gov

-

Calgary, Open. ‘COVID-19 Case Surveillance Public Use Data with Geography | Data | Centers for Disease Control and Prevention’. Accessed 26 March 2021. https://data.cdc.gov/Case-Surveillance/COVID-19-Case-Surveillance-Public-Use-Data-with-Ge/n8mc-b4w4.

-

-

theconversation.com

-

Hale, Thomas. ‘What We Learned from Tracking Every COVID Policy in the World’. The Conversation. Accessed 26 March 2021. http://theconversation.com/what-we-learned-from-tracking-every-covid-policy-in-the-world-157721.

-

-

github.com

-

The repository also contains the datasets used in our experiments, in JSON format. These are in the data folder.


-

-

en.wikipedia.org

-

In computer science, a tree is a widely used abstract data type that simulates a hierarchical tree structure

a tree (data structure) is the computer science analogue/dual to tree structure in mathematics
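As an abstract data type, a tree is a collection of nodes, each holding a value and a list of child nodes, with a single root at the top. Below is a minimal Python sketch; the class and method names are illustrative and not taken from any particular library.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class TreeNode:
    """A node in a rooted tree: a value plus zero or more children."""

    value: str
    children: List["TreeNode"] = field(default_factory=list)

    def add_child(self, value: str) -> "TreeNode":
        child = TreeNode(value)
        self.children.append(child)
        return child

    def preorder(self) -> Iterator[str]:
        """Yield values depth-first, visiting each parent before its children."""
        yield self.value
        for child in self.children:
            yield from child.preorder()

    def levels(self) -> int:
        """Count the levels from this node down to its deepest descendant."""
        return 1 + max((child.levels() for child in self.children), default=0)


root = TreeNode("root")
a = root.add_child("a")
a.add_child("a1")
root.add_child("b")
print(list(root.preorder()), root.levels())
# prints ['root', 'a', 'a1', 'b'] 3
```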

-

-

en.wikipedia.org

-

graph theory is the study of graphs, which are mathematical structures used to model pairwise relations between objects
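In code, the "pairwise relations between objects" are often stored as an adjacency list, where each vertex maps to the set of vertices it shares an edge with. Below is a minimal Python sketch of an undirected graph with a breadth-first reachability check; the `Graph` class is hypothetical.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Hashable, Set


class Graph:
    """Undirected graph stored as an adjacency list (vertex -> set of neighbors)."""

    def __init__(self) -> None:
        self.adj: DefaultDict[Hashable, Set[Hashable]] = defaultdict(set)

    def add_edge(self, u: Hashable, v: Hashable) -> None:
        # One pairwise relation, recorded in both directions.
        self.adj[u].add(v)
        self.adj[v].add(u)

    def connected(self, start: Hashable, goal: Hashable) -> bool:
        """Breadth-first search: is there any path from start to goal?"""
        seen = {start}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node == goal:
                return True
            for neighbor in self.adj.get(node, set()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        return False


g = Graph()
g.add_edge("a", "b")
g.add_edge("b", "c")
g.add_edge("x", "y")
print(g.connected("a", "c"), g.connected("a", "x"))
# prints True False
```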

-

-

www.nature.com

-

Nsoesie, E. O., Oladeji, O., Abah, A. S. A., & Ndeffo-Mbah, M. L. (2021). Forecasting influenza-like illness trends in Cameroon using Google Search Data. Scientific Reports, 11(1), 6713. https://doi.org/10.1038/s41598-021-85987-9

-

-

scholar.google.com

-

-

Webpages for hundreds of hospitals require users to click through to find prices, undermining federal transparency rule, Journal analysis shows

-

-

puntofisso.net


-

-

www.theguardian.com

-

Carl Bergstrom: “People are using data to bullshit.” (2020, August 1). The Guardian. http://www.theguardian.com/science/2020/aug/01/carl-bergstrom-people-are-using-data-to-bullshit

-

-

journals.sagepub.com

-

Hoogeveen, S., Sarafoglou, A., & Wagenmakers, E.-J. (2020). Laypeople Can Predict Which Social-Science Studies Will Be Replicated Successfully. Advances in Methods and Practices in Psychological Science. https://doi.org/10.1177/2515245920919667

-

-

www.newscientist.com

-

Stokel-Walker, C. (n.d.). Concerns raised about pubs collecting data for coronavirus tracing. New Scientist. Retrieved June 25, 2020, from https://www.newscientist.com/article/2246965-concerns-raised-about-pubs-collecting-data-for-coronavirus-tracing/

-

-

twitter.com

-

Carl T. Bergstrom on Twitter. (n.d.). Twitter. Retrieved 19 February 2021, from https://twitter.com/CT_Bergstrom/status/1357799981977985025

-

-

twitter.com

-

ReconfigBehSci. (2021, January 14). RT @d_spiegel: Extraordinary data from Scotland on excess deaths by cause and location in 2020 https://t.co/41KClWvMyr 6,686 deaths involvi… [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1349741040664776706

-

-

www.sciencedirect.com

-

Perra, N. (2021). Non-pharmaceutical interventions during the COVID-19 pandemic: A review. Physics Reports. https://doi.org/10.1016/j.physrep.2021.02.001

-

-

twitter.com

-

OC. (2021, January 22). Leadership. One of the most important and non-trivial steps taken by @JoeBiden is the decision to prioritize the protection of those at the highest risk. In Israel, our analysis shows that municipalities at low SES have the lowest rates of vaccination of at-risk populations.1/4 https://t.co/1aiqymQlMQ [Tweet]. @MDCaspi. https://twitter.com/MDCaspi/status/1352590064900038662

Tags

- data scientists

- metric

- vaccine

- municipalities

- accurate

- data

- reduction

- education

- is:tweet

- policy makers

- COVID-19

- virus cases

- infections

- policy experts

- vulnerable

- rates

- leadership

- transparency

- elders

- vaccination

- lang:en

- pandemic

- high risk

- lower socio-economic status

- Israel

- healthcare


-

-

twitter.com

-

Ashish K. Jha, MD, MPH. (2020, December 12). Michigan vs. Ohio State Football today postponed due to COVID But a comparison of MI vs OH on COVID is useful Why? While vaccines are coming, we have 6-8 hard weeks ahead And the big question is—Can we do anything to save lives? Lets look at MI, OH for insights Thread [Tweet]. @ashishkjha. https://twitter.com/ashishkjha/status/1337786831065264128

-

-

scholarphi.org

-

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>hyperlink.academy</span> in The Future of Textbooks (<time class='dt-published'>03/18/2021 23:54:19</time>)</cite></small>


-

-

journals.lww.com

-

Clinical Data Systems to Support Public Health Practice: A National Survey of Software and Storage Systems Among Local Health Departments

-

-

www.theguardian.com

-

the Guardian. ‘There’s No Proof the Oxford Vaccine Causes Blood Clots. So Why Are People Worried? | David Spiegelhalter’, 15 March 2021. http://www.theguardian.com/commentisfree/2021/mar/15/evidence-oxford-vaccine-blood-clots-data-causal-links.

-

-

www.thelancet.com

-

Vijayasingham, L., Bischof, E., & Wolfe, J. (2021). Sex-disaggregated data in COVID-19 vaccine trials. The Lancet, 397(10278), 966–967. https://doi.org/10.1016/S0140-6736(21)00384-6

-

-

www.pnas.org

-

Hong, B., Bonczak, B. J., Gupta, A., Thorpe, L. E., & Kontokosta, C. E. (2021). Exposure density and neighborhood disparities in COVID-19 infection risk. Proceedings of the National Academy of Sciences, 118(13). https://doi.org/10.1073/pnas.2021258118

-

-

-

Coenen, A., & Gureckis, T. (2021). The distorting effects of deciding to stop sampling information. PsyArXiv. https://doi.org/10.31234/osf.io/tbrea

-

-

www.nature.com

-

Guglielmi, G. (2020). Coronavirus and public holidays: What the data say. Nature, 588(7839), 549–549. https://doi.org/10.1038/d41586-020-03545-1

-

-

www.nature.com

-

Savaris, R. F., G. Pumi, J. Dalzochio, and R. Kunst. ‘Stay-at-Home Policy Is a Case of Exception Fallacy: An Internet-Based Ecological Study’. Scientific Reports 11, no. 1 (5 March 2021): 5313. https://doi.org/10.1038/s41598-021-84092-1.

-

-

www.thelancet.com

-

Varsavsky, Thomas, Mark S. Graham, Liane S. Canas, Sajaysurya Ganesh, Joan Capdevila Pujol, Carole H. Sudre, Benjamin Murray, et al. ‘Detecting COVID-19 Infection Hotspots in England Using Large-Scale Self-Reported Data from a Mobile Application: A Prospective, Observational Study’. The Lancet Public Health 6, no. 1 (1 January 2021): e21–29. https://doi.org/10.1016/S2468-2667(20)30269-3.

-

-

twitter.com

-

ReconfigBehSci. ‘RT @rypan: After 365 Days of Aggregating COVID Data, the Volunteers at Https://T.Co/Bhl4cjts8N Are Passing the Baton Back to @HHSGov & @CDC…’. Tweet. @SciBeh (blog), 9 March 2021. https://twitter.com/SciBeh/status/1369567816026841088.

-

-

-

Recht, Hannah, and Lauren Weber. ‘As Vaccine Rollout Expands, Black Americans Still Left Behind’. Kaiser Health News (blog), 29 January 2021. https://khn.org/news/article/as-vaccine-rollout-expands-black-americans-still-left-behind/.

-

-

quillette.com

-

Quillette. ‘Rise of the Coronavirus Cranks’, 16 January 2021. https://quillette.com/2021/01/16/rise-of-the-coronavirus-cranks/.

-

-

arxiv.org

-

Gupta, Prateek, Tegan Maharaj, Martin Weiss, Nasim Rahaman, Hannah Alsdurf, Abhinav Sharma, Nanor Minoyan, et al. ‘COVI-AgentSim: An Agent-Based Model for Evaluating Methods of Digital Contact Tracing’. ArXiv:2010.16004 [Cs], 29 October 2020. http://arxiv.org/abs/2010.16004.

-

-

jedkolko.com

-

Kolko, Jed. ‘The Geography of the COVID19 Third Wave | Jed Kolko’. Accessed 25 February 2021. http://jedkolko.com/2020/10/18/the-geography-of-the-covid19-third-wave/.

-

-

www.washingtonpost.com www.washingtonpost.com

-

Long, Heather. ‘Millions of Americans Are Heading into the Holidays Unemployed and over $5,000 behind on Rent’. Washington Post. Accessed 26 February 2021. https://www.washingtonpost.com/business/2020/12/07/unemployed-debt-rent-utilities/.

-

-

www.census.gov www.census.gov

-

U.S. Census Bureau. (2021, February 4). Small Business Pulse Survey Shows Shift in Expectations from Spring to Winter. The United States Census Bureau. https://www.census.gov/library/stories/2021/01/small-business-pulse-survey-shows-shift-in-expectations-from-spring-to-winter.html?utm_campaign=20210126msacos1ccstors&utm_medium=email&utm_source=govdelivery

-

-

www.washingtonpost.com www.washingtonpost.com

-

Meckler, L. (2021, January 26). CDC finds scant spread of coronavirus in schools with precautions in place. Washington Post. https://www.washingtonpost.com/education/cdc-school-virus-spread/2021/01/26/bf949222-5fe6-11eb-9061-07abcc1f9229_story.html?wpmk=1&wpisrc=al_news__alert-hse--alert-national&utm_source=alert&utm_medium=email&utm_campaign=wp_news_alert_revere&location=alert&pwapi_token=eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJjb29raWVuYW1lIjoid3BfY3J0aWQiLCJpc3MiOiJDYXJ0YSIsImNvb2tpZXZhbHVlIjoiNWE0ZTVmZjg5YmJjMGYwMzU5M2MxMzQ0IiwidGFnIjoid3BfbmV3c19hbGVydF9yZXZlcmUiLCJ1cmwiOiJodHRwczovL3d3dy53YXNoaW5ndG9ucG9zdC5jb20vZWR1Y2F0aW9uL2NkYy1zY2hvb2wtdmlydXMtc3ByZWFkLzIwMjEvMDEvMjYvYmY5NDkyMjItNWZlNi0xMWViLTkwNjEtMDdhYmNjMWY5MjI5X3N0b3J5Lmh0bWw_d3Btaz0xJndwaXNyYz1hbF9uZXdzX19hbGVydC1oc2UtLWFsZXJ0LW5hdGlvbmFsJnV0bV9zb3VyY2U9YWxlcnQmdXRtX21lZGl1bT1lbWFpbCZ1dG1fY2FtcGFpZ249d3BfbmV3c19hbGVydF9yZXZlcmUmbG9jYXRpb249YWxlcnQifQ.lDTv2Ht4JO64K3NlGmF4Uk3pW4oGuD4Vetm18lpknHY

Tags

- CDC

- transmission

- social distancing

- prevention

- is:news

- mask

- data

- lang:en

- policy

- remote schooling

- education

- asymptomatic

- sports

- united states

- COVID-19


-

-

www-sciencedirect-com.remote.baruch.cuny.edu www-sciencedirect-com.remote.baruch.cuny.edu

-

Goolsbee, Austan, and Chad Syverson. ‘Fear, Lockdown, and Diversion: Comparing Drivers of Pandemic Economic Decline 2020’. Journal of Public Economics 193 (1 January 2021): 104311. https://doi.org/10.1016/j.jpubeco.2020.104311.

-

-

media.ed.ac.uk media.ed.ac.uk

-

Li, Y. (2020, October 18). Public health measures and R. Media Hopper Create. https://media.ed.ac.uk/media/1_1uhkv3uc

-

-

-

McCabe, Stefan, Leo Torres, Timothy LaRock, Syed Arefinul Haque, Chia-Hung Yang, Harrison Hartle, and Brennan Klein. ‘Netrd: A Library for Network Reconstruction and Graph Distances’. ArXiv:2010.16019 [Physics], 29 October 2020. http://arxiv.org/abs/2010.16019.

-

-

inews.co.uk inews.co.uk

-

Fears for double Covid vaccine dosing as GPs left without up-to-date data amid NHS IT chaos. (2020, December 11). Inews.Co.Uk. https://inews.co.uk/news/health/covid-vaccines-fears-double-dosing-gps-nhs-it-chaos-exclusive-792346

-

-

psyarxiv.com psyarxiv.com

-

Kejriwal, M., & Shen, K. (2021, March 9). Affective Correlates of Metropolitan Food Insecurity and Misery during COVID-19. https://doi.org/10.31234/osf.io/6zxfe

-

-

-

Heroy, Samuel, Isabella Loaiza, Alexander Pentland, and Neave O’Clery. ‘Controlling COVID-19: Labor Structure Is More Important than Lockdown Policy’. ArXiv:2010.14630 [Physics], 5 November 2020. http://arxiv.org/abs/2010.14630.

-

-

-

Cantwell, G. T., Kirkley, A., & Newman, M. E. J. (2020). The friendship paradox in real and model networks. ArXiv:2012.03991 [Physics]. http://arxiv.org/abs/2012.03991

-

-

www.theguardian.com www.theguardian.com

-

Boseley, S. (2021, March 15). Coronavirus: report scathing on UK government’s handling of data. The Guardian. https://www.theguardian.com/world/2021/mar/15/mp-report-scathing-on-uk-goverment-handling-and-sharing-of-covid-data

-

-

www.forbes.com www.forbes.com

-

The urgent argument for turning any company into a software company is the growing availability of data, both inside and outside the enterprise. Specifically, the implications of so-called “big data”—the aggregation and analysis of massive data sets, especially mobile

Every company is described by a set of data: financial and other operational metrics, alongside message exchanges and paper documents. Whatever else we find that contributes to the simulacrum of an economic narrative will undeniably be constrained by the constitutive forces of its source data.

-

-

en.wikipedia.org en.wikipedia.org

-

The granularity of data refers to the size in which data fields are sub-divided
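To make "granularity" concrete, here is a minimal sketch that assumes a postal address as the example (the address, the `Address` class, and its field names are hypothetical illustrations, not taken from the quoted source). The same address is stored once as a single coarse field and once split into finer fields.

```python
from dataclasses import dataclass

# Coarse granularity: the whole (fictional) address lives in one field.
coarse_address = "1 Example Road, Springfield, AB1 2CD"


# Fine granularity: the same address split into separate fields.
# Field names are illustrative assumptions.
@dataclass
class Address:
    street: str
    city: str
    postcode: str


fine_address = Address(street="1 Example Road", city="Springfield", postcode="AB1 2CD")


def city_of_coarse(address: str) -> str:
    # With a single field, getting the city means parsing the string,
    # which only works if every record uses the same comma layout.
    return address.split(",")[1].strip()


def city_of_fine(address: Address) -> str:
    # With finer granularity, the city is directly addressable.
    return address.city
```

Finer granularity makes individual parts queryable without parsing, at the cost of a more rigid schema.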

-

-

aatishb.com aatishb.com

-

Double-time for the world.

-

-

www.nesta.org.uk www.nesta.org.uk

-

rapidreviewscovid19.mitpress.mit.edu rapidreviewscovid19.mitpress.mit.edu

-

Editorial Office · Rapid Reviews COVID-19. (n.d.). Rapid Reviews COVID-19. Retrieved 6 March 2021, from https://rapidreviewscovid19.mitpress.mit.edu/editors2

-

-

twitter.com twitter.com

-

ReconfigBehSci on Twitter: ‘RT @d_spiegel: Excellent new Covid RED dashboard from UCL https://t.co/wHMG8LzTUb Would be good to also know (a) how many contacts isolate…’ / Twitter. (n.d.). Retrieved 6 March 2021, from https://twitter.com/SciBeh/status/1323316018484305920

-

-

medium.com medium.com

-

a data donation platform that allows users of browsers to donate data on their usage of specific services (e.g. YouTube or Facebook) to a platform.

This seems like a really promising pattern for many data-driven problems. Browsers can support opt-in donation to contribute their data to improve Web search, social media, recommendations, lots of services that implicitly require lots of operational data.

-

-

twitter.com twitter.com

-

DataBeers Brussels. (2020, October 26). ⏰ Our next #databeers #brussels is tomorrow night and we’ve got a few tickets left! Don’t miss out on some important and exciting talks from: 👉 @svscarpino 👉 Juami van Gils 👉 Joris Renkens 👉 Milena Čukić 🎟️ Last tickets here https://t.co/2upYACZ3yS https://t.co/jEzLGvoxQe [Tweet]. @DataBeersBru. https://twitter.com/DataBeersBru/status/1320743318234562561

-

-

twitter.com twitter.com

-

Cailin O’Connor. (2020, November 10). New paper!!! @psmaldino look at what causes the persistence of poor methods in science, even when better methods are available. And we argue that interdisciplinary contact can lead better methods to spread. 1 https://t.co/C5beJA5gMi [Tweet]. @cailinmeister. https://twitter.com/cailinmeister/status/1326221893372833793

-

-

twitter.com twitter.com

-

ReconfigBehSci. (2020, November 23). RT @lakens: Ongoing, an incredibly awesome talk on computational reproducibility by @AdinaKrik. Slides: Https://t.co/QslHW3gEtm. If you are… [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1331265422109396995

-

-

twitter.com twitter.com

-

The COVID Tracking Project. (2020, November 19). Our daily update is published. States reported 1.5M tests, 164k cases, and 1,869 deaths. A record 79k people are currently hospitalized with COVID-19 in the US. Today’s death count is the highest since May 7. Https://t.co/8ps5itYiWr [Tweet]. @COVID19Tracking. https://twitter.com/COVID19Tracking/status/1329235190615474179

-

-

twitter.com twitter.com

-

Flightradar24. (2020, November 24). The skies above North America at Noon ET on the Tuesday before Thanksgiving. Active flights 2018: 6,815 2019: 7,630 2020: 6,972 📡 https://t.co/NePPWZCDVp https://t.co/WOY9j0BXpx [Tweet]. @flightradar24. https://twitter.com/flightradar24/status/1331286193875640322

-

-

www.gov.uk www.gov.uk

-

Coronavirus cases by local authority: Epidemiological data, 27 November 2020. (n.d.). GOV.UK. Retrieved 4 March 2021, from https://www.gov.uk/government/publications/coronavirus-cases-by-local-authority-epidemiological-data-27-november-2020

-

-

guides.rubyonrails.org guides.rubyonrails.org

-

These methods should be used with caution, however, because important business rules and application logic may be kept in callbacks. Bypassing them without understanding the potential implications may lead to invalid data.
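The risk described above can be illustrated with a small Python analogy; this is not Rails code, and `Account`, `_before_save`, and `update_column_unchecked` are hypothetical names. The sketch assumes a business rule (email normalisation and a basic check) that lives only in a before-save callback, so a write path that skips callbacks can persist data the rule would have rejected. In Rails itself, the guide lists methods such as `update_column` among those that skip callbacks.

```python
class Account:
    """Toy record with a before-save callback (hypothetical, Rails-inspired)."""

    def __init__(self, email: str):
        self.email = email
        self.saved = {}  # stands in for the stored database row

    def _before_save(self) -> None:
        # Business rule kept only in a callback: emails are stored
        # lower-case and must contain "@".
        self.email = self.email.strip().lower()
        if "@" not in self.email:
            raise ValueError("invalid email")

    def save(self) -> None:
        # Normal path: the callback runs before the write.
        self._before_save()
        self.saved["email"] = self.email

    def update_column_unchecked(self, name: str, value) -> None:
        # Bypass path: writes straight to storage and skips _before_save,
        # so the business rule never runs.
        setattr(self, name, value)
        self.saved[name] = value
```

After `update_column_unchecked("email", "not-an-email")`, the stored row holds a value that `save()` would have refused.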

-

-

-

Unrealistic optimism about future life events: A cautionary note. (n.d.). Retrieved March 4, 2021, from https://psycnet.apa.org/fulltext/2010-22979-001.pdf?auth_token=a25fd4b7f008a50b15fd7b0f1fdb222fc38373f4

-

-

data.wprdc.org data.wprdc.org

-

twitter.com twitter.com

-

ReconfigBehSci on Twitter: ‘RT @AdamJKucharski: Summary of NERVTAG view on new SARS-CoV-2 variant, from 18 Dec (full document here: Https://t.co/yll9beVI9A) https://t.…’ / Twitter. (n.d.). Retrieved 2 March 2021, from https://twitter.com/SciBeh/status/1341034652082036739

-

-

www.google.co.uk www.google.co.uk

-

Greene, G. (1999). The Woman who Knew Too Much: Alice Stewart and the Secrets of Radiation. University of Michigan Press.

-

-

twitter.com twitter.com

-

ReconfigBehSci on Twitter: ‘RT @AdamJKucharski: Alice Stewart on epidemiology (from: Https://t.co/mt3pAwCLXP) https://t.co/P5oI6k4HjG’ / Twitter. (n.d.). Retrieved 2 March 2021, from https://twitter.com/SciBeh/status/1341017627746050049

-

-

twitter.com twitter.com

-

David Spiegelhalter. (2020, December 17). Wow, Our World in Data now showing both Sweden and Germany having a higher daily Covid death rate than the UK https://t.co/EKx7ntil6m https://t.co/YCy4a0DrqP [Tweet]. @d_spiegel. https://twitter.com/d_spiegel/status/1339493869394780160

-

-

twitter.com twitter.com

-

Rowland Manthorpe. (2020, December 16). What’s happening with the data about the vaccine? Well, let’s put it this way: There’s a lot to sort out A THREAD on my reporting today [Tweet]. @rowlsmanthorpe. https://twitter.com/rowlsmanthorpe/status/1339347825147195392

-

-

psyarxiv.com psyarxiv.com

-

Hyland, P., Vallières, F., Shevlin, M., Bentall, R. P., McKay, R., Hartman, T. K., McBride, O., & Murphy, J. (2021). Resistance to COVID-19 vaccination has increased in Ireland and the UK during the pandemic. PsyArXiv. https://doi.org/10.31234/osf.io/ry6n4

Tags

- Russia

- second wave

- vaccine

- statistical analysis

- vaccine resistance

- statistics

- COVID-19

- resistance

- public health

- UK

- cross-sectional data

- social behavior

- vaccination

- vaccine hesitance

- officials

- lang:en

- pandemic

- is:preprint

- ireland

- attitudes

- longitudinal

- China

- communication strategies


-

-

twitter.com twitter.com

-

ReconfigBehSci on Twitter: ‘RT @PsyArXivBot: Resistance to COVID-19 vaccination has increased in Ireland and the UK during the pandemic https://t.co/AgKErDr7Yj’ / Twitter. (n.d.). Retrieved 2 March 2021, from https://twitter.com/SciBeh/status/1366707710151053312

-

-

-

Szabelska, A., Pollet, T. V., Dujols, O., Klein, R. A., & IJzerman, H. (2021). A Tutorial for Exploratory Research: An Eight-Step Approach. PsyArXiv. https://doi.org/10.31234/osf.io/cy9mz

-

-

www.bloomberg.com www.bloomberg.com

-

Covid-19 Isn’t the Only Thing Shortening American Lives. (2021, February 23). Bloomberg.Com. https://www.bloomberg.com/opinion/articles/2021-02-23/covid-19-isn-t-the-only-thing-shortening-american-lives

-

-

www.youtube.com www.youtube.com

-

JAMA Network. (2020, November 6). Herd Immunity as a Coronavirus Pandemic Strategy. https://www.youtube.com/watch?v=2tsUTAWBJ9M

-

-

twitter.com twitter.com

-

Erich Neuwirth. (2020, November 11). #COVID19 #COVID19at https://t.co/9uudp013px My report today needs a few preliminary remarks. The EMS, which supplies the data on positive tests, apparently has considerable problems. Many cases were reported late today. According to this, in Vienna there were [Tweet]. @neuwirthe. https://twitter.com/neuwirthe/status/1326556742113746950

-

-

www.statnews.com www.statnews.com

-

A hidden success in the Covid-19 mess: The internet. (2020, November 11). STAT. https://www.statnews.com/2020/11/11/a-hidden-success-in-the-covid-19-mess-the-internet/

-

-

www.theguardian.com www.theguardian.com

-

Geddes, L. (2020, November 15). Damage to multiple organs recorded in ‘long Covid’ cases. The Guardian. https://www.theguardian.com/world/2020/nov/15/damage-to-multiple-organs-recorded-in-long-covid-cases

-

- Feb 2021

-

thestatsgeek.com thestatsgeek.com

-

Confounding vs. Effect modification – The Stats Geek. (n.d.). Retrieved February 27, 2021, from https://thestatsgeek.com/2021/01/13/confounding-vs-effect-modification/

-

-

www.nature.com www.nature.com

-

Chwalisz, C. (2021). The pandemic has pushed citizen panels online. Nature, 589(7841), 171–171. https://doi.org/10.1038/d41586-021-00046-7

-

-

www.coursera.org www.coursera.org

-

Data on blockchains are different from data on the Internet, and in one important way in particular. On the Internet most of the information is malleable and fleeting. The exact date and time of its publication isn't critical to past or future information. On a blockchain, the truth of the present relies on the details of the past. Bitcoins moving across the network have been permanently stamped from the moment of their coinage.

data on blockchain vs internet
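The point that "the truth of the present relies on the details of the past" can be sketched as a simplified hash chain. This is an assumption-laden toy, not Bitcoin's block format: each block stores the SHA-256 hash of the previous block, so editing any earlier block invalidates every later link. The function names are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    # Hash the block's contents together with the previous block's hash.
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(items: list) -> list:
    chain = []
    prev = GENESIS_PREV
    for i, data in enumerate(items):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def verify(chain: list) -> bool:
    # Recompute every hash and check each block points at its predecessor.
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block_hash(block["index"], block["data"], block["prev"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True
```

Rewriting the data in block 0 after the fact makes `verify` return `False`, which is the "permanently stamped" property in miniature.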

-

-

osf.io osf.io

-

Armeni, Kristijan, Loek Brinkman, Rickard Carlsson, Anita Eerland, Rianne Fijten, Robin Fondberg, Vera Ellen Heininga, et al. ‘Towards Wide-Scale Adoption of Open Science Practices: The Role of Open Science Communities’. MetaArXiv, 6 October 2020. https://doi.org/10.31222/osf.io/7gct9.

-

-

twitter.com twitter.com

-

ReconfigBehSci. (2020, December 6). RT @statsepi: Lol ok. Https://t.co/eCPpU3Linv [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1335894181248643073

-

-

osf.io osf.io

-

Peer, L., Orr, L., & Coppock, A. (2020). Active Maintenance: A Proposal for the Long-term Computational Reproducibility of Scientific Results. SocArXiv. https://doi.org/10.31235/osf.io/8jwhk

-

-

advances.sciencemag.org advances.sciencemag.org

-

Morgan, A. C., Way, S. F., Hoefer, M. J. D., Larremore, D. B., Galesic, M., & Clauset, A. (2021). The unequal impact of parenthood in academia. Science Advances, 7(9), eabd1996. https://doi.org/10.1126/sciadv.abd1996

-

-

-

Dickie, Mure, and John Burn-Murdoch. ‘Scotland Reaps Dividend of Covid Response That Diverged from England’, 25 February 2021. https://www.ft.com/content/e1eddd2f-cb0b-4c7a-8872-2783810fae8d.

-

-

the-turing-way.netlify.com the-turing-way.netlify.com

-

github.com github.com

-

Tree Navigation

-

-

www.nature.com www.nature.com

-

Chande, A., Lee, S., Harris, M., Nguyen, Q., Beckett, S. J., Hilley, T., Andris, C., & Weitz, J. S. (2020). Real-time, interactive website for US-county-level COVID-19 event risk assessment. Nature Human Behaviour, 4(12), 1313–1319. https://doi.org/10.1038/s41562-020-01000-9

-

-

twitter.com twitter.com

-

ReconfigBehSci. (2021, January 12). RT @shaunabrail: Our 4th dashboard launches today at https://t.co/tBn16KDr6h. It focuses on changes in work, revealing a range of employmen… [Tweet]. @SciBeh. https://twitter.com/SciBeh/status/1349058908023873538

-

-

twitter.com twitter.com

-

Dr Elaine Toomey on Twitter. (n.d.). Twitter. Retrieved 24 February 2021, from https://twitter.com/ElaineToomey1/status/1357343820417933316

-

-

twitter.com twitter.com

-

Christina Pagel. (2021, February 23). 1. LONG THREAD ON COVID, LOCKDOWN & THE ROADMAP: TLDR: There’s a lot to like about the roadmap – but it could be & should be made much more effective. Because this will be tying current situation to the roadmap, I’m concentrating on English data Read on… (22 tweets—Sorry) [Tweet]. @chrischirp. https://twitter.com/chrischirp/status/1364019581971558401

-

-

trailblazer.to trailblazer.to

-

Trailblazer will automatically create a new Context object around your custom input hash. You can write to that without interfering with the original context.
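The behaviour described above resembles a layered mapping. As an analogy only (Trailblazer is Ruby and its Context class is implemented differently), Python's `collections.ChainMap` reads through to the wrapped dict but sends writes to a new front layer; `run_with_wrapped_context` and the keys used are hypothetical.

```python
from collections import ChainMap

original_ctx = {"params": {"id": 1}, "current_user": "alice"}


def run_with_wrapped_context(ctx: dict) -> ChainMap:
    # Wrap the caller's hash: reads fall through to `ctx`,
    # but writes land only in the new, empty front mapping.
    wrapped = ChainMap({}, ctx)
    wrapped["model"] = "Song#1"        # new key, stored in the wrapper
    wrapped["current_user"] = "bob"    # shadows, does not overwrite, the original
    return wrapped


wrapped = run_with_wrapped_context(original_ctx)
```

One caveat of this analogy: only top-level writes are isolated. Mutating a nested object such as `wrapped["params"]["id"]` would still change the original dict.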


-

-

twitter.com twitter.com

-

IrrationalLabs. (2021, February 3). We designed an intervention that reduced shares of flagged content on TikTok by 24% via a large scale RCT, thread 👇1/7 [Tweet]. @IrrationalLabs. https://twitter.com/IrrationalLabs/status/1357033901311451140

-

-

en.wikipedia.org en.wikipedia.org

-

A type constructor M that builds up a monadic type M T

-

Monads achieve this by providing their own data type (a particular type for each type of monad), which represents a specific form of computation
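A minimal sketch of the two pieces quoted above, written in Python as an illustration rather than a faithful port of Haskell's `Monad` class: `Maybe` plays the role of the type constructor M (turning T into `Maybe[T]`), `unit` lifts a plain value, and `bind` chains computations that may fail. `Maybe` and `safe_reciprocal` are hypothetical names, and using `None` for "no value" is a simplification that cannot hold a genuine `None` result.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")
U = TypeVar("U")


@dataclass(frozen=True)
class Maybe(Generic[T]):
    """Type constructor: wraps a plain type T into the monadic type Maybe[T]."""
    value: Optional[T]

    @staticmethod
    def unit(x: T) -> "Maybe[T]":
        # 'return'/'unit': lift a plain value into the monad.
        return Maybe(x)

    def bind(self, f: Callable[[T], "Maybe[U]"]) -> "Maybe[U]":
        # Short-circuit on a missing value; otherwise feed it to the next step.
        if self.value is None:
            return Maybe(None)
        return f(self.value)


def safe_reciprocal(x: float) -> Maybe[float]:
    # A computation that can fail, expressed in the Maybe form.
    return Maybe(None) if x == 0 else Maybe(1 / x)
```

Chaining `Maybe.unit(0.0).bind(safe_reciprocal).bind(safe_reciprocal)` yields an empty `Maybe` without any explicit error check between steps; that plumbing is the "specific form of computation" the monad encapsulates.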

-

-

en.wikipedia.org en.wikipedia.org

-

Purely functional programming may also be defined by forbidding state changes and mutable data.

-

Purely functional data structures are persistent. Persistency is required for functional programming; without it, the same computation could return different results.
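Persistence can be shown with an immutable singly linked list, sketched here in Python under the assumption that frozen dataclasses stand in for immutable cells (`Node`, `prepend`, and `to_list` are hypothetical names). "Updating" returns a new version that shares the old list as its tail, so every earlier version stays valid and unchanged.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    head: int
    tail: Optional["Node"]


def prepend(lst: Optional[Node], x: int) -> Node:
    # Returns a new version; the old list becomes the shared tail and is never modified.
    return Node(x, lst)


def to_list(lst: Optional[Node]) -> list:
    out = []
    while lst is not None:
        out.append(lst.head)
        lst = lst.tail
    return out


v1 = prepend(prepend(None, 2), 1)  # version 1: [1, 2]
v2 = prepend(v1, 0)                # version 2: [0, 1, 2], shares v1 as its tail
```

Because `v1` can never change, any computation over it returns the same result no matter what later versions are built, which is the property the quoted passage ties to functional programming.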

-

-

trailblazer.to trailblazer.to

-

What this means is: I'd better refrain from writing a new book, and we'd rather focus on more and better docs.

I'm glad. I didn't like that the book (which is essentially a form of documentation/tutorial) was proprietary.

I think it's better for documentation and tutorials to be community-driven, free content.

-

Rather, data is passed around from operation to operation, from step to step. We use OOP and inheritance solely for compile-time configuration. You define classes, steps, tracks and flows, inherit those, customize them using Ruby’s built-in mechanics, but this all happens at compile-time. At runtime, no structures are changed anymore: your code is executed dynamically, but only the ctx (formerly options) and its objects are mutated. This massively improves the code quality and, with it, the runtime stability.
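The design described above (structure fixed when classes are defined, only `ctx` mutated at runtime) can be sketched in Python. This is not Trailblazer's API, which is Ruby with its own step DSL; `Operation`, `validate`, `build_model`, and `persist` are hypothetical names, and the two "tracks" are reduced to a success flag.

```python
from typing import Callable, Dict, List

Ctx = Dict[str, object]
Step = Callable[[Ctx], bool]


def validate(ctx: Ctx) -> bool:
    # A step reads from ctx and returns True (success track) or False (failure track).
    return "title" in ctx["params"]


def build_model(ctx: Ctx) -> bool:
    ctx["model"] = {"title": ctx["params"]["title"]}
    return True


def persist(ctx: Ctx) -> bool:
    ctx["persisted"] = True
    return True


class Operation:
    # The step list is fixed when the class is defined ("compile time");
    # at runtime nothing here changes, only the ctx dict passed along is mutated.
    steps: List[Step] = [validate, build_model, persist]

    @classmethod
    def call(cls, params: dict) -> Ctx:
        ctx: Ctx = {"params": params}
        for step in cls.steps:
            if not step(ctx):
                ctx["success"] = False  # switch to the failure track and stop
                return ctx
        ctx["success"] = True
        return ctx
```

A subclass could override `steps` to customise the flow, mirroring the "inherit and customise at compile time" idea, while each call still builds and threads its own fresh `ctx`.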

-

-

psyarxiv.com psyarxiv.com

-

Aczel, Balazs, Marton Kovacs, and Rink Hoekstra. ‘The Role of Human Fallibility in Psychological Research: A Survey of Mistakes in Data Management’. PsyArXiv, 5 November 2020. https://doi.org/10.31234/osf.io/xcykz.

-

-

assets.publishing.service.gov.uk assets.publishing.service.gov.uk

-

GOV.UK. ‘Investigation of Novel SARS-CoV-2 Variant: Variant of Concern 202012/01’. Accessed 22 February 2021. https://www.gov.uk/government/publications/investigation-of-novel-sars-cov-2-variant-variant-of-concern-20201201.

Tags

- transmission

- virus

- UK

- public health

- investigation

- technical

- data

- briefing

- healtcare

- mutation

- surveillance

- COVID-19

- tracking


-

-

crystalprisonzone.blogspot.com crystalprisonzone.blogspot.com

-

Joe. (2021, January 26). Crystal Prison Zone: I tried to report scientific misconduct. How did it go? Crystal Prison Zone. http://crystalprisonzone.blogspot.com/2021/01/i-tried-to-report-scientific-misconduct.html

Tags

- false

- statistic

- data

- self-correction

- significant

- scientific

- correction

- forge

- is:blog

- table

- non-signficant

- test

- outcome

- lang:en

- value

- unreliable

- science

- misconduct

- result


-

-

www.youtube.com www.youtube.com

-

another test of Hypothesis on YouTube

-

-

www.youtube.com www.youtube.com

-

Miguel Andariego commented just now:

It would be better to start with DuckDuckGo so as not to promote the giant entirely.

-

-

twitter.com twitter.com

-

Kit Yates. (2021, January 22). Is this lockdown 3.0 as tough as lockdown 1? Here are a few pieces of data from the @IndependentSage briefing which suggest that despite tackling a much more transmissible virus, lockdown is less strict, which might explain why we are only just keeping on top of cases. [Tweet]. @Kit_Yates_Maths. https://twitter.com/Kit_Yates_Maths/status/1352662085356937216

-

-

www.thelancet.com www.thelancet.com

-

Li, You, Harry Campbell, Durga Kulkarni, Alice Harpur, Madhurima Nundy, Xin Wang, and Harish Nair. ‘The Temporal Association of Introducing and Lifting Non-Pharmaceutical Interventions with the Time-Varying Reproduction Number (R) of SARS-CoV-2: A Modelling Study across 131 Countries’. The Lancet Infectious Diseases 21, no. 2 (1 February 2021): 193–202. https://doi.org/10.1016/S1473-3099(20)30785-4.

-

-

www.washingtonpost.com www.washingtonpost.com

-

Van Dam, A. (n.d.). Analysis | We’ve been cooped up with our families for almost a year. This is the result. Washington Post. Retrieved 18 February 2021, from https://www.washingtonpost.com/road-to-recovery/2021/02/16/pandemic-togetherness-never-have-so-many-spent-so-much-time-with-so-few/

Tags

- children and family

- is:blog

- USA

- children

- home

- house

- data

- lang:en

- pandemic

- isolation

- lockdown

- family

- togetherness

- human behaviour

- COVID-19


-

-

twitter.com twitter.com

-

David Spiegelhalter. (2021, January 26). @d_spiegel: COVID deaths within 28 days of +ve test may reach 100,000 today. But ONS data https://t.co/I4GVcUncll show that over 100,00… [Tweet]. https://twitter.com/d_spiegel/status/1354021097822425093?s=20

-

-

-

Excel: Why using Microsoft’s tool caused Covid-19 results to be lost. (2020, October 5). BBC News. https://www.bbc.com/news/technology-54423988

-

-

psyarxiv.com psyarxiv.com

-

Lo, C., Mani, N., Kartushina, N., Mayor, J., & Hermes, J. (2021, February 11). e-Babylab: An Open-source Browser-based Tool for Unmoderated Online Developmental Studies. https://doi.org/10.31234/osf.io/u73sy

-

-

en.wikipedia.org en.wikipedia.org

-

This is done to separate internal representations of information from the ways information is presented to and accepted from the user.
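Assuming the quoted passage describes the model-view-controller pattern, a minimal Python sketch of that separation follows (`TodoModel`, `render`, and `handle_input` are hypothetical names). The model holds the internal representation, the view decides how it is presented, and the controller decides how user input is accepted and turned into model updates.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TodoModel:
    # Internal representation: plain data, no formatting or input parsing.
    items: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.items.append(text)


def render(model: TodoModel) -> str:
    # View: how the information is presented to the user.
    return "\n".join(f"{i + 1}. {item}" for i, item in enumerate(model.items))


def handle_input(model: TodoModel, raw: str) -> None:
    # Controller: how raw user input is accepted and mapped to model updates.
    command, _, arg = raw.partition(" ")
    if command == "add" and arg:
        model.add(arg.strip())
```

Swapping `render` for, say, an HTML renderer would leave `TodoModel` untouched, which is the point of keeping the two concerns apart.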

-